
[BUG] BlobClient.UploadAsync(Stream content, bool overwrite) deadlocks if the stream is not rewinded #14080

Comments

|

Thanks for the feedback! We are routing this to the appropriate team for follow-up. cc @xgithubtriage. |

|

Thank you for your feedback. Tagging and routing to the team best able to assist. |

|

Hi, I was able to reproduce your issue and capture a Fiddler trace of it. The request takes a while to upload before the service realizes it is missing the bytes that precede the stream's current position. We set Content-Length to the full size of the stream, but we only hand over the bytes from the current position onward. We then get a 408 (Request Body Incomplete) and retry because it's a 408, which is why you might be seeing what looks like a deadlock: it takes a very long time. We will look to resolve this issue when we can.

Notes: Fiddler Trace Request (this gets retried since it's a 408), Fiddler Trace Response, Stack Trace |
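To make the mismatch described in this comment concrete, here is a minimal sketch that uses only `System.IO` and does not call the Storage SDK at all. The class and method names (`ContentLengthMismatchDemo`, `BytesRemaining`) are made up for illustration, and the 1024-byte buffer and offset of 100 are arbitrary assumptions.

```csharp
using System;
using System.IO;

public static class ContentLengthMismatchDemo
{
    // Bytes a reader can still obtain from the stream's current position.
    public static long BytesRemaining(Stream content) => content.Length - content.Position;

    public static void Main()
    {
        using var content = new MemoryStream(new byte[1024]);
        content.Position = 100; // the stream was not rewound before the upload

        // Per the comment above, the request declares the full stream size.
        long declared = content.Length;

        // Copying starts at the current position, so fewer bytes are produced.
        using var sink = new MemoryStream();
        content.CopyTo(sink);
        long sent = sink.Length;

        Console.WriteLine($"declared={declared}, sent={sent}, missing={declared - sent}");
        // prints "declared=1024, sent=924, missing=100"
    }
}
```

The gap between `declared` and `sent` is the part of the body the service keeps waiting for, which matches the 408 seen in the trace.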

|

@pakrym, what would you think if we attempted to mitigate this with: |

I don't think that this mitigation would work. |

|

Are you sure? What about if a |

|

Sorry, it will always be |

|

I think The check I previously mentioned would ensure we are working with a valid position [0, stream.Length). |

|

Can you please explain how your proposal fixes the issue customers are seeing? |

|

It solves a common edge case of this issue, when I think its possible the deadlock when The other possibilities are it could be something in NonDisposingStream or StorageProgressExtensions. |

|

@kasobol-msft had found the source of the issue already, it's because

|

|

Understood, I will create a PR to address this throughout the Storage SDK. I still think it would be valuable to add the |

|

Does this issue still exist in Azure.Storage.Blobs 12.10.0? |

|

Hi, please provide a reproduction of the problem you are running into using 12.10.0, with as much detail as possible, so we can resolve it. Then we can determine whether we need to reopen this issue or open a different one. |

Consider the following code, where

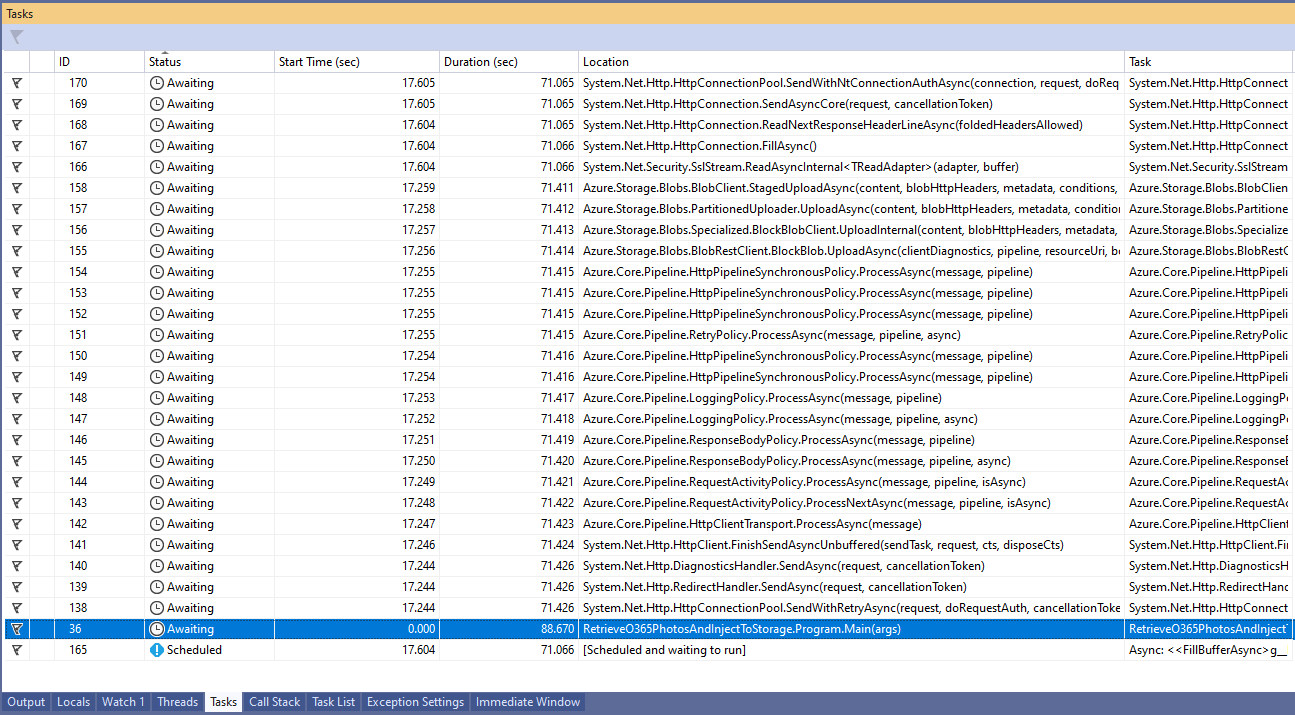

streamis valid and previously created, andposis a number larger than 0 and less than or equal to the stream's size:If this is run, the code will deadlock while awaiting. The

Tasksview in Visual Studio shows multiple tasks running:Upon analyzing, each task N is waiting for task N-1, with the exception of:

The call stack, while breaking into during the stall, is below:

Using

try/catcharound the async call doesn't result in anything throwing.Should the position in the stream be 0, the call completes as expected.
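Since the call completes when the position is 0, one caller-side workaround is to rewind a seekable stream before handing it to the upload. The sketch below is only an illustration: `StreamRewind.RewindIfSeekable` is a hypothetical helper, not part of Azure.Storage.Blobs, and the `blobClient` variable in the trailing comment is assumed to be an existing `BlobClient`.

```csharp
using System;
using System.IO;

public static class StreamRewind
{
    // Hypothetical helper: moves a seekable stream back to its start.
    // Non-seekable streams are returned unchanged.
    public static Stream RewindIfSeekable(Stream content)
    {
        if (content == null) throw new ArgumentNullException(nameof(content));
        if (content.CanSeek && content.Position != 0)
        {
            content.Seek(0, SeekOrigin.Begin);
        }
        return content;
    }

    public static void Main()
    {
        using var stream = new MemoryStream();
        stream.Write(new byte[] { 1, 2, 3, 4 }, 0, 4); // Position is now 4, as after any write

        RewindIfSeekable(stream);
        Console.WriteLine(stream.Position); // prints "0"

        // With the stream rewound, the upload from the issue title would follow, e.g.:
        // await blobClient.UploadAsync(stream, overwrite: true);
    }
}
```

This only applies when the whole stream is meant to be uploaded; a caller who wants to upload from a mid-stream position would need different handling.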

Environment:

- Azure.Storage.Blobs 12.4.4
- OS and runtime (`dotnet --info` output for .NET Core projects): Windows 10, .NET Core 3.1.0
- Visual Studio 16.5.0 Preview 2