();

+

+ /**

+ * The current size of the buffer. Every time the buffer contents are changed, this value

+ * is set. This is not to be confused with the maximum buffer size.

+ */

+ private int size;

+

+ /**

+ * Creates a new AudioBuffer with the given maximum size.

+ * @param maxSize The maximum size of the buffer.

+ */

+ public AudioBuffer(int maxSize) {

+ buffer = new float[maxSize];

+ }

+

+ /**

+ * Copies data into the buffer from the given source buffer. This will trigger the callbacks' {@link AudioBufferCallback#frameReceived(com.codename1.media.AudioBuffer) }

+ * method.

+ * @param source The source buffer to copy from.

+ */

+ public synchronized void copyFrom(AudioBuffer source) {

+ copyFrom(source.buffer, 0, source.size);

+ }

+

+ /**

+ * Copies data from the source array into this buffer. This will trigger the callbacks' {@link AudioBufferCallback#frameReceived(com.codename1.media.AudioBuffer) }

+ * method.

+ * @param source The source array to copy data from.

+ */

+ public synchronized void copyFrom(float[] source) {

+ copyFrom(source, 0, source.length);

+ }

+

+ /**

+ * Copies data from the source array (in the given range) into the buffer. This will trigger the callbacks' {@link AudioBufferCallback#frameReceived(com.codename1.media.AudioBuffer) }

+ * method.

+ * @param source The source array to copy data from.

+ * @param offset The offset in the source array to begin copying from.

+ * @param len The length of the range to copy.

+ */

+ public synchronized void copyFrom(float[] source, int offset, int len) {

+ if (len > buffer.length) {

+ throw new IllegalArgumentException("Buffer size is "+buffer.length+" but attempt to copy "+len+" samples into it");

+ }

+ System.arraycopy(source, offset, buffer, 0, len);

+ size = len;

+ fireFrameReceived();

+ }

+

+ /**

+ * Copies data to another audio buffer. This will trigger callbacks in the destination.

+ * @param dest The destination audio buffer.

+ */

+ public synchronized void copyTo(AudioBuffer dest) {

+ dest.copyFrom(this);

+ }

+

+ /**

+ * Copies data from this buffer to the given float array.

+ * @param dest The destination float array to copy to.

+ */

+ public synchronized void copyTo(float[] dest) {

+ copyTo(dest, 0);

+ }

+

+ /**

+ * Copies data from this buffer to the given float array.

+ * @param dest The destination float array.

+ * @param offset The offset in the destination array to start copying to.

+ */

+ public synchronized void copyTo(float[] dest, int offset) {

+ int len = size;

+ if (dest.length < offset + len) {

+ throw new IllegalArgumentException("Destination is not big enough to store len "+len+" at offset "+offset+". Length only "+dest.length);

+ }

+

+ System.arraycopy(buffer, 0, dest, offset, len);

+ }

+

+ /**

+ * The current size of the buffer. This value will be changed each time data is copied into the buffer to reflect the current size of the data.

+ * @return The number of samples currently stored in the buffer.

+ */

+ public int getSize() {

+ return size;

+ }

+

+ /**

+ * Gets the maximum size of the buffer. Trying to copy more than this amount of data into the buffer will result in an IllegalArgumentException.

+ * @return The maximum number of samples the buffer can hold.

+ */

+ public int getMaxSize() {

+ return buffer.length;

+ }

+

+ /**

+ * Called when a frame is received. This will call the {@link AudioBufferCallback#frameReceived(com.codename1.media.AudioBuffer) } method in all

+ * registered callbacks.

+ */

+ private synchronized void fireFrameReceived() {

+ inFireFrame = true;

+

+ try {

+ for (AudioBufferCallback l : callbacks) {

+ l.frameReceived(this);

+ }

+ } finally {

+ inFireFrame = false;

+ while (!pendingOps.isEmpty()) {

+ Runnable r = pendingOps.remove(0);

+ r.run();

+ }

+ }

+ }

+

+ /**

+ * Adds a callback to be notified when the contents of this buffer are changed.

+ * @param l The AudioBufferCallback

+ */

+ public synchronized void addCallback(final AudioBufferCallback l) {

+ if (inFireFrame) {

+ pendingOps.add(new Runnable() {

+ public void run() {

+ callbacks.add(l);

+ }

+ });

+ } else {

+ callbacks.add(l);

+ }

+ }

+

+ /**

+ * Removes a callback from the audio buffer.

+ * @param l The callback to remove.

+ */

+ public synchronized void removeCallback(final AudioBufferCallback l) {

+ if (inFireFrame) {

+ pendingOps.add(new Runnable() {

+ public void run() {

+ callbacks.remove(l);

+ }

+ });

+ } else {

+ callbacks.remove(l);

+ }

+ }

+

+

+

+}
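
The callback handling in `AudioBuffer` above defers `addCallback`/`removeCallback` calls that arrive while `fireFrameReceived()` is iterating, then replays them once the loop finishes. A minimal standalone sketch of that pattern follows, assuming nothing about the rest of the class; `DeferredCallbackSketch`, `Listener`, and `listenerCount()` are hypothetical names used only for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the callback bookkeeping in AudioBuffer.
class DeferredCallbackSketch {
    interface Listener {
        void onFrame(DeferredCallbackSketch source);
    }

    private final List<Listener> listeners = new ArrayList<Listener>();
    private final List<Runnable> pendingOps = new ArrayList<Runnable>();
    private boolean firing;

    synchronized void add(final Listener l) {
        if (firing) {
            // Mutating the list mid-iteration would throw
            // ConcurrentModificationException, so queue the change instead.
            pendingOps.add(new Runnable() {
                public void run() {
                    listeners.add(l);
                }
            });
        } else {
            listeners.add(l);
        }
    }

    synchronized void remove(final Listener l) {
        if (firing) {
            pendingOps.add(new Runnable() {
                public void run() {
                    listeners.remove(l);
                }
            });
        } else {
            listeners.remove(l);
        }
    }

    synchronized void fire() {
        firing = true;
        try {
            for (Listener l : listeners) {
                l.onFrame(this);
            }
        } finally {
            firing = false;
            // Replay the queued add/remove operations in arrival order.
            while (!pendingOps.isEmpty()) {
                pendingOps.remove(0).run();
            }
        }
    }

    synchronized int listenerCount() {
        return listeners.size();
    }
}
```

Because the monitor is reentrant, a listener can call `remove(this)` from inside `onFrame` on the firing thread; the removal takes effect only after the current frame has been delivered to every listener.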

diff --git a/CodenameOne/src/com/codename1/media/MediaManager.java b/CodenameOne/src/com/codename1/media/MediaManager.java

index 06661fec09..c2b8e091bf 100644

--- a/CodenameOne/src/com/codename1/media/MediaManager.java

+++ b/CodenameOne/src/com/codename1/media/MediaManager.java

@@ -26,6 +26,8 @@

import com.codename1.util.AsyncResource;

import java.io.IOException;

import java.io.InputStream;

+import java.util.HashMap;

+import java.util.Map;

/**

*

@@ -49,8 +51,55 @@

*  */

public class MediaManager {

+

+ /**

+ * A static map of audio buffers. These can be used to register an Audio buffer to receive

+ * raw PCM data from the microphone.

+ * @since 7.0

+ */

+ private static Map<String, AudioBuffer> audioBuffers = new HashMap<String, AudioBuffer>();

private static RemoteControlListener remoteControlListener;

+ /**

+ * Gets an audio buffer at the given path.

+ * @param path The path to the Audio buffer. This path doesn't correspond to a real file. It is just

+ * used as a key to map to the audio buffer so that it can be addressed.

+ * @return The AudioBuffer or null if no buffer exists at that path.

+ * @since 7.0

+ */

+ public static synchronized AudioBuffer getAudioBuffer(String path) {

+ return getAudioBuffer(path, false, 256);

+ }

+

+ /**

+ * Gets or creates an audio buffer at the given path.

+ * @param path The path to the Audio buffer. This path doesn't correspond to a real file. It is just

+ * used as a key to map to the audio buffer so that it can be addressed.

+ * @param create If this flag is {@literal true} and no buffer exists at the given path,

+ * then the buffer will be created.

+ * @param size The maximum size of the buffer.

+ * @return The audio buffer or null if no buffer exists at that path and the {@literal create} flag is {@literal false}.

+ * @since 7.0

+ */

+ public static synchronized AudioBuffer getAudioBuffer(String path, boolean create, int size) {

+ if (create && !audioBuffers.containsKey(path)) {

+ audioBuffers.put(path, new AudioBuffer(size));

+ }

+

+ return audioBuffers.get(path);

+ }

+

+ /**

+ * Deletes the audio buffer at the given path.

+ * @param path The path to the audio buffer to delete.

+ * @since 7.0

+ */

+ public static synchronized void deleteAudioBuffer(String path) {

+ audioBuffers.remove(path);

+ }

+

+

+

/**

* Registers a listener to be notified of remote control events - e.g.

* the play/pause/seek buttons on the user's lock screen when background

@@ -278,6 +327,9 @@ public static Media createMediaRecorder(String path, String mimeType) throws IOE

* @since 7.0

*/

public static Media createMediaRecorder(MediaRecorderBuilder builder) throws IOException {

+ if (builder.isRedirectToAudioBuffer()) {

+ return builder.build();

+ }

String mimeType = builder.getMimeType();

if (mimeType == null && getAvailableRecordingMimeTypes().length > 0) {

mimeType = getAvailableRecordingMimeTypes()[0];

@@ -298,6 +350,7 @@ public static Media createMediaRecorder(MediaRecorderBuilder builder) throws IOE

" is not supported on this platform use "

+ "getAvailableRecordingMimeTypes()");

}

+

return Display.getInstance().createMediaRecorder(path, mimeType);

}

}

diff --git a/CodenameOne/src/com/codename1/media/MediaRecorderBuilder.java b/CodenameOne/src/com/codename1/media/MediaRecorderBuilder.java

index 9811f4706f..53f34d7bf3 100644

--- a/CodenameOne/src/com/codename1/media/MediaRecorderBuilder.java

+++ b/CodenameOne/src/com/codename1/media/MediaRecorderBuilder.java

@@ -34,6 +34,8 @@ public class MediaRecorderBuilder {

private int audioChannels=1,

bitRate=64000,

samplingRate=44100;

+ private boolean redirectToAudioBuffer;

+

private String mimeType = Display.getInstance().getAvailableRecordingMimeTypes()[0],

path;

@@ -91,6 +93,18 @@ public MediaRecorderBuilder path(String path) {

return this;

}

+ /**

+ * Set this flag to {@literal true} to redirect the microphone input to an audio buffer.

+ * This is handy if you just want to capture the raw PCM data from the microphone.

+ * @param redirect True to redirect output to an audio buffer. The {@link #path(java.lang.String) }

+ * parameter would then be used as the path to the audio buffer instead of the output file.

+ * @return Self for chaining.

+ */

+ public MediaRecorderBuilder redirectToAudioBuffer(boolean redirect) {

+ this.redirectToAudioBuffer = redirect;

+ return this;

+ }

+

/**

* Builds the MediaRecorder with the given settings.

* @return

@@ -147,7 +161,15 @@ public String getMimeType() {

public String getPath() {

return path;

}

-

-

+

+ /**

+ * True if the media recorder should redirect output to an audio buffer instead

+ * of a file.

+ * @return True if output is redirected to an audio buffer, false if it is written to a file.

+ */

+ public boolean isRedirectToAudioBuffer() {

+ return redirectToAudioBuffer;

+ }

+

}

diff --git a/CodenameOne/src/com/codename1/media/WAVWriter.java b/CodenameOne/src/com/codename1/media/WAVWriter.java

new file mode 100644

index 0000000000..dedcbdf83a

--- /dev/null

+++ b/CodenameOne/src/com/codename1/media/WAVWriter.java

@@ -0,0 +1,149 @@

+package com.codename1.media;

+

+import java.io.InputStream;

+import com.codename1.io.Util;

+import java.io.IOException;

+import com.codename1.io.FileSystemStorage;

+import java.io.OutputStream;

+import com.codename1.io.File;

+

+/**

+ * A class that can write raw PCM data to a WAV file.

+ *

+ *

+ * @since 7.0

+ * @author shannah

+ */

+public class WAVWriter implements AutoCloseable

+{

+ private File outputFile;

+ private OutputStream out;

+ private int samplingRate;

+ private int channels;

+ private int numBits;

+ private long dataLength;

+

+ /**

+ * Creates a new writer for writing a WAV file.

+ * @param outputFile The output file.

+ * @param samplingRate The sampling rate. E.g. 44100

+ * @param channels The number of channels. E.g. 1 or 2

+ * @param numBits 8 or 16

+ * @throws IOException

+ */

+ public WAVWriter(final File outputFile, final int samplingRate, final int channels, final int numBits) throws IOException {

+ this.outputFile = outputFile;

+ this.out = FileSystemStorage.getInstance().openOutputStream(outputFile.getAbsolutePath());

+ this.samplingRate = samplingRate;

+ this.channels = channels;

+ this.numBits = numBits;

+ }

+

+ private void writeHeader() throws IOException {

+ final byte[] header = new byte[44];

+ long totalDataLen = dataLength + 36;

+ final long bitrate = this.samplingRate * this.channels * this.numBits;

+ header[0] = 82;

+ header[1] = 73;

+ header[3] = (header[2] = 70);

+ header[4] = (byte)(totalDataLen & 0xFFL);

+ header[5] = (byte)(totalDataLen >> 8 & 0xFFL);

+ header[6] = (byte)(totalDataLen >> 16 & 0xFFL);

+ header[7] = (byte)(totalDataLen >> 24 & 0xFFL);

+ header[8] = 87;

+ header[9] = 65;

+ header[10] = 86;

+ header[11] = 69;

+ header[12] = 102;

+ header[13] = 109;

+ header[14] = 116;

+ header[15] = 32;

+ header[16] = 16; // size of the "fmt " chunk, always 16 for PCM

+ header[17] = 0;

+ header[19] = (header[18] = 0);

+ header[20] = 1;

+ header[21] = 0;

+ header[22] = (byte)this.channels;

+ header[23] = 0;

+ header[24] = (byte)(this.samplingRate & 0xFF);

+ header[25] = (byte)(this.samplingRate >> 8 & 0xFF);

+ header[26] = (byte)(this.samplingRate >> 16 & 0xFF);

+ header[27] = (byte)(this.samplingRate >> 24 & 0xFF);

+ header[28] = (byte)(bitrate / 8L & 0xFFL);

+ header[29] = (byte)(bitrate / 8L >> 8 & 0xFFL);

+ header[30] = (byte)(bitrate / 8L >> 16 & 0xFFL);

+ header[31] = (byte)(bitrate / 8L >> 24 & 0xFFL);

+ header[32] = (byte)(this.channels * this.numBits / 8);

+ header[33] = 0;

+ header[34] = (byte)this.numBits; // bits per sample

+ header[35] = 0;

+ header[36] = 100;

+ header[37] = 97;

+ header[38] = 116;

+ header[39] = 97;

+ header[40] = (byte) (dataLength & 0xff);

+ header[41] = (byte) ((dataLength >> 8) & 0xff);

+ header[42] = (byte) ((dataLength >> 16) & 0xff);

+ header[43] = (byte) ((dataLength >> 24) & 0xff);

+ this.out.write(header);

+ }

+

+ /**

+ * Writes PCM data to the file.

+ * @param pcmData PCM data to write. These are float values between -1 and 1.

+ * @param offset Offset in pcmData array to start writing.

+ * @param len Length in pcmData array to write.

+ * @throws IOException

+ */

+ public void write(final float[] pcmData, final int offset, final int len) throws IOException {

+ for (int i = 0; i < len; ++i) {

+ final float sample = pcmData[offset + i];

+ if (this.numBits == 8) {

+ final byte byteSample = (byte)(sample * 127.0f);

+ this.out.write((byteSample + 128) & 0xff); // 8-bit WAV samples are unsigned, centered on 128

+ ++this.dataLength;

+ }

+ else {

+ if (this.numBits != 16) {

+ throw new IllegalArgumentException("numBits must be 8 or 16 but found " + this.numBits);

+ }

+ final short shortSample = (short)(sample * 32767.0f);

+ this.out.write(shortSample & 0xff);

+ this.out.write((shortSample >> 8) & 0xff);

+ this.dataLength += 2L;

+ }

+ }

+ }

+

+ private String getPCMFile() {

+ return this.outputFile.getAbsolutePath() + ".pcm";

+ }

+

+ /**

+ * Closes the writer, and writes the WAV file.

+ * @throws Exception

+ */

+ @Override

+ public void close() throws Exception {

+ this.out.close();

+ final FileSystemStorage fs = FileSystemStorage.getInstance();

+ fs.rename(this.outputFile.getAbsolutePath(), new File(this.getPCMFile()).getName());

+ try {

+ this.out = fs.openOutputStream(this.outputFile.getAbsolutePath());

+ final InputStream in = fs.openInputStream(this.getPCMFile());

+ this.writeHeader();

+ Util.copy(in, this.out);

+ try {

+ this.out.close();

+ }

+ catch (Throwable t) {}

+ try {

+ in.close();

+ }

+ catch (Throwable t2) {}

+ }

+ finally {

+ fs.delete(this.getPCMFile());

+ }

+ }

+}

\ No newline at end of file
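
For reference while reviewing `writeHeader()`, the canonical 44-byte PCM WAV header can be sketched as below. This is an illustration under stated assumptions rather than the code in the diff: it uses `java.nio.ByteBuffer` for brevity (which `WAVWriter` does not use and which may not be available on every Codename One target), and `WavHeaderSketch` and `header(...)` are hypothetical names.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper that lays out a canonical 44-byte PCM WAV header.
class WavHeaderSketch {
    static byte[] header(int sampleRate, int channels, int bitsPerSample, int dataLength) {
        int blockAlign = channels * bitsPerSample / 8; // bytes per sample frame
        int byteRate = sampleRate * blockAlign;        // bytes per second

        ByteBuffer b = ByteBuffer.allocate(44).order(ByteOrder.LITTLE_ENDIAN);
        b.put("RIFF".getBytes(StandardCharsets.US_ASCII)); // offset 0
        b.putInt(36 + dataLength);                         // offset 4: RIFF chunk size
        b.put("WAVE".getBytes(StandardCharsets.US_ASCII)); // offset 8
        b.put("fmt ".getBytes(StandardCharsets.US_ASCII)); // offset 12
        b.putInt(16);                                      // offset 16: fmt chunk size (PCM)
        b.putShort((short) 1);                             // offset 20: audio format 1 = PCM
        b.putShort((short) channels);                      // offset 22
        b.putInt(sampleRate);                              // offset 24
        b.putInt(byteRate);                                // offset 28
        b.putShort((short) blockAlign);                    // offset 32
        b.putShort((short) bitsPerSample);                 // offset 34
        b.put("data".getBytes(StandardCharsets.US_ASCII)); // offset 36
        b.putInt(dataLength);                              // offset 40: PCM payload size in bytes
        return b.array();
    }
}
```

The offsets in the comments correspond to the `header[n]` indices used in `writeHeader()`, which makes it easier to check each hand-written byte against the field it is meant to hold.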

diff --git a/CodenameOne/src/com/codename1/ui/Display.java b/CodenameOne/src/com/codename1/ui/Display.java

index 8195be5d53..2ac49d1be5 100644

--- a/CodenameOne/src/com/codename1/ui/Display.java

+++ b/CodenameOne/src/com/codename1/ui/Display.java

@@ -3606,6 +3606,24 @@ public void capturePhoto(ActionListener response){

public void captureAudio(ActionListener response) {

impl.captureAudio(response);

}

+

+ /**

+ * This method tries to invoke the device native hardware to capture audio.

+ * The method returns immediately and the response will be sent asynchronously

+ * to the given ActionListener object.

+ * The audio is saved to a file on the device.

+ *

+ * Use this in actionPerformed to retrieve the file path:

+ * String path = (String) evt.getSource();

+ *

+ * @param recordingOptions Audio recording options.

+ * @param response a callback Object to retrieve the file path

+ * @throws RuntimeException if this feature fails or is unsupported on the platform

+ * @since 7.0

+ */

+ public void captureAudio(MediaRecorderBuilder recordingOptions, ActionListener response) {

+ impl.captureAudio(recordingOptions, response);

+ }

/**

* This method tries to invoke the device native camera to capture video.

diff --git a/Ports/Android/src/com/codename1/impl/android/AndroidImplementation.java b/Ports/Android/src/com/codename1/impl/android/AndroidImplementation.java

index 7952e4995f..0eeadd573e 100644

--- a/Ports/Android/src/com/codename1/impl/android/AndroidImplementation.java

+++ b/Ports/Android/src/com/codename1/impl/android/AndroidImplementation.java

@@ -27,6 +27,7 @@

import com.codename1.location.AndroidLocationManager;

import android.app.*;

import android.content.pm.PackageManager.NameNotFoundException;

+import android.media.AudioTimestamp;

import android.support.v4.content.ContextCompat;

import android.view.MotionEvent;

import com.codename1.codescan.ScanResult;

@@ -82,6 +83,8 @@

import android.graphics.Matrix;

import android.graphics.drawable.BitmapDrawable;

import android.hardware.Camera;

+import android.media.AudioFormat;

+import android.media.AudioRecord;

import android.media.ExifInterface;

import android.media.MediaPlayer;

import android.media.MediaRecorder;

@@ -3568,7 +3571,7 @@ public void run() {

@Override

public Media createMediaRecorder(MediaRecorderBuilder builder) throws IOException {

- return createMediaRecorder(builder.getPath(), builder.getMimeType(), builder.getSamplingRate(), builder.getBitRate(), builder.getAudioChannels(), 0);

+ return createMediaRecorder(builder.getPath(), builder.getMimeType(), builder.getSamplingRate(), builder.getBitRate(), builder.getAudioChannels(), 0, builder.isRedirectToAudioBuffer());

}

@Override

@@ -3579,15 +3582,16 @@ public Media createMediaRecorder(final String path, final String mimeType) throw

return createMediaRecorder(builder);

}

+

- private Media createMediaRecorder(final String path, final String mimeType, final int sampleRate, final int bitRate, final int audioChannels, final int maxDuration) throws IOException {

+ private Media createMediaRecorder(final String path, final String mimeType, final int sampleRate, final int bitRate, final int audioChannels, final int maxDuration, final boolean redirectToAudioBuffer) throws IOException {

if (getActivity() == null) {

return null;

}

if(!checkForPermission(Manifest.permission.RECORD_AUDIO, "This is required to record audio")){

return null;

}

- final AndroidRecorder[] record = new AndroidRecorder[1];

+ final Media[] record = new Media[1];

final IOException[] error = new IOException[1];

final Object lock = new Object();

@@ -3596,34 +3600,190 @@ private Media createMediaRecorder(final String path, final String mimeType, fin

@Override

public void run() {

synchronized (lock) {

- MediaRecorder recorder = new MediaRecorder();

- recorder.setAudioSource(MediaRecorder.AudioSource.MIC);

- if(mimeType.contains("amr")){

+ if (redirectToAudioBuffer) {

+ final int channelConfig =audioChannels == 1 ? android.media.AudioFormat.CHANNEL_IN_MONO

+ : audioChannels == 2 ? android.media.AudioFormat.CHANNEL_IN_STEREO

+ : android.media.AudioFormat.CHANNEL_IN_MONO;

+ final AudioRecord recorder = new AudioRecord(

+ MediaRecorder.AudioSource.MIC,

+ sampleRate,

+ channelConfig,

+ AudioFormat.ENCODING_PCM_16BIT,

+ AudioRecord.getMinBufferSize(sampleRate, channelConfig, AudioFormat.ENCODING_PCM_16BIT)

+ );

+ final com.codename1.media.AudioBuffer audioBuffer = com.codename1.media.MediaManager.getAudioBuffer(path, true, 64);

+ final boolean[] stop = new boolean[1];

+

+ record[0] = new Media() {

+ private int lastTime;

+ private boolean isRecording;

+ @Override

+ public void play() {

+ isRecording = true;

+ recorder.startRecording();

+ new Thread(new Runnable() {

+ public void run() {

+ float[] audioData = new float[audioBuffer.getMaxSize()];

+ short[] buffer = new short[AudioRecord.getMinBufferSize(sampleRate, channelConfig, AudioFormat.ENCODING_PCM_16BIT)];

+ int read = -1;

+ int index = 0;

+ while (isRecording) {

+ while ((read = recorder.read(buffer, 0, buffer.length)) > 0) {

+ if (read > 0) {

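+ for (int i=0; i<read; i++) {

+ audioData[index] = ((float)buffer[i]) / 0x8000; // scale signed 16-bit PCM to [-1, 1)

+ index++;

+ if (index >= audioData.length) {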

+ audioBuffer.copyFrom(audioData, 0, index);

+ index = 0;

+ }

+ }

+ if (index > 0) {

+ audioBuffer.copyFrom(audioData, 0, index);

+ index = 0;

+ }

+ System.out.println("Time is "+getTime());

+ } else {

+ System.out.println("read 0");

+ }

+ }

+ System.out.println("Time is "+getTime());

+ }

+ }

+

+ }).start();

+ }

+

+ @Override

+ public void pause() {

+

+ recorder.stop();

+ isRecording = false;

+ }

+

+ @Override

+ public void prepare() {

+

+ }

+

+ @Override

+ public void cleanup() {

+ pause();

+ com.codename1.media.MediaManager.deleteAudioBuffer(path);

+

+ }

+

+ @Override

+ public int getTime() {

+ if (isRecording) {

+ if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.N) {

+ AudioTimestamp ts = new AudioTimestamp();

+ recorder.getTimestamp(ts, AudioTimestamp.TIMEBASE_MONOTONIC);

+ lastTime = (int) (ts.framePosition / ((float) sampleRate / 1000f));

+ }

+ }

+ return lastTime;

+ }

+

+ @Override

+ public void setTime(int time) {

+

+ }

+

+ @Override

+ public int getDuration() {

+ return getTime();

+ }

+

+ @Override

+ public void setVolume(int vol) {

+

+ }

+

+ @Override

+ public int getVolume() {

+ return 0;

+ }

+

+ @Override

+ public boolean isPlaying() {

+ return recorder.getRecordingState() == AudioRecord.RECORDSTATE_RECORDING;

+ }

+

+ @Override

+ public Component getVideoComponent() {

+ return null;

+ }

+

+ @Override

+ public boolean isVideo() {

+ return false;

+ }

+

+ @Override

+ public boolean isFullScreen() {

+ return false;

+ }

+

+ @Override

+ public void setFullScreen(boolean fullScreen) {

+

+ }

+

+ @Override

+ public void setNativePlayerMode(boolean nativePlayer) {

+

+ }

+

+ @Override

+ public boolean isNativePlayerMode() {

+ return false;

+ }

+

+ @Override

+ public void setVariable(String key, Object value) {

+

+ }

+

+ @Override

+ public Object getVariable(String key) {

+ return null;

+ }

+

+ };

+ lock.notify();

+ } else {

+ MediaRecorder recorder = new MediaRecorder();

+ recorder.setAudioSource(MediaRecorder.AudioSource.MIC);

+

+ if(mimeType.contains("amr")){

recorder.setOutputFormat(MediaRecorder.OutputFormat.AMR_NB);

recorder.setAudioEncoder(MediaRecorder.AudioEncoder.AMR_NB);

- }else{

- recorder.setOutputFormat(MediaRecorder.OutputFormat.MPEG_4);

- recorder.setAudioEncoder(MediaRecorder.AudioEncoder.AAC);

- recorder.setAudioSamplingRate(sampleRate);

- recorder.setAudioEncodingBitRate(bitRate);

- }

- if (audioChannels > 0) {

- recorder.setAudioChannels(audioChannels);

- }

- if (maxDuration > 0) {

- recorder.setMaxDuration(maxDuration);

- }

- recorder.setOutputFile(removeFilePrefix(path));

- try {

- recorder.prepare();

- record[0] = new AndroidRecorder(recorder);

- } catch (IllegalStateException ex) {

- Logger.getLogger(AndroidImplementation.class.getName()).log(Level.SEVERE, null, ex);

- } catch (IOException ex) {

- error[0] = ex;

- } finally {

- lock.notify();

+ }else{

+ recorder.setOutputFormat(MediaRecorder.OutputFormat.MPEG_4);

+ recorder.setAudioEncoder(MediaRecorder.AudioEncoder.AAC);

+ recorder.setAudioSamplingRate(sampleRate);

+ recorder.setAudioEncodingBitRate(bitRate);

+ }

+ if (audioChannels > 0) {

+ recorder.setAudioChannels(audioChannels);

+ }

+ if (maxDuration > 0) {

+ recorder.setMaxDuration(maxDuration);

+ }

+ recorder.setOutputFile(removeFilePrefix(path));

+ try {

+ recorder.prepare();

+ record[0] = new AndroidRecorder(recorder);

+ } catch (IllegalStateException ex) {

+ Logger.getLogger(AndroidImplementation.class.getName()).log(Level.SEVERE, null, ex);

+ } catch (IOException ex) {

+ error[0] = ex;

+ } finally {

+ lock.notify();

+ }

}

+

}
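
Both the Android and JavaSE ports feed the `AudioBuffer` with floats derived from signed 16-bit PCM. A minimal sketch of that conversion, assuming little-endian input and a divisor of 32768 (0x8000) as used in the JavaSE port, is shown below; `PcmConversionSketch` and its method names are hypothetical and not part of the diff.

```java
// Hypothetical helper showing signed 16-bit PCM to float conversion.
class PcmConversionSketch {

    // Converts the first 'count' shorts (as delivered by a 16-bit recorder)
    // into floats in the range [-1, 1). Returns the number of samples written.
    static int shortsToFloats(short[] in, int count, float[] out) {
        for (int i = 0; i < count; i++) {
            out[i] = in[i] / 32768f;
        }
        return count;
    }

    // Converts little-endian 16-bit PCM bytes into floats in [-1, 1).
    // A trailing odd byte, if any, is ignored.
    static float[] littleEndianBytesToFloats(byte[] bytes, int len) {
        float[] out = new float[len / 2];
        for (int i = 0; i + 1 < len; i += 2) {
            int lo = bytes[i] & 0xff; // low byte, treated as unsigned
            int hi = bytes[i + 1];    // high byte, keeps its sign
            short sample = (short) ((hi << 8) | lo);
            out[i / 2] = sample / 32768f;
        }
        return out;
    }
}
```

Dividing by 32768 rather than 32767 maps the most negative sample exactly to -1.0, at the cost of the most positive sample landing slightly below 1.0.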

diff --git a/Ports/JavaSE/src/com/codename1/impl/javase/JavaSEPort.java b/Ports/JavaSE/src/com/codename1/impl/javase/JavaSEPort.java

index 81bc7d8829..0c3d0b4378 100644

--- a/Ports/JavaSE/src/com/codename1/impl/javase/JavaSEPort.java

+++ b/Ports/JavaSE/src/com/codename1/impl/javase/JavaSEPort.java

@@ -102,7 +102,9 @@

import com.codename1.l10n.L10NManager;

import com.codename1.location.Location;

import com.codename1.location.LocationManager;

+import com.codename1.media.AudioBuffer;

import com.codename1.media.Media;

+import com.codename1.media.MediaManager;

import com.codename1.media.MediaRecorderBuilder;

import com.codename1.notifications.LocalNotification;

import com.codename1.payment.Product;

@@ -146,6 +148,8 @@

import java.lang.reflect.InvocationTargetException;

import java.lang.reflect.Modifier;

import java.net.*;

+import java.nio.ByteBuffer;

+import java.nio.ByteOrder;

import java.nio.channels.FileChannel;

import java.security.MessageDigest;

import java.sql.DriverManager;

@@ -10549,7 +10553,8 @@ private boolean isMP3EncodingSupported() {

@Override

public Media createMediaRecorder(MediaRecorderBuilder builder) throws IOException {

- return createMediaRecorder(builder.getPath(), builder.getMimeType(), builder.getSamplingRate(), builder.getBitRate(), builder.getAudioChannels(), 0);

+

+ return createMediaRecorder(builder.getPath(), builder.getMimeType(), builder.getSamplingRate(), builder.getBitRate(), builder.getAudioChannels(), 0, builder.isRedirectToAudioBuffer());

}

@Override

@@ -10560,41 +10565,49 @@ public Media createMediaRecorder(final String path, String mime) throws IOExcept

return createMediaRecorder(builder);

}

- private Media createMediaRecorder(final String path, String mime, final int samplingRate, final int bitRate, final int audioChannels, final int maxDuration) throws IOException {

+ private Media createMediaRecorder(final String path, String mime, final int samplingRate, final int bitRate, final int audioChannels, final int maxDuration, final boolean redirectToAudioBuffer) throws IOException {

+ System.out.println("Is redirect to Audio Buffer? "+redirectToAudioBuffer);

checkMicrophoneUsageDescription();

if(!checkForPermission("android.permission.READ_PHONE_STATE", "This is required to access the mic")){

return null;

}

- if (mime == null) {

- if (path.endsWith(".wav") || path.endsWith(".WAV")) {

- mime = "audio/wav";

- } else if (path.endsWith(".mp3") || path.endsWith(".MP3")) {

- mime = "audio/mp3";

+

+ if (!redirectToAudioBuffer) {

+ if (mime == null) {

+ if (path.endsWith(".wav") || path.endsWith(".WAV")) {

+ mime = "audio/wav";

+ } else if (path.endsWith(".mp3") || path.endsWith(".MP3")) {

+ mime = "audio/mp3";

+ }

}

- }

- if (mime == null) {

- mime = getAvailableRecordingMimeTypes()[0];

- }

- boolean foundMimetype = false;

- for (String mt : getAvailableRecordingMimeTypes()) {

- if (mt.equalsIgnoreCase(mime)) {

- foundMimetype = true;

- break;

+ if (mime == null) {

+ mime = getAvailableRecordingMimeTypes()[0];

+ }

+ boolean foundMimetype = false;

+ for (String mt : getAvailableRecordingMimeTypes()) {

+ if (mt.equalsIgnoreCase(mime)) {

+ foundMimetype = true;

+ break;

+ }

+

+

+ }

+

+ if (!foundMimetype) {

+ throw new IOException("Mimetype "+mime+" not supported on this platform. Use getAvailableMimetypes() to find out what is supported");

}

-

-

- }

-

- if (!foundMimetype) {

- throw new IOException("Mimetype "+mime+" not supported on this platform. Use getAvailableMimetypes() to find out what is supported");

}

- final File file = new File(unfile(path));

- if (!file.getParentFile().exists()) {

- throw new IOException("Cannot write file "+path+" because the parent directory does not exist.");

+ final File file = redirectToAudioBuffer ? null : new File(unfile(path));

+ if (!redirectToAudioBuffer) {

+ if (!file.getParentFile().exists()) {

+ throw new IOException("Cannot write file "+path+" because the parent directory does not exist.");

+ }

}

File tmpFile = file;

- if (!"audio/wav".equalsIgnoreCase(mime) && !(tmpFile.getName().endsWith(".wav") || tmpFile.getName().endsWith(".WAV"))) {

- tmpFile = new File(tmpFile.getParentFile(), tmpFile.getName()+".wav");

+ if (!redirectToAudioBuffer) {

+ if (!"audio/wav".equalsIgnoreCase(mime) && !(tmpFile.getName().endsWith(".wav") || tmpFile.getName().endsWith(".WAV"))) {

+ tmpFile = new File(tmpFile.getParentFile(), tmpFile.getName()+".wav");

+ }

}

final File fTmpFile = tmpFile;

final String fMime = mime;

@@ -10602,27 +10615,39 @@ private Media createMediaRecorder(final String path, String mime, final int sam

java.io.File wavFile = fTmpFile;

File outFile = file;

AudioFileFormat.Type fileType = AudioFileFormat.Type.WAVE;

+

javax.sound.sampled.TargetDataLine line;

boolean recording;

javax.sound.sampled.AudioFormat getAudioFormat() {

+ if (redirectToAudioBuffer) {

+ javax.sound.sampled.AudioFormat format = new javax.sound.sampled.AudioFormat(

+ samplingRate,

+ 16,

+ audioChannels,

+ true,

+ false

+ );

+

+ return format;

+ }

float sampleRate = samplingRate;

int sampleSizeInBits = 8;

int channels = audioChannels;

boolean signed = true;

- boolean bigEndian = true;

+ boolean bigEndian = false;

javax.sound.sampled.AudioFormat format = new javax.sound.sampled.AudioFormat(sampleRate, sampleSizeInBits,

channels, signed, bigEndian);

return format;

}

+

+

@Override

public void play() {

if (line == null) {

try {

- AudioFormat format = getAudioFormat();

+ final AudioFormat format = getAudioFormat();

DataLine.Info info = new DataLine.Info(TargetDataLine.class, format);

-

- // checks if system supports the data line

if (!AudioSystem.isLineSupported(info)) {

throw new RuntimeException("Audio format not supported on this platform");

}

@@ -10638,7 +10663,37 @@ public void play() {

public void run() {

try {

AudioInputStream ais = new AudioInputStream(line);

- AudioSystem.write(ais, fileType, wavFile);

+ if (redirectToAudioBuffer) {

+

+ AudioBuffer buf = MediaManager.getAudioBuffer(path, true, 256);

+ int maxBufferSize = buf.getMaxSize();

+ float[] sampleBuffer = new float[maxBufferSize];

+ byte[] byteBuffer = new byte[samplingRate * audioChannels];

+ int bytesRead = -1;

+ while ((bytesRead = ais.read(byteBuffer)) >= 0) {

+ if (bytesRead > 0) {

+ int sampleBufferPos = 0;

+

+ for (int i = 0; i < bytesRead; i += 2) {

+ sampleBuffer[sampleBufferPos] = ((float)ByteBuffer.wrap(byteBuffer, i, 2)

+ .order(ByteOrder.LITTLE_ENDIAN)

+ .getShort())/ 0x8000;

+ sampleBufferPos++;

+ if (sampleBufferPos >= sampleBuffer.length) {

+ buf.copyFrom(sampleBuffer, 0, sampleBuffer.length);

+ sampleBufferPos = 0;

+ }

+

+ }

+ if (sampleBufferPos > 0) {

+ buf.copyFrom(sampleBuffer, 0, sampleBufferPos);

+ }

+ }

+ }

+ } else {

+ AudioSystem.write(ais, fileType, wavFile);

+ }

+

} catch (IOException ioe) {

throw new RuntimeException(ioe);

}

@@ -10684,12 +10739,15 @@ public void cleanup() {

pause();

}

recording = false;

+ if (redirectToAudioBuffer) {

+ MediaManager.deleteAudioBuffer(path);

+ }

if (line == null) {

return;

}

line.close();

- if (isMP3EncodingSupported() && "audio/mp3".equalsIgnoreCase(fMime)) {

+ if (!redirectToAudioBuffer && isMP3EncodingSupported() && "audio/mp3".equalsIgnoreCase(fMime)) {

final Throwable[] t = new Throwable[1];

CN.invokeAndBlock(new Runnable() {

public void run() {

diff --git a/Ports/iOSPort/nativeSources/CN1AudioUnit.h b/Ports/iOSPort/nativeSources/CN1AudioUnit.h

new file mode 100644

index 0000000000..df2c52d3a8

--- /dev/null

+++ b/Ports/iOSPort/nativeSources/CN1AudioUnit.h

@@ -0,0 +1,46 @@

+/*

+ * Copyright (c) 2012, Codename One and/or its affiliates. All rights reserved.

+ * DO NOT ALTER OR REMOVE COPYRIGHT NOTICES OR THIS FILE HEADER.

+ * This code is free software; you can redistribute it and/or modify it

+ * under the terms of the GNU General Public License version 2 only, as

+ * published by the Free Software Foundation. Codename One designates this

+ * particular file as subject to the "Classpath" exception as provided

+ * by Oracle in the LICENSE file that accompanied this code.

+ *

+ * This code is distributed in the hope that it will be useful, but WITHOUT

+ * ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or

+ * FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License

+ * version 2 for more details (a copy is included in the LICENSE file that

+ * accompanied this code).

+ *

+ * You should have received a copy of the GNU General Public License version

+ * 2 along with this work; if not, write to the Free Software Foundation,

+ * Inc., 51 Franklin St, Fifth Floor, Boston, MA 02110-1301 USA.

+ *

+ * Please contact Codename One through http://www.codenameone.com/ if you

+ * need additional information or have any questions.

+ */

+#import

+#import "xmlvm.h"

+

+#import

+

+@interface CN1AudioUnit : NSObject {

+ AudioBuffer * buff;

+ AudioQueueRef queue;

+ AudioStreamBasicDescription fmt;

+ NSThread *evtThread;

+ JAVA_ARRAY convertedSampleBuffer;

+ NSString* path;

+ int channels;

+ float sampleRate;

+}

+-(id)initWithPath:(NSString*)path channels:(int)channels sampleRate:(float)sampleRate sampleBuffer:(JAVA_ARRAY)sampleBuffer;

+-(BOOL)start;

+-(BOOL)stop;

+-(AudioBuffer*)audioBuffer;

+-(AudioQueueRef)queue;

+-(AudioStreamBasicDescription)fmt;

+-(NSString*)path;

+-(JAVA_ARRAY)convertedSampleBuffer;

+@end

diff --git a/Ports/iOSPort/nativeSources/CN1AudioUnit.m b/Ports/iOSPort/nativeSources/CN1AudioUnit.m

new file mode 100644

index 0000000000..86bf7781f4

--- /dev/null

+++ b/Ports/iOSPort/nativeSources/CN1AudioUnit.m

@@ -0,0 +1,189 @@

+/*

+ * Copyright (c) 2012, Codename One and/or its affiliates. All rights reserved.

+ * DO NOT ALTER OR REMOVE COPYRIGHT NOTICES OR THIS FILE HEADER.

+ * This code is free software; you can redistribute it and/or modify it

+ * under the terms of the GNU General Public License version 2 only, as

+ * published by the Free Software Foundation. Codename One designates this

+ * particular file as subject to the "Classpath" exception as provided

+ * by Oracle in the LICENSE file that accompanied this code.

+ *

+ * This code is distributed in the hope that it will be useful, but WITHOUT

+ * ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or

+ * FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License

+ * version 2 for more details (a copy is included in the LICENSE file that

+ * accompanied this code).

+ *

+ * You should have received a copy of the GNU General Public License version

+ * 2 along with this work; if not, write to the Free Software Foundation,

+ * Inc., 51 Franklin St, Fifth Floor, Boston, MA 02110-1301 USA.

+ *

+ * Please contact Codename One through http://www.codenameone.com/ if you

+ * need additional information or have any questions.

+ */

+#import "CN1AudioUnit.h"

+#import

+#import "com_codename1_media_MediaManager.h"

+#import "com_codename1_media_AudioBuffer.h"

+#import "com_codename1_impl_ios_IOSImplementation.h"

+

+static void HandleInputBuffer (

+ void *userData,

+ AudioQueueRef inAQ,

+ AudioQueueBufferRef inBuffer,

+ const AudioTimeStamp *inStartTime,

+ UInt32 inNumPackets,

+ const AudioStreamPacketDescription *inPacketDesc

+

+ ) {

+ CN1AudioUnit* audioUnit = (CN1AudioUnit*) userData;

+ struct ThreadLocalData* threadStateData = getThreadLocalData();

+ enteringNativeAllocations();

+

+ JAVA_ARRAY convertedSampleBuffer = [audioUnit convertedSampleBuffer];

+ JAVA_ARRAY_FLOAT* sampleData = (JAVA_ARRAY_FLOAT*)convertedSampleBuffer->data;

+ int len = convertedSampleBuffer->length;

+

+ JAVA_OBJECT audioBuffer = com_codename1_media_MediaManager_getAudioBuffer___java_lang_String_boolean_int_R_com_codename1_media_AudioBuffer(CN1_THREAD_GET_STATE_PASS_ARG fromNSString(CN1_THREAD_GET_STATE_PASS_ARG [audioUnit path]), JAVA_TRUE, 64);

+

+ SInt16 *inputFrames = (SInt16*)( inBuffer->mAudioData);

+ UInt32 numFrames = inNumPackets;

+

+ // If your DSP code can use integers, then don't bother converting to

+ // floats here, as it just wastes CPU. However, most DSP algorithms rely

+ // on floating point, and this is especially true if you are porting a

+ // VST/AU to iOS.

+ int index = 0;

+ for(int i = 0; i < numFrames; i++) {

+ sampleData[index] = (JAVA_ARRAY_FLOAT)inputFrames[i] / 32768;

+ index++;

+ if (index >= len) {

+ com_codename1_media_AudioBuffer_copyFrom___float_1ARRAY_int_int(CN1_THREAD_GET_STATE_PASS_ARG audioBuffer, (JAVA_OBJECT)convertedSampleBuffer, 0, index);

+ index = 0;

+ }

+ }

+

+ if (index > 0) {

+ com_codename1_media_AudioBuffer_copyFrom___float_1ARRAY_int_int(CN1_THREAD_GET_STATE_PASS_ARG audioBuffer, (JAVA_OBJECT)convertedSampleBuffer, 0, index);

+ index = 0;

+ }

+

+ AudioQueueEnqueueBuffer ([audioUnit queue], inBuffer, 0, NULL);

+

+ finishedNativeAllocations();

+

+}

+

+@implementation CN1AudioUnit

+

+-(JAVA_ARRAY)convertedSampleBuffer {

+ return convertedSampleBuffer;

+}

+

+-(NSString*)path {

+ return path;

+}

+

+-(AudioBuffer*)audioBuffer {

+ return buff;

+}

+

+-(AudioQueueRef)queue {

+ return queue;

+}

+

+-(AudioStreamBasicDescription)fmt {

+ return fmt;

+}

+

+

+

+-(id)initWithPath:(NSString*)_path channels:(int)_channels sampleRate:(float)_sampleRate sampleBuffer:(JAVA_ARRAY)sampleBuffer {

+ com_codename1_impl_ios_IOSImplementation_retain___java_lang_Object(CN1_THREAD_GET_STATE_PASS_SINGLE_ARG, (JAVA_OBJECT)sampleBuffer);

+

+ convertedSampleBuffer = sampleBuffer;

+ path = _path;

+ channels = _channels;

+ sampleRate = _sampleRate;

+

+

+

+

+ return self;

+}

+

+-(void)run {

+

+ NSError * error;

+

+ fmt.mFormatID = kAudioFormatLinearPCM;

+ fmt.mSampleRate = sampleRate;

+ fmt.mChannelsPerFrame = channels;

+ fmt.mBitsPerChannel = 16;

+ fmt.mFramesPerPacket = 1;

+ fmt.mBytesPerFrame = sizeof (SInt16);

+ fmt.mBytesPerPacket = sizeof (SInt16);

+

+

+ fmt.mFormatFlags = kLinearPCMFormatFlagIsSignedInteger | kLinearPCMFormatFlagIsPacked;

+

+ [NSRunLoop currentRunLoop];

+

+

+ OSStatus status = AudioQueueNewInput ( // 1

+ &fmt, // 2

+ HandleInputBuffer, // 3

+ self, // 4

+ NULL, // 5

+ kCFRunLoopCommonModes, // 6

+ 0, // 7

+ &queue // 8

+ );

+

+

+ int kNumberBuffers = 5;

+ int kSamplesSize = 4096;

+ AudioQueueBufferRef buffers[kNumberBuffers];

+ UInt32 bufferByteSize = kSamplesSize;

+ for (int i = 0; i < kNumberBuffers; ++i) {

+ OSStatus allocateStatus;

+ allocateStatus = AudioQueueAllocateBuffer (

+ queue,

+ bufferByteSize,

+ &buffers[i]

+ );

+ OSStatus enqueStatus;

+ NSLog(@"allocateStatus = %d" , allocateStatus);

+ enqueStatus = AudioQueueEnqueueBuffer (

+ queue,

+ buffers[i],

+ 0,

+ NULL

+ );

+ NSLog(@"enqueStatus = %d" , enqueStatus);

+ }

+ AudioQueueStart (queue, NULL);

+}

+

+-(BOOL)start {

+ [self run];

+ return YES;

+}

+-(BOOL)stop {

+ AudioQueueStop(queue, YES);

+ AudioQueueDispose(queue, YES);

+ return YES;

+}

+

+-(void)dealloc {

+

+ if (convertedSampleBuffer != NULL) {

+ com_codename1_impl_ios_IOSImplementation_release___java_lang_Object(CN1_THREAD_GET_STATE_PASS_ARG (JAVA_OBJECT)convertedSampleBuffer);

+ convertedSampleBuffer = NULL;

+ }

+

+

+ [super dealloc];

+}

+

+@end

+
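The `HandleInputBuffer` callback above converts signed 16-bit frames to floats and forwards them in chunks no larger than the shared sample buffer, flushing a final partial chunk at the end. A minimal Java sketch of that loop follows, assuming a hypothetical `PcmChunkerSketch` class that collects the chunks into a list instead of calling `AudioBuffer.copyFrom`; it is an illustration of the technique, not part of the patch.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper, not part of Codename One.
final class PcmChunkerSketch {

    /**
     * Converts signed 16-bit samples to floats in [-1, 1) and groups them
     * into chunks of at most chunkLen samples, preserving arrival order.
     */
    static List<float[]> toFloatChunks(short[] frames, int chunkLen) {
        if (chunkLen <= 0) {
            throw new IllegalArgumentException("chunkLen must be positive");
        }
        List<float[]> chunks = new ArrayList<>();
        float[] scratch = new float[chunkLen]; // reused, like convertedSampleBuffer
        int index = 0;
        for (short frame : frames) {
            scratch[index++] = frame / 32768f; // same scaling as the native callback
            if (index >= chunkLen) {
                chunks.add(Arrays.copyOf(scratch, index)); // full chunk
                index = 0;
            }
        }
        if (index > 0) {
            chunks.add(Arrays.copyOf(scratch, index)); // trailing partial chunk
        }
        return chunks;
    }

    public static void main(String[] args) {
        short[] frames = {0, 16384, -32768, 32767, -16384};
        for (float[] chunk : toFloatChunks(frames, 2)) {
            System.out.println(Arrays.toString(chunk));
        }
    }
}
```

Because the scratch array is reused between chunks, a consumer has to copy what it needs before the next chunk arrives, which is why the sketch copies each chunk before storing it.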

diff --git a/Ports/iOSPort/nativeSources/IOSNative.m b/Ports/iOSPort/nativeSources/IOSNative.m

index c8f7d4b299..830c6ed2e4 100644

--- a/Ports/iOSPort/nativeSources/IOSNative.m

+++ b/Ports/iOSPort/nativeSources/IOSNative.m

@@ -34,7 +34,7 @@

#else

#include "cn1_globals.h"

#endif

-

+#import "CN1AudioUnit.h"

#import

#import "CodenameOne_GLViewController.h"

#import "NetworkConnectionImpl.h"

@@ -5247,6 +5247,85 @@ void com_codename1_impl_ios_IOSNative_nsDataToByteArray___long_byte_1ARRAY(CN1_T

}

+JAVA_LONG com_codename1_impl_ios_IOSNative_createAudioUnit___java_lang_String_int_float_float_1ARRAY_R_long(CN1_THREAD_STATE_MULTI_ARG JAVA_OBJECT instanceObject,

+ JAVA_OBJECT path, JAVA_INT audioChannels, JAVA_FLOAT sampleRate, JAVA_OBJECT sampleBuffer) {

+#ifdef INCLUDE_MICROPHONE_USAGE

+ __block CN1AudioUnit* recorder = nil;

+

+ __block NSString *exStr = nil;

+ dispatch_sync(dispatch_get_main_queue(), ^{

+ POOL_BEGIN();

+

+ AVAudioSession *audioSession = [AVAudioSession sharedInstance];

+ NSError *err = nil;

+ [audioSession setCategory :AVAudioSessionCategoryPlayAndRecord error:&err];

+ if(err){

+ CN1Log(@"audioSession: %@ %d %@", [err domain], [err code], [[err userInfo] description]);

+ exStr = [[err userInfo] description];

+ POOL_END();

+ return;

+ }

+ err = nil;

+ [audioSession setActive:YES error:&err];

+ if(err){

+ CN1Log(@"audioSession: %@ %d %@", [err domain], [err code], [[err userInfo] description]);

+ exStr = [[err userInfo] description];

+ POOL_END();

+ return;

+ }

+

+ if (isIOS7()) {

+ CN1Log(@"Asking for record permission");

+ [audioSession requestRecordPermission:^(BOOL granted) {

+ POOL_BEGIN();

+ if (granted) {

+ recorder = [[CN1AudioUnit alloc] initWithPath:toNSString(CN1_THREAD_STATE_PASS_ARG path) channels:audioChannels sampleRate:sampleRate sampleBuffer:(JAVA_ARRAY)sampleBuffer];

+ } else {

+ exStr = @"Denied access to use the microphone";

+ }

+ POOL_END();

+ }];

+ } else {

+ recorder = [[CN1AudioUnit alloc] initWithPath:toNSString(CN1_THREAD_STATE_PASS_ARG path) channels:audioChannels sampleRate:sampleRate sampleBuffer:(JAVA_ARRAY)sampleBuffer];

+ }

+ POOL_END();

+ });

+ if (exStr != nil) {

+ JAVA_OBJECT ex = __NEW_java_io_IOException(CN1_THREAD_STATE_PASS_SINGLE_ARG);

+ java_io_IOException___INIT_____java_lang_String(CN1_THREAD_STATE_PASS_ARG ex, fromNSString(CN1_THREAD_GET_STATE_PASS_ARG exStr));

+ throwException(threadStateData, ex);

+ return (JAVA_LONG)0;

+ } else {

+

+ return (JAVA_LONG)((BRIDGE_CAST void*)recorder);

+ }

+ #else

+ return (JAVA_LONG)0;

+ #endif

+ }

+

+

+

+

+void com_codename1_impl_ios_IOSNative_startAudioUnit___long(CN1_THREAD_STATE_MULTI_ARG JAVA_OBJECT instanceObject, JAVA_LONG peer) {

+ CN1AudioUnit* audioUnit = (BRIDGE_CAST CN1AudioUnit*)((void *)peer);

+ [audioUnit start];

+

+}

+

+void com_codename1_impl_ios_IOSNative_stopAudioUnit___long(CN1_THREAD_STATE_MULTI_ARG JAVA_OBJECT instanceObject, JAVA_LONG peer) {

+ CN1AudioUnit* audioUnit = (BRIDGE_CAST CN1AudioUnit*)((void *)peer);

+ [audioUnit stop];

+

+}

+

+void com_codename1_impl_ios_IOSNative_destroyAudioUnit___long(CN1_THREAD_STATE_MULTI_ARG JAVA_OBJECT instanceObject, JAVA_LONG peer) {

+ CN1AudioUnit* audioUnit = (BRIDGE_CAST CN1AudioUnit*)((void *)peer);

+ [audioUnit release];

+

+}

+

+

JAVA_LONG com_codename1_impl_ios_IOSNative_createAudioRecorder___java_lang_String_java_lang_String_int_int_int_int(CN1_THREAD_STATE_MULTI_ARG JAVA_OBJECT instanceObject,

JAVA_OBJECT destinationFile, JAVA_OBJECT mimeType, JAVA_INT sampleRate, JAVA_INT bitRate, JAVA_INT channels, JAVA_INT maxDuration) {

#ifdef INCLUDE_MICROPHONE_USAGE

diff --git a/Ports/iOSPort/src/com/codename1/impl/ios/IOSImplementation.java b/Ports/iOSPort/src/com/codename1/impl/ios/IOSImplementation.java

index 9bf8d60f48..f0965a9ecb 100644

--- a/Ports/iOSPort/src/com/codename1/impl/ios/IOSImplementation.java

+++ b/Ports/iOSPort/src/com/codename1/impl/ios/IOSImplementation.java

@@ -3023,7 +3023,7 @@ public static void finishedCreatingAudioRecorder(IOException ex) {

@Override

public Media createMediaRecorder(MediaRecorderBuilder builder) throws IOException {

- return createMediaRecorder(builder.getPath(), builder.getMimeType(), builder.getSamplingRate(), builder.getBitRate(), builder.getAudioChannels(), 0);

+ return createMediaRecorder(builder.getPath(), builder.getMimeType(), builder.getSamplingRate(), builder.getBitRate(), builder.getAudioChannels(), 0, builder.isRedirectToAudioBuffer());

}

@@ -3037,10 +3037,117 @@ public Media createMediaRecorder(final String path, final String mimeType) throw

}

- private Media createMediaRecorder(final String path, final String mimeType, final int sampleRate, final int bitRate, final int audioChannels, final int maxDuration) throws IOException {

+ private Media createMediaRecorder(final String path, final String mimeType, final int sampleRate, final int bitRate, final int audioChannels, final int maxDuration, final boolean redirectToAudioBuffer) throws IOException {

if (!nativeInstance.checkMicrophoneUsage()) {

throw new RuntimeException("Please add the ios.NSMicrophoneUsageDescription build hint");

}

+ if (redirectToAudioBuffer) {

+

+ return new Media() {

+ long peer = nativeInstance.createAudioUnit(path, audioChannels, sampleRate, new float[64]);

+ boolean isPlaying;

+ @Override

+ public void play() {

+ isPlaying = true;

+ nativeInstance.startAudioUnit(peer);

+ }

+

+ @Override

+ public void pause() {

+ isPlaying = false;

+ nativeInstance.stopAudioUnit(peer);

+ }

+

+ @Override

+ public void prepare() {

+

+ }

+

+ @Override

+ public void cleanup() {

+ if (peer == 0) {

+ return;

+ }

+ if (isPlaying) {

+ pause();

+ }

+

+ nativeInstance.destroyAudioUnit(peer);

+ }

+

+ @Override

+ public int getTime() {

+ return -1;

+ }

+

+ @Override

+ public void setTime(int time) {

+

+ }

+

+ @Override

+ public int getDuration() {

+ return -1;

+ }

+

+ @Override

+ public void setVolume(int vol) {

+

+ }

+

+ @Override

+ public int getVolume() {

+ return -1;

+ }

+

+ @Override

+ public boolean isPlaying() {

+ return isPlaying;

+ }

+

+ @Override

+ public Component getVideoComponent() {

+ return null;

+ }

+

+ @Override

+ public boolean isVideo() {

+ return false;

+ }

+

+ @Override

+ public boolean isFullScreen() {

+ return false;

+ }

+

+ @Override

+ public void setFullScreen(boolean fullScreen) {

+

+ }

+

+ @Override

+ public void setNativePlayerMode(boolean nativePlayer) {

+

+ }

+

+ @Override

+ public boolean isNativePlayerMode() {

+ return false;

+ }

+

+ @Override

+ public void setVariable(String key, Object value) {

+

+ }

+

+ @Override

+ public Object getVariable(String key) {

+ return null;

+ }

+

+ };

+ }

+

finishedCreatingAudioRecorder = false;

createAudioRecorderException = null;

final long[] peer = new long[] { nativeInstance.createAudioRecorder(path, mimeType, sampleRate, bitRate, audioChannels, maxDuration) };

diff --git a/Ports/iOSPort/src/com/codename1/impl/ios/IOSNative.java b/Ports/iOSPort/src/com/codename1/impl/ios/IOSNative.java

index 88523b8f1f..d205cb0e50 100644

--- a/Ports/iOSPort/src/com/codename1/impl/ios/IOSNative.java

+++ b/Ports/iOSPort/src/com/codename1/impl/ios/IOSNative.java

@@ -338,6 +338,14 @@ byte[] loadResource(String name, String type) {

// capture

native void captureCamera(boolean movie, int quality, int duration);

native void openGallery(int type);

+ native void destroyAudioUnit(long peer);

+

+ native long createAudioUnit(String path, int audioChannels, float sampleRate, float[] f);

+

+

+ native void startAudioUnit(long audioUnit);

+ native void stopAudioUnit(long audioUnit);

+

native long createAudioRecorder(final String path, final String mimeType, final int sampleRate, final int bitRate, final int audioChannels, final int maxDuration);

native void startAudioRecord(long peer);

native void pauseAudioRecord(long peer);

@@ -697,6 +705,7 @@ native void nativeSetTransformMutable(

native void setConnectionId(long peer, int id);

+

diff --git a/Samples/samples/AudioBufferSample/AudioBufferSample.java b/Samples/samples/AudioBufferSample/AudioBufferSample.java

new file mode 100644

index 0000000000..a08aba59cd

--- /dev/null

+++ b/Samples/samples/AudioBufferSample/AudioBufferSample.java

@@ -0,0 +1,158 @@

+package com.codename1.samples;

+

+

+import com.codename1.capture.Capture;

+import com.codename1.components.MultiButton;

+import com.codename1.io.File;

+import com.codename1.io.FileSystemStorage;

+import static com.codename1.ui.CN.*;

+import com.codename1.ui.Display;

+import com.codename1.ui.Form;

+import com.codename1.ui.Dialog;

+import com.codename1.ui.Label;

+import com.codename1.ui.plaf.UIManager;

+import com.codename1.ui.util.Resources;

+import com.codename1.io.Log;

+import com.codename1.ui.Toolbar;

+import java.io.IOException;

+import com.codename1.ui.layouts.BoxLayout;

+import com.codename1.io.NetworkEvent;

+import com.codename1.io.Util;

+import com.codename1.l10n.SimpleDateFormat;

+import com.codename1.media.AudioBuffer;

+

+import com.codename1.media.Media;

+import com.codename1.media.MediaManager;

+import com.codename1.media.MediaRecorderBuilder;

+import com.codename1.media.WAVWriter;

+import com.codename1.ui.FontImage;

+import com.codename1.ui.plaf.Style;

+import java.util.Arrays;

+import java.util.Date;

+

+/**

+ * This file was generated by Codename One for the purpose

+ * of building native mobile applications using Java.

+ */

+public class AudioBufferSample {

+

+ private Form current;

+ private Resources theme;

+

+ public void init(Object context) {

+ // use two network threads instead of one

+ updateNetworkThreadCount(2);

+

+ theme = UIManager.initFirstTheme("/theme");

+

+ // Enable Toolbar on all Forms by default

+ Toolbar.setGlobalToolbar(true);

+

+ // Pro only feature

+ Log.bindCrashProtection(true);

+

+ addNetworkErrorListener(err -> {

+ // prevent the event from propagating

+ err.consume();

+ if(err.getError() != null) {

+ Log.e(err.getError());

+ }

+ Log.sendLogAsync();

+ Dialog.show("Connection Error", "There was a networking error in the connection to " + err.getConnectionRequest().getUrl(), "OK", null);

+ });

+ }

+

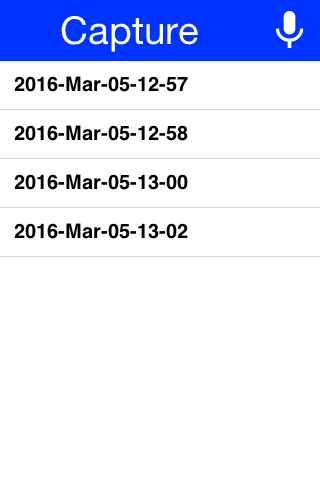

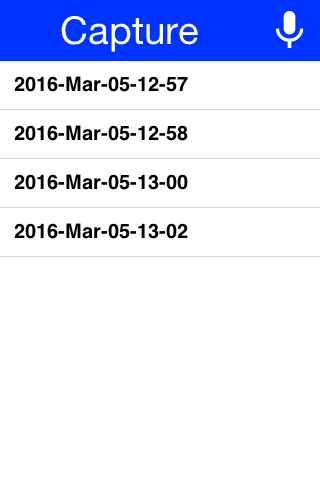

+ public void start() {

+ if (current != null) {

+ current.show();

+ return;

+ }

+ Form hi = new Form("Capture", BoxLayout.y());

+ hi.setToolbar(new Toolbar());

+ Style s = UIManager.getInstance().getComponentStyle("Title");

+ FontImage icon = FontImage.createMaterial(FontImage.MATERIAL_MIC, s);

+

+ FileSystemStorage fs = FileSystemStorage.getInstance();

+ String recordingsDir = fs.getAppHomePath() + "recordings/";

+ fs.mkdir(recordingsDir);

+ try {

+ for (String file : fs.listFiles(recordingsDir)) {

+ MultiButton mb = new MultiButton(file.substring(file.lastIndexOf("/") + 1));

+ mb.addActionListener((e) -> {

+ try {

+ Media m = MediaManager.createMedia(recordingsDir + file, false);

+ m.play();

+ } catch (Throwable err) {

+ Log.e(err);

+ }

+ });

+ hi.add(mb);

+ }

+

+ hi.getToolbar().addCommandToRightBar("", icon, (ev) -> {

+ try {

+ String path = "tmpBuffer.pcm";

+ WAVWriter wavFileWriter = new WAVWriter(new File("tmpBuffer.wav"), 44100, 1, 16);

+ AudioBuffer audioBuffer = MediaManager.getAudioBuffer(path, true, 64);

+ MediaRecorderBuilder options = new MediaRecorderBuilder()

+ .audioChannels(1)

+

+ .redirectToAudioBuffer(true)

+

+ .path(path);

+ System.out.println("Builder isredirect? "+options.isRedirectToAudioBuffer());

+ float[] byteBuffer = new float[audioBuffer.getMaxSize()];

+ audioBuffer.addCallback(buf->{

+ buf.copyTo(byteBuffer);

+ try {

+ wavFileWriter.write(byteBuffer, 0, audioBuffer.getSize());

+ } catch (Throwable t) {

+ Log.e(t);

+ }

+

+ });

+

+

+ String file = Capture.captureAudio(options);

+ wavFileWriter.close();

+ SimpleDateFormat sd = new SimpleDateFormat("yyyy-MMM-dd-kk-mm");

+ String fileName = sd.format(new Date());

+ String filePath = recordingsDir + fileName;

+ Util.copy(fs.openInputStream(new File("tmpBuffer.wav").getAbsolutePath()), fs.openOutputStream(filePath));

+ MultiButton mb = new MultiButton(fileName);

+ mb.addActionListener((e) -> {

+ try {

+ Media m = MediaManager.createMedia(filePath, false);

+ m.play();

+ } catch (IOException err) {

+ Log.e(err);

+ }

+ });

+ hi.add(mb);

+ hi.revalidate();

+ if (file != null) {

+ System.out.println(file);

+ }

+ } catch (Throwable err) {

+ Log.e(err);

+ }

+ });

+ } catch (Throwable err) {

+ Log.e(err);

+ }

+ hi.show();

+ }

+

+

+ public void stop() {

+ current = getCurrentForm();

+ if(current instanceof Dialog) {

+ ((Dialog)current).dispose();

+ current = getCurrentForm();

+ }

+ }

+

+ public void destroy() {

+ }

+

+}
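The sample above relies on the buffer notifying its callbacks each time a frame is copied in, and on the callback reading only `getSize()` samples, since a frame can be shorter than the maximum size. The sketch below illustrates that contract with a hypothetical `CallbackBufferSketch` class and `FrameListener` interface; both names are assumptions made for illustration and only approximate the `AudioBuffer` behaviour shown in this patch.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a callback-driven sample buffer.
final class CallbackBufferSketch {

    interface FrameListener {
        void frameReceived(CallbackBufferSketch buffer);
    }

    private final float[] data;
    private int size; // number of valid samples in the current frame
    private final List<FrameListener> listeners = new ArrayList<>();

    CallbackBufferSketch(int maxSize) {
        data = new float[maxSize];
    }

    synchronized void addListener(FrameListener listener) {
        listeners.add(listener);
    }

    /** Replaces the buffer contents and notifies every listener. */
    synchronized void copyFrom(float[] source, int offset, int len) {
        if (len > data.length) {
            throw new IllegalArgumentException(
                    "Buffer size is " + data.length + " but got " + len + " samples");
        }
        System.arraycopy(source, offset, data, 0, len);
        size = len;
        for (FrameListener listener : listeners) {
            listener.frameReceived(this);
        }
    }

    /** Copies only the valid samples; the rest of dest is left untouched. */
    synchronized void copyTo(float[] dest) {
        System.arraycopy(data, 0, dest, 0, size);
    }

    synchronized int getSize() {
        return size;
    }

    int getMaxSize() {
        return data.length;
    }
}
```

A listener that allocates its scratch array once with `getMaxSize()` will still see stale samples past `getSize()` after a short frame, so it should pass `getSize()` as the length when writing the data onward.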

diff --git a/Samples/samples/SwitchScrollWheelingIssue/SwitchScrollWheelingIssue.java b/Samples/samples/SwitchScrollWheelingIssue/SwitchScrollWheelingIssue.java

new file mode 100644

index 0000000000..124857d63a

--- /dev/null

+++ b/Samples/samples/SwitchScrollWheelingIssue/SwitchScrollWheelingIssue.java

@@ -0,0 +1,79 @@

+package com.codename1.samples;

+

+

+import com.codename1.components.Switch;

+import static com.codename1.ui.CN.*;

+import com.codename1.ui.Display;

+import com.codename1.ui.Form;

+import com.codename1.ui.Dialog;

+import com.codename1.ui.Label;

+import com.codename1.ui.plaf.UIManager;

+import com.codename1.ui.util.Resources;

+import com.codename1.io.Log;

+import com.codename1.ui.Toolbar;

+import java.io.IOException;

+import com.codename1.ui.layouts.BoxLayout;

+import com.codename1.io.NetworkEvent;

+import com.codename1.ui.Container;

+import com.codename1.ui.layouts.GridLayout;

+

+/**

+ * This file was generated by Codename One for the purpose

+ * of building native mobile applications using Java.

+ */

+public class SwitchScrollWheelingIssue {

+

+ private Form current;

+ private Resources theme;

+

+ public void init(Object context) {

+ // use two network threads instead of one

+ updateNetworkThreadCount(2);

+

+ theme = UIManager.initFirstTheme("/theme");

+

+ // Enable Toolbar on all Forms by default

+ Toolbar.setGlobalToolbar(true);

+

+ // Pro only feature

+ Log.bindCrashProtection(true);

+

+ addNetworkErrorListener(err -> {

+ // prevent the event from propagating

+ err.consume();

+ if(err.getError() != null) {

+ Log.e(err.getError());

+ }

+ Log.sendLogAsync();

+ Dialog.show("Connection Error", "There was a networking error in the connection to " + err.getConnectionRequest().getUrl(), "OK", null);

+ });

+ }

+

+ public void start() {

+ if(current != null){

+ current.show();

+ return;

+ }

+ Form f = new Form();

+ f.setLayout(new BoxLayout(BoxLayout.Y_AXIS));

+ f.setScrollable(true);

+ for (int x=1; x < 100; x++) {

+ Container cnt = new Container(new GridLayout(2));

+ cnt.addAll(new Label("Line" + x), new Switch());

+ f.add(cnt);

+ }

+ f.show();

+ }

+

+ public void stop() {

+ current = getCurrentForm();

+ if(current instanceof Dialog) {

+ ((Dialog)current).dispose();

+ current = getCurrentForm();

+ }

+ }

+

+ public void destroy() {

+ }

+

+}

*/

public class MediaManager {

+

+ /**

+ * A static map of audio buffers. These can be used to register an Audio buffer to receive

+ * raw PCM data from the microphone.

+ * @since 7.0

+ */

+ private static Map<String, AudioBuffer> audioBuffers = new HashMap<String, AudioBuffer>();

private static RemoteControlListener remoteControlListener;

+ /**

+ * Gets an audio buffer at the given path.

+ * @param path The path to the Audio buffer. This path doesn't correspond to a real file. It is just

+ * used as a key to map to the audio buffer so that it can be addressed.

+ * @return The AudioBuffer or null if no buffer exists at that path.

+ * @since 7.0

+ */

+ public static synchronized AudioBuffer getAudioBuffer(String path) {

+ return getAudioBuffer(path, false, 256);

+ }

+

+ /**

+ * Gets or creates an audio buffer at the given path.

+ * @param path The path to the Audio buffer. This path doesn't correspond to a real file. It is just

+ * used as a key to map to the audio buffer so that it can be addressed.

+ * @param create If this flag is {@literal true} and no buffer exists at the given path,

+ * then the buffer will be created.

+ * @param size The maximum size of the buffer.

+ * @return The audio buffer or null if no buffer exists at that path and the {@literal create} flag is {@literal false}.

+ * @since 7.0

+ */

+ public static synchronized AudioBuffer getAudioBuffer(String path, boolean create, int size) {

+ if (create && !audioBuffers.containsKey(path)) {

+ audioBuffers.put(path, new AudioBuffer(size));

+ }

+

+ return audioBuffers.get(path);

+ }

+

+ /**

+ * Deletes the audio buffer at the given path.

+ * @param path The path to the audio buffer to delete.

+ * @since 7.0

+ */

+ public static synchronized void deleteAudioBuffer(String path) {

+ audioBuffers.remove(path);

+ }

+

+

+

/**

* Registers a listener to be notified of remote control events - e.g.

* the play/pause/seek buttons on the user's lock screen when background

@@ -278,6 +327,9 @@ public static Media createMediaRecorder(String path, String mimeType) throws IOE

* @since 7.0

*/

public static Media createMediaRecorder(MediaRecorderBuilder builder) throws IOException {

+ if (builder.isRedirectToAudioBuffer()) {

+ return builder.build();

+ }

String mimeType = builder.getMimeType();

if (mimeType == null && getAvailableRecordingMimeTypes().length > 0) {

mimeType = getAvailableRecordingMimeTypes()[0];

@@ -298,6 +350,7 @@ public static Media createMediaRecorder(MediaRecorderBuilder builder) throws IOE

" is not supported on this platform use "

+ "getAvailableRecordingMimeTypes()");

}

+

return Display.getInstance().createMediaRecorder(path, mimeType);

}

}
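The audio buffer methods added to `MediaManager` follow a keyed get-or-create pattern: the path is only a lookup key, and the size argument matters only on first creation. A minimal sketch of that pattern, assuming a hypothetical `BufferRegistrySketch` class that stores plain `float[]` arrays instead of `AudioBuffer` instances:

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical registry; illustrates the get-or-create semantics only.
final class BufferRegistrySketch {

    private static final Map<String, float[]> BUFFERS = new HashMap<>();

    /**
     * Returns the buffer registered under key. If none exists and create is
     * true, a buffer of the given size is registered first. The size is
     * ignored when a buffer already exists under that key.
     */
    static synchronized float[] get(String key, boolean create, int size) {
        if (create && !BUFFERS.containsKey(key)) {
            BUFFERS.put(key, new float[size]);
        }
        return BUFFERS.get(key);
    }

    /** Removes the buffer registered under key, if any. */
    static synchronized void delete(String key) {
        BUFFERS.remove(key);
    }

    public static void main(String[] args) {
        float[] first = get("tmpBuffer.pcm", true, 64);
        float[] second = get("tmpBuffer.pcm", true, 256);
        System.out.println(first == second);
        delete("tmpBuffer.pcm");
        System.out.println(get("tmpBuffer.pcm", false, 64) == null);
    }
}
```

One consequence of this design is that whoever asks first decides the buffer size, so the application and the native recorder need to agree on it; in this patch both the sample and the iOS callback request 64.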

diff --git a/CodenameOne/src/com/codename1/media/MediaRecorderBuilder.java b/CodenameOne/src/com/codename1/media/MediaRecorderBuilder.java

index 9811f4706f..53f34d7bf3 100644

--- a/CodenameOne/src/com/codename1/media/MediaRecorderBuilder.java

+++ b/CodenameOne/src/com/codename1/media/MediaRecorderBuilder.java

@@ -34,6 +34,8 @@ public class MediaRecorderBuilder {

private int audioChannels=1,

bitRate=64000,

samplingRate=44100;

+ private boolean redirectToAudioBuffer;

+

private String mimeType = Display.getInstance().getAvailableRecordingMimeTypes()[0],

path;

@@ -91,6 +93,18 @@ public MediaRecorderBuilder path(String path) {

return this;

}

+ /**

+ * Set this flag to {@literal true} to redirect the microphone input to an audio buffer.

+ * This is handy if you just want to capture the raw PCM data from the microphone.

+ * @param redirect True to redirect output to an audio buffer. The {@link #path(java.lang.String) }

+ * parameter would then be used as the path to the audio buffer instead of the output file.

+ * @return Self for chaining.

+ */

+ public MediaRecorderBuilder redirectToAudioBuffer(boolean redirect) {

+ this.redirectToAudioBuffer = redirect;

+ return this;

+ }

+

/**

* Builds the MediaRecorder with the given settings.

* @return

@@ -147,7 +161,15 @@ public String getMimeType() {

public String getPath() {

return path;

}

-

-

+

+ /**

+ * True if the media recorder should redirect output to an audio buffer instead

+ * of a file.

+ * @return True if output is redirected to an audio buffer, false otherwise.

+ */

+ public boolean isRedirectToAudioBuffer() {

+ return redirectToAudioBuffer;

+ }

+

}

diff --git a/CodenameOne/src/com/codename1/media/WAVWriter.java b/CodenameOne/src/com/codename1/media/WAVWriter.java

new file mode 100644

index 0000000000..dedcbdf83a

--- /dev/null

+++ b/CodenameOne/src/com/codename1/media/WAVWriter.java

@@ -0,0 +1,149 @@

+package com.codename1.media;

+

+import java.io.InputStream;

+import com.codename1.io.Util;

+import java.io.IOException;

+import com.codename1.io.FileSystemStorage;

+import java.io.OutputStream;

+import com.codename1.io.File;

+

+/**

+ * A class that can write raw PCM data to a WAV file.

+ *

+ *

+ * @since 7.0

+ * @author shannah

+ */

+public class WAVWriter implements AutoCloseable

+{

+ private File outputFile;

+ private OutputStream out;

+ private int samplingRate;

+ private int channels;

+ private int numBits;

+ private long dataLength;

+

+ /**

+ * Creates a new writer for writing a WAV file.

+ * @param outputFile The output file.

+ * @param samplingRate The sampling rate. E.g. 44100

+ * @param channels The number of channels. E.g. 1 or 2

+ * @param numBits The number of bits per sample. Must be 8 or 16.

+ * @throws IOException If the output stream for the file cannot be opened.

+ */

+ public WAVWriter(final File outputFile, final int samplingRate, final int channels, final int numBits) throws IOException {

+ this.outputFile = outputFile;

+ this.out = FileSystemStorage.getInstance().openOutputStream(outputFile.getAbsolutePath());

+ this.samplingRate = samplingRate;

+ this.channels = channels;

+ this.numBits = numBits;

+ }

+

+ private void writeHeader() throws IOException {

+ final byte[] header = new byte[44];

+ long totalDataLen = dataLength + 36;

+ final long bitrate = this.samplingRate * this.channels * this.numBits;

+ header[0] = 82;

+ header[1] = 73;

+ header[3] = (header[2] = 70);

+ header[4] = (byte)(totalDataLen & 0xFFL);

+ header[5] = (byte)(totalDataLen >> 8 & 0xFFL);

+ header[6] = (byte)(totalDataLen >> 16 & 0xFFL);

+ header[7] = (byte)(totalDataLen >> 24 & 0xFFL);

+ header[8] = 87;

+ header[9] = 65;

+ header[10] = 86;

+ header[11] = 69;

+ header[12] = 102;

+ header[13] = 109;

+ header[14] = 116;

+ header[15] = 32;

+ header[16] = 16; // fmt chunk size: always 16 bytes for PCM

+ header[17] = 0;

+ header[19] = (header[18] = 0);

+ header[20] = 1;

+ header[21] = 0;

+ header[22] = (byte)this.channels;

+ header[23] = 0;

+ header[24] = (byte)(this.samplingRate & 0xFF);

+ header[25] = (byte)(this.samplingRate >> 8 & 0xFF);

+ header[26] = (byte)(this.samplingRate >> 16 & 0xFF);

+ header[27] = (byte)(this.samplingRate >> 24 & 0xFF);

+ header[28] = (byte)(bitrate / 8L & 0xFFL);

+ header[29] = (byte)(bitrate / 8L >> 8 & 0xFFL);

+ header[30] = (byte)(bitrate / 8L >> 16 & 0xFFL);

+ header[31] = (byte)(bitrate / 8L >> 24 & 0xFFL);

+ header[32] = (byte)(this.channels * this.numBits / 8);

+ header[33] = 0;

+ header[34] = (byte)this.numBits; // bits per sample

+ header[35] = 0;

+ header[36] = 100;

+ header[37] = 97;

+ header[38] = 116;

+ header[39] = 97;

+ header[40] = (byte) (dataLength & 0xff);

+ header[41] = (byte) ((dataLength >> 8) & 0xff);

+ header[42] = (byte) ((dataLength >> 16) & 0xff);

+ header[43] = (byte) ((dataLength >> 24) & 0xff);

+ this.out.write(header);

+ }

+

+ /**

+ * Writes PCM data to the file.

+ * @param pcmData PCM data to write. These are float values between -1 and 1.

+ * @param offset Offset in pcmData array to start writing.

+ * @param len Length in pcmData array to write.

+ * @throws IOException

+ */

+ public void write(final float[] pcmData, final int offset, final int len) throws IOException {

+ for (int i = 0; i < len; ++i) {

+ final float sample = pcmData[offset + i];

+ if (this.numBits == 8) {

+ final byte byteSample = (byte)(sample * 127.0f);

+ this.out.write(byteSample & 0xff);

+ ++this.dataLength;

+ }

+ else {

+ if (this.numBits != 16) {

+ throw new IllegalArgumentException("numBits must be 8 or 16 but found " + this.numBits);

+ }

+ final short shortSample = (short)(sample * 32767.0f);

+ this.out.write(shortSample & 0xff);

+ this.out.write((shortSample >> 8) & 0xff);

+ this.dataLength += 2L;

+ }

+ }

+ }

+

+ private String getPCMFile() {

+ return this.outputFile.getAbsolutePath() + ".pcm";

+ }

+

+ /**

+ * Closes the writer, and writes the WAV file.

+ * @throws Exception

+ */

+ @Override

+ public void close() throws Exception {

+ this.out.close();

+ final FileSystemStorage fs = FileSystemStorage.getInstance();

+ fs.rename(this.outputFile.getAbsolutePath(), new File(this.getPCMFile()).getName());

+ try {

+ this.out = fs.openOutputStream(this.outputFile.getAbsolutePath());

+ final InputStream in = fs.openInputStream(this.getPCMFile());

+ this.writeHeader();

+ Util.copy(in, this.out);

+ try {

+ this.out.close();

+ }

+ catch (Throwable t) {}

+ try {

+ in.close();

+ }

+ catch (Throwable t2) {}

+ }

+ finally {

+ fs.delete(this.getPCMFile());

+ }

+ }

+}

\ No newline at end of file
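The byte-by-byte header in `WAVWriter.writeHeader()` follows the canonical 44-byte PCM WAV layout. The sketch below builds the same layout with a little-endian `ByteBuffer`, which makes the field boundaries easier to see, and adds a float to 16-bit conversion that clamps its input. The class and method names (`WavHeaderSketch`, `pcmHeader`, `toPcm16`) are hypothetical, and the clamping is an addition that the patch itself does not perform.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper showing the canonical PCM WAV header layout.
final class WavHeaderSketch {

    /** Builds a 44-byte RIFF/WAVE header for uncompressed PCM data. */
    static byte[] pcmHeader(int sampleRate, int channels, int bitsPerSample, int dataLength) {
        int blockAlign = channels * bitsPerSample / 8; // bytes per sample frame
        int byteRate = sampleRate * blockAlign;        // bytes per second
        ByteBuffer b = ByteBuffer.allocate(44).order(ByteOrder.LITTLE_ENDIAN);
        b.put("RIFF".getBytes(StandardCharsets.US_ASCII)); // offset 0
        b.putInt(36 + dataLength);                         // offset 4: remaining file size
        b.put("WAVE".getBytes(StandardCharsets.US_ASCII)); // offset 8
        b.put("fmt ".getBytes(StandardCharsets.US_ASCII)); // offset 12
        b.putInt(16);                                      // offset 16: fmt chunk size for PCM
        b.putShort((short) 1);                             // offset 20: audio format 1 = PCM
        b.putShort((short) channels);                      // offset 22
        b.putInt(sampleRate);                              // offset 24
        b.putInt(byteRate);                                // offset 28
        b.putShort((short) blockAlign);                    // offset 32
        b.putShort((short) bitsPerSample);                 // offset 34
        b.put("data".getBytes(StandardCharsets.US_ASCII)); // offset 36
        b.putInt(dataLength);                              // offset 40: PCM payload size
        return b.array();
    }

    /** Converts floats to little-endian signed 16-bit PCM, clamping to [-1, 1]. */
    static byte[] toPcm16(float[] samples) {
        ByteBuffer b = ByteBuffer.allocate(samples.length * 2).order(ByteOrder.LITTLE_ENDIAN);
        for (float sample : samples) {
            float clamped = Math.max(-1f, Math.min(1f, sample));
            b.putShort((short) (clamped * 32767f));
        }
        return b.array();
    }

    public static void main(String[] args) {
        byte[] pcm = toPcm16(new float[]{0f, 0.5f, -0.5f});
        byte[] header = pcmHeader(44100, 1, 16, pcm.length);
        System.out.println(header.length + pcm.length);
    }
}
```

Writing the header needs the final data length, which is why `WAVWriter` stages the raw samples in a temporary `.pcm` file and only assembles the WAV file in `close()`.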

diff --git a/CodenameOne/src/com/codename1/ui/Display.java b/CodenameOne/src/com/codename1/ui/Display.java

index 8195be5d53..2ac49d1be5 100644

--- a/CodenameOne/src/com/codename1/ui/Display.java

+++ b/CodenameOne/src/com/codename1/ui/Display.java

@@ -3606,6 +3606,24 @@ public void capturePhoto(ActionListener response){

public void captureAudio(ActionListener response) {

impl.captureAudio(response);

}

+

+ /**

+ * This method tries to invoke the device native hardware to capture audio.

+ * The method returns immediately and the response will be sent asynchronously

+ * to the given ActionListener object.

+ * The audio is saved to a file on the device.

+ *

+ * Use this in actionPerformed to retrieve the file path:

+ * String path = (String) evt.getSource();

+ *

+ * @param recordingOptions Audio recording options.

+ * @param response a callback Object to retrieve the file path

+ * @throws RuntimeException if this feature failed or is unsupported on the platform

+ * @since 7.0

+ */

+ public void captureAudio(MediaRecorderBuilder recordingOptions, ActionListener response) {

+ impl.captureAudio(recordingOptions, response);

+ }

/**

* This method tries to invoke the device native camera to capture video.

diff --git a/Ports/Android/src/com/codename1/impl/android/AndroidImplementation.java b/Ports/Android/src/com/codename1/impl/android/AndroidImplementation.java

index 7952e4995f..0eeadd573e 100644

--- a/Ports/Android/src/com/codename1/impl/android/AndroidImplementation.java

+++ b/Ports/Android/src/com/codename1/impl/android/AndroidImplementation.java

@@ -27,6 +27,7 @@

import com.codename1.location.AndroidLocationManager;

import android.app.*;

import android.content.pm.PackageManager.NameNotFoundException;

+import android.media.AudioTimestamp;

import android.support.v4.content.ContextCompat;

import android.view.MotionEvent;

import com.codename1.codescan.ScanResult;

@@ -82,6 +83,8 @@

import android.graphics.Matrix;

import android.graphics.drawable.BitmapDrawable;

import android.hardware.Camera;

+import android.media.AudioFormat;

+import android.media.AudioRecord;

import android.media.ExifInterface;

import android.media.MediaPlayer;

import android.media.MediaRecorder;

@@ -3568,7 +3571,7 @@ public void run() {

@Override

public Media createMediaRecorder(MediaRecorderBuilder builder) throws IOException {

- return createMediaRecorder(builder.getPath(), builder.getMimeType(), builder.getSamplingRate(), builder.getBitRate(), builder.getAudioChannels(), 0);

+ return createMediaRecorder(builder.getPath(), builder.getMimeType(), builder.getSamplingRate(), builder.getBitRate(), builder.getAudioChannels(), 0, builder.isRedirectToAudioBuffer());

}

@Override

@@ -3579,15 +3582,16 @@ public Media createMediaRecorder(final String path, final String mimeType) throw

return createMediaRecorder(builder);

}

+

- private Media createMediaRecorder(final String path, final String mimeType, final int sampleRate, final int bitRate, final int audioChannels, final int maxDuration) throws IOException {

+ private Media createMediaRecorder(final String path, final String mimeType, final int sampleRate, final int bitRate, final int audioChannels, final int maxDuration, final boolean redirectToAudioBuffer) throws IOException {

if (getActivity() == null) {

return null;

}

if(!checkForPermission(Manifest.permission.RECORD_AUDIO, "This is required to record audio")){

return null;

}

- final AndroidRecorder[] record = new AndroidRecorder[1];

+ final Media[] record = new Media[1];

final IOException[] error = new IOException[1];

final Object lock = new Object();

@@ -3596,34 +3600,190 @@ private Media createMediaRecorder(final String path, final String mimeType, fin

@Override

public void run() {

synchronized (lock) {

- MediaRecorder recorder = new MediaRecorder();

- recorder.setAudioSource(MediaRecorder.AudioSource.MIC);

- if(mimeType.contains("amr")){

+ if (redirectToAudioBuffer) {

+ final int channelConfig =audioChannels == 1 ? android.media.AudioFormat.CHANNEL_IN_MONO

+ : audioChannels == 2 ? android.media.AudioFormat.CHANNEL_IN_STEREO

+ : android.media.AudioFormat.CHANNEL_IN_MONO;

+ final AudioRecord recorder = new AudioRecord(

+ MediaRecorder.AudioSource.MIC,

+ sampleRate,

+ channelConfig,

+ AudioFormat.ENCODING_PCM_16BIT,

+ AudioRecord.getMinBufferSize(sampleRate, channelConfig, AudioFormat.ENCODING_PCM_16BIT)

+ );

+ final com.codename1.media.AudioBuffer audioBuffer = com.codename1.media.MediaManager.getAudioBuffer(path, true, 64);

+ final boolean[] stop = new boolean[1];

+

+ record[0] = new Media() {

+ private int lastTime;

+ private volatile boolean isRecording;

+ @Override

+ public void play() {

+ isRecording = true;

+ recorder.startRecording();

+ new Thread(new Runnable() {

+ public void run() {

+ float[] audioData = new float[audioBuffer.getMaxSize()];

+ short[] buffer = new short[AudioRecord.getMinBufferSize(sampleRate, channelConfig, AudioFormat.ENCODING_PCM_16BIT)];

+ int read = -1;

+ int index = 0;

+ while (isRecording) {

+ while ((read = recorder.read(buffer, 0, buffer.length)) > 0) {

+ if (read > 0) {

+ for (int i=0; i<read; i++) {

+ audioData[index] = ((float)buffer[i]) / 0x8000;

+ index++;

+ if (index >= audioData.length) {

+ audioBuffer.copyFrom(audioData, 0, index);

+ index = 0;

+ }

+ }

+ if (index > 0) {

+ audioBuffer.copyFrom(audioData, 0, index);

+ index = 0;

+ }

+ System.out.println("Time is "+getTime());

+ } else {

+ System.out.println("read 0");

+ }

+ }

+ System.out.println("Time is "+getTime());

+ }

+ }

+

+ }).start();

+ }

+

+ @Override

+ public void pause() {

+

+ recorder.stop();

+ isRecording = false;

+ }

+

+ @Override

+ public void prepare() {

+

+ }

+

+ @Override

+ public void cleanup() {

+ pause();

+ com.codename1.media.MediaManager.deleteAudioBuffer(path);

+

+ }

+

+ @Override

+ public int getTime() {

+ if (isRecording) {