---

title: "Preliminary: working with tidyverse"

author: "Lieven Clement and Jeroen Gilis"

date: "statOmics, Ghent University (https://statomics.github.io)"

---

In this tutorial, we will learn how to import, tidy, wrangle and

visualize data. All steps will be demonstrated for one example dataset. This

tutorial is strongly based on material from the `opencasestudies` initiative, see [link](https://opencasestudies.github.io/casestudies/ocs-healthexpenditure.html).

# NHANES dataset

The National Health and Nutrition Examination Survey (NHANES)

contains data that has been collected since 1960. For this tutorial,

we will make use of the data that were collected between 2009 and 2012,

for 10,000 U.S. civilians. The dataset contains a large number of

physical, demographic, nutritional and life-style-related parameters.

However, before we can actually start working with data, we will need to

learn how to import the required datasets into our RStudio environment.

# Data Import

## Introduction to "Tidy data"

The [tidyverse](https://www.tidyverse.org) is "an opinionated

collection of R packages designed for data science. All packages

share an underlying philosophy and common APIs."

Another way of putting it is that it's a set of packages that are useful

specifically for data manipulation, exploration and visualization with a common

philosophy.

The common philosophy is called _"tidy"_ data. It is a standard way of mapping

the meaning of a dataset to its structure.

In _tidy_ data:

* Each variable forms a column.

* Each observation forms a row.

* Each type of observational unit forms a table.

```{r out.width = "95%", echo = FALSE}

knitr::include_graphics("http://r4ds.had.co.nz/images/tidy-1.png")

```

Below, we are interested in transforming the table on

the right to the table on the left, which is

considered "tidy".

```{r out.width = "95%", echo = FALSE}

knitr::include_graphics("http://r4ds.had.co.nz/images/tidy-9.png")

```

Working with tidy data is useful because it creates a structured way of

organizing data values within a data set. This makes the data analysis

process more efficient and simplifies the development of data analysis tools

that work together. In this way, you can focus on the problem you are

investigating, rather than the uninteresting logistics of data.

### The `tidyverse` R package

We can install this set of R packages using the
`install.packages("tidyverse")` function.

When we load the tidyverse package using `library(tidyverse)`,
several core R packages are attached, including:

* [readr](http://readr.tidyverse.org), for data import.

* [tidyr](http://tidyr.tidyverse.org), for data tidying.

* [dplyr](http://dplyr.tidyverse.org), for data wrangling.

* [ggplot2](http://ggplot2.tidyverse.org), for data visualisation.

* [purrr](http://purrr.tidyverse.org), for functional programming.

* [tibble](http://tibble.tidyverse.org), for tibbles, a modern re-imagining of data frames.

Here, we load in the tidyverse.

```{r, message=FALSE, warning=FALSE}

library(tidyverse)

```

These packages are highlighted in bold here:

```{r out.width = "95%", echo = FALSE}

knitr::include_graphics("https://rviews.rstudio.com/post/2017-06-09-What-is-the-tidyverse_files/tidyverse1.png")

```

Because these packages all share the "tidy" philosophy,

the data analysis workflow is easier as you move from

package to package.

Here, we will focus on the `readr`, `dplyr` and `ggplot2` R packages to import

data, to wrangle data and to visualize the data, respectively.

Next, we will give a brief description of the

features in each of these packages.

There are several base R functions that allow you to
read data into R, which you may be familiar
with, such as `read.table()`, `read.csv()`,
and `read.delim()`. Instead of using these,

we will use the functions in the

[readr](https://readr.tidyverse.org/articles/readr.html)

R package. The main reasons for this are:

1. Compared to equivalent base R functions, the
functions in `readr` are around 10x faster.

2. You can specify the column types (e.g.
character, integer, double, logical, date,
time, etc.).

3. All parsing problems are recorded in

a data frame.
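As a minimal sketch of points 2 and 3, the chunk below parses a small inline CSV. The values are made up for illustration, and passing literal text through `I()` assumes readr 2.0 or later. The column types are declared with `col_types`, and the parsing problems are retrieved afterwards with `problems()`.

```{r}
library(readr)

# Made-up inline data; the second row has a non-numeric value in `age`
toy_csv <- I("id,age\n1,34\n2,unknown\n3,51\n")

toy_read <- read_csv(
  toy_csv,
  col_types = cols(id = col_integer(), age = col_double())
)

toy_read            # the unparseable `age` value is read as NA (with a warning)
problems(toy_read)  # a data frame describing the parsing problems
```

Declaring the types up front means a malformed value shows up as a recorded problem instead of silently turning the whole column into text.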

### The `readr` R package

```{r, message=FALSE, warning=FALSE}

library(readr)

```

The main functions in `readr` are:

`readr` functions | Description |
--- | ---------------------------------------------------------------------------------------- |
`read_delim()` | reads in a flat file with a given character to separate fields |
`read_csv()` | reads in a CSV file |
`read_tsv()` | reads in a file with values separated by tabs |
`read_lines()` | reads lines from a file into a character vector (optionally only the first `n_max` lines) |
`read_file()` | reads a complete file into a string |
`write_csv()` | writes data frame to CSV |

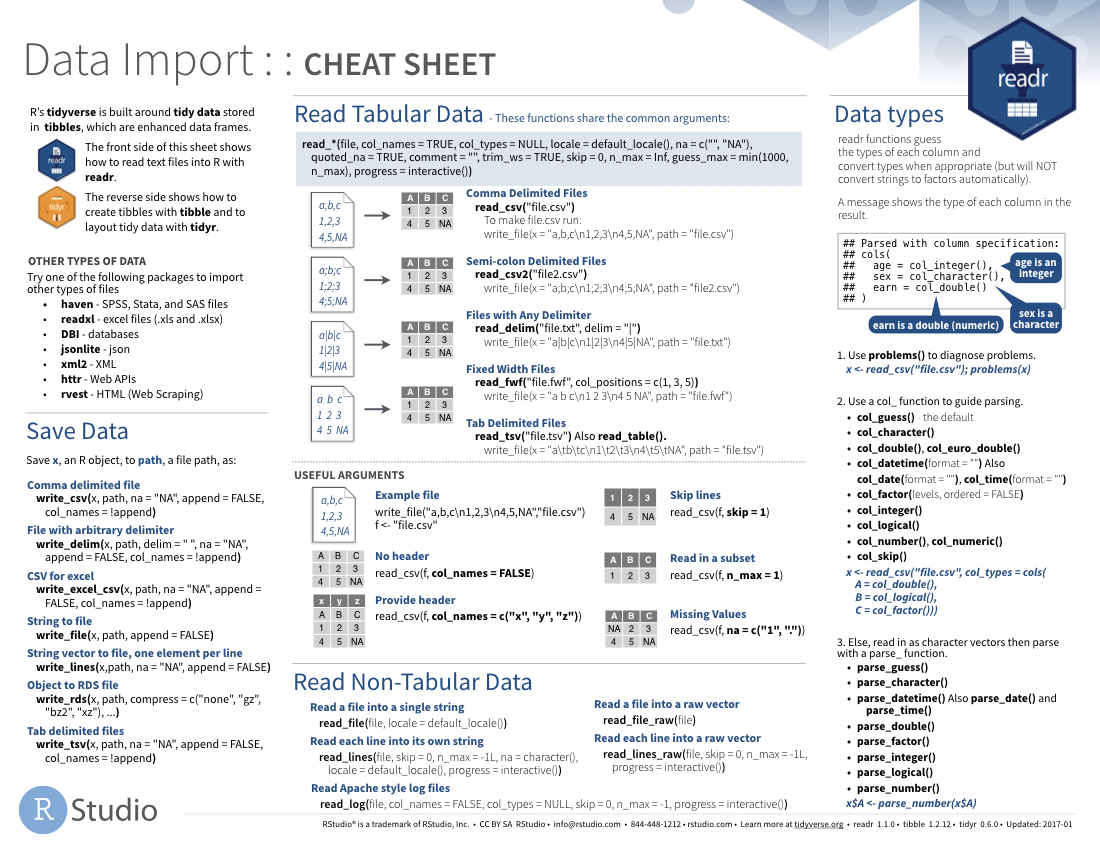

A useful cheatsheet for the functions in the
`readr` package can be found on RStudio's website.

## Read in data

Let's try reading in some data. We will begin by

reading in the `NHANES.csv` dataset.

```{r nhanesDatExpl}

NHANES <- read_csv("https://raw.githubusercontent.com/statOmics/PSLSData/main/NHANES.csv")

```

```{r}

head(NHANES)

```

```{r}

tail(NHANES)

```

```{r}

# knitr::kable(NHANES[c(1,4,5,6,7,8),c(1,3,4,7,17,20,21,25)],format = "markdown")

NHANES[c(1, 4, 5, 6, 7, 8), c(

"ID",

"Gender",

"Age",

"Race1",

"Weight",

"Height",

"BMI",

"BPSysAve"

)] # display a selected set of variables and subjects

```

## Take a `glimpse()` at your data

One last thing in this section.

One way to look at our data would be to use

`head()` or `tail()`, as we just saw.

Another one you might have heard of is the

`str()` function. One you might not have

heard of is the `glimpse()` function. It's

used for a special type of object in R called

a `tibble`. Let's read the help file to learn

more.

```{r, eval=FALSE}

?tibble::tibble

```

It's kind of like a transposed `print()`: columns run down the page
and the data run across. Let's try it out.

```{r}

glimpse(NHANES[, 1:10])

# or glimpse(NHANES) to see all the variables in the dataset

```

# Data Wrangling

A subset of the data analysis process can be thought about in the following way:

```{r out.width = "95%", echo = FALSE}

knitr::include_graphics("http://r4ds.had.co.nz/diagrams/data-science.png")

```

where each of these steps needs its own tools and software to complete.

After we import the data into R, if we are going to take advantage of

the _"tidyverse"_, this means we need to _transform_ the data into a form that

is _"tidy"_. If you recall, in _tidy_ data:

* Each variable forms a column.

* Each observation forms a row.

* Each type of observational unit forms a table.

For example, consider the following dataset:

Here:

* each row represents one company (row names are companies)

* each column represents one time point

* the stock prices are defined for each row/column pair

Alternatively, a data set can be structured in the following way:

* each row represents one time point (but no row names)

* the first column defines the time variable and the last three columns contain the stock prices for three companies

In both cases, the data is the same, but the structure is

different. This can be _frustrating_ to deal with as an

analyst because the meaning of the values (rows and columns)

in the two data sets is different. Providing a standardized

way of organizing values within a data set would alleviate

a major portion of this frustration.

For motivation, a _tidy_ version of the stock data we

looked at above looks like this: (we'll learn how the

functions work in just a moment)

In this "tidy" data set, we have three columns representing

three variables (time, company name and stock price).

Every row contains one stock price from a

particular time and for a specific company.
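The layouts described above can be sketched with a small stock table. The company names (`X`, `Y`, `Z`), dates and prices below are hypothetical, and `pivot_longer()` from `tidyr` is used here as one possible way to reshape; the online example may rely on a different function.

```{r}
library(tidyr)
library(tibble)

# Hypothetical prices: one row per time point, one column per company
stocks_wide <- tibble(
  time = as.Date("2009-01-01") + 0:1,
  X = c(10.2, 10.5),
  Y = c(20.1, 19.8),
  Z = c(5.0, 5.3)
)

# Tidy version: one row per (time, company) pair, with three variables
stocks_tidy <- stocks_wide %>%
  pivot_longer(cols = X:Z, names_to = "company", values_to = "price")

stocks_tidy
```

The wide table has 2 rows and the tidy table has 6: the same six prices, but each one now sits in its own row next to the time and company it belongs to.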

If we consider our `NHANES` dataframe, we see it is already in

a tidy format, as:

* Each variable forms a column.

* Each observation forms a row.

* Each type of observational unit forms a table.

Each row contains all of the information on

a single subject (US civilian) in the study.

After we have finished with the manipulation and visualization of
the NHANES dataset, we will additionally work with a dataset

on the effects of a certain drug, _captopril_, on the systolic

and diastolic blood pressure of patients. This will not be a _tidy_

dataset. Therefore, the details of tidying data with tidyverse will be

described below.

## The `dplyr` R package

### What is the `dplyr` R package?

[`dplyr`](http://cran.rstudio.com/web/packages/dplyr/vignettes/introduction.html)

is a powerful R-package to transform and summarize

tabular data with rows and columns.

The package contains a set of functions

(or "verbs") to perform common data manipulation

operations such as filtering for rows, selecting

specific columns, re-ordering rows, adding new

columns and summarizing data.

In addition, `dplyr` contains a useful function to

perform another common task, which is the

"split-apply-combine" concept. We will discuss

that in a little bit.

### Comparing the `dplyr` R package with base R functions

If you are familiar with R, you are probably familiar

with base R functions such as `split()`, `subset()`,

`apply()`, `sapply()`, `lapply()`, `tapply()` and

`aggregate()`. Compared to base functions in R, the

functions in `dplyr` are easier to work with, are

more consistent in the syntax and are targeted for

data analysis around data frames instead of just vectors.

The important `dplyr` verbs to remember are:

`dplyr` verbs | Description |
--- | ------------------------------------------------------------------------------------ |
`select()` | select columns |
`filter()` | filter rows |
`arrange()` | re-order or arrange rows |
`mutate()` | create new columns |
`summarize()` | summarize values |
`group_by()` | allows for group operations in the "split-apply-combine" concept |

### Pipe operator: %>%

Before we go any further, let's introduce the

pipe operator: `%>%`. In the `stocks` example,

we briefly saw this symbol. It is called the

pipe operator. `dplyr` imports

this operator from another package

(`magrittr`)

[see help file here](http://cran.r-project.org/web/packages/magrittr/vignettes/magrittr.html).

This operator allows you to pipe the output

from one function to the input of another

function. Instead of nesting functions

(reading from the inside to the

outside), the idea of piping is to

read the functions from left to right.

In the online `stocks` example, we can pipe the

`stocks` data frame to the function that will

gather multiple columns into key-value pairs.
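To make the difference between nesting and piping concrete, here is a minimal sketch on a made-up numeric vector (the object names are only for illustration). Both versions compute the same value; only the reading order differs.

```{r}
library(dplyr)

toy_values <- c(1, 4, 4)

# Nested calls: read from the inside out
nested_result <- sqrt(sum(toy_values))

# Piped calls: read from left to right
piped_result <- toy_values %>%
  sum() %>%
  sqrt()

identical(nested_result, piped_result)
```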

### Combining and splitting columns with `unite()` and `separate()`

First, let's unite the `ID` and `SurveyYr` columns

in the `NHANES` dataset to a single column `United`.

To do this, we will use the `unite()`

function in the `tidyr` package.

Note that:

* `unite()` = unite multiple columns into one

* `separate()` = separate one column into multiple columns

```{r}

library(tidyverse)

NHANES <- NHANES %>%

unite(United,

ID:SurveyYr,

sep = "-"

)

head(NHANES)

```

Let us walk through the snippet of code from above.

First, we need to specify the name of the dataset that we want

to work with. Next, we use the pipe command to pipe the output

from the first function (here this is simply taking the NHANES dataset)

to the input of another function (in this case, the _unite_ function).

Finally, we apply the unite function. In this example,

the unite function takes three arguments:

1. The name of the new column, as a string or symbol

2. A selection of columns we want to unite

3. The separator to use between values

The behaviour of the unite function is as expected; the initial variables

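`ID` and `SurveyYr` are now united into a new variable `United`.

The `separate()` function performs the opposite operation of the
`unite()` function, as shown below. Note that the second column is
named `SurveyYear` here, so the original column name `SurveyYr` is not restored: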

```{r}

NHANES <- NHANES %>%

separate(United,

sep = "-",

into = c("ID", "SurveyYear")

)

head(NHANES)

```
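The round trip can also be checked on a small made-up table, which makes it easier to see exactly what happens to the values. This is only a sketch: the toy data are hypothetical, and `convert = TRUE` is an optional argument of `separate()` that is not used in the NHANES code above. Without it, both new columns come back as character strings.

```{r}
library(tidyr)
library(tibble)

toy_survey <- tibble(
  ID = c(1, 2),
  SurveyYr = c("2009_10", "2011_12"),
  Age = c(34, 51)
)

# Paste ID and SurveyYr together, separated by "-"
toy_united <- toy_survey %>%
  unite(United, ID:SurveyYr, sep = "-")
toy_united$United

# Split again; convert = TRUE turns the ID strings back into integers
toy_back <- toy_united %>%
  separate(United, into = c("ID", "SurveyYr"), sep = "-", convert = TRUE)
toy_back
```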

### Select columns using `select()`

The two most basic functions are `select()` and

`filter()`, which select columns and filter
rows, respectively.

To select a range
of columns by name, use the ":" (colon) operator:

```{r warning=FALSE,message=FALSE}

NHANES %>%

select(Gender:Age)

```

To select all columns that start with the

character string "t", use the function `starts_with()`:

```{r warning=FALSE,message=FALSE}

NHANES %>%

select(starts_with("t"))

```

Some additional options to select columns based

on specific criteria include:

1. `ends_with()` = Select columns that end with

a character string

2. `contains()` = Select columns that contain

a character string

3. `matches()` = Select columns that match a

regular expression

4. `one_of()` = Select columns whose names are in a given group of names
(superseded by `any_of()` and `all_of()` in recent versions)
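The helpers listed above can be tried out on a one-row table. The column names below are chosen to resemble NHANES variables, but the table itself is made up for this sketch. `any_of()` is used in place of `one_of()` on the assumption that a recent version of `dplyr` is installed.

```{r}
library(dplyr)

toy_cols <- tibble(
  TotChol = 4.5, Testosterone = 300,
  BPSysAve = 120, BPDiaAve = 80, Age = 40
)

sel_start <- names(select(toy_cols, starts_with("t")))       # case-insensitive by default
sel_end   <- names(select(toy_cols, ends_with("Ave")))
sel_has   <- names(select(toy_cols, contains("Sys")))
sel_regex <- names(select(toy_cols, matches("^BP.*Ave$")))   # regular expression
sel_any   <- names(select(toy_cols, any_of(c("Age", "NotAColumn"))))  # missing names are ignored

list(sel_start, sel_end, sel_has, sel_regex, sel_any)
```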

### Select rows using `filter()`

Let's say we want to investigate the weight of subjects who

1. are male,

2. are older than 18 years, and

3. are taller than 120 cm.

```{r warning=FALSE,message=FALSE}

NHANES %>%
  select(c("Gender", "Age", "Weight", "Height")) %>% ## select four columns
  filter(
    Gender == "male",
    Age > 18,
    Height > 120
  ) ## filter observations (rows) based on the required values

```
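Two details of `filter()` are easy to miss: conditions separated by commas are combined with a logical AND, and rows for which a condition evaluates to `NA` are dropped. The sketch below illustrates both on a made-up table of four subjects.

```{r}
library(dplyr)

toy_people <- tibble(
  Gender = c("male", "male", "female", "male"),
  Age = c(25, 16, 40, 30),
  Height = c(180, 170, 165, NA)
)

# Comma-separated conditions are combined with a logical AND
kept_comma <- toy_people %>%
  filter(Gender == "male", Age > 18, Height > 120)

# Equivalent, with an explicit `&`
kept_and <- toy_people %>%
  filter(Gender == "male" & Age > 18 & Height > 120)

kept_comma  # only the first subject; the fourth is dropped because Height is NA
```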

### Arrange or re-order rows using `arrange()`

Now, let's say we want to see the NHANES data ordered from

lowest to highest `Age`. To arrange (or re-order) rows by

a particular column you'll use the `arrange()` function:

```{r warning=FALSE,message=FALSE}

NHANES %>%

arrange(Age)

```

With this suite of _dplyr_ functions, in combination with
base R logical expressions, we can easily select all of the
required data to assess specific, detailed research questions.

(*) Example: within the `Race1` category of Hispanics, select
women who are not married and display the first five
by descending height.

**Hint**: use the `desc()` function inside of

`arrange()` to order rows in a descending order.

```{r warning=FALSE,message=FALSE}

NHANES %>%

select(c("Race1", "Gender", "MaritalStatus", "Height")) %>%

filter(MaritalStatus != "Married", Race1 == "Hispanic", Gender == "female") %>%

arrange(desc(Height)) %>%

head(n = 5)

```
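`arrange()` also accepts several sorting keys, which are applied from left to right to break ties. As a small sketch on made-up data, the chunk below orders subjects by ascending age and, within the same age, by descending height.

```{r}
library(dplyr)

toy_order <- tibble(
  Name = c("a", "b", "c", "d"),
  Age = c(30, 25, 30, 25),
  Height = c(170, 160, 182, 175)
)

toy_sorted <- toy_order %>%
  arrange(Age, desc(Height))

toy_sorted$Name
```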

### Add columns using `mutate()`

Let's say we don't trust the BMI values in our dataset

and we decide to calculate them ourselves. BMI is typically

calculated by taking a person's weight (in kg) and dividing
it by their height (in m) squared. Since `Height` is recorded in cm
in NHANES, we divide it by 100 first.

We will now construct a new column, BMI_self, by adopting

this formula. To create new columns, we will use

the `mutate()` function in `dplyr`.

```{r}

NHANES %>%

select(c("Weight", "Height", "BMI")) %>%

  mutate(BMI_self = Weight / (Height / 100)^2)

```
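As a quick sanity check of the formula, consider two hypothetical subjects. Someone weighing 70 kg with a height of 175 cm should get a BMI of 70 / 1.75^2, which is roughly 22.9.

```{r}
library(dplyr)

toy_body <- tibble(Weight = c(70, 90), Height = c(175, 180))

toy_bmi <- toy_body %>%
  mutate(BMI_self = Weight / (Height / 100)^2)

toy_bmi
```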

### Create summaries of columns using `summarise()`

The `summarise()` function in `dplyr`

will create summary statistics for a given

column in the data frame, such as finding

the maximum, minimum or average.

For example, to compute the average weight

of the civilians, we can apply the `mean()` function

to the column `Weight` and call the

summary value `avg_Weight`.

```{r}

NHANES %>%

summarise(avg_Weight = mean(Weight, na.rm = TRUE))

## the additional argument na.rm = TRUE makes sure to remove missing

## values for the purpose of calculating the mean

```

There are many other summary statistics you
could consider, such as `sd()`, `min()`, `median()`,
`max()`, `sum()`, `n()` (returns the number of rows in the current group),
`first()` (returns first value in vector),
`last()` (returns last value in vector) and
`n_distinct()` (number of distinct values in vector).

We will elaborate on these functions later.
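Several of these statistics can be computed in a single `summarise()` call. The sketch below uses five made-up weights, one of which is missing, to show how the counting functions and `na.rm = TRUE` interact.

```{r}
library(dplyr)

toy_weights <- tibble(Weight = c(60, 72, NA, 85, 72))

toy_stats <- toy_weights %>%
  summarise(
    n_rows = n(),                                 # counts rows, including the NA
    n_unique = n_distinct(Weight, na.rm = TRUE),  # distinct non-missing values
    min_w = min(Weight, na.rm = TRUE),
    median_w = median(Weight, na.rm = TRUE),
    max_w = max(Weight, na.rm = TRUE),
    first_w = first(Weight)
  )

toy_stats
```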

Note that choosing the most informative summary statistic

is very important! This can be shown with the following example:

```{r, echo=FALSE}

knitr::include_graphics("figures/Figure_partners.png")

```

In this study, men and women were asked about their
"ideal number of partners desired over 30 years".
While almost all subjects desired a number between 0 and 50,
three male subjects selected a number above 100.
These three _outliers_ in the data can have a large impact on
the data analysis, especially when we work with summary
statistics that are sensitive to these outliers.

When we look at the _mean_, for instance, we see that on average
women desire 2.8 partners, while men desire 64.3 partners on average,
suggesting a large discrepancy between male and female desires.
However, if we look at a more _robust_ summary statistic such as
the _median_, we see that the result is 1 for both men and women.
It is clear that the mean value was completely distorted by the
three outliers in the data.

Another example of a more robust summary statistic is

the _geometric mean_.
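The effect of an outlier on these three statistics can be illustrated with a handful of made-up values (these are not the data from the study above). The geometric mean is computed here as the exponential of the mean of the logs, which requires strictly positive values.

```{r}
toy_outlier <- c(1, 1, 2, 2, 4, 1000) # made-up values with one extreme outlier

arith_mean <- mean(toy_outlier)          # pulled far upwards by the outlier
med <- median(toy_outlier)               # unaffected by the size of the outlier
geo_mean <- exp(mean(log(toy_outlier)))  # geometric mean, much less affected

c(mean = arith_mean, median = med, geometric_mean = geo_mean)
```

Replacing 1000 by 10 would change the arithmetic mean drastically, the geometric mean moderately, and the median not at all.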

### Group operations using `group_by()`

The `group_by()` verb is an incredibly powerful function in `dplyr`. It allows

us, for example, to calculate summary statistics for different groups of

observations.

If we take our example from above, let's say we want to split the data frame by

some variable (e.g. `Race1`), apply a function to the individual data frames

(`mean`) and then combine the output back into a summary data frame.

Let's see how that would look:

```{r}

NHANES %>%

group_by(Race1) %>%

summarise(avg_Weight = mean(Weight, na.rm = TRUE))

```
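To see the split-apply-combine steps on something small enough to check by hand, here is a sketch with a made-up table of two groups (the group labels `A` and `B` are hypothetical). Each group yields one row in the result, and several summaries can be computed at once.

```{r}
library(dplyr)

toy_groups <- tibble(
  Race1 = c("A", "A", "B", "B", "B"),
  Weight = c(60, 80, 70, NA, 90)
)

toy_by_group <- toy_groups %>%
  group_by(Race1) %>%                           # split
  summarise(
    n_subjects = n(),                           # apply: group size
    avg_Weight = mean(Weight, na.rm = TRUE)     # apply: group mean
  )                                             # combine: one row per group

toy_by_group
```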

Now that we have all the required functions for importing, tidying and wrangling

data in place, we will learn how to visualize our data with the ggplot2 package.

# Data Visualization

As you might have already seen, there are many functions

available in base R that can create plots (e.g. `plot()`, `boxplot()`).

Others include: `hist()`, `qqplot()`, etc.

These functions are great because they come with a basic installation

of R and can be quite powerful when you need a quick visualization

of something when you are exploring data.

We are choosing to introduce `ggplot2` because, in our

opinion, it's one of the simplest ways for beginners to

create relatively complicated plots that are intuitive

and aesthetically pleasing.

## The `ggplot2` R package

The reason [`ggplot2`](http://ggplot2.tidyverse.org)
is generally intuitive for beginners is its use of the
[grammar of graphics](http://vita.had.co.nz/papers/layered-grammar.html),
the `gg` in `ggplot2`. The idea is that you can construct

many sentences by learning just a few nouns, adjectives,

and verbs. There are specific "words" that we will need to

learn and once we do, you will be able to create

(or "write") hundreds of different plots.

The critical part to making graphics using `ggplot2` is the

data needs to be in a _tidy_ format. Given that we have

just spent the previous sections learning about how to

work with _tidy_ data, we are primed to take

advantage of all that `ggplot2` has to offer!

We will show how it's easy to pipe _tidy_ data

(output) as input to other functions that create

plots. This all works because we are working

within the _tidyverse_.

## `ggplot2` cheatsheet

The ggplot2 cheat sheet is a very handy list for looking up ggplot2 functions,

see [cheatsheet](https://github.com/rstudio/cheatsheets/raw/master/data-visualization.pdf)

## What is the `ggplot()` function?

As explained by Hadley Wickham:

> the grammar tells us that a statistical graphic is a mapping from data to aesthetic attributes (colour, shape, size) of geometric objects (points, lines, bars). The plot may also contain statistical transformations of the data and is drawn on a specific coordinates system.

#### `ggplot2` Terminology

* **ggplot** - the main function where you specify the data set and variables to plot (this is where we define the `x` and `y` variable names)
* **geoms** - geometric objects
    * e.g. `geom_point()`, `geom_bar()`, `geom_line()`, `geom_histogram()`
* **aes** - aesthetics
    * shape, transparency, color, fill, linetype
* **scales** - define how your data will be plotted
    * continuous, discrete, log, etc.

There are three ways to initialize a `ggplot()` object.

An empty ggplot object

```{r}

library(ggplot2)

p <- ggplot()

```

A ggplot object associated with a dataset

```{r}

p <- NHANES %>%

filter(Gender == "male") %>%

ggplot()

```

or a ggplot object with a dataset and `x` and `y` defined

```{r}

p <- NHANES %>%

filter(Gender == "male") %>%

ggplot(aes(x = Height, y = Weight))

```

The function `aes()` is an aesthetic mapping

function inside the `ggplot()` object. We

use this function to specify plot attributes

(e.g. `x` and `y` variable names) that

will not change as we add more layers.

```{r, eval = FALSE}

p

```

Now we have initialized a ggplot object on a subset of

the NHANES dataset. But the plot is still empty! To actually

show the data, we will need an additional command, specifying

which visualization type we want to use. Importantly,

different types of visualizations will provide us with

different types of information! This we will discuss in

more detail in the following tutorial. For now, we will

demonstrate how to generate a scatterplot.

## Create scatter plots using `geom_point()`

Anything that goes in the `ggplot()` object becomes

a global setting. From there, we use the `geom`

objects to add more layers to the base `ggplot()`

object. These will define what we are interested in

illustrating using the data.

For the NHANES dataset, we can for instance look

at the relationship between a person's height and weight

values.

```{r}

p <- NHANES %>%

filter(Gender == "male", Age >= 18) %>%

ggplot(aes(x = Height, y = Weight)) +

geom_point() +

xlab("Height (cm)") +

ylab("Weight (kg)")

p

```

We used the `xlab()` and `ylab()` functions

in `ggplot2` to specify the x-axis and y-axis

labels. Note that NA values were automatically
removed by the `geom_point()` function.

ggplot2 also has a very broad panel of aesthetic features

for improving your plot. One very basic feature is that we

can give colors to the ggplot object. For instance, we can

give different colors to the dots in the previous

scatterplot based on a subject's gender.

```{r}

NHANES %>%

ggplot(aes(x = Height, y = Weight, color = Gender)) +

geom_point() +

xlab("Height (cm)") +

ylab("Weight (kg)")

```
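The same layered construction works on any tidy data frame. As a self-contained sketch, the chunk below builds a coloured scatter plot from four made-up subjects, so it can be run without downloading NHANES.

```{r}
library(ggplot2)

# Hypothetical measurements for four subjects
toy_subjects <- data.frame(
  Height = c(160, 172, 181, 168),
  Weight = c(55, 70, 88, 63),
  Gender = c("female", "male", "male", "female")
)

# Global settings (data, aesthetic mappings) go in ggplot();
# each `+` adds a layer or a label on top
toy_plot <- ggplot(toy_subjects, aes(x = Height, y = Weight, color = Gender)) +
  geom_point() +
  xlab("Height (cm)") +
  ylab("Weight (kg)")

toy_plot
```

Because `color = Gender` is set inside `aes()`, the colour is mapped from the data and a legend is added automatically. Setting a fixed colour for all points would instead be done outside `aes()`, for example with `geom_point(color = "blue")`.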

This concludes the preliminary file. To see all of

these tidyverse and ggplot functionalities in action,

we will explore the NHANES dataset more thoroughly

in the first tutorial.

# Reference

https://opencasestudies.github.io/casestudies/ocs-healthexpenditure.html