From b52c2ea5d2da19367b3f18a04bb909f809add882 Mon Sep 17 00:00:00 2001

From: lugimzzz <63761690+lugimzzz@users.noreply.github.com>

Date: Mon, 31 Oct 2022 20:56:46 +0800

Subject: [PATCH] add soft link from examples to application (#3587)

---

examples/text_classification/README.md | 14 +-

.../text_classification/multi_label/README.md | 138 ----

.../text_classification/multi_label/data.py | 118 ---

.../multi_label/deploy/python/predict.py | 139 ----

.../multi_label/export_model.py | 57 --

.../text_classification/multi_label/metric.py | 74 --

.../text_classification/multi_label/model.py | 40 -

.../multi_label/predict.py | 112 ---

.../text_classification/multi_label/train.py | 179 ----

.../text_classification/pretrained_models | 1 +

.../pretrained_models/README.md | 256 ------

.../deploy/cpp/CMakeLists.txt | 227 ------

.../pretrained_models/deploy/cpp/compile.sh | 36 -

.../pretrained_models/deploy/cpp/run.sh | 11 -

.../deploy/cpp/seq_cls_infer.cc | 103 ---

.../deploy/cpp/tokenizer/CMakeLists.txt | 3 -

.../deploy/cpp/tokenizer/tokenizer.cc | 763 ------------------

.../deploy/cpp/tokenizer/tokenizer.h | 150 ----

.../deploy/python/predict.py | 182 -----

.../deploy/serving/client.py | 106 ---

.../deploy/serving/export_servable_model.py | 47 --

.../pretrained_models/export_model.py | 51 --

.../pretrained_models/predict.py | 112 ---

.../pretrained_models/train.py | 224 -----

.../pretrained_models/utils.py | 63 --

25 files changed, 8 insertions(+), 3198 deletions(-)

delete mode 100644 examples/text_classification/multi_label/README.md

delete mode 100644 examples/text_classification/multi_label/data.py

delete mode 100644 examples/text_classification/multi_label/deploy/python/predict.py

delete mode 100644 examples/text_classification/multi_label/export_model.py

delete mode 100644 examples/text_classification/multi_label/metric.py

delete mode 100644 examples/text_classification/multi_label/model.py

delete mode 100644 examples/text_classification/multi_label/predict.py

delete mode 100644 examples/text_classification/multi_label/train.py

create mode 120000 examples/text_classification/pretrained_models

delete mode 100644 examples/text_classification/pretrained_models/README.md

delete mode 100644 examples/text_classification/pretrained_models/deploy/cpp/CMakeLists.txt

delete mode 100755 examples/text_classification/pretrained_models/deploy/cpp/compile.sh

delete mode 100644 examples/text_classification/pretrained_models/deploy/cpp/run.sh

delete mode 100644 examples/text_classification/pretrained_models/deploy/cpp/seq_cls_infer.cc

delete mode 100644 examples/text_classification/pretrained_models/deploy/cpp/tokenizer/CMakeLists.txt

delete mode 100644 examples/text_classification/pretrained_models/deploy/cpp/tokenizer/tokenizer.cc

delete mode 100644 examples/text_classification/pretrained_models/deploy/cpp/tokenizer/tokenizer.h

delete mode 100644 examples/text_classification/pretrained_models/deploy/python/predict.py

delete mode 100644 examples/text_classification/pretrained_models/deploy/serving/client.py

delete mode 100644 examples/text_classification/pretrained_models/deploy/serving/export_servable_model.py

delete mode 100644 examples/text_classification/pretrained_models/export_model.py

delete mode 100644 examples/text_classification/pretrained_models/predict.py

delete mode 100644 examples/text_classification/pretrained_models/train.py

delete mode 100644 examples/text_classification/pretrained_models/utils.py

diff --git a/examples/text_classification/README.md b/examples/text_classification/README.md

index b002bdaff6d5..a15fcb511b50 100644

--- a/examples/text_classification/README.md

+++ b/examples/text_classification/README.md

@@ -1,15 +1,15 @@

# 文本分类

-提供了多个文本分类任务示例,基于传统序列模型的二分类,基于预训练模型的二分类和基于预训练模型的多标签文本分类。

+提供了多个文本分类任务示例,基于基于ERNIE 3.0预训练模型、传统序列模型、基于ERNIE-Doc超长文本预训练模型的文本分类。

-## RNN Models

+## Pretrained Model (PTMs)

-[Recurrent Neural Networks](./rnn) 展示了如何使用传统序列模型RNN、LSTM、GRU等网络完成文本分类任务。

+[Pretrained Models](./pretrained_models) 展示了如何使用以ERNIE 3.0 为代表的预模型,在多分类、多标签、层次分类场景下,基于预训练模型微调、提示学习(小样本)、语义索引等三种不同方案进行文本分类。预训练模型文本分类打通数据标注-模型训练-模型调优-模型压缩-预测部署全流程,旨在解决细分场景应用的痛点和难点,快速实现文本分类产品落地。

-## Pretrained Model (PTMs)

+## RNN Models

-[Pretrained Models](./pretrained_models) 展示了如何使用以ERNIE为代表的模型Fine-tune完成文本分类任务。

+[Recurrent Neural Networks](./rnn) 展示了如何使用传统序列模型RNN、LSTM、GRU等网络完成文本分类任务。

-## Multi-label Text Classification

+## ERNIE-Doc Text Classification

-[Multi-label Text Classification](./multi_label) 展示了如何使用以Bert为代表的预训练模型完成多标签文本分类任务。

+[ERNIE-Doc Text Classification](./ernie-doc) 展示了如何使用预训练模型ERNIE-Doc完成**超长文本**分类任务。

diff --git a/examples/text_classification/multi_label/README.md b/examples/text_classification/multi_label/README.md

deleted file mode 100644

index d34a7477eab9..000000000000

--- a/examples/text_classification/multi_label/README.md

+++ /dev/null

@@ -1,138 +0,0 @@

-# 多标签文本分类任务

-

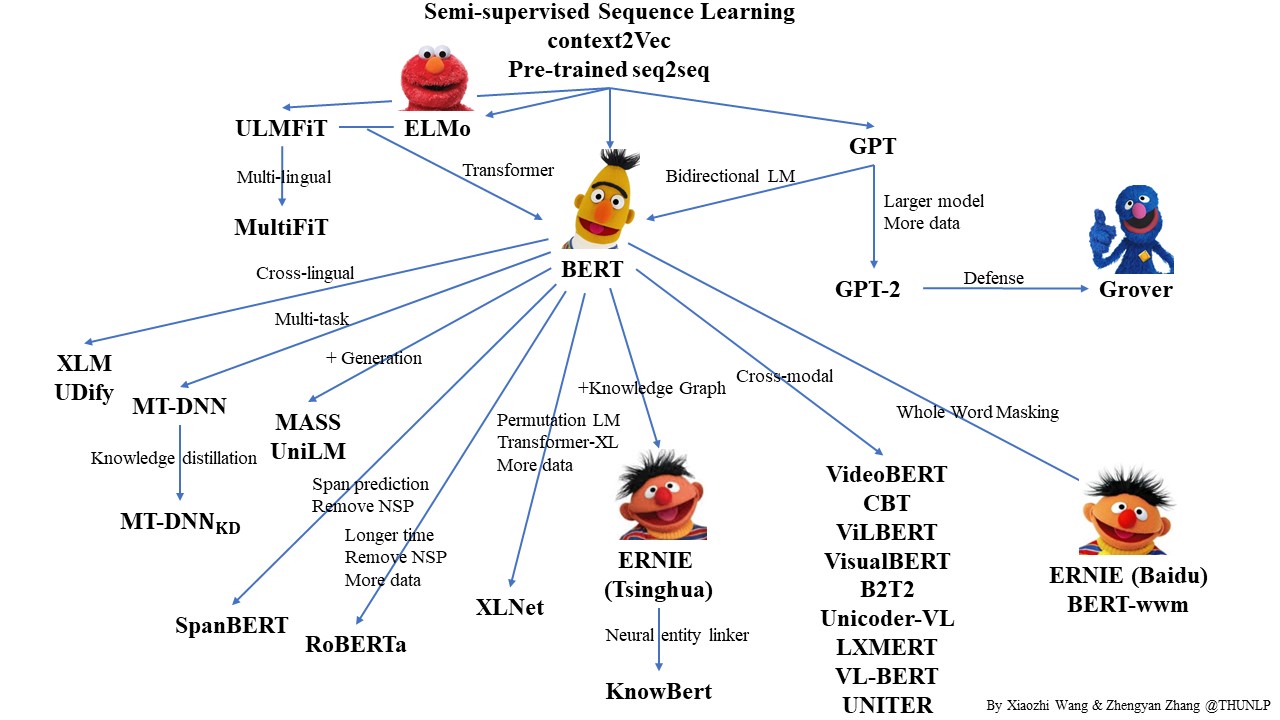

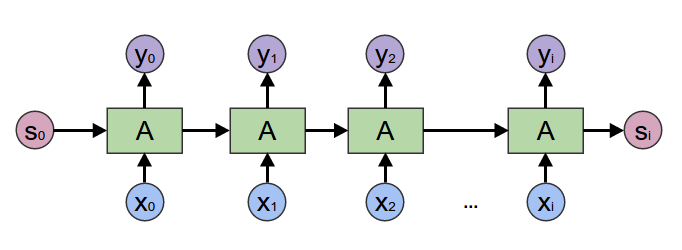

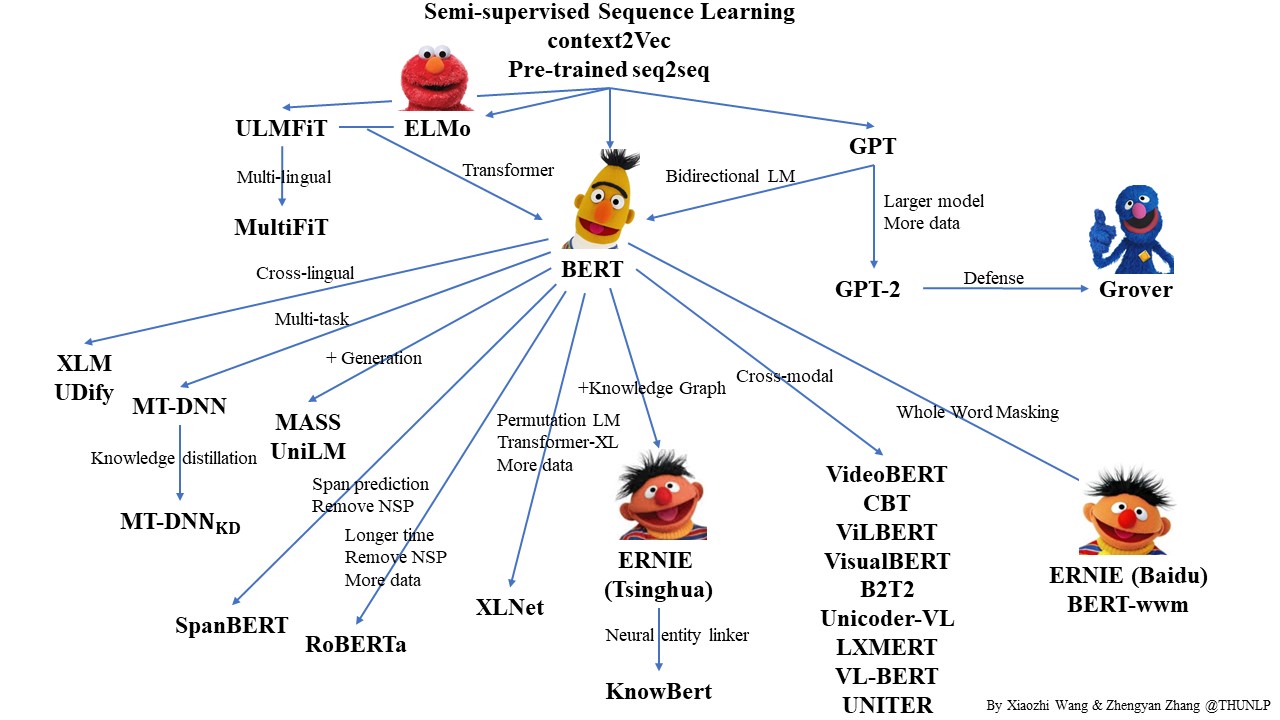

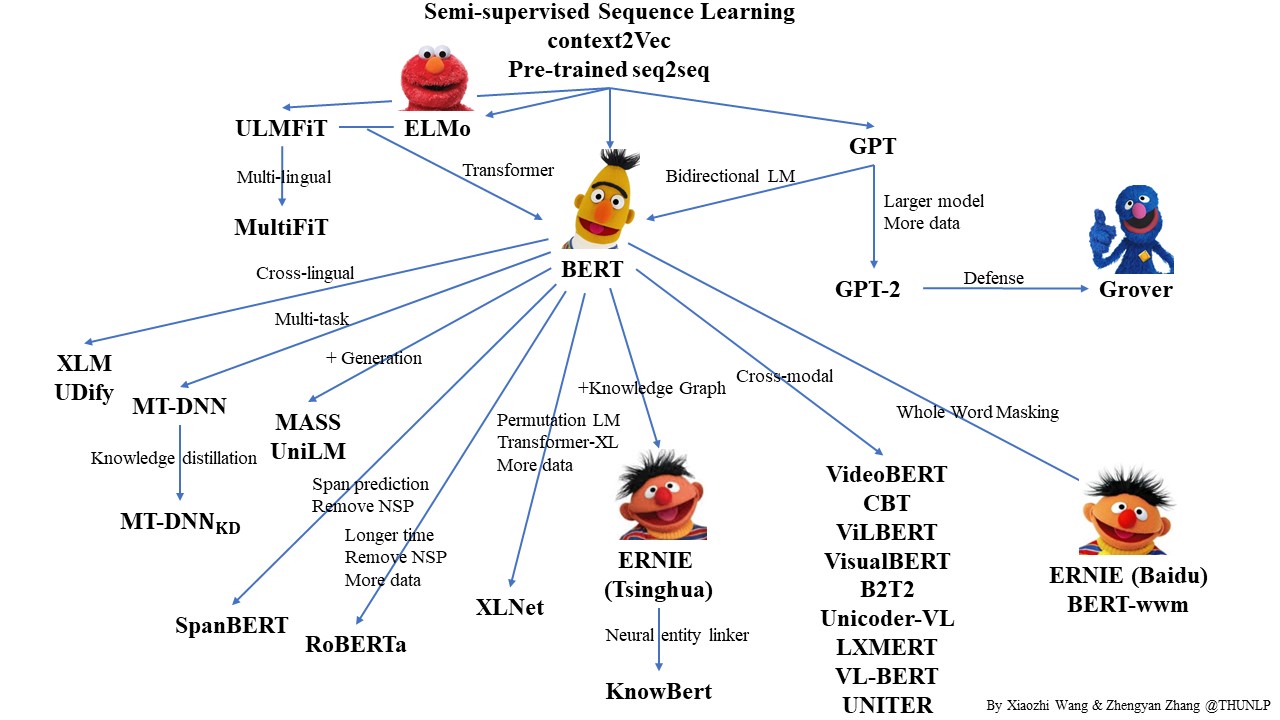

-近年来随着深度学习的发展,模型参数的数量飞速增长。为了训练这些参数,需要更大的数据集来避免过拟合。然而,对于大部分NLP任务来说,构建大规模的标注数据集非常困难(成本过高),特别是对于句法和语义相关的任务。相比之下,大规模的未标注语料库的构建则相对容易。为了利用这些数据,我们可以先从其中学习到一个好的表示,再将这些表示应用到其他任务中。最近的研究表明,基于大规模未标注语料库的预训练模型(Pretrained Models, PTM) 在NLP任务上取得了很好的表现。

-

-大量的研究表明基于大型语料库的预训练模型(Pretrained Models, PTM)可以学习通用的语言表示,有利于下游NLP任务,同时能够避免从零开始训练模型。随着计算能力的发展,深度模型的出现(即 Transformer)和训练技巧的增强使得 PTM 不断发展,由浅变深。

-

-

-

-

-

-本图片来自于:https://github.com/thunlp/PLMpapers

-

-本示例展示了如何以BERT([Bidirectional Encoder Representations from Transformers](https://arxiv.org/abs/1810.04805))预训练模型Finetune完成多标签文本分类任务。

-

-## 快速开始

-

-### 代码结构说明

-

-以下是本项目主要代码结构及说明:

-

-```text

-pretrained_models/

-├── deploy # 部署

-│ └── python

-│ └── predict.py # python预测部署示例

-├── export_model.py # 动态图参数导出静态图参数脚本

-├── predict.py # 预测脚本

-├── README.md # 使用说明

-├── data.py # 数据处理

-├── metric.py # 指标计算

-├── model.py # 模型网络

-└── train.py # 训练评估脚本

-```

-

-### 数据准备

-

-从Kaggle下载[Toxic Comment Classification Challenge](https://www.kaggle.com/c/jigsaw-toxic-comment-classification-challenge/data)数据集并将数据集文件放在`./data`路径下。

-以下是`./data`路径的文件组成:

-

-```text

-data/

-├── sample_submission.csv # 预测结果提交样例

-├── train.csv # 训练集

-├── test.csv # 测试集

-└── test_labels.csv # 测试数据标签,数值-1代表该条数据不参与打分

-```

-

-### 模型训练

-

-我们以Kaggle Toxic Comment Classification Challenge为示例数据集,可以运行下面的命令,在训练集(train.tsv)上进行模型训练

-```shell

-unset CUDA_VISIBLE_DEVICES

-python -m paddle.distributed.launch --gpus "0" train.py --device gpu --save_dir ./checkpoints

-```

-

-可支持配置的参数:

-

-* `save_dir`:可选,保存训练模型的目录;默认保存在当前目录checkpoints文件夹下。

-* `max_seq_length`:可选,BERT模型使用的最大序列长度,最大不能超过512, 若出现显存不足,请适当调低这一参数;默认为128。

-* `batch_size`:可选,批处理大小,请结合显存情况进行调整,若出现显存不足,请适当调低这一参数;默认为32。

-* `learning_rate`:可选,Fine-tune的最大学习率;默认为5e-5。

-* `weight_decay`:可选,控制正则项力度的参数,用于防止过拟合,默认为0.0。

-* `epochs`: 训练轮次,默认为3。

-* `warmup_proption`:可选,学习率warmup策略的比例,如果0.1,则学习率会在前10%训练step的过程中从0慢慢增长到learning_rate, 而后再缓慢衰减,默认为0.0。

-* `init_from_ckpt`:可选,模型参数路径,热启动模型训练;默认为None。

-* `seed`:可选,随机种子,默认为1000。

-* `device`: 选用什么设备进行训练,可选cpu或gpu。如使用gpu训练则参数gpus指定GPU卡号。

-* `data_path`: 可选,数据集文件路径,默认数据集存放在当前目录data文件夹下。

-

-代码示例中使用的预训练模型是BERT,如果想要使用其他预训练模型如ERNIE等,只需要更换`model`和`tokenizer`即可。

-

-程序运行时将会自动进行训练,评估。同时训练过程中会自动保存模型在指定的`save_dir`中。

-如:

-```text

-checkpoints/

-├── model_100

-│ ├── model_state.pdparams

-│ ├── tokenizer_config.json

-│ └── vocab.txt

-└── ...

-```

-

-**NOTE:**

-* 如需恢复模型训练,则可以设置`init_from_ckpt`,如`init_from_ckpt=checkpoints/model_100/model_state.pdparams`。

-* 使用动态图训练结束之后,还可以将动态图参数导出成静态图参数,具体代码见export_model.py。静态图参数保存在`output_path`指定路径中。

- 运行方式:

-

-```shell

-python export_model.py --params_path=./checkpoints/model_1000/model_state.pdparams --output_path=./static_graph_params

-```

-其中`params_path`是指动态图训练保存的参数路径,`output_path`是指静态图参数导出路径。

-

-导出模型之后,可以用于部署,deploy/python/predict.py文件提供了python部署预测示例。

-

-

-**NOTE:**

-* 可通过`threshold`参数调整最终预测结果,当预测概率值大于`threshold`时预测结果为1,否则为0;默认为0.5。

-运行方式:

-

-```shell

-python deploy/python/predict.py --model_file=static_graph_params.pdmodel --params_file=static_graph_params.pdiparams

-```

-

-待预测数据如以下示例:

-

-```text

-Your bullshit is not welcome here.

-Thank you for understanding. I think very highly of you and would not revert without discussion.

-```

-

-预测结果示例:

-

-```text

-Data: Your bullshit is not welcome here.

-toxic: 1

-severe_toxic: 0

-obscene: 0

-threat: 0

-insult: 0

-identity_hate: 0

-Data: Thank you for understanding. I think very highly of you and would not revert without discussion.

-toxic: 0

-severe_toxic: 0

-obscene: 0

-threat: 0

-insult: 0

-identity_hate: 0

-```

-

-### 模型预测

-

-启动预测:

-```shell

-export CUDA_VISIBLE_DEVICES=0

-python predict.py --device 'gpu' --params_path checkpoints/model_1000/model_state.pdparams

-```

-

-预测结果会以csv文件`sample_test.csv`保存在当前目录下。

diff --git a/examples/text_classification/multi_label/data.py b/examples/text_classification/multi_label/data.py

deleted file mode 100644

index eb5152774334..000000000000

--- a/examples/text_classification/multi_label/data.py

+++ /dev/null

@@ -1,118 +0,0 @@

-# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

-#

-# Licensed under the Apache License, Version 2.0 (the "License");

-# you may not use this file except in compliance with the License.

-# You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-

-import re

-import numpy as np

-import pandas as pd

-import paddle

-

-

-def convert_example(example, tokenizer, max_seq_length=512, is_test=False):

- """

- Builds model inputs from a sequence or a pair of sequence for sequence classification tasks

- by concatenating and adding special tokens. And creates a mask from the two sequences passed

- to be used in a sequence-pair classification task.

-

- A BERT sequence has the following format:

-

- - single sequence: ``[CLS] X [SEP]``

- - pair of sequences: ``[CLS] A [SEP] B [SEP]``

-

- A BERT sequence pair mask has the following format:

- ::

- 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1

- | first sequence | second sequence |

-

- If only one sequence, only returns the first portion of the mask (0's).

-

-

- Args:

- example(obj:`list[str]`): List of input data, containing text and label if it have label.

- tokenizer(obj:`PretrainedTokenizer`): This tokenizer inherits from :class:`~paddlenlp.transformers.PretrainedTokenizer`

- which contains most of the methods. Users should refer to the superclass for more information regarding methods.

- max_seq_len(obj:`int`): The maximum total input sequence length after tokenization.

- Sequences longer than this will be truncated, sequences shorter will be padded.

- is_test(obj:`False`, defaults to `False`): Whether the example contains label or not.

-

- Returns:

- input_ids(obj:`list[int]`): The list of token ids.

- token_type_ids(obj: `list[int]`): List of sequence pair mask.

- label(obj:`numpy.array`, data type of int64, optional): The input label if not is_test.

- """

- encoded_inputs = tokenizer(text=example["text"], max_seq_len=max_seq_length)

- input_ids = encoded_inputs["input_ids"]

- token_type_ids = encoded_inputs["token_type_ids"]

-

- if not is_test:

- label = np.array(example["label"], dtype="float32")

- return input_ids, token_type_ids, label

- return input_ids, token_type_ids

-

-

-def create_dataloader(dataset,

- mode='train',

- batch_size=1,

- batchify_fn=None,

- trans_fn=None):

- if trans_fn:

- dataset = dataset.map(trans_fn)

-

- shuffle = True if mode == 'train' else False

- if mode == 'train':

- batch_sampler = paddle.io.DistributedBatchSampler(dataset,

- batch_size=batch_size,

- shuffle=shuffle)

- else:

- batch_sampler = paddle.io.BatchSampler(dataset,

- batch_size=batch_size,

- shuffle=shuffle)

-

- return paddle.io.DataLoader(dataset=dataset,

- batch_sampler=batch_sampler,

- collate_fn=batchify_fn,

- return_list=True)

-

-

-def read_custom_data(filename, is_test=False):

- """Reads data."""

- data = pd.read_csv(filename)

- for line in data.values:

- if is_test:

- text = line[1]

- yield {"text": clean_text(text), "label": ""}

- else:

- text, label = line[1], line[2:]

- yield {"text": clean_text(text), "label": label}

-

-

-def clean_text(text):

- text = text.replace("\r", "").replace("\n", "")

- text = re.sub(r"\\n\n", ".", text)

- return text

-

-

-def write_test_results(filename, results, label_info):

- """write test results"""

- data = pd.read_csv(filename)

- qids = [line[0] for line in data.values]

- results_dict = {k: [] for k in label_info}

- results_dict["id"] = qids

- results = list(map(list, zip(*results)))

- for key in results_dict:

- if key != "id":

- for result in results:

- results_dict[key] = result

- df = pd.DataFrame(results_dict)

- df.to_csv("sample_test.csv", index=False)

- print("Test results saved")

diff --git a/examples/text_classification/multi_label/deploy/python/predict.py b/examples/text_classification/multi_label/deploy/python/predict.py

deleted file mode 100644

index c4b6b1af4b6b..000000000000

--- a/examples/text_classification/multi_label/deploy/python/predict.py

+++ /dev/null

@@ -1,139 +0,0 @@

-# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

-#

-# Licensed under the Apache License, Version 2.0 (the "License");

-# you may not use this file except in compliance with the License.

-# You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-

-import argparse

-import sys

-

-import paddle

-import paddle.nn.functional as F

-from paddlenlp.data import Tuple, Pad

-from paddlenlp.transformers import BertTokenizer

-

-sys.path.append('./')

-

-from data import convert_example

-

-# yapf: disable

-parser = argparse.ArgumentParser()

-parser.add_argument("--model_file", type=str, required=True, default='./static_graph_params.pdmodel', help="The path to model info in static graph.")

-parser.add_argument("--params_file", type=str, required=True, default='./static_graph_params.pdiparams', help="The path to parameters in static graph.")

-parser.add_argument("--max_seq_length", default=128, type=int, help="The maximum total input sequence length after tokenization. "

- "Sequences longer than this will be truncated, sequences shorter will be padded.")

-parser.add_argument("--threshold", default=0.5, type=float, help="The threshold for converting probabilities to labels")

-parser.add_argument("--batch_size", default=2, type=int, help="Batch size per GPU/CPU for training.")

-parser.add_argument('--device', choices=['cpu', 'gpu', 'xpu'], default="gpu", help="Select which device to train model, defaults to gpu.")

-args = parser.parse_args()

-# yapf: enable

-

-

-class Predictor(object):

-

- def __init__(self, model_file, params_file, device, max_seq_length):

- self.max_seq_length = max_seq_length

-

- config = paddle.inference.Config(model_file, params_file)

- if device == "gpu":

- # set GPU configs accordingly

- config.enable_use_gpu(100, 0)

- elif device == "cpu":

- # set CPU configs accordingly,

- # such as enable_mkldnn, set_cpu_math_library_num_threads

- config.disable_gpu()

- elif device == "xpu":

- # set XPU configs accordingly

- config.enable_xpu(100)

- config.switch_use_feed_fetch_ops(False)

- self.predictor = paddle.inference.create_predictor(config)

-

- self.input_handles = [

- self.predictor.get_input_handle(name)

- for name in self.predictor.get_input_names()

- ]

-

- self.output_handle = self.predictor.get_output_handle(

- self.predictor.get_output_names()[0])

-

- def predict(self, data, tokenizer, batch_size=1, threshold=0.5):

- """

- Predicts the data labels.

-

- Args:

- data (obj:`List(Example)`): The processed data whose each element is a Example (numedtuple) object.

- A Example object contains `text`(word_ids) and `se_len`(sequence length).

- tokenizer(obj:`PretrainedTokenizer`): This tokenizer inherits from :class:`~paddlenlp.transformers.PretrainedTokenizer`

- which contains most of the methods. Users should refer to the superclass for more information regarding methods.

- batch_size(obj:`int`, defaults to 1): The number of batch.

- threshold(obj:`int`, defaults to 0.5): The threshold for converting probabilities to labels.

-

- Returns:

- results(obj:`dict`): All the predictions labels.

- """

- examples = []

- for text in data:

- example = {"text": text}

- input_ids, segment_ids = convert_example(

- example,

- tokenizer,

- max_seq_length=self.max_seq_length,

- is_test=True)

- examples.append((input_ids, segment_ids))

-

- batchify_fn = lambda samples, fn=Tuple(

- Pad(axis=0, pad_val=tokenizer.pad_token_id), # input

- Pad(axis=0, pad_val=tokenizer.pad_token_id), # segment

- ): fn(samples)

-

- # Seperates data into some batches.

- batches = [

- examples[idx:idx + batch_size]

- for idx in range(0, len(examples), batch_size)

- ]

-

- results = []

- for batch in batches:

- input_ids, segment_ids = batchify_fn(batch)

- self.input_handles[0].copy_from_cpu(input_ids)

- self.input_handles[1].copy_from_cpu(segment_ids)

- self.predictor.run()

- logits = paddle.to_tensor(self.output_handle.copy_to_cpu())

- probs = F.sigmoid(logits)

- preds = (probs.numpy() > threshold).astype(int)

- results.extend(preds)

- return results

-

-

-if __name__ == "__main__":

- # Define predictor to do prediction.

- predictor = Predictor(args.model_file, args.params_file, args.device,

- args.max_seq_length)

-

- # Load bert tokenizer

- tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

-

- data = [

- "Your bullshit is not welcome here.",

- "Thank you for understanding. I think very highly of you and would not revert without discussion.",

- ]

- label_info = [

- 'toxic', 'severe_toxic', 'obscene', 'threat', 'insult', 'identity_hate'

- ]

-

- results = predictor.predict(data,

- tokenizer,

- batch_size=args.batch_size,

- threshold=args.threshold)

- for idx, text in enumerate(data):

- print('Data: \t {}'.format(text))

- for i, k in enumerate(label_info):

- print('{}: \t {}'.format(k, results[idx][i]))

diff --git a/examples/text_classification/multi_label/export_model.py b/examples/text_classification/multi_label/export_model.py

deleted file mode 100644

index 6ca06c5d0019..000000000000

--- a/examples/text_classification/multi_label/export_model.py

+++ /dev/null

@@ -1,57 +0,0 @@

-# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

-#

-# Licensed under the Apache License, Version 2.0 (the "License");

-# you may not use this file except in compliance with the License.

-# You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-

-import argparse

-import os

-

-import paddle

-from paddlenlp.transformers import AutoModel

-

-from model import MultiLabelClassifier

-

-# yapf: disable

-parser = argparse.ArgumentParser()

-parser.add_argument("--params_path", type=str, required=True, default='./checkpoint/model_800/model_state.pdparams', help="The path to model parameters to be loaded.")

-parser.add_argument("--output_path", type=str, default='./static_graph_params', help="The path of model parameter in static graph to be saved.")

-args = parser.parse_args()

-# yapf: enable

-

-if __name__ == "__main__":

- # The number of labels should be in accordance with the training dataset.

- label_info = [

- 'toxic', 'severe_toxic', 'obscene', 'threat', 'insult', 'identity_hate'

- ]

-

- # Load pretrained model

- pretrained_model = AutoModel.from_pretrained("bert-base-uncased")

-

- model = MultiLabelClassifier(pretrained_model, num_labels=len(label_info))

-

- if args.params_path and os.path.isfile(args.params_path):

- state_dict = paddle.load(args.params_path)

- model.set_dict(state_dict)

- print("Loaded parameters from %s" % args.params_path)

- model.eval()

-

- # Convert to static graph with specific input description

- model = paddle.jit.to_static(

- model,

- input_spec=[

- paddle.static.InputSpec(shape=[None, None],

- dtype="int64"), # input_ids

- paddle.static.InputSpec(shape=[None, None],

- dtype="int64") # segment_ids

- ])

- # Save in static graph model.

- paddle.jit.save(model, args.output_path)

diff --git a/examples/text_classification/multi_label/metric.py b/examples/text_classification/multi_label/metric.py

deleted file mode 100644

index ea151b30b2c1..000000000000

--- a/examples/text_classification/multi_label/metric.py

+++ /dev/null

@@ -1,74 +0,0 @@

-# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

-#

-# Licensed under the Apache License, Version 2.0 (the "License");

-# you may not use this file except in compliance with the License.

-# You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-

-import numpy as np

-from sklearn.metrics import roc_auc_score, f1_score

-

-from paddle.metric import Metric

-

-

-class MultiLabelReport(Metric):

- """

- AUC and F1Score for multi-label text classification task.

- """

-

- def __init__(self, name='MultiLabelReport', average='micro'):

- super(MultiLabelReport, self).__init__()

- self.average = average

- self._name = name

- self.reset()

-

- def f1_score(self, y_prob):

- '''

- Returns the f1 score by searching the best threshhold

- '''

- best_score = 0

- for threshold in [i * 0.01 for i in range(100)]:

- self.y_pred = y_prob > threshold

- score = f1_score(y_pred=self.y_pred,

- y_true=self.y_true,

- average=self.average)

- if score > best_score:

- best_score = score

- return best_score

-

- def reset(self):

- """

- Resets all of the metric state.

- """

- self.y_prob = None

- self.y_true = None

-

- def update(self, probs, labels):

- if self.y_prob is not None:

- self.y_prob = np.append(self.y_prob, probs.numpy(), axis=0)

- else:

- self.y_prob = probs.numpy()

- if self.y_true is not None:

- self.y_true = np.append(self.y_true, labels.numpy(), axis=0)

- else:

- self.y_true = labels.numpy()

-

- def accumulate(self):

- auc = roc_auc_score(y_score=self.y_prob,

- y_true=self.y_true,

- average=self.average)

- f1_score = self.f1_score(y_prob=self.y_prob)

- return auc, f1_score

-

- def name(self):

- """

- Returns metric name

- """

- return self._name

diff --git a/examples/text_classification/multi_label/model.py b/examples/text_classification/multi_label/model.py

deleted file mode 100644

index 2223bfb8ef9f..000000000000

--- a/examples/text_classification/multi_label/model.py

+++ /dev/null

@@ -1,40 +0,0 @@

-# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

-#

-# Licensed under the Apache License, Version 2.0 (the "License");

-# you may not use this file except in compliance with the License.

-# You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-

-import paddle.nn as nn

-

-

-class MultiLabelClassifier(nn.Layer):

-

- def __init__(self, pretrained_model, num_labels=2, dropout=None):

- super(MultiLabelClassifier, self).__init__()

- self.ptm = pretrained_model

- self.num_labels = num_labels

- self.dropout = nn.Dropout(dropout if dropout is not None else self.ptm.

- config["hidden_dropout_prob"])

- self.classifier = nn.Linear(self.ptm.config["hidden_size"], num_labels)

-

- def forward(self,

- input_ids,

- token_type_ids=None,

- position_ids=None,

- attention_mask=None):

- _, pooled_output = self.ptm(input_ids,

- token_type_ids=token_type_ids,

- position_ids=position_ids,

- attention_mask=attention_mask)

-

- pooled_output = self.dropout(pooled_output)

- logits = self.classifier(pooled_output)

- return logits

diff --git a/examples/text_classification/multi_label/predict.py b/examples/text_classification/multi_label/predict.py

deleted file mode 100644

index 8e688d90a998..000000000000

--- a/examples/text_classification/multi_label/predict.py

+++ /dev/null

@@ -1,112 +0,0 @@

-# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

-#

-# Licensed under the Apache License, Version 2.0 (the "License");

-# you may not use this file except in compliance with the License.

-# You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-

-import argparse

-import os

-from functools import partial

-

-import paddle

-import paddle.nn.functional as F

-from paddlenlp.transformers import AutoModel, AutoTokenizer

-from paddlenlp.data import Tuple, Pad

-from paddlenlp.datasets import load_dataset

-

-from data import convert_example, create_dataloader, read_custom_data, write_test_results

-from model import MultiLabelClassifier

-

-# yapf: disable

-parser = argparse.ArgumentParser()

-parser.add_argument("--params_path", type=str, required=True, default='./checkpoint/model_800/model_state.pdparams', help="The path to model parameters to be loaded.")

-parser.add_argument("--max_seq_length", type=int, default=128, help="The maximum total input sequence length after tokenization. "

- "Sequences longer than this will be truncated, sequences shorter will be padded.")

-parser.add_argument("--batch_size", type=int, default=32, help="Batch size per GPU/CPU for training.")

-parser.add_argument('--device', choices=['cpu', 'gpu', 'xpu'], default="gpu", help="Select which device to train model, defaults to gpu.")

-parser.add_argument("--data_path", type=str, default="./data", help="The path of datasets to be loaded")

-args = parser.parse_args()

-# yapf: enable

-

-

-def predict(model, data_loader, batch_size=1):

- """

- Predicts the data labels.

-

- Args:

- model (obj:`paddle.nn.Layer`): A model to classify texts.

- data_loader(obj:`paddle.io.DataLoader`): The dataset loader which generates batches.

- batch_size(obj:`int`, defaults to 1): The number of batch.

-

- Returns:

- results(obj:`dict`): All the predictions labels.

- """

-

- results = []

- model.eval()

- for step, batch in enumerate(data_loader, start=1):

- input_ids, token_type_ids = batch

- logits = model(input_ids, token_type_ids)

- probs = F.sigmoid(logits)

- probs = probs.tolist()

- results.extend(probs)

- if step % 100 == 0:

- print("step %d, %d samples processed" % (step, step * batch_size))

- return results

-

-

-if __name__ == "__main__":

- paddle.set_device(args.device)

-

- # Load train dataset.

- file_name = 'test.csv'

- test_ds = load_dataset(read_custom_data,

- filename=os.path.join(args.data_path, file_name),

- is_test=True,

- lazy=False)

-

- # The dataset labels

- label_info = [

- 'toxic', 'severe_toxic', 'obscene', 'threat', 'insult', 'identity_hate'

- ]

-

- # Load pretrained model

- pretrained_model = AutoModel.from_pretrained("bert-base-uncased")

-

- # Load bert tokenizer

- tokenizer = AutoTokenizer.from_pretrained('bert-base-uncased')

-

- model = MultiLabelClassifier(pretrained_model, num_labels=len(label_info))

-

- trans_func = partial(convert_example,

- tokenizer=tokenizer,

- max_seq_length=args.max_seq_length,

- is_test=True)

- batchify_fn = lambda samples, fn=Tuple(

- Pad(axis=0, pad_val=tokenizer.pad_token_id), # input

- Pad(axis=0, pad_val=tokenizer.pad_token_type_id), # segment

- ): [data for data in fn(samples)]

- test_data_loader = create_dataloader(test_ds,

- mode='test',

- batch_size=args.batch_size,

- batchify_fn=batchify_fn,

- trans_fn=trans_func)

-

- if args.params_path and os.path.isfile(args.params_path):

- state_dict = paddle.load(args.params_path)

- model.set_dict(state_dict)

- print("Loaded parameters from %s" % args.params_path)

-

- results = predict(model, test_data_loader, args.batch_size)

- filename = os.path.join(args.data_path, file_name)

-

- # Write test result into csv file

- write_test_results(filename, results, label_info)

diff --git a/examples/text_classification/multi_label/train.py b/examples/text_classification/multi_label/train.py

deleted file mode 100644

index 4e14fac25d95..000000000000

--- a/examples/text_classification/multi_label/train.py

+++ /dev/null

@@ -1,179 +0,0 @@

-# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

-#

-# Licensed under the Apache License, Version 2.0 (the "License");

-# you may not use this file except in compliance with the License.

-# You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-

-from functools import partial

-import argparse

-import os

-import random

-import time

-

-import numpy as np

-import paddle

-import paddle.nn.functional as F

-

-from paddlenlp.transformers import AutoModel, AutoTokenizer

-from paddlenlp.data import Stack, Tuple, Pad

-from paddlenlp.datasets import load_dataset

-from paddlenlp.transformers import LinearDecayWithWarmup

-

-from data import convert_example, create_dataloader, read_custom_data

-from metric import MultiLabelReport

-from model import MultiLabelClassifier

-

-# yapf: disable

-parser = argparse.ArgumentParser()

-parser.add_argument("--save_dir", default='./checkpoints', type=str, help="The output directory where the model checkpoints will be written.")

-parser.add_argument("--max_seq_length", default=128, type=int, help="The maximum total input sequence length after tokenization. "

- "Sequences longer than this will be truncated, sequences shorter will be padded.")

-parser.add_argument("--batch_size", default=32, type=int, help="Batch size per GPU/CPU for training.")

-parser.add_argument("--learning_rate", default=5e-5, type=float, help="The initial learning rate for Adam.")

-parser.add_argument("--weight_decay", default=0.0, type=float, help="Weight decay if we apply some.")

-parser.add_argument("--epochs", default=3, type=int, help="Total number of training epochs to perform.")

-parser.add_argument("--warmup_proportion", default=0.0, type=float, help="Linear warmup proption over the training process.")

-parser.add_argument("--init_from_ckpt", type=str, default=None, help="The path of checkpoint to be loaded.")

-parser.add_argument("--seed", type=int, default=1000, help="random seed for initialization")

-parser.add_argument("--device", choices=["cpu", "gpu", "xpu"], default="gpu", help="Select which device to train model, defaults to gpu.")

-parser.add_argument("--data_path", type=str, default="./data", help="The path of datasets to be loaded")

-args = parser.parse_args()

-# yapf: enable

-

-

-def set_seed(seed):

- """sets random seed"""

- random.seed(seed)

- np.random.seed(seed)

- paddle.seed(seed)

-

-

-@paddle.no_grad()

-def evaluate(model, criterion, metric, data_loader):

- """

- Given a dataset, it evals model and computes the metric.

-

- Args:

- model(obj:`paddle.nn.Layer`): A model to classify texts.

- criterion(obj:`paddle.nn.Layer`): It can compute the loss.

- metric(obj:`paddle.metric.Metric`): The evaluation metric.

- data_loader(obj:`paddle.io.DataLoader`): The dataset loader which generates batches.

- """

- model.eval()

- metric.reset()

- losses = []

- for batch in data_loader:

- input_ids, token_type_ids, labels = batch

- logits = model(input_ids, token_type_ids)

- loss = criterion(logits, labels)

- probs = F.sigmoid(logits)

- losses.append(loss.numpy())

- metric.update(probs, labels)

- auc, f1_score = metric.accumulate()

- print("eval loss: %.5f, auc: %.5f, f1 score: %.5f" %

- (np.mean(losses), auc, f1_score))

- model.train()

- metric.reset()

-

-

-def do_train():

- paddle.set_device(args.device)

- rank = paddle.distributed.get_rank()

- if paddle.distributed.get_world_size() > 1:

- paddle.distributed.init_parallel_env()

-

- set_seed(args.seed)

-

- # Load train dataset.

- file_name = 'train.csv'

- train_ds = load_dataset(read_custom_data,

- filename=os.path.join(args.data_path, file_name),

- is_test=False,

- lazy=False)

-

- pretrained_model = AutoModel.from_pretrained("bert-base-uncased")

- tokenizer = AutoTokenizer.from_pretrained('bert-base-uncased')

-

- trans_func = partial(convert_example,

- tokenizer=tokenizer,

- max_seq_length=args.max_seq_length)

- batchify_fn = lambda samples, fn=Tuple(

- Pad(axis=0, pad_val=tokenizer.pad_token_id), # input

- Pad(axis=0, pad_val=tokenizer.pad_token_type_id), # segment

- Stack(dtype='float32') # label

- ): [data for data in fn(samples)]

- train_data_loader = create_dataloader(train_ds,

- mode='train',

- batch_size=args.batch_size,

- batchify_fn=batchify_fn,

- trans_fn=trans_func)

-

- model = MultiLabelClassifier(pretrained_model,

- num_labels=len(train_ds.data[0]["label"]))

-

- if args.init_from_ckpt and os.path.isfile(args.init_from_ckpt):

- state_dict = paddle.load(args.init_from_ckpt)

- model.set_dict(state_dict)

- model = paddle.DataParallel(model)

- num_training_steps = len(train_data_loader) * args.epochs

-

- lr_scheduler = LinearDecayWithWarmup(args.learning_rate, num_training_steps,

- args.warmup_proportion)

-

- # Generate parameter names needed to perform weight decay.

- # All bias and LayerNorm parameters are excluded.

- decay_params = [

- p.name for n, p in model.named_parameters()

- if not any(nd in n for nd in ["bias", "norm"])

- ]

-

- optimizer = paddle.optimizer.AdamW(

- learning_rate=lr_scheduler,

- parameters=model.parameters(),

- weight_decay=args.weight_decay,

- apply_decay_param_fun=lambda x: x in decay_params)

-

- metric = MultiLabelReport()

- criterion = paddle.nn.BCEWithLogitsLoss()

-

- global_step = 0

- tic_train = time.time()

- for epoch in range(1, args.epochs + 1):

- for step, batch in enumerate(train_data_loader, start=1):

- input_ids, token_type_ids, labels = batch

- logits = model(input_ids, token_type_ids)

- loss = criterion(logits, labels)

- probs = F.sigmoid(logits)

- metric.update(probs, labels)

- auc, f1_score = metric.accumulate()

-

- global_step += 1

- if global_step % 10 == 0 and rank == 0:

- print(

- "global step %d, epoch: %d, batch: %d, loss: %.5f, auc: %.5f, f1 score: %.5f, speed: %.2f step/s"

- % (global_step, epoch, step, loss, auc, f1_score, 10 /

- (time.time() - tic_train)))

- tic_train = time.time()

- loss.backward()

- optimizer.step()

- lr_scheduler.step()

- optimizer.clear_grad()

- if global_step % 100 == 0 and rank == 0:

- save_dir = os.path.join(args.save_dir, "model_%d" % global_step)

- if not os.path.exists(save_dir):

- os.makedirs(save_dir)

- save_param_path = os.path.join(save_dir, "model_state.pdparams")

- paddle.save(model.state_dict(), save_param_path)

- tokenizer.save_pretrained(save_dir)

-

-

-if __name__ == "__main__":

- do_train()

diff --git a/examples/text_classification/pretrained_models b/examples/text_classification/pretrained_models

new file mode 120000

index 000000000000..b64da903a76b

--- /dev/null

+++ b/examples/text_classification/pretrained_models

@@ -0,0 +1 @@

+../../applications/text_classification

\ No newline at end of file

diff --git a/examples/text_classification/pretrained_models/README.md b/examples/text_classification/pretrained_models/README.md

deleted file mode 100644

index 5a12aa9e34b2..000000000000

--- a/examples/text_classification/pretrained_models/README.md

+++ /dev/null

@@ -1,256 +0,0 @@

-# 使用预训练模型Fine-tune完成中文文本分类任务

-

-

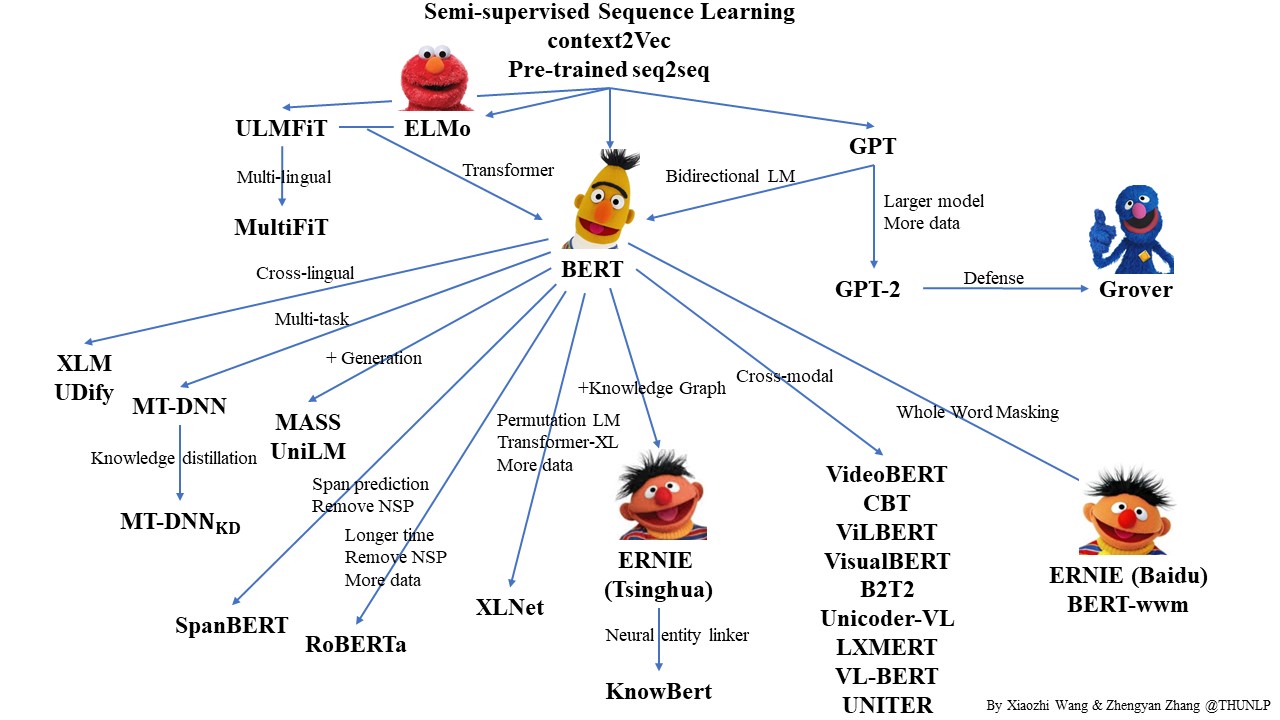

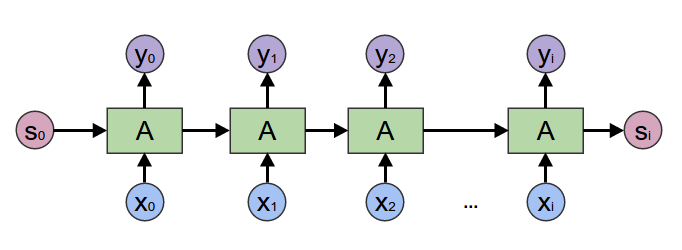

-在2017年之前,工业界和学术界对NLP文本处理依赖于序列模型[Recurrent Neural Network (RNN)](../rnn).

-

-

-

-

-

-

-[paddlenlp.seq2vec是什么? 瞧瞧它怎么完成情感分析](https://aistudio.baidu.com/aistudio/projectdetail/1283423)教程介绍了如何使用`paddlenlp.seq2vec`表征文本语义。

-

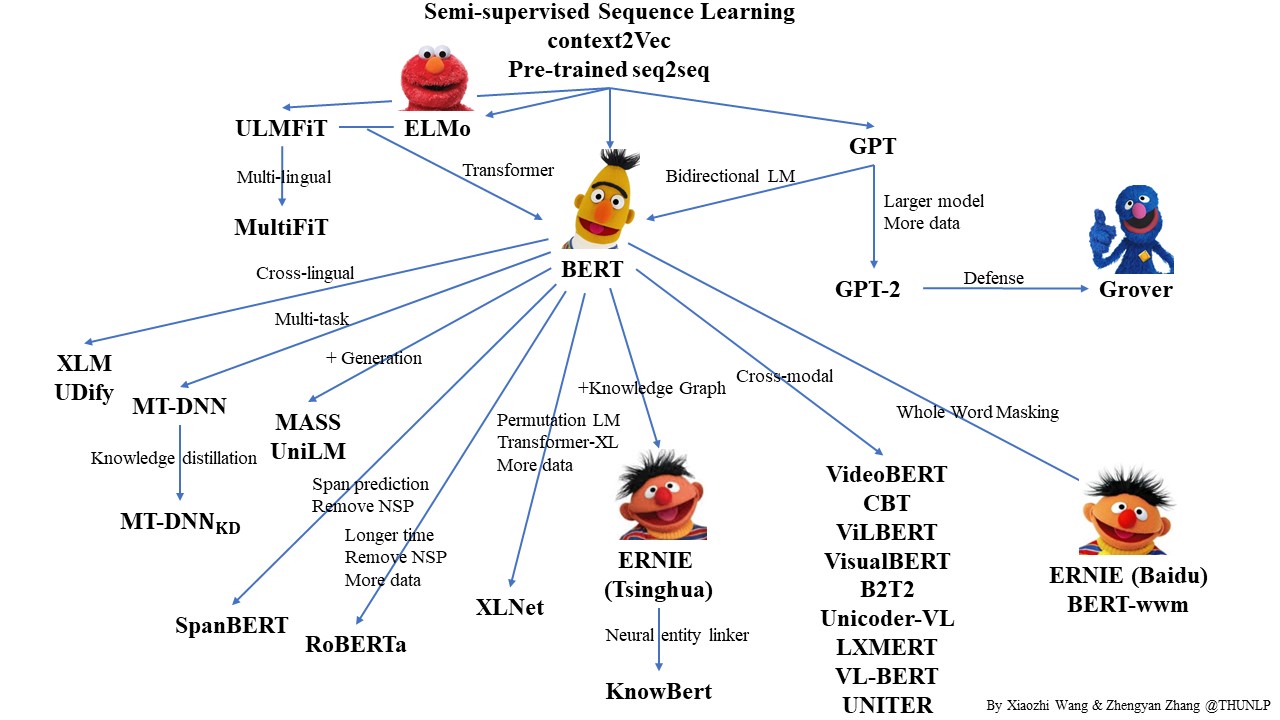

-近年来随着深度学习的发展,模型参数的数量飞速增长。为了训练这些参数,需要更大的数据集来避免过拟合。然而,对于大部分NLP任务来说,构建大规模的标注数据集非常困难(成本过高),特别是对于句法和语义相关的任务。相比之下,大规模的未标注语料库的构建则相对容易。为了利用这些数据,我们可以先从其中学习到一个好的表示,再将这些表示应用到其他任务中。最近的研究表明,基于大规模未标注语料库的预训练模型(Pretrained Models, PTM) 在NLP任务上取得了很好的表现。

-

-近年来,大量的研究表明基于大型语料库的预训练模型(Pretrained Models, PTM)可以学习通用的语言表示,有利于下游NLP任务,同时能够避免从零开始训练模型。随着计算能力的发展,深度模型的出现(即 Transformer)和训练技巧的增强使得 PTM 不断发展,由浅变深。

-

-

-

-

-

-

-本图片来自于:https://github.com/thunlp/PLMpapers

-

-本示例展示了以ERNIE([Enhanced Representation through Knowledge Integration](https://arxiv.org/abs/1904.09223))代表的预训练模型如何Finetune完成中文文本分类任务。

-

-## 模型简介

-

-本项目针对中文文本分类问题,开源了一系列模型,供用户可配置地使用:

-

-+ BERT([Bidirectional Encoder Representations from Transformers](https://arxiv.org/abs/1810.04805))中文模型,简写`bert-base-chinese`, 其由12层Transformer网络组成。

-+ ERNIE[ERNIE 3.0 Titan: Exploring Larger-scale Knowledge Enhanced Pre-training for Language Understanding and Generation](https://arxiv.org/abs/2112.12731),支持ERNIE 3.0-Medium 中文模型(简写`ernie-3.0-medium-zh`)和 ERNIE 3.0-Base-zh 等 ERNIE 3.0 轻量级中文模型。

-+ RoBERTa([A Robustly Optimized BERT Pretraining Approach](https://arxiv.org/abs/1907.11692)),支持 24 层 Transformer 网络的 `roberta-wwm-ext-large` 和 12 层 Transformer 网络的 `roberta-wwm-ext`。

-

-| 模型 | dev acc | test acc |

-| ---- | ------- | -------- |

-| bert-base-chinese | 0.93833 | 0.94750 |

-| bert-wwm-chinese | 0.94583 | 0.94917 |

-| bert-wwm-ext-chinese | 0.94667 | 0.95500 |

-| ernie-1.0-base-zh | 0.94667 | 0.95333 |

-| ernie-tiny | 0.93917 | 0.94833 |

-| roberta-wwm-ext | 0.94750 | 0.95250 |

-| roberta-wwm-ext-large | 0.95250 | 0.95333 |

-| rbt3 | 0.92583 | 0.93250 |

-| rbtl3 | 0.9341 | 0.93583 |

-

-

-## 快速开始

-

-### 代码结构说明

-

-以下是本项目主要代码结构及说明:

-

-```text

-pretrained_models/

-├── deploy # 部署

-│ └── python

-│ └── predict.py # python预测部署示例

-│ └── serving

-│ ├── client.py # 客户端预测脚本

-│ └── export_servable_model.py # 导出Serving模型及其配置

-├── export_model.py # 动态图参数导出静态图参数脚本

-├── predict.py # 预测脚本

-├── README.md # 使用说明

-└── train.py # 训练评估脚本

-```

-

-### 模型训练

-

-我们以中文情感分类公开数据集ChnSentiCorp为示例数据集,可以运行下面的命令,在训练集(train.tsv)上进行模型训练,并在开发集(dev.tsv)验证

-```shell

-$ unset CUDA_VISIBLE_DEVICES

-$ python -m paddle.distributed.launch --gpus "0" train.py --device gpu --save_dir ./checkpoints --use_amp False

-```

-

-可支持配置的参数:

-

-* `save_dir`:可选,保存训练模型的目录;默认保存在当前目录checkpoints文件夹下。

-* `dataset`:可选,xnli_cn,chnsenticorp 可选,默认为chnsenticorp数据集。

-* `max_seq_length`:可选,ERNIE/BERT模型使用的最大序列长度,最大不能超过512, 若出现显存不足,请适当调低这一参数;默认为128。

-* `batch_size`:可选,批处理大小,请结合显存情况进行调整,若出现显存不足,请适当调低这一参数;默认为32。

-* `learning_rate`:可选,Fine-tune的最大学习率;默认为5e-5。

-* `weight_decay`:可选,控制正则项力度的参数,用于防止过拟合,默认为0.00。

-* `epochs`: 训练轮次,默认为3。

-* `valid_steps`: evaluate的间隔steps数,默认100。

-* `save_steps`: 保存checkpoints的间隔steps数,默认100。

-* `logging_steps`: 日志打印的间隔steps数,默认10。

-* `warmup_proption`:可选,学习率warmup策略的比例,如果0.1,则学习率会在前10%训练step的过程中从0慢慢增长到learning_rate, 而后再缓慢衰减,默认为0.1。

-* `init_from_ckpt`:可选,模型参数路径,热启动模型训练;默认为None。

-* `seed`:可选,随机种子,默认为1000.

-* `device`: 选用什么设备进行训练,可选cpu或gpu。如使用gpu训练则参数gpus指定GPU卡号。

-* `use_amp`: 是否使用混合精度训练,默认为False。

-

-代码示例中使用的预训练模型是ERNIE,如果想要使用其他预训练模型如BERT等,只需更换`model` 和 `tokenizer`即可。

-

-```python

-# 使用ernie预训练模型

-# ernie-3.0-medium-zh

-model = AutoModelForSequenceClassification.from_pretrained('ernie-3.0-medium-zh',num_classes=2))

-tokenizer = AutoTokenizer.from_pretrained('ernie-3.0-medium-zh')

-

-# 使用bert预训练模型

-# bert-base-chinese

-model = AutoModelForSequenceClassification.from_pretrained('bert-base-chinese', num_class=2)

-tokenizer = AutoTokenizer.from_pretrained('bert-base-chinese')

-```

-更多预训练模型,参考[transformers](https://paddlenlp.readthedocs.io/zh/latest/model_zoo/index.html#transformer)

-

-

-程序运行时将会自动进行训练,评估,测试。同时训练过程中会自动保存模型在指定的`save_dir`中。

-如:

-```text

-checkpoints/

-├── model_100

-│ ├── model_config.json

-│ ├── model_state.pdparams

-│ ├── tokenizer_config.json

-│ └── vocab.txt

-└── ...

-```

-

-**NOTE:**

-* 如需恢复模型训练,则可以设置`init_from_ckpt`, 如`init_from_ckpt=checkpoints/model_100/model_state.pdparams`。

-* 如需使用ernie-tiny模型,则需要提前先安装sentencepiece依赖,如`pip install sentencepiece`

-* 使用动态图训练结束之后,还可以将动态图参数导出成静态图参数,具体代码见export_model.py。静态图参数保存在`output_path`指定路径中。

- 运行方式:

-

-```shell

-python export_model.py --params_path=./checkpoint/model_900/model_state.pdparams --output_path=./export

-```

-其中`params_path`是指动态图训练保存的参数路径,`output_path`是指静态图参数导出路径。

-

-导出模型之后,可以用于部署,deploy/python/predict.py文件提供了python部署预测示例。运行方式:

-

-```shell

-python deploy/python/predict.py --model_dir=./export

-```

-

-### 模型预测

-

-启动预测:

-```shell

-export CUDA_VISIBLE_DEVICES=0

-python predict.py --device 'gpu' --params_path checkpoints/model_900/model_state.pdparams

-```

-

-将待预测数据如以下示例:

-

-```text

-这个宾馆比较陈旧了,特价的房间也很一般。总体来说一般

-怀着十分激动的心情放映,可是看着看着发现,在放映完毕后,出现一集米老鼠的动画片

-作为老的四星酒店,房间依然很整洁,相当不错。机场接机服务很好,可以在车上办理入住手续,节省时间。

-```

-

-可以直接调用`predict`函数即可输出预测结果。

-

-如

-

-```text

-Data: 这个宾馆比较陈旧了,特价的房间也很一般。总体来说一般 Label: negative

-Data: 怀着十分激动的心情放映,可是看着看着发现,在放映完毕后,出现一集米老鼠的动画片 Label: negative

-Data: 作为老的四星酒店,房间依然很整洁,相当不错。机场接机服务很好,可以在车上办理入住手续,节省时间。 Label: positive

-```

-

-

-## 使用Paddle Serving API进行推理部署

-

-**NOTE:**

-

-使用Paddle Serving服务化部署需要将动态图保存的模型参数导出为静态图Inference模型参数文件。如何导出模型参考上述提到的**导出模型**。

-

-Inference模型参数文件:

-| 文件 | 说明 |

-|-------------------------------|----------------------------------------|

-| inference.pdiparams | 模型权重文件,供推理时加载使用 |

-| inference.pdmodel | 模型结构文件,供推理时加载使用 |

-

-

-### 依赖安装

-

-* 服务器端依赖:

-

-```shell

-pip install paddle-serving-app paddle-serving-client paddle-serving-server

-```

-

-如果服务器端可以使用GPU进行推理,则安装server的gpu版本,安装时要注意参考服务器当前CUDA、TensorRT的版本来安装对应的版本:[Serving readme](https://github.com/PaddlePaddle/Serving/tree/v0.6.0)

-

-```shell

-pip install paddle-serving-app paddle-serving-client paddle-serving-server-gpu

-```

-

-* 客户端依赖:

-

-```shell

-pip install paddle-serving-app paddle-serving-client

-```

-

-建议在**docker**容器中运行服务器端和客户端以避免一些系统依赖库问题,启动docker镜像的命令参考:[Serving readme](https://github.com/PaddlePaddle/Serving/tree/v0.6.0)

-

-### Serving的模型和配置导出

-

-使用Serving进行预测部署时,需要将静态图inference model导出为Serving可读入的模型参数和配置。运行方式如下:

-

-```shell

-python -u deploy/serving/export_servable_model.py \

- --inference_model_dir ./export/ \

- --model_file inference.pdmodel \

- --params_file inference.pdiparams

-```

-

-可支持配置的参数:

-* `inference_model_dir`: Inference推理模型所在目录,这里假设为 export 目录。

-* `model_file`: 推理需要加载的模型结构文件。

-* `params_file`: 推理需要加载的模型权重文件。

-

-执行命令后,会在当前目录下生成2个目录:serving_server 和 serving_client。serving_server目录包含服务器端所需的模型和配置,需将其拷贝到服务器端容器中;serving_client目录包含客户端所需的配置,需将其拷贝到客户端容器中。

-

-### 服务器启动server

-

-在服务器端容器中,启动server

-

-```shell

-python -m paddle_serving_server.serve \

- --model ./serving_server \

- --port 8090

-```

-其中:

-* `model`: server加载的模型和配置所在目录。

-* `port`: 表示server开启的服务端口8090。

-

-如果服务器端可以使用GPU进行推理计算,则启动服务器时可以配置server使用的GPU id

-

-```shell

-python -m paddle_serving_server.serve \

- --model ./serving_server \

- --port 8090 \

- --gpu_id 0

-```

-* `gpu_id`: server使用0号GPU。

-

-

-### 客服端发送推理请求

-

-在客户端容器中,使用前面得到的serving_client目录启动client发起RPC推理请求。和使用Paddle Inference API进行推理一样。

-

-### 从命令行读取输入数据发起推理请求

-```shell

-python deploy/serving/client.py \

- --client_config_file ./serving_client/serving_client_conf.prototxt \

- --server_ip_port 127.0.0.1:8090 \

- --max_seq_length 128

-```

-其中参数释义如下:

-- `client_config_file` 表示客户端需要加载的配置文件。

-- `server_ip_port` 表示服务器端的ip地址和端口号。ip地址和端口号需要根据实际情况进行更换。

-- `max_seq_length` 表示输入的最大句子长度,超过该长度将被截断。

diff --git a/examples/text_classification/pretrained_models/deploy/cpp/CMakeLists.txt b/examples/text_classification/pretrained_models/deploy/cpp/CMakeLists.txt

deleted file mode 100644

index 6f7f19af1857..000000000000

--- a/examples/text_classification/pretrained_models/deploy/cpp/CMakeLists.txt

+++ /dev/null

@@ -1,227 +0,0 @@

-cmake_minimum_required(VERSION 3.0)

-project(seq_cls_infer CXX C)

-option(WITH_MKL "Compile demo with MKL/OpenBlas support, default use MKL." ON)

-option(WITH_GPU "Compile demo with GPU/CPU, default use CPU." OFF)

-option(WITH_STATIC_LIB "Compile demo with static/shared library, default use static." ON)

-option(USE_TENSORRT "Compile demo with TensorRT." OFF)

-option(WITH_ROCM "Compile demo with rocm." OFF)

-

-if(NOT WITH_STATIC_LIB)

- add_definitions("-DPADDLE_WITH_SHARED_LIB")

-else()

- # PD_INFER_DECL is mainly used to set the dllimport/dllexport attribute in dynamic library mode.

- # Set it to empty in static library mode to avoid compilation issues.

- add_definitions("/DPD_INFER_DECL=")

-endif()

-

-macro(safe_set_static_flag)

- foreach(flag_var

- CMAKE_CXX_FLAGS CMAKE_CXX_FLAGS_DEBUG CMAKE_CXX_FLAGS_RELEASE

- CMAKE_CXX_FLAGS_MINSIZEREL CMAKE_CXX_FLAGS_RELWITHDEBINFO)

- if(${flag_var} MATCHES "/MD")

- string(REGEX REPLACE "/MD" "/MT" ${flag_var} "${${flag_var}}")

- endif(${flag_var} MATCHES "/MD")

- endforeach(flag_var)

-endmacro()

-

-if(NOT DEFINED PADDLE_LIB)

- message(FATAL_ERROR "please set PADDLE_LIB with -DPADDLE_LIB=/path/paddle/lib")

-endif()

-if(NOT DEFINED PROJECT_NAME)

- message(FATAL_ERROR "please set PROJECT_NAME with -DPROJECT_NAME=demo_name")

-endif()

-

-include_directories("${PADDLE_LIB}/")

-set(PADDLE_LIB_THIRD_PARTY_PATH "${PADDLE_LIB}/third_party/install/")

-include_directories("${PADDLE_LIB_THIRD_PARTY_PATH}protobuf/include")

-include_directories("${PADDLE_LIB_THIRD_PARTY_PATH}glog/include")

-include_directories("${PADDLE_LIB_THIRD_PARTY_PATH}gflags/include")

-include_directories("${PADDLE_LIB_THIRD_PARTY_PATH}xxhash/include")

-include_directories("${PADDLE_LIB_THIRD_PARTY_PATH}cryptopp/include")

-include_directories("${PADDLE_LIB_THIRD_PARTY_PATH}utf8proc/include")

-

-link_directories("${PADDLE_LIB_THIRD_PARTY_PATH}protobuf/lib")

-link_directories("${PADDLE_LIB_THIRD_PARTY_PATH}glog/lib")

-link_directories("${PADDLE_LIB_THIRD_PARTY_PATH}gflags/lib")

-link_directories("${PADDLE_LIB_THIRD_PARTY_PATH}xxhash/lib")

-link_directories("${PADDLE_LIB_THIRD_PARTY_PATH}cryptopp/lib")

-link_directories("${PADDLE_LIB_THIRD_PARTY_PATH}utf8proc/lib")

-link_directories("${PADDLE_LIB}/paddle/lib")

-

-include_directories("${PROJECT_SOURCE_DIR}/tokenizer")

-add_subdirectory("tokenizer")

-

-if (WIN32)

- add_definitions("/DGOOGLE_GLOG_DLL_DECL=")

- option(MSVC_STATIC_CRT "use static C Runtime library by default" ON)

- if (MSVC_STATIC_CRT)

- if (WITH_MKL)

- set(FLAG_OPENMP "/openmp")

- endif()

- set(CMAKE_C_FLAGS_DEBUG "${CMAKE_C_FLAGS_DEBUG} /bigobj /MTd ${FLAG_OPENMP}")

- set(CMAKE_C_FLAGS_RELEASE "${CMAKE_C_FLAGS_RELEASE} /bigobj /MT ${FLAG_OPENMP}")

- set(CMAKE_CXX_FLAGS_DEBUG "${CMAKE_CXX_FLAGS_DEBUG} /bigobj /MTd ${FLAG_OPENMP}")

- set(CMAKE_CXX_FLAGS_RELEASE "${CMAKE_CXX_FLAGS_RELEASE} /bigobj /MT ${FLAG_OPENMP}")

- safe_set_static_flag()

- if (WITH_STATIC_LIB)

- add_definitions(-DSTATIC_LIB)

- endif()

- endif()

-else()

- if(WITH_MKL)

- set(FLAG_OPENMP "-fopenmp")

- endif()

- set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11 ${FLAG_OPENMP}")

-endif()

-

-if(WITH_GPU)

- if(NOT WIN32)

- set(CUDA_LIB "/usr/local/cuda/lib64/" CACHE STRING "CUDA Library")

- else()

- if(CUDA_LIB STREQUAL "")

- set(CUDA_LIB "C:\\Program\ Files\\NVIDIA GPU Computing Toolkit\\CUDA\\v8.0\\lib\\x64")

- endif()

- endif(NOT WIN32)

-endif()

-

-if (USE_TENSORRT AND WITH_GPU)

- set(TENSORRT_ROOT "" CACHE STRING "The root directory of TensorRT library")

- if("${TENSORRT_ROOT}" STREQUAL "")

- message(FATAL_ERROR "The TENSORRT_ROOT is empty, you must assign it a value with CMake command. Such as: -DTENSORRT_ROOT=TENSORRT_ROOT_PATH ")

- endif()

- set(TENSORRT_INCLUDE_DIR ${TENSORRT_ROOT}/include)

- set(TENSORRT_LIB_DIR ${TENSORRT_ROOT}/lib)

- file(READ ${TENSORRT_INCLUDE_DIR}/NvInfer.h TENSORRT_VERSION_FILE_CONTENTS)

- string(REGEX MATCH "define NV_TENSORRT_MAJOR +([0-9]+)" TENSORRT_MAJOR_VERSION

- "${TENSORRT_VERSION_FILE_CONTENTS}")

- if("${TENSORRT_MAJOR_VERSION}" STREQUAL "")

- file(READ ${TENSORRT_INCLUDE_DIR}/NvInferVersion.h TENSORRT_VERSION_FILE_CONTENTS)

- string(REGEX MATCH "define NV_TENSORRT_MAJOR +([0-9]+)" TENSORRT_MAJOR_VERSION

- "${TENSORRT_VERSION_FILE_CONTENTS}")

- endif()

- if("${TENSORRT_MAJOR_VERSION}" STREQUAL "")

- message(SEND_ERROR "Failed to detect TensorRT version.")

- endif()

- string(REGEX REPLACE "define NV_TENSORRT_MAJOR +([0-9]+)" "\\1"

- TENSORRT_MAJOR_VERSION "${TENSORRT_MAJOR_VERSION}")

- message(STATUS "Current TensorRT header is ${TENSORRT_INCLUDE_DIR}/NvInfer.h. "

- "Current TensorRT version is v${TENSORRT_MAJOR_VERSION}. ")

- include_directories("${TENSORRT_INCLUDE_DIR}")

- link_directories("${TENSORRT_LIB_DIR}")

-endif()

-

-if(WITH_MKL)

- set(MATH_LIB_PATH "${PADDLE_LIB_THIRD_PARTY_PATH}mklml")

- include_directories("${MATH_LIB_PATH}/include")

- if(WIN32)

- set(MATH_LIB ${MATH_LIB_PATH}/lib/mklml${CMAKE_STATIC_LIBRARY_SUFFIX}

- ${MATH_LIB_PATH}/lib/libiomp5md${CMAKE_STATIC_LIBRARY_SUFFIX})

- else()

- set(MATH_LIB ${MATH_LIB_PATH}/lib/libmklml_intel${CMAKE_SHARED_LIBRARY_SUFFIX}

- ${MATH_LIB_PATH}/lib/libiomp5${CMAKE_SHARED_LIBRARY_SUFFIX})

- endif()

- set(MKLDNN_PATH "${PADDLE_LIB_THIRD_PARTY_PATH}mkldnn")

- if(EXISTS ${MKLDNN_PATH})

- include_directories("${MKLDNN_PATH}/include")

- if(WIN32)

- set(MKLDNN_LIB ${MKLDNN_PATH}/lib/mkldnn.lib)

- else(WIN32)

- set(MKLDNN_LIB ${MKLDNN_PATH}/lib/libmkldnn.so.0)

- endif(WIN32)

- endif()

-else()

- set(OPENBLAS_LIB_PATH "${PADDLE_LIB_THIRD_PARTY_PATH}openblas")

- include_directories("${OPENBLAS_LIB_PATH}/include/openblas")

- if(WIN32)

- set(MATH_LIB ${OPENBLAS_LIB_PATH}/lib/openblas${CMAKE_STATIC_LIBRARY_SUFFIX})

- else()

- set(MATH_LIB ${OPENBLAS_LIB_PATH}/lib/libopenblas${CMAKE_STATIC_LIBRARY_SUFFIX})

- endif()

-endif()

-

-if(WITH_STATIC_LIB)

- set(DEPS ${PADDLE_LIB}/paddle/lib/libpaddle_inference${CMAKE_STATIC_LIBRARY_SUFFIX})

-else()

- if(WIN32)

- set(DEPS ${PADDLE_LIB}/paddle/lib/paddle_inference${CMAKE_STATIC_LIBRARY_SUFFIX})

- else()

- set(DEPS ${PADDLE_LIB}/paddle/lib/libpaddle_inference${CMAKE_SHARED_LIBRARY_SUFFIX})

- endif()

-endif()

-

-if (NOT WIN32)

- set(EXTERNAL_LIB "-lrt -ldl -lpthread")

- set(DEPS ${DEPS}

- ${MATH_LIB} ${MKLDNN_LIB}

- glog gflags protobuf xxhash cryptopp utf8proc

- ${EXTERNAL_LIB})

-else()

- set(DEPS ${DEPS}

- ${MATH_LIB} ${MKLDNN_LIB}

- glog gflags_static libprotobuf xxhash cryptopp-static utf8proc_static ${EXTERNAL_LIB})

- set(DEPS ${DEPS} shlwapi.lib)

-endif(NOT WIN32)

-

-if(WITH_GPU)

- if(NOT WIN32)

- if (USE_TENSORRT)

- set(DEPS ${DEPS} ${TENSORRT_LIB_DIR}/libnvinfer${CMAKE_SHARED_LIBRARY_SUFFIX})

- set(DEPS ${DEPS} ${TENSORRT_LIB_DIR}/libnvinfer_plugin${CMAKE_SHARED_LIBRARY_SUFFIX})

- endif()

- set(DEPS ${DEPS} ${CUDA_LIB}/libcudart${CMAKE_SHARED_LIBRARY_SUFFIX})

- else()

- if(USE_TENSORRT)

- set(DEPS ${DEPS} ${TENSORRT_LIB_DIR}/nvinfer${CMAKE_STATIC_LIBRARY_SUFFIX})

- set(DEPS ${DEPS} ${TENSORRT_LIB_DIR}/nvinfer_plugin${CMAKE_STATIC_LIBRARY_SUFFIX})

- if(${TENSORRT_MAJOR_VERSION} GREATER_EQUAL 7)

- set(DEPS ${DEPS} ${TENSORRT_LIB_DIR}/myelin64_1${CMAKE_STATIC_LIBRARY_SUFFIX})

- endif()

- endif()

- set(DEPS ${DEPS} ${CUDA_LIB}/cudart${CMAKE_STATIC_LIBRARY_SUFFIX} )

- set(DEPS ${DEPS} ${CUDA_LIB}/cublas${CMAKE_STATIC_LIBRARY_SUFFIX} )

- set(DEPS ${DEPS} ${CUDA_LIB}/cudnn${CMAKE_STATIC_LIBRARY_SUFFIX} )

- endif()

-endif()

-

-if(WITH_ROCM)

- if(NOT WIN32)

- set(DEPS ${DEPS} ${ROCM_LIB}/libamdhip64${CMAKE_SHARED_LIBRARY_SUFFIX})

- endif()

-endif()

-

-set(DEPS ${DEPS} Tokenizer)

-

-add_executable(${PROJECT_NAME} ${PROJECT_NAME}.cc)

-

-target_link_libraries(${PROJECT_NAME} ${DEPS})

-if(WIN32)

- if(USE_TENSORRT)

- add_custom_command(TARGET ${PROJECT_NAME} POST_BUILD

- COMMAND ${CMAKE_COMMAND} -E copy ${TENSORRT_LIB_DIR}/nvinfer${CMAKE_SHARED_LIBRARY_SUFFIX}

- ${CMAKE_BINARY_DIR}/${CMAKE_BUILD_TYPE}

- COMMAND ${CMAKE_COMMAND} -E copy ${TENSORRT_LIB_DIR}/nvinfer_plugin${CMAKE_SHARED_LIBRARY_SUFFIX}

- ${CMAKE_BINARY_DIR}/${CMAKE_BUILD_TYPE}

- )

- if(${TENSORRT_MAJOR_VERSION} GREATER_EQUAL 7)

- add_custom_command(TARGET ${PROJECT_NAME} POST_BUILD

- COMMAND ${CMAKE_COMMAND} -E copy ${TENSORRT_LIB_DIR}/myelin64_1${CMAKE_SHARED_LIBRARY_SUFFIX}

- ${CMAKE_BINARY_DIR}/${CMAKE_BUILD_TYPE})

- endif()

- endif()

- if(WITH_MKL)

- add_custom_command(TARGET ${PROJECT_NAME} POST_BUILD

- COMMAND ${CMAKE_COMMAND} -E copy ${MATH_LIB_PATH}/lib/mklml.dll ${CMAKE_BINARY_DIR}/Release

- COMMAND ${CMAKE_COMMAND} -E copy ${MATH_LIB_PATH}/lib/libiomp5md.dll ${CMAKE_BINARY_DIR}/Release

- COMMAND ${CMAKE_COMMAND} -E copy ${MKLDNN_PATH}/lib/mkldnn.dll ${CMAKE_BINARY_DIR}/Release

- )

- else()

- add_custom_command(TARGET ${PROJECT_NAME} POST_BUILD

- COMMAND ${CMAKE_COMMAND} -E copy ${OPENBLAS_LIB_PATH}/lib/openblas.dll ${CMAKE_BINARY_DIR}/Release

- )

- endif()

- if(NOT WITH_STATIC_LIB)

- add_custom_command(TARGET ${PROJECT_NAME} POST_BUILD

- COMMAND ${CMAKE_COMMAND} -E copy "${PADDLE_LIB}/paddle/lib/paddle_inference.dll" ${CMAKE_BINARY_DIR}/${CMAKE_BUILD_TYPE}

- )

- endif()

-endif()

diff --git a/examples/text_classification/pretrained_models/deploy/cpp/compile.sh b/examples/text_classification/pretrained_models/deploy/cpp/compile.sh

deleted file mode 100755

index 67bc6b4cdeb7..000000000000

--- a/examples/text_classification/pretrained_models/deploy/cpp/compile.sh

+++ /dev/null

@@ -1,36 +0,0 @@

-#!/bin/bash

-set +x

-set -e

-

-work_path=$(dirname $(readlink -f $0))

-

-# 1. check paddle_inference exists

-if [ ! -d "${work_path}/lib/paddle_inference" ]; then

- echo "Please download paddle_inference lib and move it in cpp_deploy/lib"

- exit 1

-fi

-

-# 2. compile

-mkdir -p build

-cd build

-rm -rf *

-

-# same with the seq_cls_infer.cc

-PROJECT_NAME=seq_cls_infer

-

-WITH_MKL=ON

-WITH_GPU=ON

-

-LIB_DIR=${work_path}/lib/paddle_inference

-CUDNN_LIB=/usr/lib/x86_64-linux-gnu/

-CUDA_LIB=/usr/local/cuda/lib64

-

-cmake .. -DPADDLE_LIB=${LIB_DIR} \

- -DWITH_MKL=${WITH_MKL} \

- -DPROJECT_NAME=${PROJECT_NAME} \

- -DWITH_GPU=${WITH_GPU} \

- -DWITH_STATIC_LIB=OFF \

- -DCUDNN_LIB=${CUDNN_LIB} \

- -DCUDA_LIB=${CUDA_LIB}

-

-make -j

diff --git a/examples/text_classification/pretrained_models/deploy/cpp/run.sh b/examples/text_classification/pretrained_models/deploy/cpp/run.sh

deleted file mode 100644

index 5efb21231bf8..000000000000

--- a/examples/text_classification/pretrained_models/deploy/cpp/run.sh

+++ /dev/null

@@ -1,11 +0,0 @@

-#!/bin/bash

-set +x

-set -e

-

-work_path=$(dirname $(readlink -f $0))

-

-# 1. compile

-bash ${work_path}/compile.sh

-

-# 2. run

-./build/seq_cls_infer --model_file ./export/inference.pdmodel --params_file ./export/inference.pdiparams --vocab_file ./vocab.txt

diff --git a/examples/text_classification/pretrained_models/deploy/cpp/seq_cls_infer.cc b/examples/text_classification/pretrained_models/deploy/cpp/seq_cls_infer.cc

deleted file mode 100644

index 63734d07971f..000000000000

--- a/examples/text_classification/pretrained_models/deploy/cpp/seq_cls_infer.cc

+++ /dev/null

@@ -1,103 +0,0 @@

-

-

-#include

-#include

-

-#include

-#include

-#include

-#include

-#include

-

-#include "paddle/include/paddle_inference_api.h"

-#include "tokenizer.h"

-

-using paddle_infer::Config;

-using paddle_infer::Predictor;

-using paddle_infer::CreatePredictor;

-

-DEFINE_string(model_file, "", "Path to the inference model file.");

-DEFINE_string(params_file, "", "Path to the inference parameters file.");

-DEFINE_string(vocab_file, "", "Path to the vocab file.");

-DEFINE_int32(seq_len, 128, "Sequence length should less than or equal to 512.");

-DEFINE_bool(use_gpu, true, "enable gpu");

-

-

-template

-void PrepareInput(Predictor* predictor,

- const std::vector& data,

- const std::string& name,

- const std::vector& shape) {

- auto input = predictor->GetInputHandle(name);

- input->Reshape(shape);

- input->CopyFromCpu(data.data());

-}

-

-template

-void GetOutput(Predictor* predictor,

- std::string output_name,

- std::vector* out_data) {

- auto output = predictor->GetOutputHandle(output_name);

- std::vector output_shape = output->shape();

- int out_num = std::accumulate(

- output_shape.begin(), output_shape.end(), 1, std::multiplies());

- out_data->resize(out_num);

- output->CopyToCpu(out_data->data());

-}

-

-int main(int argc, char* argv[]) {

- google::ParseCommandLineFlags(&argc, &argv, true);

- tokenizer::Vocab vocab;

- tokenizer::LoadVocab(FLAGS_vocab_file, &vocab);

- tokenizer::BertTokenizer tokenizer(vocab, false);

-

- std::vector data{

- "这个宾馆比较陈旧了,特价的房间也很一般。总体来说一般",

- "请问:有些字打错了,我怎么样才可以回去编辑一下啊?",

- "本次入住酒店的网络不是很稳定,断断续续,希望能够改进。"};

- int batch_size = data.size();

- std::vector>>

- batch_encode_inputs(batch_size);

- tokenizer.BatchEncode(&batch_encode_inputs,

- data,

- std::vector(),

- false,

- FLAGS_seq_len,

- true);

-

- std::vector input_ids(batch_size * FLAGS_seq_len);

- for (size_t i = 0; i < batch_size; i++) {

- std::copy(batch_encode_inputs[i]["input_ids"].begin(),

- batch_encode_inputs[i]["input_ids"].end(),

- input_ids.begin() + i * FLAGS_seq_len);

- }

- std::vector toke_type_ids(batch_size * FLAGS_seq_len);

- for (size_t i = 0; i < batch_size; i++) {

- std::copy(batch_encode_inputs[i]["token_type_ids"].begin(),

- batch_encode_inputs[i]["token_type_ids"].end(),

- toke_type_ids.begin() + i * FLAGS_seq_len);

- }

-

- Config config;

- config.SetModel(FLAGS_model_file, FLAGS_params_file);

- if (FLAGS_use_gpu) {

- config.EnableUseGpu(100, 0);

- }

- auto predictor = CreatePredictor(config);

-

- auto input_names = predictor->GetInputNames();

- std::vector shape{batch_size, FLAGS_seq_len};

- PrepareInput(predictor.get(), input_ids, input_names[0], shape);

- PrepareInput(predictor.get(), toke_type_ids, input_names[1], shape);

-

- predictor->Run();

- std::vector logits;

- auto output_names = predictor->GetOutputNames();

- GetOutput(predictor.get(), output_names[0], &logits);

- for (size_t i = 0; i < data.size(); i++) {

- std::string label =

- (logits[i * 2] < logits[i * 2 + 1]) ? "negative" : "positive";

- std::cout << data[i] << " : " << label << std::endl;

- }

- return 0;

-}

\ No newline at end of file

diff --git a/examples/text_classification/pretrained_models/deploy/cpp/tokenizer/CMakeLists.txt b/examples/text_classification/pretrained_models/deploy/cpp/tokenizer/CMakeLists.txt

deleted file mode 100644

index 125a775ba676..000000000000

--- a/examples/text_classification/pretrained_models/deploy/cpp/tokenizer/CMakeLists.txt

+++ /dev/null

@@ -1,3 +0,0 @@

-add_library(Tokenizer tokenizer.cc)

-link_directories("${PADDLE_LIB_THIRD_PARTY_PATH}utf8proc/lib")

-target_link_libraries(Tokenizer utf8proc)

\ No newline at end of file

diff --git a/examples/text_classification/pretrained_models/deploy/cpp/tokenizer/tokenizer.cc b/examples/text_classification/pretrained_models/deploy/cpp/tokenizer/tokenizer.cc

deleted file mode 100644

index 59d3abb3b1ed..000000000000

--- a/examples/text_classification/pretrained_models/deploy/cpp/tokenizer/tokenizer.cc

+++ /dev/null

@@ -1,763 +0,0 @@

-/* Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

-Licensed under the Apache License, Version 2.0 (the "License");

-you may not use this file except in compliance with the License.

-You may obtain a copy of the License at

- http://www.apache.org/licenses/LICENSE-2.0

-Unless required by applicable law or agreed to in writing, software

-distributed under the License is distributed on an "AS IS" BASIS,

-WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-See the License for the specific language governing permissions and

-limitations under the License. */

-

-#include

-

-#include

-#include

-#include

-#include

-#include

-#include

-#include

-#include

-#include

-

-#include

-

-#include "tokenizer.h"

-

-namespace tokenizer {

-

-using std::bad_cast;

-using std::codecvt_utf8;

-using std::cout;

-using std::endl;

-using std::exception;

-using std::ifstream;

-using std::min;

-using std::runtime_error;

-using std::unordered_map;

-using std::unordered_set;

-using std::shared_ptr;

-using std::string;

-using std::vector;

-using std::wstring;

-using std::wstring_convert;

-

-std::wstring_convert> kConverter;

-const wstring kStripChars = L" \t\n\r\v\f";

-

-void ConvertStrToWstr(const std::string& src, std::wstring* res) {

- *res = kConverter.from_bytes(src);

-}

-

-void ConvertWstrToStr(const std::wstring& src, std::string* res) {

- *res = kConverter.to_bytes(src);

-}

-

-void NormalizeNfd(const std::string& s, std::string* ret) {

- *ret = "";

- char* result = reinterpret_cast(

- utf8proc_NFD(reinterpret_cast(s.c_str())));

- if (result) {

- *ret = std::string(result);

- free(result);

- }

-}

-

-bool IsControl(const wchar_t& ch) {

- if (ch == L'\t' || ch == L'\n' || ch == L'\r') return false;

- auto cat = utf8proc_category(ch);

- if (cat == UTF8PROC_CATEGORY_CC || cat == UTF8PROC_CATEGORY_CF) return true;

- return false;

-}

-

-bool IsWhiteSpace(const wchar_t& ch) {

- if (ch == L' ' || ch == L'\t' || ch == L'\n' || ch == L'\r') return true;

- auto cat = utf8proc_category(ch);

- if (cat == UTF8PROC_CATEGORY_ZS) return true;

- return false;

-}

-

-bool IsPunctuation(const wchar_t& ch) {

- if ((ch >= 33 && ch <= 47) || (ch >= 58 && ch <= 64) ||

- (ch >= 91 && ch <= 96) || (ch >= 123 && ch <= 126))

- return true;

- auto cat = utf8proc_category(ch);

- if (cat == UTF8PROC_CATEGORY_PD || cat == UTF8PROC_CATEGORY_PS ||

- cat == UTF8PROC_CATEGORY_PE || cat == UTF8PROC_CATEGORY_PC ||

- cat == UTF8PROC_CATEGORY_PO // sometimes ¶ belong SO

- ||

- cat == UTF8PROC_CATEGORY_PI || cat == UTF8PROC_CATEGORY_PF)

- return true;

- return false;

-}

-

-bool IsStripChar(const wchar_t& ch) {

- return kStripChars.find(ch) != wstring::npos;

-}

-

-void Strip(const wstring& text, wstring* ret) {

- *ret = text;

- if (ret->empty()) return;

- size_t pos = 0;

- while (pos < ret->size() && IsStripChar(ret->at(pos))) pos++;

- if (pos != 0) *ret = ret->substr(pos, ret->size() - pos);

- pos = ret->size() - 1;

- while (IsStripChar(ret->at(pos))) pos--;

- ret->substr(0, pos + 1);

-}

-

-vector Split(const wstring& text) {

- vector result;

- boost::split(result, text, boost::is_any_of(kStripChars));

- return result;

-}

-

-void Split(const wstring& text, vector* result) {

- // vector result;

- boost::split(*result, text, boost::is_any_of(kStripChars));

- // return result;

-}

-

-void WhiteSpaceTokenize(const wstring& text, vector* res) {

- wstring stext;

- Strip(text, &stext);

- if (stext.empty()) {

- return;

- } else {

- Split(text, res);

- }

-}

-

-void ToLower(const wstring& s, wstring* res) {

- res->clear();

- res->resize(s.size());

- for (size_t i = 0; i < s.size(); i++) {

- res->at(i) = utf8proc_tolower(s[i]);

- }

-}

-

-void LoadVocab(const std::string& vocabFile, Vocab* vocab) {

- size_t index = 0;

- std::ifstream ifs(vocabFile, std::ifstream::in);

- if (!ifs) {

- throw std::runtime_error("open file failed");

- }

- std::string line;

- while (getline(ifs, line)) {

- std::wstring token;

- ConvertStrToWstr(line, &token);

- if (token.empty()) break;

- (*vocab)[token] = index;

- index++;

- }

-}

-

-BasicTokenizer::BasicTokenizer(bool do_lower_case /* = true */)

- : do_lower_case_(do_lower_case) {}

-

-void BasicTokenizer::clean_text(const wstring& text, wstring* output) const {

- output->clear();

- wchar_t space_char = L' ';

- for (const wchar_t& cp : text) {

- if (cp == 0 || cp == 0xfffd || IsControl(cp)) continue;

- if (IsWhiteSpace(cp))

- output->push_back(std::move(space_char));

- else

- output->push_back(std::move(cp));

- }

-}

-

-bool BasicTokenizer::is_chinese_char(const wchar_t& ch) const {

- if ((ch >= 0x4E00 && ch <= 0x9FFF) || (ch >= 0x3400 && ch <= 0x4DBF) ||

- (ch >= 0x20000 && ch <= 0x2A6DF) || (ch >= 0x2A700 && ch <= 0x2B73F) ||

- (ch >= 0x2B740 && ch <= 0x2B81F) || (ch >= 0x2B820 && ch <= 0x2CEAF) ||

- (ch >= 0xF900 && ch <= 0xFAFF) || (ch >= 0x2F800 && ch <= 0x2FA1F))

- return true;

- return false;

-}

-

-void BasicTokenizer::tokenize_chinese_chars(const wstring& text,

- wstring* output) const {

- wchar_t space_char = L' ';

- for (auto& ch : text) {

- if (is_chinese_char(ch)) {

- output->push_back(std::move(space_char));

- output->push_back(std::move(ch));

- output->push_back(std::move(space_char));

- } else {

- output->push_back(std::move(ch));

- }

- }

-}

-

-void BasicTokenizer::run_strip_accents(const wstring& text,

- wstring* output) const {

- // Strips accents from a piece of text.

- wstring unicode_text;

- try {

- string tmp, nor_tmp;

- ConvertWstrToStr(text, &tmp);

- NormalizeNfd(tmp, &nor_tmp);

- ConvertStrToWstr(nor_tmp, &unicode_text);

- } catch (bad_cast& e) {

- std::cout << e.what() << endl;

- *output = L"";

- return;

- }

- output->clear();

- for (auto& ch : unicode_text) {

- auto&& cat = utf8proc_category(ch);

- if (cat == UTF8PROC_CATEGORY_MN) continue;

- output->push_back(std::move(ch));

- }

-}

-

-void BasicTokenizer::run_split_on_punc(const wstring& text,

- vector* output) const {

- output->clear();

- size_t i = 0;

- bool start_new_word = true;

- while (i < text.size()) {

- wchar_t ch = text[i];

- if (IsPunctuation(ch)) {

- output->emplace_back(wstring(&ch, 1));

- start_new_word = true;

- } else {

- if (start_new_word) output->emplace_back(wstring());

- start_new_word = false;

- output->at(output->size() - 1) += ch;

- }

- i++;

- }

-}

-

-void BasicTokenizer::Tokenize(const string& text, vector* res) const {

- wstring tmp, c_text, unicode_text;

- ConvertStrToWstr(text, &tmp);

- clean_text(tmp, &c_text);

- tokenize_chinese_chars(c_text, &unicode_text);

-

- vector original_tokens;

- WhiteSpaceTokenize(unicode_text, &original_tokens);

-

- vector split_tokens;

- for (wstring& token : original_tokens) {

- if (do_lower_case_) {

- tmp.clear();

- ToLower(token, &tmp);

- wstring stoken;

- run_strip_accents(tmp, &stoken);

- }

- vector tokens;

- run_split_on_punc(token, &tokens);

- for (size_t i = 0; i < tokens.size(); ++i) {

- split_tokens.emplace_back(tokens[i]);

- }

- }

- WhiteSpaceTokenize(boost::join(split_tokens, L" "), res);

-}

-

-WordPieceTokenizer::WordPieceTokenizer(

- Vocab& vocab,

- const wstring& unk_token /* = L"[UNK]"*/,

- const size_t max_input_chars_per_word /* = 100 */)

- : vocab_(vocab),

- unk_token_(unk_token),

- max_input_chars_per_word_(max_input_chars_per_word) {}

-

-void WordPieceTokenizer::Tokenize(const wstring& text,

- vector* output_tokens) const {

- // vector output_tokens;

- vector tokens;

- WhiteSpaceTokenize(text, &tokens);

- for (auto& token : tokens) {

- if (token.size() > max_input_chars_per_word_) {

- output_tokens->emplace_back(unk_token_);

- }

- bool is_bad = false;

- size_t start = 0;

- vector sub_tokens;

- while (start < token.size()) {

- size_t end = token.size();

- wstring cur_sub_str;

- bool has_cur_sub_str = false;

- while (start < end) {

- wstring substr = token.substr(start, end - start);

- if (start > 0) substr = L"##" + substr;

- if (vocab_.find(substr) != vocab_.end()) {

- cur_sub_str = substr;

- has_cur_sub_str = true;

- break;

- }

- end--;

- }

- if (!has_cur_sub_str) {

- is_bad = true;

- break;

- }

- sub_tokens.emplace_back(cur_sub_str);

- start = end;

- }

- if (is_bad) {

- output_tokens->emplace_back(unk_token_);

- } else {

- for (size_t i = 0; i < sub_tokens.size(); ++i)