diff --git a/docs/index.md b/docs/index.md

index ee0323919..0f092ab76 100644

--- a/docs/index.md

+++ b/docs/index.md

@@ -8,152 +8,48 @@

./README.md:description

--8<--

-## 📝案例列表

-

-

-

-数学(AI for Math)

-

-| 问题类型 | 案例名称 | 优化算法 | 模型类型 | 训练方式 | 数据集 | 参考资料 |

-|-----|---------|-----|---------|----|---------|---------|

-| 微分方程 | [拉普拉斯方程](./zh/examples/laplace2d.md) | 机理驱动 | MLP | 无监督学习 | - | - |

-| 微分方程 | [伯格斯方程](./zh/examples/deephpms.md) | 机理驱动 | MLP | 无监督学习 | [Data](https://github.com/maziarraissi/DeepHPMs/tree/master/Data) | [Paper](https://arxiv.org/pdf/1801.06637.pdf) |

-| 微分方程 | [洛伦兹方程](./zh/examples/lorenz.md) | 数据驱动 | Transformer-Physx | 监督学习 | [Data](https://github.com/zabaras/transformer-physx) | [Paper](https://arxiv.org/abs/2010.03957) |

-| 微分方程 | [若斯叻方程](./zh/examples/rossler.md) | 数据驱动 | Transformer-Physx | 监督学习 | [Data](https://github.com/zabaras/transformer-physx) | [Paper](https://arxiv.org/abs/2010.03957) |

-| 算子学习 | [DeepONet](./zh/examples/deeponet.md) | 数据驱动 | MLP | 监督学习 | [Data](https://deepxde.readthedocs.io/en/latest/demos/operator/antiderivative_unaligned.html) | [Paper](https://export.arxiv.org/pdf/1910.03193.pdf) |

-| 微分方程 | 梯度增强的物理知识融合PDE求解coming soon | 机理驱动 | gPINN | 半监督学习 | - | [Paper](https://www.sciencedirect.com/science/article/abs/pii/S0045782522001438?via%3Dihub) |

-| 积分方程 | [沃尔泰拉积分方程](./zh/examples/volterra_ide.md) | 机理驱动 | MLP | 无监督学习 | - | [Project](https://github.com/lululxvi/deepxde/blob/master/examples/pinn_forward/Volterra_IDE.py) |

-

-

-技术科学(AI for Technology)

-

-| 问题类型 | 案例名称 | 优化算法 | 模型类型 | 训练方式 | 数据集 | 参考资料 |

-|-----|---------|-----|---------|----|---------|---------|

-| 定常不可压流体 | [2D 定常方腔流](./zh/examples/ldc2d_steady.md) | 机理驱动 | MLP | 无监督学习 | - | |

-| 定常不可压流体 | [2D 达西流](./zh/examples/darcy2d.md) | 机理驱动 | MLP | 无监督学习 | - | |

-| 定常不可压流体 | [2D 管道流](./zh/examples/labelfree_DNN_surrogate.md) | 机理驱动 | MLP | 无监督学习 | - | [Paper](https://arxiv.org/abs/1906.02382) |

-| 定常不可压流体 | [3D 血管瘤](./zh/examples/aneurysm.md) | 机理驱动 | MLP | 无监督学习 | [Data](https://paddle-org.bj.bcebos.com/paddlescience/datasets/aneurysm/aneurysm_dataset.tar) | [Project](https://docs.nvidia.com/deeplearning/modulus/modulus-v2209/user_guide/intermediate/adding_stl_files.html)|

-| 定常不可压流体 | [任意 2D 几何体绕流](./zh/examples/deepcfd.md) | 数据驱动 | DeepCFD | 监督学习 | - | [Paper](https://arxiv.org/abs/2004.08826)|

-| 非定常不可压流体 | [2D 非定常方腔流](./zh/examples/ldc2d_unsteady.md) | 机理驱动 | MLP | 无监督学习 | - | -|

-| 非定常不可压流体 | [Re100 2D 圆柱绕流](./zh/examples/cylinder2d_unsteady.md) | 机理驱动 | MLP | 半监督学习 | [Data](https://paddle-org.bj.bcebos.com/paddlescience/datasets/cylinder2d_unsteady_Re100/cylinder2d_unsteady_Re100_dataset.tar) | [Paper](https://arxiv.org/abs/2004.08826)|

-| 非定常不可压流体 | [Re100~750 2D 圆柱绕流](./zh/examples/cylinder2d_unsteady_transformer_physx.md) | 数据驱动 | Transformer-Physx | 监督学习 | [Data](https://github.com/zabaras/transformer-physx) | [Paper](https://arxiv.org/abs/2010.03957)|

-| 可压缩流体 | [2D 空气激波](./zh/examples/shock_wave.md) | 机理驱动 | PINN-WE | 无监督学习 | - | [Paper](https://arxiv.org/abs/2206.03864)|

-| 流固耦合 | [涡激振动](./zh/examples/viv.md) | 机理驱动 | MLP | 半监督学习 | [Data](https://github.com/PaddlePaddle/PaddleScience/blob/develop/examples/fsi/VIV_Training_Neta100.mat) | [Paper](https://arxiv.org/abs/2206.03864)|

-| 多相流 | [气液两相流](./zh/examples/bubble.md) | 机理驱动 | BubbleNet | 半监督学习 | [Data](https://paddle-org.bj.bcebos.com/paddlescience/datasets/BubbleNet/bubble.mat) | [Paper](https://pubs.aip.org/aip/adv/article/12/3/035153/2819394/Predicting-micro-bubble-dynamics-with-semi-physics)|

-| 多相流 | [twophasePINN](https://aistudio.baidu.com/projectdetail/5379212) | 机理驱动 | MLP | 无监督学习 | - | [Paper](https://doi.org/10.1016/j.mlwa.2021.100029)|

-| 多相流 | 非高斯渗透率场估计coming soon | 机理驱动 | cINN | 监督学习 | - | [Paper](https://pubs.aip.org/aip/adv/article/12/3/035153/2819394/Predicting-micro-bubble-dynamics-with-semi-physics)|

-| 流场高分辨率重构 | [2D 湍流流场重构](./docs/zh/examples/tempoGAN.md) | 数据驱动 | tempoGAN | 监督学习 | [Train Data](https://paddle-org.bj.bcebos.com/paddlescience/datasets/tempoGAN/2d_train.mat)

[Eval Data](https://paddle-org.bj.bcebos.com/paddlescience/datasets/tempoGAN/2d_valid.mat) | [Paper](https://dl.acm.org/doi/10.1145/3197517.3201304)|

-| 流场高分辨率重构 | [2D 湍流流场重构](https://aistudio.baidu.com/projectdetail/4493261?contributionType=1) | 数据驱动 | cycleGAN | 监督学习 | [Train Data](https://paddle-org.bj.bcebos.com/paddlescience/datasets/tempoGAN/2d_train.mat)

[Eval Data](https://paddle-org.bj.bcebos.com/paddlescience/datasets/tempoGAN/2d_valid.mat) | [Paper](https://arxiv.org/abs/2007.15324)|

-| 流场高分辨率重构 | [基于Voronoi嵌入辅助深度学习的稀疏传感器全局场重建](https://aistudio.baidu.com/projectdetail/5807904) | 数据驱动 | CNN | 监督学习 | [Data1](https://drive.google.com/drive/folders/1K7upSyHAIVtsyNAqe6P8TY1nS5WpxJ2c)

[Data2](https://drive.google.com/drive/folders/1pVW4epkeHkT2WHZB7Dym5IURcfOP4cXu)

[Data3](https://drive.google.com/drive/folders/1xIY_jIu-hNcRY-TTf4oYX1Xg4_fx8ZvD) | [Paper](https://arxiv.org/pdf/2202.11214.pdf) |

-| 流场高分辨率重构 | 基于扩散的流体超分重构coming soon | 数理融合 | DDPM | 监督学习 | - | [Paper](https://www.sciencedirect.com/science/article/pii/S0021999123000670)|

-| 受力分析 | [1D 欧拉梁变形](../examples/euler_beam/euler_beam.py) | 机理驱动 | MLP | 无监督学习 | - | - |

-| 受力分析 | [2D 平板变形](https://aistudio.baidu.com/aistudio/projectdetail/5792325) | 机理驱动 | MLP | 无监督学习 | - | - |

-| 受力分析 | [3D 连接件变形](./zh/examples/bracket.md) | 机理驱动 | MLP | 无监督学习 | [Data](https://paddle-org.bj.bcebos.com/paddlescience/datasets/bracket/bracket_dataset.tar) | [Tutorial](https://docs.nvidia.com/deeplearning/modulus/modulus-v2209/user_guide/foundational/linear_elasticity.html) |

-| 受力分析 | [结构震动模拟](./zh/examples/phylstm.md) | 机理驱动 | PhyLSTM | 监督学习 | [Data](https://paddle-org.bj.bcebos.com/paddlescience/datasets/PhyLSTM/data_boucwen.mat) | [Paper](https://arxiv.org/abs/2002.10253) |

-

-

-材料科学(AI for Material)

-

-| 问题类型 | 案例名称 | 优化算法 | 模型类型 | 训练方式 | 数据集 | 参考资料 |

-|-----|---------|-----|---------|----|---------|---------|

-| 材料设计 | [散射板设计(反问题)](./zh/examples/hpinns.md) | 数理融合 | 数据驱动 | 监督学习 | [Train Data](https://paddle-org.bj.bcebos.com/paddlescience/datasets/hPINNs/hpinns_holo_train.mat)

[Eval Data](https://paddle-org.bj.bcebos.com/paddlescience/datasets/hPINNs/hpinns_holo_valid.mat) | [Paper](https://arxiv.org/pdf/2102.04626.pdf) |

-| 材料生成 | 面向对称感知的周期性材料生成coming soon | 数据驱动 | SyMat | 监督学习 | - | - |

-

-

-地球科学(AI for Earth Science)

-

-| 问题类型 | 案例名称 | 优化算法 | 模型类型 | 训练方式 | 数据集 | 参考资料 |

-|-----|---------|-----|---------|----|---------|---------|

-| 天气预报 | [FourCastNet 气象预报](./zh/examples/fourcastnet.md) | 数据驱动 | FourCastNet | 监督学习 | [ERA5](https://app.globus.org/file-manager?origin_id=945b3c9e-0f8c-11ed-8daf-9f359c660fbd&origin_path=%2F~%2Fdata%2F) | [Paper](https://arxiv.org/pdf/2202.11214.pdf) |

-| 天气预报 | GraphCast 气象预报coming soon | 数据驱动 | GraphCastNet* | 监督学习 | - | [Paper](https://arxiv.org/abs/2212.12794) |

-| 大气污染物 | [UNet 污染物扩散](https://aistudio.baidu.com/projectdetail/5663515?channel=0&channelType=0&sUid=438690&shared=1&ts=1698221963752) | 数据驱动 | UNet | 监督学习 | [Data](https://aistudio.baidu.com/datasetdetail/198102) | - |

-

-## 🚀快速安装

-

-=== "方式1: 源码安装[推荐]"

-

- --8<--

- ./README.md:git_install

- --8<--

-

-=== "方式2: pip安装"

-

- ``` shell

- pip install paddlesci

- ```

-

-=== "[完整安装流程](./zh/install_setup.md)"

-

- ``` shell

- pip install paddlesci

- ```

-

- ``` shell

- pip install paddlesci

- ```

+

+

+!!! tip "快速安装"

+

+ === "方式1: 源码安装[推荐]"

+

+ --8<--

+ ./README.md:git_install

+ --8<--

+

+ === "方式2: pip安装"

+

+ ``` shell

+ pip install paddlesci

+ ```

+

+ === "[完整安装流程](./zh/install_setup.md)"

+

+ ``` shell

+ pip install paddlesci

+ ```

--8<--

-./README.md:feature

+./README.md:update

--8<--

--8<--

-./README.md:support

+./README.md:feature

--8<--

--8<--

-./README.md:contribution

+./README.md:support

--8<--

--8<--

-./README.md:collaboration

+./README.md:contribution

--8<--

--8<--

./README.md:thanks

--8<--

-- PaddleScience 的部分代码由以下优秀社区开发者贡献(按 [Contributors](https://github.com/PaddlePaddle/PaddleScience/graphs/contributors) 排序):

-

-

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

---8<--

-./README.md:cooperation

---8<--

-

--8<--

./README.md:license

--8<--

diff --git a/docs/zh/api/arch.md b/docs/zh/api/arch.md

index 03e4f6253..30358350e 100644

--- a/docs/zh/api/arch.md

+++ b/docs/zh/api/arch.md

@@ -7,7 +7,6 @@

- Arch

- MLP

- DeepONet

- - DeepPhyLSTM

- LorenzEmbedding

- RosslerEmbedding

- CylinderEmbedding

@@ -17,6 +16,5 @@

- ModelList

- AFNONet

- PrecipNet

- - UNetEx

show_root_heading: false

heading_level: 3

diff --git a/docs/zh/api/data/dataset.md b/docs/zh/api/data/dataset.md

index 96e64da56..f2e8f0708 100644

--- a/docs/zh/api/data/dataset.md

+++ b/docs/zh/api/data/dataset.md

@@ -1,4 +1,4 @@

-# Data.dataset(数据集) 模块

+# Data(数据) 模块

::: ppsci.data.dataset

handler: python

@@ -16,6 +16,4 @@

- LorenzDataset

- RosslerDataset

- VtuDataset

- - MeshAirfoilDataset

- - MeshCylinderDataset

show_root_heading: false

diff --git a/docs/zh/api/data/process/batch_transform.md b/docs/zh/api/data/process/batch_transform.md

index 4121821ee..fa3b426e6 100644

--- a/docs/zh/api/data/process/batch_transform.md

+++ b/docs/zh/api/data/process/batch_transform.md

@@ -1 +1 @@

-# Data.batch_transform(批预处理) 模块

+# Batch Transform(批预处理) 模块

diff --git a/docs/zh/api/data/process/transform.md b/docs/zh/api/data/process/transform.md

index 78647df16..3e7f511c6 100644

--- a/docs/zh/api/data/process/transform.md

+++ b/docs/zh/api/data/process/transform.md

@@ -1,4 +1,4 @@

-# Data.transform(预处理) 模块

+# Transform(预处理) 模块

::: ppsci.data.process.transform

handler: python

@@ -10,5 +10,4 @@

- Log1p

- CropData

- SqueezeData

- - FunctionalTransform

show_root_heading: false

diff --git a/docs/zh/api/lr_scheduler.md b/docs/zh/api/lr_scheduler.md

index 8de28be5b..8dfae5a6c 100644

--- a/docs/zh/api/lr_scheduler.md

+++ b/docs/zh/api/lr_scheduler.md

@@ -1,4 +1,4 @@

-# Optimizer.lr_scheduler(学习率) 模块

+# Lr_scheduler(学习率) 模块

::: ppsci.optimizer.lr_scheduler

handler: python

diff --git a/docs/zh/api/optimizer.md b/docs/zh/api/optimizer.md

index d220b82a9..6bb0e0e9c 100644

--- a/docs/zh/api/optimizer.md

+++ b/docs/zh/api/optimizer.md

@@ -1,4 +1,4 @@

-# Optimizer.optimizer(优化器) 模块

+# Optimizer(优化器) 模块

::: ppsci.optimizer.optimizer

handler: python

diff --git a/docs/zh/api/utils.md b/docs/zh/api/utils.md

new file mode 100644

index 000000000..8ce7878a3

--- /dev/null

+++ b/docs/zh/api/utils.md

@@ -0,0 +1,24 @@

+# Utils(工具) 模块

+

+::: ppsci.utils

+ handler: python

+ options:

+ members:

+ - initializer

+ - logger

+ - misc

+ - load_csv_file

+ - load_mat_file

+ - load_vtk_file

+ - run_check

+ - profiler

+ - AttrDict

+ - ExpressionSolver

+ - AverageMeter

+ - set_random_seed

+ - load_checkpoint

+ - load_pretrain

+ - save_checkpoint

+ - lambdify

+ show_root_heading: false

+ heading_level: 3

diff --git a/docs/zh/development.md b/docs/zh/development.md

index 8204d1c6b..4ceeb4763 100644

--- a/docs/zh/development.md

+++ b/docs/zh/development.md

@@ -1,58 +1,48 @@

# 开发指南

-本文档介绍如何基于 PaddleScience 套件进行代码开发并最终贡献到 PaddleScience 套件中。

-

-PaddleScience 相关的论文复现、API 开发任务开始之前需提交 RFC 文档,请参考:[PaddleScience RFC Template](https://github.com/PaddlePaddle/community/blob/master/rfcs/Science/template.md)

+本文档介绍如何基于 PaddleScience 套件进行代码开发并最终贡献到 PaddleScience 套件中

## 1. 准备工作

-1. 将 PaddleScience fork 到**自己的仓库**

-2. 克隆**自己仓库**里的 PaddleScience 到本地,并进入该目录

+1. 将 PaddleScience fork 到自己的仓库

+2. 克隆自己仓库里的 PaddleScience 到本地,并进入该目录

``` sh

- git clone -b develop https://github.com/USER_NAME/PaddleScience.git

+ git clone https://github.com/your_username/PaddleScience.git

cd PaddleScience

```

- 上方 `clone` 命令中的 `USER_NAME` 字段请填入的自己的用户名。

-

3. 安装必要的依赖包

``` sh

- pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

+ pip install -r requirements.txt

+ # 安装较慢时可以加上-i选项,提升下载速度

+ # pip install -r requirements.txt -i https://pypi.douban.com/simple/

```

-4. 基于当前所在的 `develop` 分支,新建一个分支(假设新分支名字为 `dev_model`)

+4. 基于 develop 分支,新建一个新分支(假设新分支名字为dev_model)

``` sh

git checkout -b "dev_model"

```

-5. 添加 PaddleScience 目录到系统环境变量 `PYTHONPATH` 中

+5. 添加 PaddleScience 目录到系统环境变量 PYTHONPATH 中

``` sh

export PYTHONPATH=$PWD:$PYTHONPATH

```

-6. 执行以下代码,验证安装的 PaddleScience 基础功能是否正常

-

- ``` sh

- python -c "import ppsci; ppsci.run_check()"

- ```

-

- 如果出现 PaddleScience is installed successfully.✨ 🍰 ✨,则说明安装验证成功。

-

## 2. 编写代码

-完成上述准备工作后,就可以基于 PaddleScience 开始开发自己的案例或者功能了。

-

-假设新建的案例代码文件路径为:`PaddleScience/examples/demo/demo.py`,接下来开始详细介绍这一流程

+完成上述准备工作后,就可以基于 PaddleScience 提供的 API 开始编写自己的案例代码了,接下来开始详细介绍

+这一过程。

### 2.1 导入必要的包

-PaddleScience 所提供的 API 全部在 `ppsci.*` 模块下,因此在 `demo.py` 的开头首先需要导入 `ppsci` 这个顶层模块,接着导入日志打印模块 `logger`,方便打印日志时自动记录日志到本地文件中,最后再根据您自己的需要,导入其他必要的模块。

+PaddleScience 所提供的 API 全部在 `ppsci` 模块下,因此在代码文件的开头首先需要导入 `ppsci` 这个顶

+层模块以及日志打印模块,然后再根据您自己的需要,导入其他必要的模块。

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

import ppsci

from ppsci.utils import logger

@@ -62,75 +52,59 @@ from ppsci.utils import logger

### 2.2 设置运行环境

-在运行 `demo.py` 之前,需要进行一些必要的运行环境设置,如固定随机种子(保证实验可复现性)、设置输出目录并初始化日志打印模块(保存重要实验数据)。

+在运行 python 的主体代码之前,我们同样需要设置一些必要的运行环境,比如固定随机种子、设置模型/日志保存

+目录、初始化日志打印模块。

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

if __name__ == "__main__":

# set random seed for reproducibility

ppsci.utils.misc.set_random_seed(42)

# set output directory

- OUTPUT_DIR = "./output_example"

+ output_dir = "./output_example"

# initialize logger

- logger.init_logger("ppsci", f"{OUTPUT_DIR}/train.log", "info")

+ logger.init_logger("ppsci", f"{output_dir}/train.log", "info")

```

-完成上述步骤之后,`demo.py` 已经搭好了必要框架。接下来介绍如何基于自己具体的需求,对 `ppsci.*` 下的其他模块进行开发或者复用,以最终在 `demo.py` 中使用。

-

### 2.3 构建模型

#### 2.3.1 构建已有模型

-PaddleScience 内置了一些常见的模型,如 `MLP` 模型,如果您想使用这些内置的模型,可以直接调用 [`ppsci.arch.*`](./api/arch.md) 下的 API,并填入模型实例化所需的参数,即可快速构建模型。

+PaddleScience 内置了一些常见的模型,如 `MLP` 模型,如果您想使用这些内置的模型,可以直接调用

+[`ppsci.arch`](./api/arch.md) 下的 API,并填入模型实例化所需的参数,即可快速构建模型。

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

# create a MLP model

model = ppsci.arch.MLP(("x", "y"), ("u", "v", "p"), 9, 50, "tanh")

```

-上述代码实例化了一个 `MLP` 全连接模型,其输入数据为两个字段:`"x"`、`"y"`,输出数据为三个字段:`"u"`、`"v"`、`"w"`;模型具有 $9$ 层隐藏层,每层的神经元个数为 $50$ 个,每层隐藏层使用的激活函数均为 $\tanh$ 双曲正切函数。

-

#### 2.3.2 构建新的模型

-当 PaddleScience 内置的模型无法满足您的需求时,您就可以通过新增模型文件并编写模型代码的方式,使用您自定义的模型,步骤如下:

+当 PaddleScienc 内置的模型无法满足您的需求时,您也可以通过新增模型文件并编写模型代码的方式,使用您自

+定义的模型,步骤如下:

-1. 在 `ppsci/arch/` 文件夹下新建模型结构文件,以 `new_model.py` 为例。

-2. 在 `new_model.py` 文件中导入 PaddleScience 的模型基类所在的模块 `base`,并从 `base.Arch` 派生出您想创建的新模型类(以

-`Class NewModel` 为例)。

+1. 在 `ppsci/arch` 下新建模型结构文件,以 `new_model.py` 为例

+2. 在 `new_model.py` 文件中导入 PaddleScience 的模型基类所在模块 `base`,并让创建的新模型类(以

+`Class NewModel` 为例)从 `base.Arch` 继承。

- ``` py title="ppsci/arch/new_model.py"

+ ``` py linenums="1" title="ppsci/arch/new_model.py"

from ppsci.arch import base

class NewModel(base.Arch):

def __init__(self, ...):

...

- # initialization

+ # init

def forward(self, ...):

...

# forward

```

-3. 编写 `NewModel.__init__` 方法,其被用于模型创建时的初始化操作,包括模型层、参数变量初始化;然后再编写 `NewModel.forward` 方法,其定义了模型从接受输入、计算输出这一过程。以 `MLP.__init__` 和 `MLP.forward` 为例,如下所示。

-

- === "MLP.\_\_init\_\_"

-

- ``` py

- --8<--

- ppsci/arch/mlp.py:73:138

- --8<--

- ```

-

- === "MLP.forward"

-

- ``` py

- --8<--

- ppsci/arch/mlp.py:140:167

- --8<--

- ```

+3. 编写 `__init__` 代码,用于模型创建时的初始化;然后再编写 `forward` 代码,用于定义模型接受输入、

+计算输出这一前向过程。

-4. 在 `ppsci/arch/__init__.py` 中导入编写的新模型类 `NewModel`,并添加到 `__all__` 中

+4. 在 `ppsci/arch/__init__.py` 中导入编写的新模型类,并添加到 `__all__` 中

- ``` py title="ppsci/arch/__init__.py" hl_lines="3 8"

+ ``` py linenums="1" title="ppsci/arch/__init__.py" hl_lines="3 8"

...

...

from ppsci.arch.new_model import NewModel

@@ -142,11 +116,8 @@ model = ppsci.arch.MLP(("x", "y"), ("u", "v", "p"), 9, 50, "tanh")

]

```

-完成上述新模型代码编写的工作之后,在 `demo.py` 中,就能通过调用 `ppsci.arch.NewModel`,实例化刚才编写的模型,如下所示。

-

-``` py title="examples/demo/demo.py"

-model = ppsci.arch.NewModel(...)

-```

+完成上述新模型代码编写的工作之后,我们就能像 PaddleScience 内置模型一样,以

+`ppsci.arch.NewModel` 的方式,调用我们编写的新模型类,并用于创建模型实例。

### 2.4 构建方程

@@ -155,103 +126,39 @@ model = ppsci.arch.NewModel(...)

#### 2.4.1 构建已有方程

PaddleScience 内置了一些常见的方程,如 `NavierStokes` 方程,如果您想使用这些内置的方程,可以直接

-调用 [`ppsci.equation.*`](./api/equation.md) 下的 API,并填入方程实例化所需的参数,即可快速构建方程。

+调用 [`ppsci.equation`](./api/equation.md) 下的 API,并填入方程实例化所需的参数,即可快速构建模型。

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

# create a Vibration equation

viv_equation = ppsci.equation.Vibration(2, -4, 0)

```

#### 2.4.2 构建新的方程

-当 PaddleScience 内置的方程无法满足您的需求时,您也可以通过新增方程文件并编写方程代码的方式,使用您自定义的方程。

-

-假设需要计算的方程公式如下所示。

-

-$$

-\begin{cases}

- \begin{align}

- \dfrac{\partial u}{\partial x} + \dfrac{\partial u}{\partial y} &= u + 1, \tag{1} \\

- \dfrac{\partial v}{\partial x} + \dfrac{\partial v}{\partial y} &= v. \tag{2}

- \end{align}

-\end{cases}

-$$

-

-> 其中 $x$, $y$ 为模型输入,表示$x$、$y$轴坐标;$u=u(x,y)$、$v=v(x,y)$ 是模型输出,表示 $(x,y)$ 处的 $x$、$y$ 轴方向速度。

-

-首先我们需要将上述方程进行适当移项,将含有变量、函数的项移动到等式左侧,含有常数的项移动到等式右侧,方便后续转换成程序代码,如下所示。

-

-$$

-\begin{cases}

- \begin{align}

- \dfrac{\partial u}{\partial x} + \dfrac{\partial u}{\partial y} - u &= 1, \tag{3}\\

- \dfrac{\partial v}{\partial x} + \dfrac{\partial v}{\partial y} - v &= 0. \tag{4}

- \end{align}

-\end{cases}

-$$

+当 PaddleScienc 内置的方程无法满足您的需求时,您也可以通过新增方程文件并编写方程代码的方式,使用您自

+定义的方程,步骤如下:

-然后就可以将上述移项后的方程组根据以下步骤转换成对应的程序代码。

+1. 在 `ppsci/equation/pde` 下新建方程文件(如果您的方程并不是 PDE 方程,那么需要新建一个方程类文件

+夹,比如在 `ppsci/equation` 下新建 `ode` 文件夹,再将您的方程文件放在 `ode` 文件夹下,此处以 PDE

+类的方程 `new_pde.py` 为例)

-1. 在 `ppsci/equation/pde/` 下新建方程文件。如果您的方程并不是 PDE 方程,那么需要新建一个方程类文件夹,比如在 `ppsci/equation/` 下新建 `ode` 文件夹,再将您的方程文件放在 `ode` 文件夹下。此处以PDE类的方程 `new_pde.py` 为例。

+2. 在 `new_pde.py` 文件中导入 PaddleScience 的方程基类所在模块 `base`,并让创建的新方程类

+(以`Class NewPDE` 为例)从 `base.PDE` 继承

-2. 在 `new_pde.py` 文件中导入 PaddleScience 的方程基类所在模块 `base`,并从 `base.PDE` 派生 `Class NewPDE`。

-

- ``` py title="ppsci/equation/pde/new_pde.py"

+ ``` py linenums="1" title="ppsci/equation/pde/new_pde.py"

from ppsci.equation.pde import base

class NewPDE(base.PDE):

+ def __init__(self, ...):

+ ...

+ # init

```

-3. 编写 `__init__` 代码,用于方程创建时的初始化,在其中定义必要的变量和公式计算过程。PaddleScience 支持使用 sympy 符号计算库创建方程和直接使用 python 函数编写方程,两种方式如下所示。

-

- === "sympy expression"

-

- ``` py title="ppsci/equation/pde/new_pde.py"

- from ppsci.equation.pde import base

-

- class NewPDE(base.PDE):

- def __init__(self):

- x, y = self.create_symbols("x y") # 创建自变量 x, y

- u = self.create_function("u", (x, y)) # 创建关于自变量 (x, y) 的函数 u(x,y)

- v = self.create_function("v", (x, y)) # 创建关于自变量 (x, y) 的函数 v(x,y)

-

- expr1 = u.diff(x) + u.diff(y) - u # 对应等式(3)左侧表达式

- expr2 = v.diff(x) + v.diff(y) - v # 对应等式(4)左侧表达式

-

- self.add_equation("expr1", expr1) # 将expr1 的 sympy 表达式对象添加到 NewPDE 对象的公式集合中

- self.add_equation("expr2", expr2) # 将expr2 的 sympy 表达式对象添加到 NewPDE 对象的公式集合中

- ```

-

- === "python function"

-

- ``` py title="ppsci/equation/pde/new_pde.py"

- from ppsci.autodiff import jacobian

-

- from ppsci.equation.pde import base

-

- class NewPDE(base.PDE):

- def __init__(self):

- def expr1_compute_func(out):

- x, y = out["x"], out["y"] # 从 out 数据字典中取出自变量 x, y 的数据值

- u = out["u"] # 从 out 数据字典中取出因变量 u 的函数值

-

- expr1 = jacobian(u, x) + jacobian(u, y) - u # 对应等式(3)左侧表达式计算过程

- return expr1 # 返回计算结果值

-

- def expr2_compute_func(out):

- x, y = out["x"], out["y"] # 从 out 数据字典中取出自变量 x, y 的数据值

- v = out["v"] # 从 out 数据字典中取出因变量 v 的函数值

-

- expr2 = jacobian(v, x) + jacobian(v, y) - v # 对应等式(4)左侧表达式计算过程

- return expr2

-

- self.add_equation("expr1", expr1_compute_func) # 将 expr1 的计算函数添加到 NewPDE 对象的公式集合中

- self.add_equation("expr2", expr2_compute_func) # 将 expr2 的计算函数添加到 NewPDE 对象的公式集合中

- ```

+3. 编写 `__init__` 代码,用于方程创建时的初始化

4. 在 `ppsci/equation/__init__.py` 中导入编写的新方程类,并添加到 `__all__` 中

- ``` py title="ppsci/equation/__init__.py" hl_lines="3 8"

+ ``` py linenums="1" title="ppsci/equation/__init__.py" hl_lines="3 8"

...

...

from ppsci.equation.pde.new_pde import NewPDE

@@ -263,150 +170,29 @@ $$

]

```

-完成上述新方程代码编写的工作之后,我们就能像 PaddleScience 内置方程一样,以 `ppsci.equation.NewPDE` 的方式,调用我们编写的新方程类,并用于创建方程实例。

+完成上述新方程代码编写的工作之后,我们就能像 PaddleScience 内置方程一样,以

+`ppsci.equation.NewPDE` 的方式,调用我们编写的新方程类,并用于创建方程实例。

在方程构建完毕后之后,我们需要将所有方程包装为到一个字典中

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

new_pde = ppsci.equation.NewPDE(...)

-equation = {..., "newpde": new_pde}

-```

-

-### 2.5 构建几何模块[可选]

-

-模型训练、验证时所用的输入、标签数据的来源,根据具体案例场景的不同而变化。大部分基于 PINN 的案例,其数据来自几何形状内部、表面采样得到的坐标点、法向量、SDF 值;而基于数据驱动的方法,其输入、标签数据大多数来自于外部文件,或通过 numpy 等第三方库构造的存放在内存中的数据。本章节主要对第一种情况所需的几何模块进行介绍,第二种情况则不一定需要几何模块,其构造方式可以参考 [#2.6 构建约束条件](#2.6)。

-

-#### 2.5.1 构建已有几何

-

-PaddleScience 内置了几类常用的几何形状,包括简单几何、复杂几何,如下所示。

-

-| 几何调用方式 | 含义 |

-| -- | -- |

-|`ppsci.geometry.Interval`| 1 维线段几何|

-|`ppsci.geometry.Disk`| 2 维圆面几何|

-|`ppsci.geometry.Polygon`| 2 维多边形几何|

-|`ppsci.geometry.Rectangle` | 2 维矩形几何|

-|`ppsci.geometry.Triangle` | 2 维三角形几何|

-|`ppsci.geometry.Cuboid` | 3 维立方体几何|

-|`ppsci.geometry.Sphere` | 3 维圆球几何|

-|`ppsci.geometry.Mesh` | 3 维 Mesh 几何|

-|`ppsci.geometry.PointCloud` | 点云几何|

-|`ppsci.geometry.TimeDomain` | 1 维时间几何(常用于瞬态问题)|

-|`ppsci.geometry.TimeXGeometry` | 1 + N 维带有时间的几何(常用于瞬态问题)|

-

-以计算域为 2 维矩形几何为例,实例化一个 x 轴边长为2,y 轴边长为 1,且左下角为点 (-1,-3) 的矩形几何代码如下:

-

-``` py title="examples/demo/demo.py"

-LEN_X, LEN_Y = 2, 1 # 定义矩形边长

-rect = ppsci.geometry.Rectangle([-1, -3], [-1 + LEN_X, -3 + LEN_Y]) # 通过左下角、右上角对角线坐标构造矩形

-```

-

-其余的几何体构造方法类似,参考 API 文档的 [ppsci.geometry](./api/geometry.md) 部分即可。

-

-#### 2.5.2 构建新的几何

-

-下面以构建一个新的几何体 —— 2 维椭圆(无旋转)为例进行介绍。

-

-1. 首先我们需要在二维几何的代码文件 `ppsci/geometry/geometry_2d.py` 中新建椭圆类 `Ellipse`,并制定其直接父类为 `geometry.Geometry` 几何基类。

-然后根据椭圆的代数表示公式:$\dfrac{x^2}{a^2} + \dfrac{y^2}{b^2} = 1$,可以发现表示一个椭圆需要记录其圆心坐标 $(x_0,y_0)$、$x$ 轴半径 $a$、$y$ 轴半径 $b$。因此该椭圆类的代码如下所示。

-

- ``` py title="ppsci/geometry/geometry_2d.py"

- class Ellipse(geometry.Geometry):

- def __init__(self, x0: float, y0: float, a: float, b: float)

- self.center = np.array((x0, y0), dtype=paddle.get_default_dtype())

- self.a = a

- self.b = b

- ```

-

-2. 为椭圆类编写必要的基础方法,如下所示。

-

- - 判断给定点集是否在椭圆内部

-

- ``` py title="ppsci/geometry/geometry_2d.py"

- def is_inside(self, x):

- return ((x / self.center) ** 2).sum(axis=1) < 1

- ```

-

- - 判断给定点集是否在椭圆边界上

-

- ``` py title="ppsci/geometry/geometry_2d.py"

- def on_boundary(self, x):

- return np.isclose(((x / self.center) ** 2).sum(axis=1), 1)

- ```

-

- - 在椭圆内部点随机采样(此处使用“拒绝采样法”实现)

-

- ``` py title="ppsci/geometry/geometry_2d.py"

- def random_points(self, n, random="pseudo"):

- res_n = n

- result = []

- max_radius = self.center.max()

- while (res_n < n):

- rng = sampler.sample(n, 2, random)

- r, theta = rng[:, 0], 2 * np.pi * rng[:, 1]

- x = np.sqrt(r) * np.cos(theta)

- y = np.sqrt(r) * np.sin(theta)

- candidate = max_radius * np.stack((x, y), axis=1) + self.center

- candidate = candidate[self.is_inside(candidate)]

- if len(candidate) > res_n:

- candidate = candidate[: res_n]

-

- result.append(candidate)

- res_n -= len(candidate)

- result = np.concatenate(result, axis=0)

- return result

- ```

-

- - 在椭圆边界随机采样(此处基于椭圆参数方程实现)

-

- ``` py title="ppsci/geometry/geometry_2d.py"

- def random_boundary_points(self, n, random="pseudo"):

- theta = 2 * np.pi * sampler.sample(n, 1, random)

- X = np.concatenate((self.a * np.cos(theta),self.b * np.sin(theta)), axis=1)

- return X + self.center

- ```

-

-3. 在 `ppsci/geometry/__init__.py` 中加入椭圆类 `Ellipse`,如下所示。

-

- ``` py title="ppsci/geometry/__init__.py" hl_lines="3 8"

- ...

- ...

- from ppsci.geometry.geometry_2d import Ellipse

-

- __all__ = [

- ...,

- ...,

- "Ellipse",

- ]

- ```

-

-完成上述实现之后,我们就能以如下方式实例化椭圆类。同样地,建议将所有几何类实例包装在一个字典中,方便后续索引。

-

-``` py title="examples/demo/demo.py"

-ellipse = ppsci.geometry.Ellipse(0, 0, 2, 1)

-geom = {..., "ellipse": ellipse}

+equation = {"newpde": new_pde}

```

-### 2.6 构建约束条件

-

-无论是 PINNs 方法还是数据驱动方法,它们总是需要利用数据来指导网络模型的训练,而这一过程在 PaddleScience 中由 `Constraint`(约束)模块负责。

+### 2.5 构建约束条件

-#### 2.6.1 构建已有约束

+无论是 PINNs 方法还是数据驱动方法,它们总是需要构造数据来指导网络模型的训练,而利用数据指导网络训练的

+这一过程,在 PaddleScience 中由 `Constraint`(约束) 负责。

-PaddleScience 内置了一些常见的约束,如下所示。

+#### 2.5.1 构建已有约束

-|约束名称|功能|

-|--|--|

-|`ppsci.constraint.BoundaryConstraint`|边界约束|

-|`ppsci.constraint.InitialConstraint` |内部点初值约束|

-|`ppsci.constraint.IntegralConstraint` |边界积分约束|

-|`ppsci.constraint.InteriorConstraint`|内部点约束|

-|`ppsci.constraint.PeriodicConstraint` |边界周期约束|

-|`ppsci.constraint.SupervisedConstraint` |监督数据约束|

+PaddleScience 内置了一些常见的约束,如主要用于数据驱动模型的 `SupervisedConstraint` 监督约束,

+主要用于物理信息驱动的 `InteriorConstraint` 内部点约束,如果您想使用这些内置的约束,可以直接调用

+[`ppsci.constraint`](./api/constraint.md) 下的 API,并填入约束实例化所需的参数,即可快速构建约

+束条件。

-如果您想使用这些内置的约束,可以直接调用 [`ppsci.constraint.*`](./api/constraint.md) 下的 API,并填入约束实例化所需的参数,即可快速构建约束条件。

-

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

# create a SupervisedConstraint

sup_constraint = ppsci.constraint.SupervisedConstraint(

train_dataloader_cfg,

@@ -416,11 +202,9 @@ sup_constraint = ppsci.constraint.SupervisedConstraint(

)

```

-约束的参数填写方式,请参考对应的 API 文档参数说明和样例代码。

-

-#### 2.6.2 构建新的约束

+#### 2.5.2 构建新的约束

-当 PaddleScience 内置的约束无法满足您的需求时,您也可以通过新增约束文件并编写约束代码的方式,使用您自

+当 PaddleScienc 内置的约束无法满足您的需求时,您也可以通过新增约束文件并编写约束代码的方式,使用您自

定义的约束,步骤如下:

1. 在 `ppsci/constraint` 下新建约束文件(此处以约束 `new_constraint.py` 为例)

@@ -428,26 +212,20 @@ sup_constraint = ppsci.constraint.SupervisedConstraint(

2. 在 `new_constraint.py` 文件中导入 PaddleScience 的约束基类所在模块 `base`,并让创建的新约束

类(以 `Class NewConstraint` 为例)从 `base.PDE` 继承

- ``` py title="ppsci/constraint/new_constraint.py"

- from ppsci.constraint import base

-

- class NewConstraint(base.Constraint):

- ```

-

-3. 编写 `__init__` 方法,用于约束创建时的初始化。

-

- ``` py title="ppsci/constraint/new_constraint.py"

+ ``` py linenums="1" title="ppsci/constraint/new_constraint.py"

from ppsci.constraint import base

class NewConstraint(base.Constraint):

def __init__(self, ...):

...

- # initialization

+ # init

```

+3. 编写 `__init__` 代码,用于约束创建时的初始化

+

4. 在 `ppsci/constraint/__init__.py` 中导入编写的新约束类,并添加到 `__all__` 中

- ``` py title="ppsci/constraint/__init__.py" hl_lines="3 8"

+ ``` py linenums="1" title="ppsci/constraint/__init__.py" hl_lines="3 8"

...

...

from ppsci.constraint.new_constraint import NewConstraint

@@ -459,47 +237,38 @@ sup_constraint = ppsci.constraint.SupervisedConstraint(

]

```

-完成上述新约束代码编写的工作之后,我们就能像 PaddleScience 内置约束一样,以 `ppsci.constraint.NewConstraint` 的方式,调用我们编写的新约束类,并用于创建约束实例。

+完成上述新约束代码编写的工作之后,我们就能像 PaddleScience 内置约束一样,以

+`ppsci.constraint.NewConstraint` 的方式,调用我们编写的新约束类,并用于创建约束实例。

-``` py title="examples/demo/demo.py"

-new_constraint = ppsci.constraint.NewConstraint(...)

-constraint = {..., new_constraint.name: new_constraint}

-```

+### 2.6 定义超参数

-### 2.7 定义超参数

+在模型开始训练前,需要定义一些训练相关的超参数,如训练轮数、学习率等,如下所示

-在模型开始训练前,需要定义一些训练相关的超参数,如训练轮数、学习率等,如下所示。

-

-``` py title="examples/demo/demo.py"

-EPOCHS = 10000

-LEARNING_RATE = 0.001

+``` py linenums="1"

+epochs = 10000

+learning_rate = 0.001

```

-### 2.8 构建优化器

+### 2.7 构建优化器

-模型训练时除了模型本身,还需要定义一个用于更新模型参数的优化器,如下所示。

+模型训练时除了模型本身,还需要定义一个用于更新模型参数的优化器,如下所示

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

optimizer = ppsci.optimizer.Adam(0.001)(model)

```

-### 2.9 构建评估器[可选]

-

-#### 2.9.1 构建已有评估器

-

-PaddleScience 内置了一些常见的评估器,如下所示。

+### 2.8 构建评估器

-|评估器名称|功能|

-|--|--|

-|`ppsci.validator.GeometryValidator`|几何评估器|

-|`ppsci.validator.SupervisedValidator` |监督数据评估器|

+#### 2.8.1 构建已有评估器

-如果您想使用这些内置的评估器,可以直接调用 [`ppsci.validate.*`](./api/validate.md) 下的 API,并填入评估器实例化所需的参数,即可快速构建评估器。

+PaddleScience 内置了一些常见的评估器,如 `SupervisedValidator` 评估器,如果您想使用这些内置的评

+估器,可以直接调用 [`ppsci.validate`](./api/validate.md) 下的 API,并填入评估器实例化所需的参数,

+即可快速构建评估器。

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

# create a SupervisedValidator

eta_mse_validator = ppsci.validate.SupervisedValidator(

- valid_dataloader_cfg,

+ valida_dataloader_cfg,

ppsci.loss.MSELoss("mean"),

{"eta": lambda out: out["eta"], **equation["VIV"].equations},

metric={"MSE": ppsci.metric.MSE()},

@@ -507,35 +276,30 @@ eta_mse_validator = ppsci.validate.SupervisedValidator(

)

```

-#### 2.9.2 构建新的评估器

+#### 2.8.2 构建新的评估器

-当 PaddleScience 内置的评估器无法满足您的需求时,您也可以通过新增评估器文件并编写评估器代码的方式,使

+当 PaddleScienc 内置的评估器无法满足您的需求时,您也可以通过新增评估器文件并编写评估器代码的方式,使

用您自定义的评估器,步骤如下:

-1. 在 `ppsci/validate` 下新建评估器文件(此处以 `new_validator.py` 为例)。

+1. 在 `ppsci/validate` 下新建评估器文件(此处以 `new_validator.py` 为例)

-2. 在 `new_validator.py` 文件中导入 PaddleScience 的评估器基类所在模块 `base`,并让创建的新评估器类(以 `Class NewValidator` 为例)从 `base.Validator` 继承。

+2. 在 `new_validator.py` 文件中导入 PaddleScience 的评估器基类所在模块 `base`,并让创建的新评估

+器类(以 `Class NewValidator` 为例)从 `base.Validator` 继承

- ``` py title="ppsci/validate/new_validator.py"

- from ppsci.validate import base

-

- class NewValidator(base.Validator):

- ```

-

-3. 编写 `__init__` 代码,用于评估器创建时的初始化

-

- ``` py title="ppsci/validate/new_validator.py"

+ ``` py linenums="1" title="ppsci/validate/new_validator.py"

from ppsci.validate import base

class NewValidator(base.Validator):

def __init__(self, ...):

...

- # initialization

+ # init

```

-4. 在 `ppsci/validate/__init__.py` 中导入编写的新评估器类,并添加到 `__all__` 中。

+3. 编写 `__init__` 代码,用于评估器创建时的初始化

+

+4. 在 `ppsci/validate/__init__.py` 中导入编写的新评估器类,并添加到 `__all__` 中

- ``` py title="ppsci/validate/__init__.py" hl_lines="3 8"

+ ``` py linenums="1" title="ppsci/validate/__init__.py" hl_lines="3 8"

...

...

from ppsci.validate.new_validator import NewValidator

@@ -547,20 +311,23 @@ eta_mse_validator = ppsci.validate.SupervisedValidator(

]

```

-完成上述新评估器代码编写的工作之后,我们就能像 PaddleScience 内置评估器一样,以 `ppsci.validate.NewValidator` 的方式,调用我们编写的新评估器类,并用于创建评估器实例。同样地,在评估器构建完毕后之后,建议将所有评估器包装到一个字典中方便后续索引。

+完成上述新评估器代码编写的工作之后,我们就能像 PaddleScience 内置评估器一样,以

+`ppsci.equation.NewValidator` 的方式,调用我们编写的新评估器类,并用于创建评估器实例。

+

+在评估器构建完毕后之后,我们需要将所有评估器包装为到一个字典中

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

new_validator = ppsci.validate.NewValidator(...)

-validator = {..., new_validator.name: new_validator}

+validator = {new_validator.name: new_validator}

```

-### 2.10 构建可视化器[可选]

+### 2.9 构建可视化器

PaddleScience 内置了一些常见的可视化器,如 `VisualizerVtu` 可视化器等,如果您想使用这些内置的可视

-化器,可以直接调用 [`ppsci.visualizer.*`](./api/visualize.md) 下的 API,并填入可视化器实例化所需的

+化器,可以直接调用 [`ppsci.visulizer`](./api/visualize.md) 下的 API,并填入可视化器实例化所需的

参数,即可快速构建模型。

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

# manually collate input data for visualization,

# interior+boundary

vis_points = {}

@@ -570,7 +337,7 @@ for key in vis_interior_points:

)

visualizer = {

- "visualize_u_v": ppsci.visualize.VisualizerVtu(

+ "visulzie_u_v": ppsci.visualize.VisualizerVtu(

vis_points,

{"u": lambda d: d["u"], "v": lambda d: d["v"], "p": lambda d: d["p"]},

prefix="result_u_v",

@@ -580,11 +347,12 @@ visualizer = {

如需新增可视化器,步骤与其他模块的新增方法类似,此处不再赘述。

-### 2.11 构建Solver

+### 2.10 构建Solver

-[`Solver`](./api/solver.md) 是 PaddleScience 负责调用训练、评估、可视化的全局管理类。在训练开始前,需要把构建好的模型、约束、优化器等实例传给 `Solver` 以实例化,再调用它的内置方法进行训练、评估、可视化。

+[`Solver`](./api/solver.md) 是 PaddleScience 负责调用训练、评估、可视化的类,在训练开始前,需要把构建好的模型、约束、优化

+器等实例传给 Solver,再调用它的内置方法进行训练、评估、可视化。

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

# initialize solver

solver = ppsci.solver.Solver(

model,

@@ -592,7 +360,7 @@ solver = ppsci.solver.Solver(

output_dir,

optimizer,

lr_scheduler,

- EPOCHS,

+ epochs,

iters_per_epoch,

eval_during_train=True,

eval_freq=eval_freq,

@@ -602,85 +370,39 @@ solver = ppsci.solver.Solver(

)

```

-### 2.12 训练

+### 2.11 训练

-PaddleScience 模型的训练只需调用一行代码。

+PaddleScience 模型的训练只需调用一行代码

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

solver.train()

```

-### 2.13 评估

+### 2.12 评估

-PaddleScience 模型的评估只需调用一行代码。

+PaddleScience 模型的评估只需调用一行代码

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

solver.eval()

```

-### 2.14 可视化[可选]

+### 2.13 可视化

-若 `Solver` 实例化时传入了 `visualizer` 参数,则 PaddleScience 模型的可视化只需调用一行代码。

+PaddleScience 模型的可视化只需调用一行代码

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

solver.visualize()

```

-!!! tip "可视化方案"

-

- 对于一些复杂的案例,`Visualizer` 的编写成本并不低,并且不是任何数据类型都可以进行方便的可视化。因此可以在训练完成之后,手动构建用于预测的数据字典,再使用 `solver.predict` 得到模型预测结果,最后利用 `matplotlib` 等第三方库,对预测结果进行可视化并保存。

-

-## 3. 编写文档

-

-除了案例代码,PaddleScience 同时存放了对应案例的详细文档,使用 Markdown + [Mkdocs-Material](https://squidfunk.github.io/mkdocs-material/) 进行编写和渲染,撰写文档步骤如下。

-

-### 3.1 安装必要依赖包

-

-文档撰写过程中需进行即时渲染,预览文档内容以检查撰写的内容是否有误。因此需要按照如下命令,安装 mkdocs 相关依赖包。

-

-``` shell

-pip install -r docs/requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

-```

-

-### 3.2 撰写文档内容

-

-PaddleScience 文档基于 [Mkdocs-Material](https://squidfunk.github.io/mkdocs-material/)、[PyMdown](https://facelessuser.github.io/pymdown-extensions/extensions/arithmatex/) 等插件进行编写,其在 Markdown 语法基础上支持了多种扩展性功能,能极大地提升文档的美观程度和阅读体验。建议参考超链接内的文档内容,选择合适的功能辅助文档撰写。

-

-### 3.3 预览文档

-

-在 `PaddleScience/` 目录下执行以下命令,等待构建完成后,点击显示的链接进入本地网页预览文档内容。

-

-``` shell

-mkdocs serve

-

-# ====== 终端打印信息如下 ======

-# INFO - Building documentation...

-# INFO - Cleaning site directory

-# INFO - Documentation built in 20.95 seconds

-# INFO - [07:39:35] Watching paths for changes: 'docs', 'mkdocs.yml'

-# INFO - [07:39:35] Serving on http://127.0.0.1:8000/PaddlePaddle/PaddleScience/

-# INFO - [07:39:41] Browser connected: http://127.0.0.1:58903/PaddlePaddle/PaddleScience/

-# INFO - [07:40:41] Browser connected: http://127.0.0.1:58903/PaddlePaddle/PaddleScience/zh/development/

-```

-

-!!! tip "手动指定服务地址和端口号"

-

- 若默认端口号 8000 被占用,则可以手动指定服务部署的地址和端口,示例如下。

-

- ``` shell

- # 指定 127.0.0.1 为地址,8687 为端口号

- mkdocs serve -a 127.0.0.1:8687

- ```

-

-## 4. 整理代码并提交

+## 3. 整理代码并提交

-### 4.1 安装 pre-commit

+### 3.1 安装 pre-commit

PaddleScience 是一个开源的代码库,由多人共同参与开发,因此为了保持最终合入的代码风格整洁、一致,

PaddleScience 使用了包括 [isort](https://github.com/PyCQA/isort#installing-isort)、[black](https://github.com/psf/black) 等自动化代码检查、格式化插件,

让 commit 的代码遵循 python [PEP8](https://pep8.org/) 代码风格规范。

-因此在 commit 您的代码之前,请务必先执行以下命令安装 `pre-commit`。

+因此在 commit 你的代码之前,请务必先执行以下命令安装 `pre-commit`。

``` sh

pip install pre-commit

@@ -689,12 +411,12 @@ pre-commit install

关于 pre-commit 的详情请参考 [Paddle 代码风格检查指南](https://www.paddlepaddle.org.cn/documentation/docs/zh/develop/dev_guides/git_guides/codestyle_check_guide_cn.html)

-### 4.2 整理代码

+### 3.2 整理代码

在完成范例编写与训练后,确认结果无误,就可以整理代码。

使用 git 命令将所有新增、修改的代码文件以及必要的文档、图片等一并上传到自己仓库的 `dev_model` 分支上。

-### 4.3 提交 pull request

+### 3.3 提交 pull request

在 github 网页端切换到 `dev_model` 分支,并点击 "Contribute",再点击 "Open pull request" 按钮,

-将含有您的代码、文档、图片等内容的 `dev_model` 分支作为合入请求贡献到 PaddleScience。

+将含有你的代码、文档、图片等内容的 `dev_model` 分支作为合入请求贡献到 PaddleScience。

diff --git a/docs/zh/examples/NSFNet.md b/docs/zh/examples/NSFNet.md

new file mode 100644

index 000000000..2ba347562

--- /dev/null

+++ b/docs/zh/examples/NSFNet.md

@@ -0,0 +1,184 @@

+# NSFNet

+

+AI Studio快速体验

+

+=== "模型训练命令"

+

+ ``` sh

+ # VP_NSFNet1

+ python VP_NSFNet1.py

+

+ # VP_NSFNet2

+ python VP_NSFNet2.py

+

+ # VP_NSFNet3

+ python VP_NSFNet3.py

+ ```

+

+## 1. 背景简介

+ 最近几年,深度学习在很多领域取得了非凡的成就,尤其是计算机视觉和自然语言处理方面,而受启发于深度学习的快速发展,基于深度学习强大的函数逼近能力,神经网络在科学计算领域也取得了成功,现阶段的研究主要分为两大类,一类是将物理信息以及物理限制加入损失函数来对神经网络进行训练, 其代表有 PINN 以及 Deep Retz Net,另一类是通过数据驱动的深度神经网络算子,其代表有 FNO 以及 DeepONet。这些方法都在科学实践中获得了广泛应用,比如天气预测,量子化学,生物工程,以及计算流体等领域。而为充分探索PINN对流体方程的求解能力,本次复现[论文](https://arxiv.org/abs/2003.06496)作者先后使用具有解析解或数值解的二维、三维纳韦斯托克方程以及使用DNS方法进行高进度求解的数据集进行训练。论文实验表明PINN对不可压纳韦斯托克方程具有优秀的数值求解能力,本项目主要目标是使用PaddleScience复现论文所实现的高精度求解纳韦斯托克方程的代码。

+## 2. 问题定义

+本问题所使用的为最经典的PINN模型,对此不再赘述。

+

+主要介绍所求解的几类纳韦斯托克方程:

+

+不可压纳韦斯托克方程可以表示为:

+$$\frac{\partial \mathbf{u}}{\partial t}+(\mathbf{u} \cdot \nabla) \mathbf{u} =-\nabla p+\frac{1}{Re} \nabla^2 \mathbf{u} \quad \text { in } \Omega,$$

+

+$$\nabla \cdot \mathbf{u} =0 \quad \text { in } \Omega,$$

+

+$$\mathbf{u} =\mathbf{u}_{\Gamma} \quad \text { on } \Gamma_D,$$

+

+$$\frac{\partial \mathbf{u}}{\partial n} =0 \quad \text { on } \Gamma_N.$$

+

+### 2.1 Kovasznay flow

+$$u(x, y)=1-e^{\lambda x} \cos (2 \pi y),$$

+

+$$v(x, y)=\frac{\lambda}{2 \pi} e^{\lambda x} \sin (2 \pi y),$$

+

+$$p(x, y)=\frac{1}{2}\left(1-e^{2 \lambda x}\right),$$

+

+其中

+$$\lambda=\frac{1}{2 \nu}-\sqrt{\frac{1}{4 \nu^2}+4 \pi^2}, \quad \nu=\frac{1}{Re}=\frac{1}{40} .$$

+### 2.2 Cylinder wake

+该方程并没有解析解,为雷诺数为100的数值解,[AIstudio数据集](https://aistudio.baidu.com/datasetdetail/236213)。

+### 2.3 Beltrami flow

+$$u(x, y, z, t)= -a\left[e^{a x} \sin (a y+d z)+e^{a z} \cos (a x+d y)\right] e^{-d^2 t}, $$

+

+$$v(x, y, z, t)= -a\left[e^{a y} \sin (a z+d x)+e^{a x} \cos (a y+d z)\right] e^{-d^2 t}, $$

+

+$$w(x, y, z, t)= -a\left[e^{a z} \sin (a x+d y)+e^{a y} \cos (a z+d x)\right] e^{-d^2 t}, $$

+

+$$p(x, y, z, t)= -\frac{1}{2} a^2\left[e^{2 a x}+e^{2 a y}+e^{2 a z}+2 \sin (a x+d y) \cos (a z+d x) e^{a(y+z)} +2 \sin (a y+d z) \cos (a x+d y) e^{a(z+x)} +2 \sin (a z+d x) \cos (a y+d z) e^{a(x+y)}\right] e^{-2 d^2 t}.$$

+## 3. 问题求解

+为节约篇幅,问题求解以NSFNet3为例。

+### 3.1 模型构建

+本文使用PINN经典的MLP模型进行训练。

+``` py linenums="33"

+--8<--

+examples/NSFNet/VP_NSFNet3.py:175:175

+--8<--

+```

+### 3.2 超参数设定

+指定残差点、边界点、初值点的个数,以及可以指定边界损失函数和初值损失函数的权重

+``` py linenums="41"

+--8<--

+examples/NSFNet/VP_NSFNet3.py:178:186

+--8<--

+```

+### 3.3 数据生成

+因数据集为解析解,我们先构造解析解函数

+``` py linenums="10"

+--8<--

+examples/NSFNet/VP_NSFNet3.py:10:51

+--8<--

+```

+

+然后先后取边界点、初值点、以及用于计算残差的内部点(具体取法见[论文](https://arxiv.org/abs/2003.06496)节3.3)以及生成测试点。

+``` py linenums="53"

+--8<--

+examples/NSFNet/VP_NSFNet3.py:187:214

+--8<--

+```

+### 3.4 约束构建

+由于我们边界点和初值点具有解析解,因此我们使用监督约束

+``` py linenums="173"

+--8<--

+examples/NSFNet/VP_NSFNet3.py:266:277

+--8<--

+```

+

+其中alpha和beta为该损失函数的权重,在本代码中与论文中描述一致,都取为100

+

+使用内部点构造纳韦斯托克方程的残差约束

+``` py linenums="188"

+--8<--

+examples/NSFNet/VP_NSFNet3.py:280:297

+--8<--

+```

+### 3.5 评估器构建

+使用在数据生成时生成的测试点构造的测试集用于模型评估:

+``` py linenums="208"

+--8<--

+examples/NSFNet/VP_NSFNet3.py:305:313

+--8<--

+```

+

+### 3.6 优化器构建

+与论文中描述相同,我们使用分段学习率构造Adam优化器,其中可以通过调节_epoch_list_来调节训练轮数。

+``` py linenums="219"

+--8<--

+examples/NSFNet/VP_NSFNet3.py:316:325

+--8<--

+```

+

+### 3.7 模型训练与评估

+完成上述设置之后,只需要将上述实例化的对象按顺序传递给 `ppsci.solver.Solver`。

+

+``` py linenums="230"

+--8<--

+examples/NSFNet/VP_NSFNet3.py:328:345

+--8<--

+```

+

+最后启动训练即可:

+

+``` py linenums="249"

+--8<--

+examples/NSFNet/VP_NSFNet3.py:350:350

+--8<--

+```

+

+

+## 4. 完整代码

+``` py linenums="1" title="NSFNet.py"

+--8<--

+examples/NSFNet/VP_NSFNet1.py

+examples/NSFNet/VP_NSFNet2.py

+examples/NSFNet/VP_NSFNet3.py

+--8<--

+```

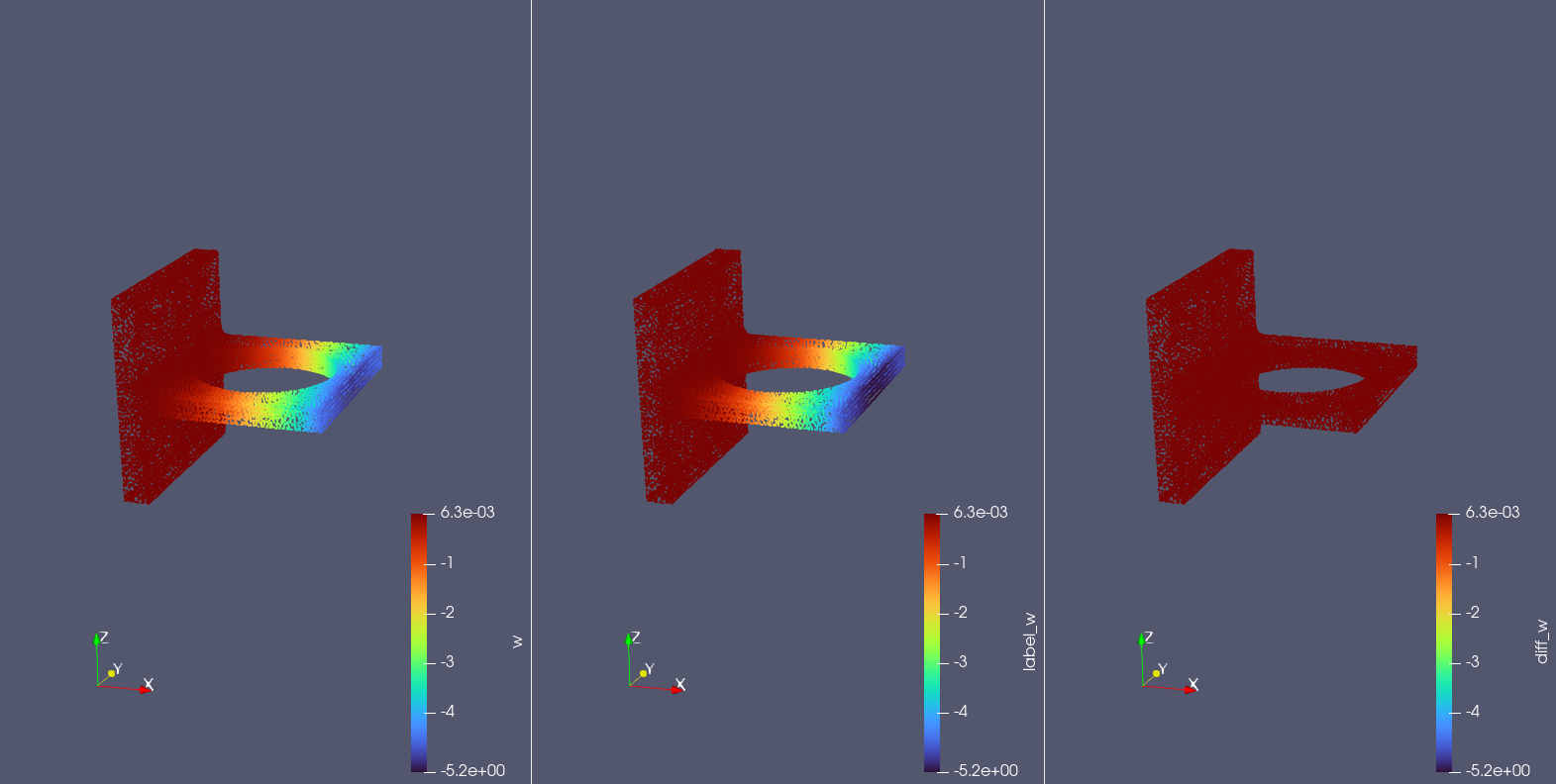

+## 5. 结果展示

+### NSFNet1:

+| size 4*50 | paper | code(without BFGS) | PaddleScience |

+|-------------------|--------|--------------------|---------|

+| u | 0.084% | 0.062% | 0.055% |

+| v | 0.425% | 0.431% | 0.399% |

+### NSFNet2:

+T=0

+| size 10*100| paper | code(without BFGS) | PaddleScience |

+|-------------------|--------|--------------------|---------|

+| u | /| 0.403% | 0.138% |

+| v | / | 1.5% | 0.488% |

+

+速度场

+

+

+

+涡流场(t=4.0)

+

+

+### NSFNet3:

+Test dataset:

+| size 10*100 | code(without BFGS) | PaddleScience |

+|-------------------|--------------------|---------|

+| u | 0.0766% | 0.059% |

+| v | 0.0689% | 0.082% |

+| w | 0.109% | 0.0732% |

+

+T=1

+| size 10*100 | paper | PaddleScience |

+|-------------------|--------|---------|

+| u | 0.426% | 0.115% |

+| v | 0.366% | 0.199% |

+| w | 0.587% | 0.217% |

+

+速度场 z=0

+

+

+## 6. 结果说明

+我们使用PINN对不可压纳韦斯托克方程进行数值求解。在PINN中,随机选取的时间和空间的坐标被当作输入值,所对应的速度场以及压强场被当作输出值,使用初值、边界条件当作监督约束以及纳韦斯托克方程本身的当作无监督约束条件加入损失函数进行训练。我们针对三个不同类型的PINN纳韦斯托克方程,设计了三个不同结构神经网络,即NSFNet1、NSFNet2、NSFNet3。通过损失函数的下降可以证明神经网络在求解纳韦斯托克方程中的收敛性,表明PINN拥有对不可压纳韦斯托克方程的求解能力。而通过实验结果表明,三个NSFNet方程都可以很好的逼近对应的纳韦斯托克方程,并且,我们发现增加边界约束以及初值约束的权重可以使得神经网络拥有更好的逼近效果。

+## 7. 参考资料

+[NSFnets (Navier-Stokes Flow nets): Physics-informed neural networks for the incompressible Navier-Stokes equations](https://arxiv.org/abs/2003.06496)

+

+[Github NSFnets](https://github.com/Alexzihaohu/NSFnets/tree/master)

diff --git a/docs/zh/examples/bracket.md b/docs/zh/examples/bracket.md

index b42ea7a34..747e1aaf9 100644

--- a/docs/zh/examples/bracket.md

+++ b/docs/zh/examples/bracket.md

@@ -2,34 +2,6 @@

-=== "模型训练命令"

-

- ``` sh

- # linux

- wget https://paddle-org.bj.bcebos.com/paddlescience/datasets/bracket/bracket_dataset.tar

- # windows

- # curl https://paddle-org.bj.bcebos.com/paddlescience/datasets/bracket/bracket_dataset.tar --output bracket_dataset.tar

- # unzip it

- tar -xvf bracket_dataset.tar

- python bracket.py

- ```

-

-=== "模型评估命令"

-

- ``` sh

- # linux

- wget https://paddle-org.bj.bcebos.com/paddlescience/datasets/bracket/bracket_dataset.tar

- # windows

- # curl https://paddle-org.bj.bcebos.com/paddlescience/datasets/bracket/bracket_dataset.tar --output bracket_dataset.tar

- # unzip it

- tar -xvf bracket_dataset.tar

- python bracket.py mode=eval EVAL.pretrained_model_path=https://paddle-org.bj.bcebos.com/paddlescience/models/bracket/bracket_pretrained.pdparams

- ```

-

-| 预训练模型 | 指标 |

-|:--| :--|

-| [bracket_pretrained.pdparams](https://paddle-org.bj.bcebos.com/paddlescience/models/bracket/bracket_pretrained.pdparams) | loss(commercial_ref_u_v_w_sigmas): 32.28704

-

---8<--

-./README.md:cooperation

---8<--

-

--8<--

./README.md:license

--8<--

diff --git a/docs/zh/api/arch.md b/docs/zh/api/arch.md

index 03e4f6253..30358350e 100644

--- a/docs/zh/api/arch.md

+++ b/docs/zh/api/arch.md

@@ -7,7 +7,6 @@

- Arch

- MLP

- DeepONet

- - DeepPhyLSTM

- LorenzEmbedding

- RosslerEmbedding

- CylinderEmbedding

@@ -17,6 +16,5 @@

- ModelList

- AFNONet

- PrecipNet

- - UNetEx

show_root_heading: false

heading_level: 3

diff --git a/docs/zh/api/data/dataset.md b/docs/zh/api/data/dataset.md

index 96e64da56..f2e8f0708 100644

--- a/docs/zh/api/data/dataset.md

+++ b/docs/zh/api/data/dataset.md

@@ -1,4 +1,4 @@

-# Data.dataset(数据集) 模块

+# Data(数据) 模块

::: ppsci.data.dataset

handler: python

@@ -16,6 +16,4 @@

- LorenzDataset

- RosslerDataset

- VtuDataset

- - MeshAirfoilDataset

- - MeshCylinderDataset

show_root_heading: false

diff --git a/docs/zh/api/data/process/batch_transform.md b/docs/zh/api/data/process/batch_transform.md

index 4121821ee..fa3b426e6 100644

--- a/docs/zh/api/data/process/batch_transform.md

+++ b/docs/zh/api/data/process/batch_transform.md

@@ -1 +1 @@

-# Data.batch_transform(批预处理) 模块

+# Batch Transform(批预处理) 模块

diff --git a/docs/zh/api/data/process/transform.md b/docs/zh/api/data/process/transform.md

index 78647df16..3e7f511c6 100644

--- a/docs/zh/api/data/process/transform.md

+++ b/docs/zh/api/data/process/transform.md

@@ -1,4 +1,4 @@

-# Data.transform(预处理) 模块

+# Transform(预处理) 模块

::: ppsci.data.process.transform

handler: python

@@ -10,5 +10,4 @@

- Log1p

- CropData

- SqueezeData

- - FunctionalTransform

show_root_heading: false

diff --git a/docs/zh/api/lr_scheduler.md b/docs/zh/api/lr_scheduler.md

index 8de28be5b..8dfae5a6c 100644

--- a/docs/zh/api/lr_scheduler.md

+++ b/docs/zh/api/lr_scheduler.md

@@ -1,4 +1,4 @@

-# Optimizer.lr_scheduler(学习率) 模块

+# Lr_scheduler(学习率) 模块

::: ppsci.optimizer.lr_scheduler

handler: python

diff --git a/docs/zh/api/optimizer.md b/docs/zh/api/optimizer.md

index d220b82a9..6bb0e0e9c 100644

--- a/docs/zh/api/optimizer.md

+++ b/docs/zh/api/optimizer.md

@@ -1,4 +1,4 @@

-# Optimizer.optimizer(优化器) 模块

+# Optimizer(优化器) 模块

::: ppsci.optimizer.optimizer

handler: python

diff --git a/docs/zh/api/utils.md b/docs/zh/api/utils.md

new file mode 100644

index 000000000..8ce7878a3

--- /dev/null

+++ b/docs/zh/api/utils.md

@@ -0,0 +1,24 @@

+# Utils(工具) 模块

+

+::: ppsci.utils

+ handler: python

+ options:

+ members:

+ - initializer

+ - logger

+ - misc

+ - load_csv_file

+ - load_mat_file

+ - load_vtk_file

+ - run_check

+ - profiler

+ - AttrDict

+ - ExpressionSolver

+ - AverageMeter

+ - set_random_seed

+ - load_checkpoint

+ - load_pretrain

+ - save_checkpoint

+ - lambdify

+ show_root_heading: false

+ heading_level: 3

diff --git a/docs/zh/development.md b/docs/zh/development.md

index 8204d1c6b..4ceeb4763 100644

--- a/docs/zh/development.md

+++ b/docs/zh/development.md

@@ -1,58 +1,48 @@

# 开发指南

-本文档介绍如何基于 PaddleScience 套件进行代码开发并最终贡献到 PaddleScience 套件中。

-

-PaddleScience 相关的论文复现、API 开发任务开始之前需提交 RFC 文档,请参考:[PaddleScience RFC Template](https://github.com/PaddlePaddle/community/blob/master/rfcs/Science/template.md)

+本文档介绍如何基于 PaddleScience 套件进行代码开发并最终贡献到 PaddleScience 套件中

## 1. 准备工作

-1. 将 PaddleScience fork 到**自己的仓库**

-2. 克隆**自己仓库**里的 PaddleScience 到本地,并进入该目录

+1. 将 PaddleScience fork 到自己的仓库

+2. 克隆自己仓库里的 PaddleScience 到本地,并进入该目录

``` sh

- git clone -b develop https://github.com/USER_NAME/PaddleScience.git

+ git clone https://github.com/your_username/PaddleScience.git

cd PaddleScience

```

- 上方 `clone` 命令中的 `USER_NAME` 字段请填入的自己的用户名。

-

3. 安装必要的依赖包

``` sh

- pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

+ pip install -r requirements.txt

+ # 安装较慢时可以加上-i选项,提升下载速度

+ # pip install -r requirements.txt -i https://pypi.douban.com/simple/

```

-4. 基于当前所在的 `develop` 分支,新建一个分支(假设新分支名字为 `dev_model`)

+4. 基于 develop 分支,新建一个新分支(假设新分支名字为dev_model)

``` sh

git checkout -b "dev_model"

```

-5. 添加 PaddleScience 目录到系统环境变量 `PYTHONPATH` 中

+5. 添加 PaddleScience 目录到系统环境变量 PYTHONPATH 中

``` sh

export PYTHONPATH=$PWD:$PYTHONPATH

```

-6. 执行以下代码,验证安装的 PaddleScience 基础功能是否正常

-

- ``` sh

- python -c "import ppsci; ppsci.run_check()"

- ```

-

- 如果出现 PaddleScience is installed successfully.✨ 🍰 ✨,则说明安装验证成功。

-

## 2. 编写代码

-完成上述准备工作后,就可以基于 PaddleScience 开始开发自己的案例或者功能了。

-

-假设新建的案例代码文件路径为:`PaddleScience/examples/demo/demo.py`,接下来开始详细介绍这一流程

+完成上述准备工作后,就可以基于 PaddleScience 提供的 API 开始编写自己的案例代码了,接下来开始详细介绍

+这一过程。

### 2.1 导入必要的包

-PaddleScience 所提供的 API 全部在 `ppsci.*` 模块下,因此在 `demo.py` 的开头首先需要导入 `ppsci` 这个顶层模块,接着导入日志打印模块 `logger`,方便打印日志时自动记录日志到本地文件中,最后再根据您自己的需要,导入其他必要的模块。

+PaddleScience 所提供的 API 全部在 `ppsci` 模块下,因此在代码文件的开头首先需要导入 `ppsci` 这个顶

+层模块以及日志打印模块,然后再根据您自己的需要,导入其他必要的模块。

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

import ppsci

from ppsci.utils import logger

@@ -62,75 +52,59 @@ from ppsci.utils import logger

### 2.2 设置运行环境

-在运行 `demo.py` 之前,需要进行一些必要的运行环境设置,如固定随机种子(保证实验可复现性)、设置输出目录并初始化日志打印模块(保存重要实验数据)。

+在运行 python 的主体代码之前,我们同样需要设置一些必要的运行环境,比如固定随机种子、设置模型/日志保存

+目录、初始化日志打印模块。

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

if __name__ == "__main__":

# set random seed for reproducibility

ppsci.utils.misc.set_random_seed(42)

# set output directory

- OUTPUT_DIR = "./output_example"

+ output_dir = "./output_example"

# initialize logger

- logger.init_logger("ppsci", f"{OUTPUT_DIR}/train.log", "info")

+ logger.init_logger("ppsci", f"{output_dir}/train.log", "info")

```

-完成上述步骤之后,`demo.py` 已经搭好了必要框架。接下来介绍如何基于自己具体的需求,对 `ppsci.*` 下的其他模块进行开发或者复用,以最终在 `demo.py` 中使用。

-

### 2.3 构建模型

#### 2.3.1 构建已有模型

-PaddleScience 内置了一些常见的模型,如 `MLP` 模型,如果您想使用这些内置的模型,可以直接调用 [`ppsci.arch.*`](./api/arch.md) 下的 API,并填入模型实例化所需的参数,即可快速构建模型。

+PaddleScience 内置了一些常见的模型,如 `MLP` 模型,如果您想使用这些内置的模型,可以直接调用

+[`ppsci.arch`](./api/arch.md) 下的 API,并填入模型实例化所需的参数,即可快速构建模型。

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

# create a MLP model

model = ppsci.arch.MLP(("x", "y"), ("u", "v", "p"), 9, 50, "tanh")

```

-上述代码实例化了一个 `MLP` 全连接模型,其输入数据为两个字段:`"x"`、`"y"`,输出数据为三个字段:`"u"`、`"v"`、`"w"`;模型具有 $9$ 层隐藏层,每层的神经元个数为 $50$ 个,每层隐藏层使用的激活函数均为 $\tanh$ 双曲正切函数。

-

#### 2.3.2 构建新的模型

-当 PaddleScience 内置的模型无法满足您的需求时,您就可以通过新增模型文件并编写模型代码的方式,使用您自定义的模型,步骤如下:

+当 PaddleScienc 内置的模型无法满足您的需求时,您也可以通过新增模型文件并编写模型代码的方式,使用您自

+定义的模型,步骤如下:

-1. 在 `ppsci/arch/` 文件夹下新建模型结构文件,以 `new_model.py` 为例。

-2. 在 `new_model.py` 文件中导入 PaddleScience 的模型基类所在的模块 `base`,并从 `base.Arch` 派生出您想创建的新模型类(以

-`Class NewModel` 为例)。

+1. 在 `ppsci/arch` 下新建模型结构文件,以 `new_model.py` 为例

+2. 在 `new_model.py` 文件中导入 PaddleScience 的模型基类所在模块 `base`,并让创建的新模型类(以

+`Class NewModel` 为例)从 `base.Arch` 继承。

- ``` py title="ppsci/arch/new_model.py"

+ ``` py linenums="1" title="ppsci/arch/new_model.py"

from ppsci.arch import base

class NewModel(base.Arch):

def __init__(self, ...):

...

- # initialization

+ # init

def forward(self, ...):

...

# forward

```

-3. 编写 `NewModel.__init__` 方法,其被用于模型创建时的初始化操作,包括模型层、参数变量初始化;然后再编写 `NewModel.forward` 方法,其定义了模型从接受输入、计算输出这一过程。以 `MLP.__init__` 和 `MLP.forward` 为例,如下所示。

-

- === "MLP.\_\_init\_\_"

-

- ``` py

- --8<--

- ppsci/arch/mlp.py:73:138

- --8<--

- ```

-

- === "MLP.forward"

-

- ``` py

- --8<--

- ppsci/arch/mlp.py:140:167

- --8<--

- ```

+3. 编写 `__init__` 代码,用于模型创建时的初始化;然后再编写 `forward` 代码,用于定义模型接受输入、

+计算输出这一前向过程。

-4. 在 `ppsci/arch/__init__.py` 中导入编写的新模型类 `NewModel`,并添加到 `__all__` 中

+4. 在 `ppsci/arch/__init__.py` 中导入编写的新模型类,并添加到 `__all__` 中

- ``` py title="ppsci/arch/__init__.py" hl_lines="3 8"

+ ``` py linenums="1" title="ppsci/arch/__init__.py" hl_lines="3 8"

...

...

from ppsci.arch.new_model import NewModel

@@ -142,11 +116,8 @@ model = ppsci.arch.MLP(("x", "y"), ("u", "v", "p"), 9, 50, "tanh")

]

```

-完成上述新模型代码编写的工作之后,在 `demo.py` 中,就能通过调用 `ppsci.arch.NewModel`,实例化刚才编写的模型,如下所示。

-

-``` py title="examples/demo/demo.py"

-model = ppsci.arch.NewModel(...)

-```

+完成上述新模型代码编写的工作之后,我们就能像 PaddleScience 内置模型一样,以

+`ppsci.arch.NewModel` 的方式,调用我们编写的新模型类,并用于创建模型实例。

### 2.4 构建方程

@@ -155,103 +126,39 @@ model = ppsci.arch.NewModel(...)

#### 2.4.1 构建已有方程

PaddleScience 内置了一些常见的方程,如 `NavierStokes` 方程,如果您想使用这些内置的方程,可以直接

-调用 [`ppsci.equation.*`](./api/equation.md) 下的 API,并填入方程实例化所需的参数,即可快速构建方程。

+调用 [`ppsci.equation`](./api/equation.md) 下的 API,并填入方程实例化所需的参数,即可快速构建模型。

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

# create a Vibration equation

viv_equation = ppsci.equation.Vibration(2, -4, 0)

```

#### 2.4.2 构建新的方程

-当 PaddleScience 内置的方程无法满足您的需求时,您也可以通过新增方程文件并编写方程代码的方式,使用您自定义的方程。

-

-假设需要计算的方程公式如下所示。

-

-$$

-\begin{cases}

- \begin{align}

- \dfrac{\partial u}{\partial x} + \dfrac{\partial u}{\partial y} &= u + 1, \tag{1} \\

- \dfrac{\partial v}{\partial x} + \dfrac{\partial v}{\partial y} &= v. \tag{2}

- \end{align}

-\end{cases}

-$$

-

-> 其中 $x$, $y$ 为模型输入,表示$x$、$y$轴坐标;$u=u(x,y)$、$v=v(x,y)$ 是模型输出,表示 $(x,y)$ 处的 $x$、$y$ 轴方向速度。

-

-首先我们需要将上述方程进行适当移项,将含有变量、函数的项移动到等式左侧,含有常数的项移动到等式右侧,方便后续转换成程序代码,如下所示。

-

-$$

-\begin{cases}

- \begin{align}

- \dfrac{\partial u}{\partial x} + \dfrac{\partial u}{\partial y} - u &= 1, \tag{3}\\

- \dfrac{\partial v}{\partial x} + \dfrac{\partial v}{\partial y} - v &= 0. \tag{4}

- \end{align}

-\end{cases}

-$$

+当 PaddleScienc 内置的方程无法满足您的需求时,您也可以通过新增方程文件并编写方程代码的方式,使用您自

+定义的方程,步骤如下:

-然后就可以将上述移项后的方程组根据以下步骤转换成对应的程序代码。

+1. 在 `ppsci/equation/pde` 下新建方程文件(如果您的方程并不是 PDE 方程,那么需要新建一个方程类文件

+夹,比如在 `ppsci/equation` 下新建 `ode` 文件夹,再将您的方程文件放在 `ode` 文件夹下,此处以 PDE

+类的方程 `new_pde.py` 为例)

-1. 在 `ppsci/equation/pde/` 下新建方程文件。如果您的方程并不是 PDE 方程,那么需要新建一个方程类文件夹,比如在 `ppsci/equation/` 下新建 `ode` 文件夹,再将您的方程文件放在 `ode` 文件夹下。此处以PDE类的方程 `new_pde.py` 为例。

+2. 在 `new_pde.py` 文件中导入 PaddleScience 的方程基类所在模块 `base`,并让创建的新方程类

+(以`Class NewPDE` 为例)从 `base.PDE` 继承

-2. 在 `new_pde.py` 文件中导入 PaddleScience 的方程基类所在模块 `base`,并从 `base.PDE` 派生 `Class NewPDE`。

-

- ``` py title="ppsci/equation/pde/new_pde.py"

+ ``` py linenums="1" title="ppsci/equation/pde/new_pde.py"

from ppsci.equation.pde import base

class NewPDE(base.PDE):

+ def __init__(self, ...):

+ ...

+ # init

```

-3. 编写 `__init__` 代码,用于方程创建时的初始化,在其中定义必要的变量和公式计算过程。PaddleScience 支持使用 sympy 符号计算库创建方程和直接使用 python 函数编写方程,两种方式如下所示。

-

- === "sympy expression"

-

- ``` py title="ppsci/equation/pde/new_pde.py"

- from ppsci.equation.pde import base

-

- class NewPDE(base.PDE):

- def __init__(self):

- x, y = self.create_symbols("x y") # 创建自变量 x, y

- u = self.create_function("u", (x, y)) # 创建关于自变量 (x, y) 的函数 u(x,y)

- v = self.create_function("v", (x, y)) # 创建关于自变量 (x, y) 的函数 v(x,y)

-

- expr1 = u.diff(x) + u.diff(y) - u # 对应等式(3)左侧表达式

- expr2 = v.diff(x) + v.diff(y) - v # 对应等式(4)左侧表达式

-

- self.add_equation("expr1", expr1) # 将expr1 的 sympy 表达式对象添加到 NewPDE 对象的公式集合中

- self.add_equation("expr2", expr2) # 将expr2 的 sympy 表达式对象添加到 NewPDE 对象的公式集合中

- ```

-

- === "python function"

-

- ``` py title="ppsci/equation/pde/new_pde.py"

- from ppsci.autodiff import jacobian

-

- from ppsci.equation.pde import base

-

- class NewPDE(base.PDE):

- def __init__(self):

- def expr1_compute_func(out):

- x, y = out["x"], out["y"] # 从 out 数据字典中取出自变量 x, y 的数据值

- u = out["u"] # 从 out 数据字典中取出因变量 u 的函数值

-

- expr1 = jacobian(u, x) + jacobian(u, y) - u # 对应等式(3)左侧表达式计算过程

- return expr1 # 返回计算结果值

-

- def expr2_compute_func(out):

- x, y = out["x"], out["y"] # 从 out 数据字典中取出自变量 x, y 的数据值

- v = out["v"] # 从 out 数据字典中取出因变量 v 的函数值

-

- expr2 = jacobian(v, x) + jacobian(v, y) - v # 对应等式(4)左侧表达式计算过程

- return expr2

-

- self.add_equation("expr1", expr1_compute_func) # 将 expr1 的计算函数添加到 NewPDE 对象的公式集合中

- self.add_equation("expr2", expr2_compute_func) # 将 expr2 的计算函数添加到 NewPDE 对象的公式集合中

- ```

+3. 编写 `__init__` 代码,用于方程创建时的初始化

4. 在 `ppsci/equation/__init__.py` 中导入编写的新方程类,并添加到 `__all__` 中

- ``` py title="ppsci/equation/__init__.py" hl_lines="3 8"

+ ``` py linenums="1" title="ppsci/equation/__init__.py" hl_lines="3 8"

...

...

from ppsci.equation.pde.new_pde import NewPDE

@@ -263,150 +170,29 @@ $$

]

```

-完成上述新方程代码编写的工作之后,我们就能像 PaddleScience 内置方程一样,以 `ppsci.equation.NewPDE` 的方式,调用我们编写的新方程类,并用于创建方程实例。

+完成上述新方程代码编写的工作之后,我们就能像 PaddleScience 内置方程一样,以

+`ppsci.equation.NewPDE` 的方式,调用我们编写的新方程类,并用于创建方程实例。

在方程构建完毕后之后,我们需要将所有方程包装为到一个字典中

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

new_pde = ppsci.equation.NewPDE(...)

-equation = {..., "newpde": new_pde}

-```

-

-### 2.5 构建几何模块[可选]

-

-模型训练、验证时所用的输入、标签数据的来源,根据具体案例场景的不同而变化。大部分基于 PINN 的案例,其数据来自几何形状内部、表面采样得到的坐标点、法向量、SDF 值;而基于数据驱动的方法,其输入、标签数据大多数来自于外部文件,或通过 numpy 等第三方库构造的存放在内存中的数据。本章节主要对第一种情况所需的几何模块进行介绍,第二种情况则不一定需要几何模块,其构造方式可以参考 [#2.6 构建约束条件](#2.6)。

-

-#### 2.5.1 构建已有几何

-

-PaddleScience 内置了几类常用的几何形状,包括简单几何、复杂几何,如下所示。

-

-| 几何调用方式 | 含义 |

-| -- | -- |

-|`ppsci.geometry.Interval`| 1 维线段几何|

-|`ppsci.geometry.Disk`| 2 维圆面几何|

-|`ppsci.geometry.Polygon`| 2 维多边形几何|

-|`ppsci.geometry.Rectangle` | 2 维矩形几何|

-|`ppsci.geometry.Triangle` | 2 维三角形几何|

-|`ppsci.geometry.Cuboid` | 3 维立方体几何|

-|`ppsci.geometry.Sphere` | 3 维圆球几何|

-|`ppsci.geometry.Mesh` | 3 维 Mesh 几何|

-|`ppsci.geometry.PointCloud` | 点云几何|

-|`ppsci.geometry.TimeDomain` | 1 维时间几何(常用于瞬态问题)|

-|`ppsci.geometry.TimeXGeometry` | 1 + N 维带有时间的几何(常用于瞬态问题)|

-

-以计算域为 2 维矩形几何为例,实例化一个 x 轴边长为2,y 轴边长为 1,且左下角为点 (-1,-3) 的矩形几何代码如下:

-

-``` py title="examples/demo/demo.py"

-LEN_X, LEN_Y = 2, 1 # 定义矩形边长

-rect = ppsci.geometry.Rectangle([-1, -3], [-1 + LEN_X, -3 + LEN_Y]) # 通过左下角、右上角对角线坐标构造矩形

-```

-

-其余的几何体构造方法类似,参考 API 文档的 [ppsci.geometry](./api/geometry.md) 部分即可。

-

-#### 2.5.2 构建新的几何

-

-下面以构建一个新的几何体 —— 2 维椭圆(无旋转)为例进行介绍。

-

-1. 首先我们需要在二维几何的代码文件 `ppsci/geometry/geometry_2d.py` 中新建椭圆类 `Ellipse`,并制定其直接父类为 `geometry.Geometry` 几何基类。

-然后根据椭圆的代数表示公式:$\dfrac{x^2}{a^2} + \dfrac{y^2}{b^2} = 1$,可以发现表示一个椭圆需要记录其圆心坐标 $(x_0,y_0)$、$x$ 轴半径 $a$、$y$ 轴半径 $b$。因此该椭圆类的代码如下所示。

-

- ``` py title="ppsci/geometry/geometry_2d.py"

- class Ellipse(geometry.Geometry):

- def __init__(self, x0: float, y0: float, a: float, b: float)

- self.center = np.array((x0, y0), dtype=paddle.get_default_dtype())

- self.a = a

- self.b = b

- ```

-

-2. 为椭圆类编写必要的基础方法,如下所示。

-

- - 判断给定点集是否在椭圆内部

-

- ``` py title="ppsci/geometry/geometry_2d.py"

- def is_inside(self, x):

- return ((x / self.center) ** 2).sum(axis=1) < 1

- ```

-

- - 判断给定点集是否在椭圆边界上

-

- ``` py title="ppsci/geometry/geometry_2d.py"

- def on_boundary(self, x):

- return np.isclose(((x / self.center) ** 2).sum(axis=1), 1)

- ```

-

- - 在椭圆内部点随机采样(此处使用“拒绝采样法”实现)

-

- ``` py title="ppsci/geometry/geometry_2d.py"

- def random_points(self, n, random="pseudo"):

- res_n = n

- result = []

- max_radius = self.center.max()

- while (res_n < n):

- rng = sampler.sample(n, 2, random)

- r, theta = rng[:, 0], 2 * np.pi * rng[:, 1]

- x = np.sqrt(r) * np.cos(theta)

- y = np.sqrt(r) * np.sin(theta)

- candidate = max_radius * np.stack((x, y), axis=1) + self.center

- candidate = candidate[self.is_inside(candidate)]

- if len(candidate) > res_n:

- candidate = candidate[: res_n]

-

- result.append(candidate)

- res_n -= len(candidate)

- result = np.concatenate(result, axis=0)

- return result

- ```

-

- - 在椭圆边界随机采样(此处基于椭圆参数方程实现)

-

- ``` py title="ppsci/geometry/geometry_2d.py"

- def random_boundary_points(self, n, random="pseudo"):

- theta = 2 * np.pi * sampler.sample(n, 1, random)

- X = np.concatenate((self.a * np.cos(theta),self.b * np.sin(theta)), axis=1)

- return X + self.center

- ```

-

-3. 在 `ppsci/geometry/__init__.py` 中加入椭圆类 `Ellipse`,如下所示。

-

- ``` py title="ppsci/geometry/__init__.py" hl_lines="3 8"

- ...

- ...

- from ppsci.geometry.geometry_2d import Ellipse

-

- __all__ = [

- ...,

- ...,

- "Ellipse",

- ]

- ```

-

-完成上述实现之后,我们就能以如下方式实例化椭圆类。同样地,建议将所有几何类实例包装在一个字典中,方便后续索引。

-

-``` py title="examples/demo/demo.py"

-ellipse = ppsci.geometry.Ellipse(0, 0, 2, 1)

-geom = {..., "ellipse": ellipse}

+equation = {"newpde": new_pde}

```

-### 2.6 构建约束条件

-

-无论是 PINNs 方法还是数据驱动方法,它们总是需要利用数据来指导网络模型的训练,而这一过程在 PaddleScience 中由 `Constraint`(约束)模块负责。

+### 2.5 构建约束条件

-#### 2.6.1 构建已有约束

+无论是 PINNs 方法还是数据驱动方法,它们总是需要构造数据来指导网络模型的训练,而利用数据指导网络训练的

+这一过程,在 PaddleScience 中由 `Constraint`(约束) 负责。

-PaddleScience 内置了一些常见的约束,如下所示。

+#### 2.5.1 构建已有约束

-|约束名称|功能|

-|--|--|

-|`ppsci.constraint.BoundaryConstraint`|边界约束|

-|`ppsci.constraint.InitialConstraint` |内部点初值约束|

-|`ppsci.constraint.IntegralConstraint` |边界积分约束|

-|`ppsci.constraint.InteriorConstraint`|内部点约束|

-|`ppsci.constraint.PeriodicConstraint` |边界周期约束|

-|`ppsci.constraint.SupervisedConstraint` |监督数据约束|

+PaddleScience 内置了一些常见的约束,如主要用于数据驱动模型的 `SupervisedConstraint` 监督约束,

+主要用于物理信息驱动的 `InteriorConstraint` 内部点约束,如果您想使用这些内置的约束,可以直接调用

+[`ppsci.constraint`](./api/constraint.md) 下的 API,并填入约束实例化所需的参数,即可快速构建约

+束条件。

-如果您想使用这些内置的约束,可以直接调用 [`ppsci.constraint.*`](./api/constraint.md) 下的 API,并填入约束实例化所需的参数,即可快速构建约束条件。

-

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

# create a SupervisedConstraint

sup_constraint = ppsci.constraint.SupervisedConstraint(

train_dataloader_cfg,

@@ -416,11 +202,9 @@ sup_constraint = ppsci.constraint.SupervisedConstraint(

)

```

-约束的参数填写方式,请参考对应的 API 文档参数说明和样例代码。

-

-#### 2.6.2 构建新的约束

+#### 2.5.2 构建新的约束

-当 PaddleScience 内置的约束无法满足您的需求时,您也可以通过新增约束文件并编写约束代码的方式,使用您自

+当 PaddleScienc 内置的约束无法满足您的需求时,您也可以通过新增约束文件并编写约束代码的方式,使用您自

定义的约束,步骤如下:

1. 在 `ppsci/constraint` 下新建约束文件(此处以约束 `new_constraint.py` 为例)

@@ -428,26 +212,20 @@ sup_constraint = ppsci.constraint.SupervisedConstraint(

2. 在 `new_constraint.py` 文件中导入 PaddleScience 的约束基类所在模块 `base`,并让创建的新约束

类(以 `Class NewConstraint` 为例)从 `base.PDE` 继承

- ``` py title="ppsci/constraint/new_constraint.py"

- from ppsci.constraint import base

-

- class NewConstraint(base.Constraint):

- ```

-

-3. 编写 `__init__` 方法,用于约束创建时的初始化。

-

- ``` py title="ppsci/constraint/new_constraint.py"

+ ``` py linenums="1" title="ppsci/constraint/new_constraint.py"

from ppsci.constraint import base

class NewConstraint(base.Constraint):

def __init__(self, ...):

...

- # initialization

+ # init

```

+3. 编写 `__init__` 代码,用于约束创建时的初始化

+

4. 在 `ppsci/constraint/__init__.py` 中导入编写的新约束类,并添加到 `__all__` 中

- ``` py title="ppsci/constraint/__init__.py" hl_lines="3 8"

+ ``` py linenums="1" title="ppsci/constraint/__init__.py" hl_lines="3 8"

...

...

from ppsci.constraint.new_constraint import NewConstraint

@@ -459,47 +237,38 @@ sup_constraint = ppsci.constraint.SupervisedConstraint(

]

```

-完成上述新约束代码编写的工作之后,我们就能像 PaddleScience 内置约束一样,以 `ppsci.constraint.NewConstraint` 的方式,调用我们编写的新约束类,并用于创建约束实例。

+完成上述新约束代码编写的工作之后,我们就能像 PaddleScience 内置约束一样,以

+`ppsci.constraint.NewConstraint` 的方式,调用我们编写的新约束类,并用于创建约束实例。

-``` py title="examples/demo/demo.py"

-new_constraint = ppsci.constraint.NewConstraint(...)

-constraint = {..., new_constraint.name: new_constraint}

-```

+### 2.6 定义超参数

-### 2.7 定义超参数

+在模型开始训练前,需要定义一些训练相关的超参数,如训练轮数、学习率等,如下所示

-在模型开始训练前,需要定义一些训练相关的超参数,如训练轮数、学习率等,如下所示。

-

-``` py title="examples/demo/demo.py"

-EPOCHS = 10000

-LEARNING_RATE = 0.001

+``` py linenums="1"

+epochs = 10000

+learning_rate = 0.001

```

-### 2.8 构建优化器

+### 2.7 构建优化器

-模型训练时除了模型本身,还需要定义一个用于更新模型参数的优化器,如下所示。

+模型训练时除了模型本身,还需要定义一个用于更新模型参数的优化器,如下所示

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

optimizer = ppsci.optimizer.Adam(0.001)(model)

```

-### 2.9 构建评估器[可选]

-

-#### 2.9.1 构建已有评估器

-

-PaddleScience 内置了一些常见的评估器,如下所示。

+### 2.8 构建评估器

-|评估器名称|功能|

-|--|--|

-|`ppsci.validator.GeometryValidator`|几何评估器|

-|`ppsci.validator.SupervisedValidator` |监督数据评估器|

+#### 2.8.1 构建已有评估器

-如果您想使用这些内置的评估器,可以直接调用 [`ppsci.validate.*`](./api/validate.md) 下的 API,并填入评估器实例化所需的参数,即可快速构建评估器。

+PaddleScience 内置了一些常见的评估器,如 `SupervisedValidator` 评估器,如果您想使用这些内置的评

+估器,可以直接调用 [`ppsci.validate`](./api/validate.md) 下的 API,并填入评估器实例化所需的参数,

+即可快速构建评估器。

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

# create a SupervisedValidator

eta_mse_validator = ppsci.validate.SupervisedValidator(

- valid_dataloader_cfg,

+ valida_dataloader_cfg,

ppsci.loss.MSELoss("mean"),

{"eta": lambda out: out["eta"], **equation["VIV"].equations},

metric={"MSE": ppsci.metric.MSE()},

@@ -507,35 +276,30 @@ eta_mse_validator = ppsci.validate.SupervisedValidator(

)

```

-#### 2.9.2 构建新的评估器

+#### 2.8.2 构建新的评估器

-当 PaddleScience 内置的评估器无法满足您的需求时,您也可以通过新增评估器文件并编写评估器代码的方式,使

+当 PaddleScienc 内置的评估器无法满足您的需求时,您也可以通过新增评估器文件并编写评估器代码的方式,使

用您自定义的评估器,步骤如下:

-1. 在 `ppsci/validate` 下新建评估器文件(此处以 `new_validator.py` 为例)。

+1. 在 `ppsci/validate` 下新建评估器文件(此处以 `new_validator.py` 为例)

-2. 在 `new_validator.py` 文件中导入 PaddleScience 的评估器基类所在模块 `base`,并让创建的新评估器类(以 `Class NewValidator` 为例)从 `base.Validator` 继承。

+2. 在 `new_validator.py` 文件中导入 PaddleScience 的评估器基类所在模块 `base`,并让创建的新评估

+器类(以 `Class NewValidator` 为例)从 `base.Validator` 继承

- ``` py title="ppsci/validate/new_validator.py"

- from ppsci.validate import base

-

- class NewValidator(base.Validator):

- ```

-

-3. 编写 `__init__` 代码,用于评估器创建时的初始化

-

- ``` py title="ppsci/validate/new_validator.py"

+ ``` py linenums="1" title="ppsci/validate/new_validator.py"

from ppsci.validate import base

class NewValidator(base.Validator):

def __init__(self, ...):

...

- # initialization

+ # init

```

-4. 在 `ppsci/validate/__init__.py` 中导入编写的新评估器类,并添加到 `__all__` 中。

+3. 编写 `__init__` 代码,用于评估器创建时的初始化

+

+4. 在 `ppsci/validate/__init__.py` 中导入编写的新评估器类,并添加到 `__all__` 中

- ``` py title="ppsci/validate/__init__.py" hl_lines="3 8"

+ ``` py linenums="1" title="ppsci/validate/__init__.py" hl_lines="3 8"

...

...

from ppsci.validate.new_validator import NewValidator

@@ -547,20 +311,23 @@ eta_mse_validator = ppsci.validate.SupervisedValidator(

]

```

-完成上述新评估器代码编写的工作之后,我们就能像 PaddleScience 内置评估器一样,以 `ppsci.validate.NewValidator` 的方式,调用我们编写的新评估器类,并用于创建评估器实例。同样地,在评估器构建完毕后之后,建议将所有评估器包装到一个字典中方便后续索引。

+完成上述新评估器代码编写的工作之后,我们就能像 PaddleScience 内置评估器一样,以

+`ppsci.equation.NewValidator` 的方式,调用我们编写的新评估器类,并用于创建评估器实例。

+

+在评估器构建完毕后之后,我们需要将所有评估器包装为到一个字典中

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

new_validator = ppsci.validate.NewValidator(...)

-validator = {..., new_validator.name: new_validator}

+validator = {new_validator.name: new_validator}

```

-### 2.10 构建可视化器[可选]

+### 2.9 构建可视化器

PaddleScience 内置了一些常见的可视化器,如 `VisualizerVtu` 可视化器等,如果您想使用这些内置的可视

-化器,可以直接调用 [`ppsci.visualizer.*`](./api/visualize.md) 下的 API,并填入可视化器实例化所需的

+化器,可以直接调用 [`ppsci.visulizer`](./api/visualize.md) 下的 API,并填入可视化器实例化所需的

参数,即可快速构建模型。

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

# manually collate input data for visualization,

# interior+boundary

vis_points = {}

@@ -570,7 +337,7 @@ for key in vis_interior_points:

)

visualizer = {

- "visualize_u_v": ppsci.visualize.VisualizerVtu(

+ "visulzie_u_v": ppsci.visualize.VisualizerVtu(

vis_points,

{"u": lambda d: d["u"], "v": lambda d: d["v"], "p": lambda d: d["p"]},

prefix="result_u_v",

@@ -580,11 +347,12 @@ visualizer = {

如需新增可视化器,步骤与其他模块的新增方法类似,此处不再赘述。

-### 2.11 构建Solver

+### 2.10 构建Solver

-[`Solver`](./api/solver.md) 是 PaddleScience 负责调用训练、评估、可视化的全局管理类。在训练开始前,需要把构建好的模型、约束、优化器等实例传给 `Solver` 以实例化,再调用它的内置方法进行训练、评估、可视化。

+[`Solver`](./api/solver.md) 是 PaddleScience 负责调用训练、评估、可视化的类,在训练开始前,需要把构建好的模型、约束、优化

+器等实例传给 Solver,再调用它的内置方法进行训练、评估、可视化。

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

# initialize solver

solver = ppsci.solver.Solver(

model,

@@ -592,7 +360,7 @@ solver = ppsci.solver.Solver(

output_dir,

optimizer,

lr_scheduler,

- EPOCHS,

+ epochs,

iters_per_epoch,

eval_during_train=True,

eval_freq=eval_freq,

@@ -602,85 +370,39 @@ solver = ppsci.solver.Solver(

)

```

-### 2.12 训练

+### 2.11 训练

-PaddleScience 模型的训练只需调用一行代码。

+PaddleScience 模型的训练只需调用一行代码

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

solver.train()

```

-### 2.13 评估

+### 2.12 评估

-PaddleScience 模型的评估只需调用一行代码。

+PaddleScience 模型的评估只需调用一行代码

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

solver.eval()

```

-### 2.14 可视化[可选]

+### 2.13 可视化

-若 `Solver` 实例化时传入了 `visualizer` 参数,则 PaddleScience 模型的可视化只需调用一行代码。

+PaddleScience 模型的可视化只需调用一行代码

-``` py title="examples/demo/demo.py"

+``` py linenums="1"

solver.visualize()

```

-!!! tip "可视化方案"

-

- 对于一些复杂的案例,`Visualizer` 的编写成本并不低,并且不是任何数据类型都可以进行方便的可视化。因此可以在训练完成之后,手动构建用于预测的数据字典,再使用 `solver.predict` 得到模型预测结果,最后利用 `matplotlib` 等第三方库,对预测结果进行可视化并保存。

-

-## 3. 编写文档

-

-除了案例代码,PaddleScience 同时存放了对应案例的详细文档,使用 Markdown + [Mkdocs-Material](https://squidfunk.github.io/mkdocs-material/) 进行编写和渲染,撰写文档步骤如下。

-

-### 3.1 安装必要依赖包

-

-文档撰写过程中需进行即时渲染,预览文档内容以检查撰写的内容是否有误。因此需要按照如下命令,安装 mkdocs 相关依赖包。

-

-``` shell

-pip install -r docs/requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

-```

-

-### 3.2 撰写文档内容

-

-PaddleScience 文档基于 [Mkdocs-Material](https://squidfunk.github.io/mkdocs-material/)、[PyMdown](https://facelessuser.github.io/pymdown-extensions/extensions/arithmatex/) 等插件进行编写,其在 Markdown 语法基础上支持了多种扩展性功能,能极大地提升文档的美观程度和阅读体验。建议参考超链接内的文档内容,选择合适的功能辅助文档撰写。

-

-### 3.3 预览文档

-

-在 `PaddleScience/` 目录下执行以下命令,等待构建完成后,点击显示的链接进入本地网页预览文档内容。

-

-``` shell

-mkdocs serve

-

-# ====== 终端打印信息如下 ======

-# INFO - Building documentation...

-# INFO - Cleaning site directory

-# INFO - Documentation built in 20.95 seconds

-# INFO - [07:39:35] Watching paths for changes: 'docs', 'mkdocs.yml'

-# INFO - [07:39:35] Serving on http://127.0.0.1:8000/PaddlePaddle/PaddleScience/

-# INFO - [07:39:41] Browser connected: http://127.0.0.1:58903/PaddlePaddle/PaddleScience/

-# INFO - [07:40:41] Browser connected: http://127.0.0.1:58903/PaddlePaddle/PaddleScience/zh/development/

-```

-

-!!! tip "手动指定服务地址和端口号"

-

- 若默认端口号 8000 被占用,则可以手动指定服务部署的地址和端口,示例如下。

-

- ``` shell

- # 指定 127.0.0.1 为地址,8687 为端口号

- mkdocs serve -a 127.0.0.1:8687

- ```

-

-## 4. 整理代码并提交

+## 3. 整理代码并提交

-### 4.1 安装 pre-commit

+### 3.1 安装 pre-commit

PaddleScience 是一个开源的代码库,由多人共同参与开发,因此为了保持最终合入的代码风格整洁、一致,

PaddleScience 使用了包括 [isort](https://github.com/PyCQA/isort#installing-isort)、[black](https://github.com/psf/black) 等自动化代码检查、格式化插件,

让 commit 的代码遵循 python [PEP8](https://pep8.org/) 代码风格规范。

-因此在 commit 您的代码之前,请务必先执行以下命令安装 `pre-commit`。

+因此在 commit 你的代码之前,请务必先执行以下命令安装 `pre-commit`。

``` sh

pip install pre-commit

@@ -689,12 +411,12 @@ pre-commit install

关于 pre-commit 的详情请参考 [Paddle 代码风格检查指南](https://www.paddlepaddle.org.cn/documentation/docs/zh/develop/dev_guides/git_guides/codestyle_check_guide_cn.html)

-### 4.2 整理代码

+### 3.2 整理代码

在完成范例编写与训练后,确认结果无误,就可以整理代码。

使用 git 命令将所有新增、修改的代码文件以及必要的文档、图片等一并上传到自己仓库的 `dev_model` 分支上。

-### 4.3 提交 pull request

+### 3.3 提交 pull request

在 github 网页端切换到 `dev_model` 分支,并点击 "Contribute",再点击 "Open pull request" 按钮,

-将含有您的代码、文档、图片等内容的 `dev_model` 分支作为合入请求贡献到 PaddleScience。

+将含有你的代码、文档、图片等内容的 `dev_model` 分支作为合入请求贡献到 PaddleScience。

diff --git a/docs/zh/examples/NSFNet.md b/docs/zh/examples/NSFNet.md

new file mode 100644

index 000000000..2ba347562

--- /dev/null

+++ b/docs/zh/examples/NSFNet.md

@@ -0,0 +1,184 @@

+# NSFNet

+

+AI Studio快速体验

+

+=== "模型训练命令"

+

+ ``` sh

+ # VP_NSFNet1

+ python VP_NSFNet1.py

+

+ # VP_NSFNet2

+ python VP_NSFNet2.py

+

+ # VP_NSFNet3

+ python VP_NSFNet3.py

+ ```

+

+## 1. 背景简介