In the following experiment I have a topic named "avl-vam". I join it, leave it, join a second non-existing topic "avl-vama", leave that topic, then join the original topic again.

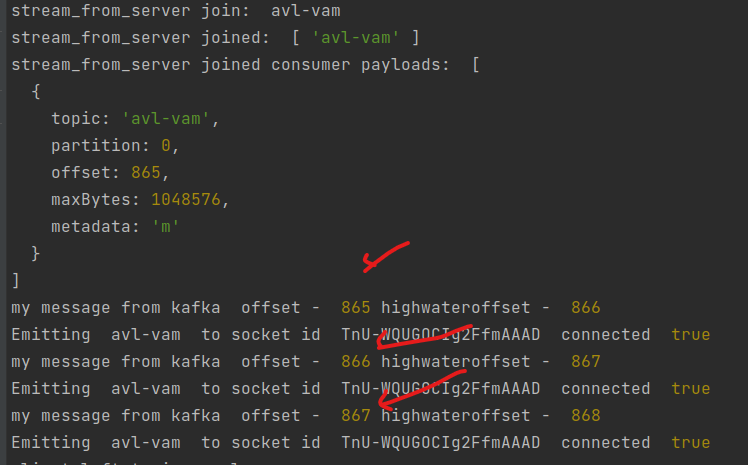

In the following diagnostic you see that I receive three messages (offsets: 865, 866, 867) on the topic before leaving it.

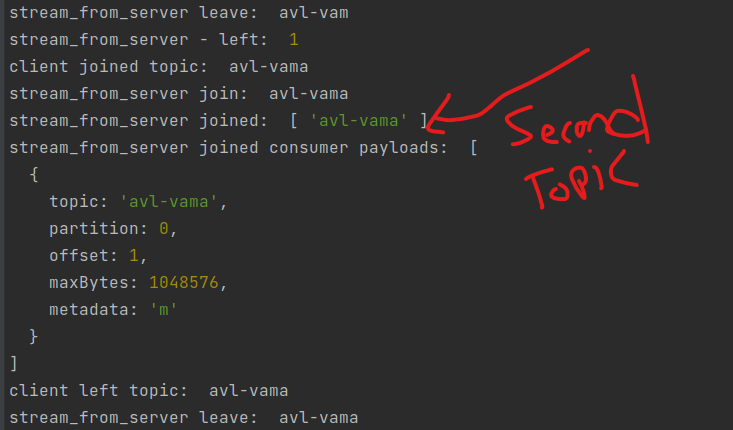

I then leave that first topic, then join and leave a second topic, "avl-vama".

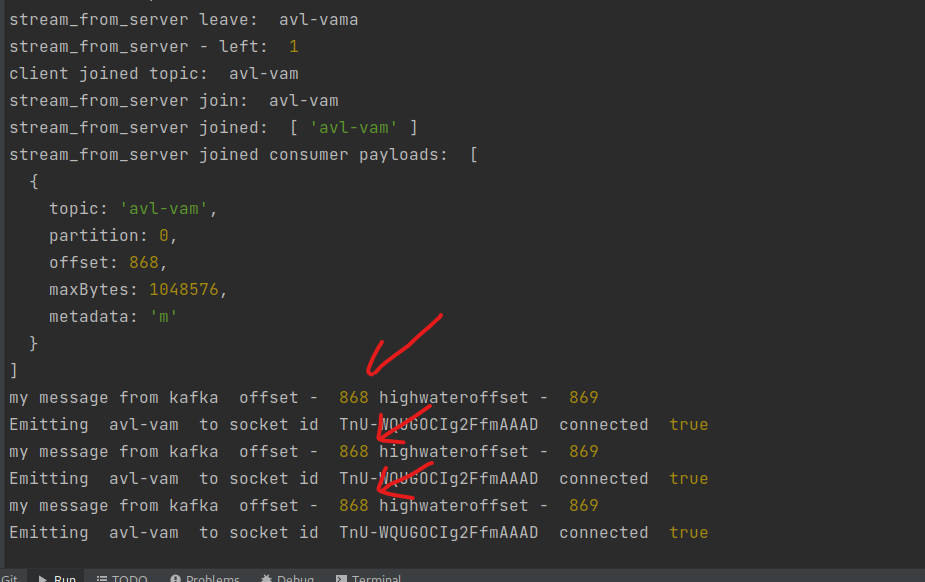

I then rejoin the original topic "avl-vam".

Then the very next message that arrives (offset 868) is seen in triplicate!

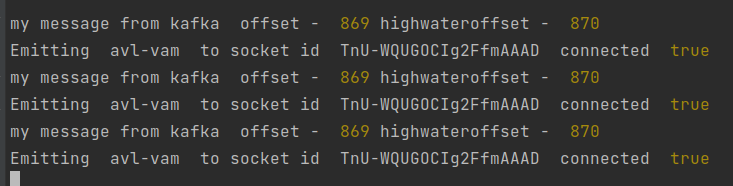

Each subsequent message is also seen in triplicate.

If I leave the topic again (call removeTopics), then join a second non-existing topic "avl-vamb", leave that, then rejoin the original topic, each message then arrives five times at the same offset.
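To make the duplication easier to quantify, here is a minimal sketch of a delivery counter. It only assumes that each message object carries `topic`, `partition`, and `offset` fields, as kafka-node message objects do. `createDeliveryCounter` is a hypothetical helper written for this report, not part of kafka-node, and partition 0 in the sample data is an assumption. It measures the symptom only and says nothing about the cause.

```javascript
// Hypothetical diagnostic helper (not part of kafka-node).
// Counts how many times each topic/partition/offset is delivered.
function createDeliveryCounter() {
  const counts = new Map();
  return {
    // Record one delivery and return how often this offset has been seen.
    record(message) {
      const key = `${message.topic}:${message.partition}:${message.offset}`;
      const seen = (counts.get(key) || 0) + 1;
      counts.set(key, seen);
      return seen;
    },
    // List every offset that was delivered more than once.
    duplicates() {
      return [...counts.entries()]
        .filter(([, seen]) => seen > 1)
        .map(([key, seen]) => ({ key, count: seen }));
    },
  };
}

// Illustrative data mirroring the report: offset 867 arrives once,
// offset 868 arrives three times (partition 0 is assumed).
const counter = createDeliveryCounter();
counter.record({ topic: "avl-vam", partition: 0, offset: 867 });
for (let i = 0; i < 3; i += 1) {
  counter.record({ topic: "avl-vam", partition: 0, offset: 868 });
}
console.log(counter.duplicates());
// → [ { key: 'avl-vam:0:868', count: 3 } ]
```

In a real consumer, `counter.record(message)` could be called from the `message` event handler, logging a warning whenever the returned count exceeds 1.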

This seems like a bug. Please advise.

I am using kafka-node 5.0.0.

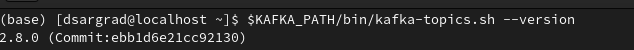

I am using Kafka 2.8.0.

davesargrad changed the title from "I call removeTopics on a consumer, and then add back that topic, I then receive multiple messages" to "I call removeTopics on a consumer, and then add back that topic, I then receive duplicate messages" on Aug 5, 2021.

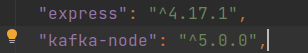

My code is simple

Here I initialize client:

I use this to join a topic:

I use this to leave a topic:
