
Proposal: arbitrary task-like types returned from async methods #7169


|

The "value-tasklike" motivation is discussed more fully here: |

|

So... what would be the runtime semantics of these lambda expressions? We would need to know that so we can know what code to generate. |

|

The VB spec currently explains the semantics of async methods+lambdas like this:

This would be changed to say that, by assumption, the async method's return type is a Tasklike, which has an associated builder type (specified by attribute). An object of that builder type is implicitly created, and the return value of the async method is the result of the .Task property on that builder instance. The spec goes on to explain the semantics of exiting the method:

This would be amended to say that if control flow exits through an unhandled exception |
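To make the builder-based semantics above concrete, here is a hand-written approximation of what the compiler generates. It uses the existing `AsyncTaskMethodBuilder<TResult>` rather than a proposed tasklike builder, and it is a sketch under a simplifying assumption: the method body contains no `await`, so a single `MoveNext` call runs it to completion. The real compiler output also keeps a state field and hoisted awaiters, which are omitted here. `AnswerStateMachine` and `Desugared` are hypothetical names.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Threading.Tasks;

// Approximates the compiler's output for:
//     static async Task<int> Answer(bool fail) { if (fail) throw ...; return 42; }
// Assumption: no awaits in the body, so one MoveNext call finishes the method.
public struct AnswerStateMachine : IAsyncStateMachine
{
    public bool Fail;                            // hoisted parameter
    public AsyncTaskMethodBuilder<int> Builder;  // the builder lives inside the state machine

    public void MoveNext()
    {
        int result;
        try
        {
            // The user's method body.
            if (Fail) throw new InvalidOperationException("boom");
            result = 42;
        }
        catch (Exception ex)
        {
            // Exiting through an unhandled exception faults the returned task.
            Builder.SetException(ex);
            return;
        }
        // Exiting normally completes the returned task with the return value.
        Builder.SetResult(result);
    }

    public void SetStateMachine(IAsyncStateMachine stateMachine) => Builder.SetStateMachine(stateMachine);
}

public static class Desugared
{
    public static Task<int> Answer(bool fail)
    {
        // 1. The builder is created through its static Create method.
        var sm = new AnswerStateMachine { Fail = fail, Builder = AsyncTaskMethodBuilder<int>.Create() };
        // 2. Start runs MoveNext synchronously on the caller's thread.
        sm.Builder.Start(ref sm);
        // 3. The async method's return value is the builder's Task property.
        return sm.Builder.Task;
    }
}
```

Swapping `AsyncTaskMethodBuilder<int>` for a custom builder with the same method shapes is exactly the degree of freedom this proposal is about.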

|

FWIW, one case where I ran into this limitation was when I was designing an async variant of a generic interface that was covariant in T, and I had to turn the T-returning methods into async variants. The requirement for Task forced me either to give up on covariance or to introduce a covariant IFuture, which lets me retain the covariant interface design but makes implementation using async methods impossible in a straightforward manner (i.e. without weird wrappers). |
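A minimal sketch of the covariance problem described above. `Task<T>` is a class, so its type parameter is invariant and `Task<T>` cannot appear as the return type of a member in an interface declared `out T`. The `IFuture<out T>` shape below is an assumption (the comment does not define it), and `TaskFuture<T>` and `GreetingReader` are hypothetical names; the point is the hand-written wrapper you need when an async method can only return `Task<T>`.

```csharp
using System;
using System.Threading.Tasks;

// This would not compile, because Task<T> is invariant in T:
//     public interface IAsyncReader<out T> { Task<T> ReadAsync(); }

// Hypothetical covariant future interface.
public interface IFuture<out T> { T GetResult(); }

// Covariance is preserved because IFuture<T> is itself covariant.
public interface IAsyncReader<out T> { IFuture<T> ReadAsync(); }

// The "weird wrapper": adapts a Task<T> to IFuture<T> by hand.
public sealed class TaskFuture<T> : IFuture<T>
{
    private readonly Task<T> _task;
    public TaskFuture(Task<T> task) { _task = task; }
    public T GetResult() => _task.GetAwaiter().GetResult(); // blocks until the task completes
}

public sealed class GreetingReader : IAsyncReader<string>
{
    // The async logic has to live in a separate Task-returning method.
    public IFuture<string> ReadAsync() => new TaskFuture<string>(ReadCoreAsync());

    private static async Task<string> ReadCoreAsync()
    {
        await Task.Yield();
        return "hello";
    }
}
```

Usage: `IAsyncReader<object> reader = new GreetingReader();` compiles thanks to covariance. With tasklike return types, `ReadAsync` could instead be written directly as an `async IFuture<string>` method.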

|

@ljw1004 What code would the compiler generate for an |

|

Thanks @gafter. I updated the first post to flesh out more of the semantics, i.e. to complete the list of "builder" methods that the compiler's generated code will invoke... (1) it will invoke the static |

|

ValueTask example: This shows producing (and consuming) a ValueTask. ValueTask provides substantial perf benefits by reducing the number of heap allocations, as shown in aspnet/HttpAbstractions#556 and aspnet/HttpAbstractions#553. The ValueTask is the one in the System.Threading.Tasks.Extensions library; I copied the source code over. The idea is that ValueTask is a struct which contains either a result (if one has been provided), or a Task (if a Task was requested but the async method hadn't yet produced a result).

var i1 = await g(0);

Console.WriteLine(i1);

var i2 = await g(100);

Console.WriteLine(i2);

static async ValueTask<int> g(int delay)

{

if (delay > 0) await Task.Delay(delay);

return delay;

}

namespace System.Threading.Tasks

{

[Tasklike(typeof(ValueTaskMethodBuilder<>))]

public struct ValueTask<TResult> : IEquatable<ValueTask<TResult>>

{

// A ValueTask holds *either* a value _result, *or* a task _task. Not both.

// The idea is that if it's constructed just with the value, it avoids the heap allocation of a Task.

internal readonly Task<TResult> _task;

internal readonly TResult _result;

public ValueTask(TResult result) { _result = result; _task = null; }

public ValueTask(Task<TResult> task) { _task = task; _result = default(TResult); if (_task == null) throw new ArgumentNullException(nameof(task)); }

public static implicit operator ValueTask<TResult>(Task<TResult> task) => new ValueTask<TResult>(task);

public static implicit operator ValueTask<TResult>(TResult result) => new ValueTask<TResult>(result);

public override int GetHashCode() => _task != null ? _task.GetHashCode() : _result != null ? _result.GetHashCode() : 0;

public override bool Equals(object obj) => obj is ValueTask<TResult> && Equals((ValueTask<TResult>)obj);

public bool Equals(ValueTask<TResult> other) => _task != null || other._task != null ? _task == other._task : EqualityComparer<TResult>.Default.Equals(_result, other._result);

public static bool operator ==(ValueTask<TResult> left, ValueTask<TResult> right) => left.Equals(right);

public static bool operator !=(ValueTask<TResult> left, ValueTask<TResult> right) => !left.Equals(right);

public Task<TResult> AsTask() => _task ?? Task.FromResult(_result);

public bool IsCompleted => _task == null || _task.IsCompleted;

public bool IsCompletedSuccessfully => _task == null || _task.Status == TaskStatus.RanToCompletion;

public bool IsFaulted => _task != null && _task.IsFaulted;

public bool IsCanceled => _task != null && _task.IsCanceled;

public TResult Result => _task == null ? _result : _task.GetAwaiter().GetResult();

public ValueTaskAwaiter<TResult> GetAwaiter() => new ValueTaskAwaiter<TResult>(this);

public ConfiguredValueTaskAwaitable<TResult> ConfigureAwait(bool continueOnCapturedContext) => new ConfiguredValueTaskAwaitable<TResult>(this, continueOnCapturedContext: continueOnCapturedContext);

public override string ToString() => _task == null ? _result.ToString() : _task.Status == TaskStatus.RanToCompletion ? _task.Result.ToString() : _task.Status.ToString();

}

}

namespace System.Runtime.CompilerServices

{

class ValueTaskMethodBuilder<TResult>

{

// This builder contains *either* an AsyncTaskMethodBuilder, *or* a result.

// At the moment someone retrieves its Task, that's when we collapse to the real AsyncTaskMethodBuilder

// and its task, or just use the result.

internal AsyncTaskMethodBuilder<TResult> _taskBuilder; internal bool GotBuilder;

internal TResult _result; internal bool GotResult;

public static ValueTaskMethodBuilder<TResult> Create() => new ValueTaskMethodBuilder<TResult>();

public void Start<TStateMachine>(ref TStateMachine stateMachine) where TStateMachine : IAsyncStateMachine => stateMachine.MoveNext();

public void SetStateMachine(IAsyncStateMachine stateMachine) { EnsureTaskBuilder(); _taskBuilder.SetStateMachine(stateMachine); }

public void SetResult(TResult result)

{

if (GotBuilder) _taskBuilder.SetResult(result);

else _result = result;

GotResult = true;

}

public void SetException(System.Exception exception)

{

EnsureTaskBuilder();

_taskBuilder.SetException(exception);

}

private void EnsureTaskBuilder()

{

if (!GotBuilder && GotResult) throw new InvalidOperationException();

if (!GotBuilder) _taskBuilder = new AsyncTaskMethodBuilder<TResult>();

GotBuilder = true;

}

public ValueTask<TResult> Task

{

get

{

if (GotResult && !GotBuilder) return new ValueTask<TResult>(_result);

EnsureTaskBuilder();

return new ValueTask<TResult>(_taskBuilder.Task);

}

}

public void AwaitOnCompleted<TAwaiter, TStateMachine>(ref TAwaiter awaiter, ref TStateMachine stateMachine) where TAwaiter : INotifyCompletion where TStateMachine : IAsyncStateMachine

{

EnsureTaskBuilder();

_taskBuilder.AwaitOnCompleted(ref awaiter, ref stateMachine);

}

public void AwaitUnsafeOnCompleted<TAwaiter, TStateMachine>(ref TAwaiter awaiter, ref TStateMachine stateMachine) where TAwaiter : ICriticalNotifyCompletion where TStateMachine : IAsyncStateMachine

{

EnsureTaskBuilder();

_taskBuilder.AwaitUnsafeOnCompleted(ref awaiter, ref stateMachine);

}

}

public struct ValueTaskAwaiter<TResult> : ICriticalNotifyCompletion

{

private readonly ValueTask<TResult> _value;

internal ValueTaskAwaiter(ValueTask<TResult> value) { _value = value; }

public bool IsCompleted => _value.IsCompleted;

public TResult GetResult() => (_value._task == null) ? _value._result : _value._task.GetAwaiter().GetResult();

public void OnCompleted(Action continuation) => _value.AsTask().ConfigureAwait(continueOnCapturedContext: true).GetAwaiter().OnCompleted(continuation);

public void UnsafeOnCompleted(Action continuation) => _value.AsTask().ConfigureAwait(continueOnCapturedContext: true).GetAwaiter().UnsafeOnCompleted(continuation);

}

public struct ConfiguredValueTaskAwaitable<TResult>

{

private readonly ValueTask<TResult> _value;

private readonly bool _continueOnCapturedContext;

internal ConfiguredValueTaskAwaitable(ValueTask<TResult> value, bool continueOnCapturedContext) { _value = value; _continueOnCapturedContext = continueOnCapturedContext; }

public ConfiguredValueTaskAwaiter GetAwaiter() => new ConfiguredValueTaskAwaiter(_value, _continueOnCapturedContext);

public struct ConfiguredValueTaskAwaiter : ICriticalNotifyCompletion

{

private readonly ValueTask<TResult> _value;

private readonly bool _continueOnCapturedContext;

internal ConfiguredValueTaskAwaiter(ValueTask<TResult> value, bool continueOnCapturedContext) { _value = value; _continueOnCapturedContext = continueOnCapturedContext; }

public bool IsCompleted => _value.IsCompleted;

public TResult GetResult() => _value._task == null ? _value._result : _value._task.GetAwaiter().GetResult();

public void OnCompleted(Action continuation) => _value.AsTask().ConfigureAwait(_continueOnCapturedContext).GetAwaiter().OnCompleted(continuation);

public void UnsafeOnCompleted(Action continuation) => _value.AsTask().ConfigureAwait(_continueOnCapturedContext).GetAwaiter().UnsafeOnCompleted(continuation);

}

}

} |
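The "either a result or a Task" idea can be tried without any compiler changes by constructing the `ValueTask<TResult>` by hand, which is the manual pattern the proposal wants `async` methods to produce automatically. This sketch assumes the `ValueTask<TResult>` from System.Threading.Tasks.Extensions (which the example above copies, and which is built into current .NET); `HotPath`, `GetAsync` and `SlowAsync` are hypothetical names.

```csharp
using System;
using System.Threading.Tasks;

public static class HotPath
{
    // Wraps either a plain result (no Task allocated) or a Task<int> when
    // real asynchronous work is needed.
    public static ValueTask<int> GetAsync(int delay)
    {
        if (delay <= 0)
            return new ValueTask<int>(delay);          // result-only: no heap-allocated Task
        return new ValueTask<int>(SlowAsync(delay));   // task-backed
    }

    private static async Task<int> SlowAsync(int delay)
    {
        await Task.Delay(delay);
        return delay;
    }
}
```

The cost of this manual version is that the synchronous and asynchronous paths have to be split by hand; an `async ValueTask<int>` method with a suitable builder would make that split for you.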

|

ITask example: This shows an async method that returns a covariant ITask, rather than the normal invariant Task.

// Test ITask

ITask<string> its = f();

ITask<object> ito = its;

var io = await ito;

Console.WriteLine(io);

static async ITask<string> f()

{

await Task.Yield();

return "hello";

}

namespace System.Threading.Tasks

{

[Tasklike(typeof(ITaskMethodBuilder<>))]

interface ITask<out T>

{

ITaskAwaiter<T> GetAwaiter();

}

}

namespace System.Runtime.CompilerServices

{

interface ITaskAwaiter<out T> : INotifyCompletion, ICriticalNotifyCompletion

{

bool IsCompleted { get; }

T GetResult();

}

struct ITaskMethodBuilder<T>

{

private class ConcreteITask<U> : ITask<U>

{

private readonly Task<U> _task;

public ConcreteITask(Task<U> task) { _task = task; }

public ITaskAwaiter<U> GetAwaiter() => new ConcreteITaskAwaiter<U>(_task.GetAwaiter());

}

private class ConcreteITaskAwaiter<U> : ITaskAwaiter<U>

{

private readonly TaskAwaiter<U> _awaiter;

public ConcreteITaskAwaiter(TaskAwaiter<U> awaiter) { _awaiter = awaiter; }

public bool IsCompleted => _awaiter.IsCompleted;

public U GetResult() => _awaiter.GetResult();

public void OnCompleted(Action continuation) => _awaiter.OnCompleted(continuation);

public void UnsafeOnCompleted(Action continuation) => _awaiter.UnsafeOnCompleted(continuation);

}

private AsyncTaskMethodBuilder<T> _taskBuilder;

private ConcreteITask<T> _task;

public static ITaskMethodBuilder<T> Create() => new ITaskMethodBuilder<T>() { _taskBuilder = AsyncTaskMethodBuilder<T>.Create() };

public void Start<TStateMachine>(ref TStateMachine stateMachine) where TStateMachine : IAsyncStateMachine => _taskBuilder.Start(ref stateMachine);

public void SetStateMachine(IAsyncStateMachine stateMachine) => _taskBuilder.SetStateMachine(stateMachine);

public void SetResult(T result) => _taskBuilder.SetResult(result);

public void SetException(Exception exception) => _taskBuilder.SetException(exception);

public ITask<T> Task => (_task == null) ? _task = new ConcreteITask<T>(_taskBuilder.Task) : _task;

public void AwaitOnCompleted<TAwaiter, TStateMachine>(ref TAwaiter awaiter, ref TStateMachine stateMachine) where TAwaiter : INotifyCompletion where TStateMachine : IAsyncStateMachine => _taskBuilder.AwaitOnCompleted(ref awaiter, ref stateMachine);

public void AwaitUnsafeOnCompleted<TAwaiter, TStateMachine>(ref TAwaiter awaiter, ref TStateMachine stateMachine) where TAwaiter : ICriticalNotifyCompletion where TStateMachine : IAsyncStateMachine => _taskBuilder.AwaitUnsafeOnCompleted(ref awaiter, ref stateMachine);

}

} |

|

Weaving example: This shows how we can use the feature to do "code-weaving", or "aspect-oriented programming". Here we make it so a specified action is executed prior to every cold await suspension, and after every await resumption. This also shows an interesting trick whereby the body of an async method can configure its very own "async method builder" instance while it's in flight, by awaiting a special configurator object and having the builder's AwaitUnsafeOnCompleted method recognize that configurator object.

static async WeavingTask h()

{

await new WeavingConfiguration(() => Console.WriteLine("Weave suspend"), () => Console.WriteLine("Weave resume"));

Console.WriteLine("h.A");

await Delay(0);

Console.WriteLine("h.B");

await Delay(10);

Console.WriteLine("h.C");

await Delay(100);

Console.WriteLine("h.D");

}

static async Task Delay(int i)

{

Console.WriteLine($" about to delay {i}");

await Task.Delay(i);

Console.WriteLine($" done delay {i}");

}

This is the output:

namespace System.Threading.Tasks

{

[Tasklike(typeof(WeavingTaskMethodBuilder))]

class WeavingTask

{

private Task _task;

public WeavingTask(Task task) { _task = task; }

public TaskAwaiter GetAwaiter() => _task.GetAwaiter();

}

}

namespace System.Runtime.CompilerServices

{

class WeavingConfiguration : ICriticalNotifyCompletion

{

public readonly Action _beforeYield, _afterYield;

public WeavingConfiguration(Action beforeYield, Action afterYield) { _beforeYield = beforeYield; _afterYield = afterYield; }

public WeavingConfiguration GetAwaiter() => this;

public bool IsCompleted => false;

public void UnsafeOnCompleted(Action continuation) { }

public void OnCompleted(Action continuation) { }

public void GetResult() { }

}

struct WeavingTaskMethodBuilder

{

private AsyncTaskMethodBuilder _taskBuilder;

private WeavingTask _task;

private WeavingConfiguration _config;

public static WeavingTaskMethodBuilder Create() => new WeavingTaskMethodBuilder() { _taskBuilder = AsyncTaskMethodBuilder.Create() };

public void Start<TStateMachine>(ref TStateMachine stateMachine) where TStateMachine : IAsyncStateMachine => _taskBuilder.Start(ref stateMachine);

public void SetStateMachine(IAsyncStateMachine stateMachine) => _taskBuilder.SetStateMachine(stateMachine);

public void SetResult() => _taskBuilder.SetResult();

public void SetException(Exception exception) => _taskBuilder.SetException(exception);

public WeavingTask Task => (_task == null) ? _task = new WeavingTask(_taskBuilder.Task) : _task;

public void AwaitOnCompleted<TAwaiter, TStateMachine>(ref TAwaiter awaiter, ref TStateMachine stateMachine) where TAwaiter : INotifyCompletion where TStateMachine : IAsyncStateMachine => _taskBuilder.AwaitOnCompleted(ref awaiter, ref stateMachine);

public void AwaitUnsafeOnCompleted<TAwaiter, TStateMachine>(ref TAwaiter awaiter, ref TStateMachine stateMachine) where TAwaiter : ICriticalNotifyCompletion where TStateMachine : IAsyncStateMachine

{

if (awaiter is WeavingConfiguration)

{

_config = (WeavingConfiguration)(object)awaiter;

stateMachine.MoveNext();

return;

}

var myAwaiter = new MyAwaiter(awaiter, _config);

_taskBuilder.AwaitUnsafeOnCompleted(ref myAwaiter, ref stateMachine);

}

class MyAwaiter : ICriticalNotifyCompletion

{

private readonly ICriticalNotifyCompletion _awaiter;

private readonly WeavingConfiguration _config;

public MyAwaiter(ICriticalNotifyCompletion awaiter, WeavingConfiguration config) { _awaiter = awaiter; _config = config; }

public void OnCompleted(Action continuation) => _awaiter.OnCompleted(continuation);

public void UnsafeOnCompleted(Action continuation)

{

_config?._beforeYield?.Invoke();

_awaiter.UnsafeOnCompleted(() =>

{

_config?._afterYield?.Invoke();

continuation();

});

}

}

}

} |

|

IAsyncAction example: It's kind of a pain that we can't write C# methods that directly return an IAsyncAction. This example shows how to use the proposed feature to make that possible.

static async IAsyncAction uwp()

{

await Task.Delay(100);

}

[Tasklike(typeof(IAsyncActionBuilder))]

interface IAsyncAction { }

class IAsyncActionBuilder

{

private AsyncTaskMethodBuilder _taskBuilder;

private IAsyncAction _task;

public static IAsyncActionBuilder Create() => new IAsyncActionBuilder() { _taskBuilder = AsyncTaskMethodBuilder.Create() };

public void Start<TStateMachine>(ref TStateMachine stateMachine) where TStateMachine : IAsyncStateMachine => _taskBuilder.Start(ref stateMachine);

public void SetStateMachine(IAsyncStateMachine stateMachine) => _taskBuilder.SetStateMachine(stateMachine);

public void SetResult() => _taskBuilder.SetResult();

public void SetException(Exception exception) => _taskBuilder.SetException(exception);

public IAsyncAction Task => (_task == null) ? _task = _taskBuilder.Task.AsAsyncAction() as IAsyncAction : _task;

public void AwaitOnCompleted<TAwaiter, TStateMachine>(ref TAwaiter awaiter, ref TStateMachine stateMachine) where TAwaiter : INotifyCompletion where TStateMachine : IAsyncStateMachine => _taskBuilder.AwaitOnCompleted(ref awaiter, ref stateMachine);

public void AwaitUnsafeOnCompleted<TAwaiter, TStateMachine>(ref TAwaiter awaiter, ref TStateMachine stateMachine) where TAwaiter : ICriticalNotifyCompletion where TStateMachine : IAsyncStateMachine => _taskBuilder.AwaitUnsafeOnCompleted(ref awaiter, ref stateMachine);

} |

|

Note: all of the above examples assume this attribute:

namespace System.Runtime.CompilerServices

{

public class TasklikeAttribute : Attribute

{

public TasklikeAttribute(Type builderType) { }

}

} |

|

✨ Great! ✨ |

|

Isn't this listed on the Language Feature Status? |

|

While you're there, messing with the async state machine... 😉 There is currently a faster await path for completed tasks; however, an async function still comes with a cost. There are faster patterns that avoid the state machine but involve greater code complexity, so it would be nice if the compiler could generate them as part of the state machine construction.

Tail call clean up:

async Task MethodAsync()

{

// ... some code

await OtherMethodAsync();

}

becomes

Task MethodAsync()

{

// ... some code

return OtherMethodAsync();

}

Likewise, single tail await:

async Task MethodAsync()

{

if (condition)

{

return;

}

else if (othercondition)

{

await OtherMethodAsync();

}

else

{

await AnotherMethodAsync();

}

}

becomes

Task MethodAsync()

{

if (condition)

{

return Task.CompletedTask;

}

else if (othercondition)

{

return OtherMethodAsync();

}

else

{

return AnotherMethodAsync();

}

}

Mid async:

async Task MethodAsync()

{

if (condition)

{

return;

}

await OtherMethodAsync();

// some code

}

splits at the first non-completed task into an async awaiting function:

Task MethodAsync()

{

if (condition)

{

return Task.CompletedTask;

}

var task = OtherMethodAsync();

if (!task.IsCompleted)

{

return MethodAsyncAwaited(task);

}

MethodAsyncRemainder();

return Task.CompletedTask;

}

async Task MethodAsyncAwaited(Task task)

{

await task;

MethodAsyncRemainder();

}

void MethodAsyncRemainder()

{

// some code

}

Similar patterns with ValueTask of postponing |

|

Re: Arch 64 bit - Cores 4 |

|

ValueTask by itself saves in allocations; but you don't get the full throughput in performance until you also delay the state machine creation (the actual |

|

"Tail await elimination" is definitely a promising pattern we manually optimize for quite regularly in our code base; it's easy enough to have a function that used to do multiple awaits and ultimately ends up being rewritten such that the There are some caveats though, such as getting rid of a single If we'd have the (much desirable) flexibility on the return type for an

|

|

Removed the

The issue with an analyzer rewrite for splitting the functions into pre-completed and |

|

@ufcpp - this isn't yet listed on the "language feature status" page because so far I've only done the easy bits (writing the proposal and implementing a prototype). I haven't yet started on the difficult bit of presenting it to LDM, trying to persuade people that it's a good idea, and modifying its design in response to feedback! @benaadams - those are interesting ideas. I need to think more deeply about them. I appreciate that you linked to real-world cases of complexity that (1) is forced by the compiler not doing the optimizations and (2) has real-world perf numbers to back it up. But let's move the compiler-optimizations-of-async-methods into a different discussion topic. |

|

@ljw1004 no probs will create an issue for them, with deeper analysis. |

|

@benaadams automagically removing Their app will crash without any obvious reason. |

|

@i3arnon it should work? The first function's conversion would be blocked due to the await in try/catch; the second function would become:

Task FooAsync(string value)

{

if (value == null) return Task.FromException(new ArgumentNullException());

return SendAsync(value);

} |

|

@benaadams no. |
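The semantic difference behind this exchange is when an exception surfaces. A minimal sketch, reusing the `FooAsync`/`SendAsync` names from above but with a hypothetical stand-in body for `SendAsync`: with `async`, an argument-validation exception is stored in the returned Task; after naive elision it escapes synchronously from the call; `Task.FromException` restores the original behaviour for this particular case. It does not settle every case raised in the thread.

```csharp
using System;
using System.Threading.Tasks;

public static class Elision
{
    // Hypothetical stand-in for real asynchronous I/O.
    private static Task SendAsync(string value) => Task.Delay(1);

    // async: the exception is captured into the returned Task.
    public static async Task FooAsync(string value)
    {
        if (value == null) throw new ArgumentNullException(nameof(value));
        await SendAsync(value);
    }

    // Naive elision: the same exception now escapes from the call itself.
    public static Task FooElidedNaive(string value)
    {
        if (value == null) throw new ArgumentNullException(nameof(value));
        return SendAsync(value);
    }

    // Elision that keeps the faulted-task behaviour for argument validation.
    public static Task FooElided(string value)
    {
        if (value == null) return Task.FromException(new ArgumentNullException(nameof(value)));
        return SendAsync(value);
    }
}
```

A caller that does `var t = FooElidedNaive(null);` and only later awaits `t` inside a try/catch would see the exception thrown outside that try block, which is why an automatic rewrite has to be careful.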

|

You can always coerce with a cast to force overload resolution to pick the right thing. But... ugly! |

|

That's an interesting case indeed. We've run into similar ambiguities for the whole shebang of It seems to me that the insistence around the use of One thing that always seems to come back when designing APIs with a variety of different delegate types on overloads is the cliff one falls off when overload resolution falls short, requiring the insertion of ugly casts at the use site, or an overhaul of the API design to have different methods to avoid the problem altogether. I've always felt it strange that there's no way to specify the return type of a lambda, unlike the ability to specify parameter types fully. It's an odd asymmetry compared to named methods. Some languages such as C++ allow an explicit return type to be specified on lambdas. With the introduction of tuples and identifiers for components of a tuple, it seems this may be useful as well; currently, AFAIK, there'd be no place to stick those identifiers (even if it's just for their documentation value):

Func<int> f1_1 = () => 42;

Func<int> f1_2 = int () => 42;

Func<int, int> f2_1 = x => x + 1;

Func<int, int> f2_2 = (int x) => x + 1;

Func<int, int> f2_3 = int (int x) => x + 1;

Func<Task<int>> f3_1 = async () => 42;

Func<Task<int>> f3_2 = async Task<int> () => 42;

Func<(int a, int b)> f4_1 = () => (1, 2);

Func<(int a, int b)> f4_2 = () => (a: 1, b: 2);

Func<(int a, int b)> f4_3 = (int a, int b) () => (1, 2);

Func<(int a, int b)> f4_4 = (int a, int b) () => (a: 1, b: 2);

I haven't given much thought to possible syntactic ambiguities that may arise (C++ has a suffix notation with a thin arrow to specify a return type, but that'd be a totally new token; VB also has a suffix notation with With the

Run(async Task<int> () => { return 1; }); // [3] with T=Task<int>

Run(async MyTask<int> () => { return 1; }); // [4] with T=int |

|

Related, if you do

private void LongRunningAction()

{

// ...

}

Task.Run(LongRunningAction);

You'll get the error |
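The cast workaround mentioned earlier applies here too. A minimal sketch: either cast the method group to `Action` or wrap the call in a lambda whose body is a void invocation, so only `Task.Run(Action)` is applicable. Whether the unadorned `Task.Run(LongRunningAction)` is rejected depends on the compiler version; later compilers may accept it. `RunExample` and its `Calls` counter are hypothetical.

```csharp
using System;
using System.Threading.Tasks;

public static class RunExample
{
    public static int Calls;

    private static void LongRunningAction() { Calls++; }

    public static void RunBoth()
    {
        // Casting the method group leaves Action as the only candidate delegate type.
        Task.Run((Action)LongRunningAction).Wait();
        // A lambda whose body is a void call can only convert to Action, not Func<...>.
        Task.Run(() => LongRunningAction()).Wait();
    }
}
```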

|

Okay, here's my detailed idea on what to do about it. I'll do precisely what @bartdeset wrote and Mads Torgersen also suggested: "return an open-ended set of possible inferred return types here" (even though, as Bart says, "it seems it'd be quite invasive"). Put another way, I'll make it so that the "inferred return type" algorithm, when it sees an async lambda, is able to infer the magical return type I've written out examples at the bottom, so I can explain them in specifics rather than in generalities. Note: this post is all just theory, born from a day's worth of careful spec-reading, without actually having written any code. It might all be completely wrong! Next step is to implement it and see! What I'll do before coding, though, is write up this plan into a fork of the C# spec over here: https://github.com/ljw1004/csharpspec/tree/async-return

More detailed plan

(1) The inferred return type algorithm will be modified to return the pseudo-type

(2) The type inference algorithm will be modified to become aware of InferredTask... The algorithm for fixing will say that if every bound (lower, upper, exact) is to an identical The algorithm for lower bound inference will say that if (Note: I'm concerned that this isn't quite right. What we have a single The type inference algorithm performs certain tests upon its types, and so will continue to perform those tests upon the pseudo-types The folks who call into type inference are method invocations, conversion of method groups, and best common type for a set of expressions. As a thought exercise, for a scenario where InferredTask participates in the algorithm for common type of a set of expressions, consider this example:

void f<T,U>(Func<T> lambda, Func<T,U> lambda2)

f(async () => {}, x => {if (true) return x; else return x;});

(3) The Overload resolution algorithm is changed. In particular, when computing the Better function member algorithm, it considers two parameter types Note that if OverloadResolution has no InferredTask coming in, then it will have no InferredTask going out. Note also that there are only four places that invoke overload resolution: method-invocation (as discussed), object-creation-expressions (but constructors are never generic so this won't feed InferredTask into overload resolution), element-access (but indexers are again never generic), and operator overload resolution (but operator overloads are again never generic). I do not propose to change conversion-of-method-group. Actually, to be honest, I haven't been able to come up with an example in my head where it would arise. After all, whenever you convert a method group, every method in that group has a concrete return type. (Even if I did come up with an example, as @benaadams notes above, return type is still typically ignored for method-group conversion.)

Example 1

void f<T>(Func<T> lambda) // [1]

void f<T>(Func<Task<T>> lambda) // [2]

f(async () => { return 1; });

For [1] the inferred return type of the argument lambda expression is For [2] the inferred return type of the argument is For sake of overload resolution judging parameter types, the two parameter types In the tie-breaker for more specific, [2] wins, because

Example 2

void g<T>(Func<T> lambda) // [3]

void g(Func<MyTask> lambda) // [4]

g(async () => { });

For [3] the inferred return type of the argument lambda expression is For [4], type inference doesn't come into play at all. So the parameter type for [4] is just For sake of overload resolution judging parameter types, the two parameter types In the tie-breaker first rule, [4] wins because it's not generic. |
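Example 1 needs no new tasklike type, so it can be checked against the existing Task today. For [1], T is inferred as `Task<int>`; for [2], T is inferred as `int`; both give the parameter type `Func<Task<int>>`, and the "more specific" tie-breaker picks [2]. This is the same reason `Task.Run(async () => ...)` binds to the `Func<Task<TResult>>` overload. `Overloads` and `Pick` are hypothetical names.

```csharp
using System;
using System.Threading.Tasks;

public static class Overloads
{
    public static string F<T>(Func<T> lambda) => "Func<T>";               // [1]
    public static string F<T>(Func<Task<T>> lambda) => "Func<Task<T>>";   // [2]

    // Both overloads are applicable with identical parameter types after
    // inference; [2] is more specific, so it is chosen.
    public static string Pick() => F(async () => { await Task.Yield(); return 1; });
}
```

The proposal's goal is for the same tie-breaking to work when [2] is written against a user-defined tasklike instead of Task.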

|

This type inference stuff is complicated and makes my head hurt. I think it is a problem, what I wrote above, about I also think that async debugging in Visual Studio might need a hook into the tasklike things. The way async debugging works is that

I think we'll have to allow for user-provided hooks for step [4] for the user-provided tasklikes. |

|

Oh boy, type inference is difficult. Here are some worked examples. Example 1: inferring

|

Proposal for type inference

|

|

I've been exploring the idea of The overall goal of

|

|

Here are some completely blank task-builders, as a starting point for experimentation:

using System;

using System.Runtime.CompilerServices;

[Tasklike(typeof(MyTaskBuilder))]

class MyTask { }

[Tasklike(typeof(MyTaskBuilder<>))]

class MyTask<T> { }

class MyTaskBuilder

{

public static MyTaskBuilder Create() => null;

public void SetStateMachine(IAsyncStateMachine stateMachine) { }

public void Start<TSM>(ref TSM stateMachine) where TSM : IAsyncStateMachine { }

public void AwaitOnCompleted<TA, TSM>(ref TA awaiter, ref TSM stateMachine) where TA : INotifyCompletion where TSM : IAsyncStateMachine { }

public void AwaitUnsafeOnCompleted<TA, TSM>(ref TA awaiter, ref TSM stateMachine) where TA : ICriticalNotifyCompletion where TSM : IAsyncStateMachine { }

public void SetResult() { }

public void SetException(Exception ex) { }

public MyTask Task => null;

}

class MyTaskBuilder<T>

{

public static MyTaskBuilder<T> Create() => null;

public void SetStateMachine(IAsyncStateMachine stateMachine) { }

public void Start<TSM>(ref TSM stateMachine) where TSM : IAsyncStateMachine { }

public void AwaitOnCompleted<TA, TSM>(ref TA awaiter, ref TSM stateMachine) where TA : INotifyCompletion where TSM : IAsyncStateMachine { }

public void AwaitUnsafeOnCompleted<TA, TSM>(ref TA awaiter, ref TSM stateMachine) where TA : ICriticalNotifyCompletion where TSM : IAsyncStateMachine { }

public void SetResult(T result) { }

public void SetException(Exception ex) { }

public MyTask<T> Task => null;

}

namespace System.Runtime.CompilerServices

{

public class TasklikeAttribute : Attribute

{

public TasklikeAttribute(Type builder) { }

}

}

edit: Thanks to @bbarry in the comment below, who reminded me about generic constraints. I'd forgotten about them. What would happen if the user provided a builder that is missing appropriate generic constraints? (It's theoretically acceptable.) Or if they provided a builder that has too-strict generic constraints? (Then codegen, if allowed to proceed, would produce a binary that failed PEVerify.) In the end, I decided I might as well just require the builder to have the EXACT correct constraints. |
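For comparison, a filled-in builder that actually runs. This is a sketch that assumes C# 7.0 or later, where the attribute shipped as `System.Runtime.CompilerServices.AsyncMethodBuilderAttribute` rather than this prototype's `Tasklike`; `WrappedTask`, `WrappedTaskBuilder` and `WrappedDemo` are hypothetical names. Like the ITask example earlier in the thread, it delegates all the work to `AsyncTaskMethodBuilder<T>` and carries exactly the generic constraints discussed in the edit above.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Threading.Tasks;

[AsyncMethodBuilder(typeof(WrappedTaskBuilder<>))]
public sealed class WrappedTask<T>
{
    private readonly Task<T> _task;
    internal WrappedTask(Task<T> task) { _task = task; }

    // Awaiting a WrappedTask<T> delegates to the underlying Task<T>.
    public TaskAwaiter<T> GetAwaiter() => _task.GetAwaiter();
}

public struct WrappedTaskBuilder<T>
{
    private AsyncTaskMethodBuilder<T> _inner;   // does the real work
    private WrappedTask<T> _task;               // wrapper handed back to the caller

    public static WrappedTaskBuilder<T> Create() =>
        new WrappedTaskBuilder<T> { _inner = AsyncTaskMethodBuilder<T>.Create() };

    public void Start<TStateMachine>(ref TStateMachine stateMachine)
        where TStateMachine : IAsyncStateMachine => _inner.Start(ref stateMachine);

    public void SetStateMachine(IAsyncStateMachine stateMachine) => _inner.SetStateMachine(stateMachine);
    public void SetResult(T result) => _inner.SetResult(result);
    public void SetException(Exception exception) => _inner.SetException(exception);

    public WrappedTask<T> Task => _task ?? (_task = new WrappedTask<T>(_inner.Task));

    public void AwaitOnCompleted<TAwaiter, TStateMachine>(ref TAwaiter awaiter, ref TStateMachine stateMachine)
        where TAwaiter : INotifyCompletion where TStateMachine : IAsyncStateMachine
        => _inner.AwaitOnCompleted(ref awaiter, ref stateMachine);

    public void AwaitUnsafeOnCompleted<TAwaiter, TStateMachine>(ref TAwaiter awaiter, ref TStateMachine stateMachine)
        where TAwaiter : ICriticalNotifyCompletion where TStateMachine : IAsyncStateMachine
        => _inner.AwaitUnsafeOnCompleted(ref awaiter, ref stateMachine);
}

public static class WrappedDemo
{
    // The compiler drives WrappedTaskBuilder<int> for this method.
    public static async WrappedTask<int> AddAsync(int a, int b)
    {
        await Task.Yield();
        return a + b;
    }
}
```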

|

Assuming the generic constraints are allowed on the relevant methods |

|

I've updated the download link (at the top of this issue) with a new version that works with overload resolution. I've also written some more samples, including What kind of Observable do you think an async method should produce? (Also, once we add async iterators?)

async IObservable<string> Option1()

{

// This behaves a lot like Task<string>.ToObservable() – the async method starts the moment

// you execute Option1, and if you subscribe while it's in-flight then you'll get an OnNext+OnCompleted

// as soon as the return statement executes, and if you subscribe later then you'll get OnNext+OnCompleted

// immediately the moment you subscribe (with a saved value).

// Presumably there's no way for Subscription.Dispose() to abort the async method...

await Task.Delay(100);

return "hello";

}

async IObservable<string> Option2()

{

// This behaves a lot like Observable.Create() – a fresh instance of the async method starts

// up for each subscriber at the moment of subscription, and you'll get an OnNext the moment

// each yield executes, and an OnCompleted when the method ends.

// Perhaps if the subscription has been disposed then yield could throw OperationCanceledException?

await Task.Delay(100);

yield return "hello";

await Task.Delay(200);

yield return "world";

await Task.Delay(300);

}

It’s conceivable that both could exist, keyed off whether there’s a yield statement inside the body, but that seems altogether too subtle… |

|

Would this work with structs? Assuming |

|

@benaadams could you explain a bit further? The way SetStateMachine works is that it's only ever invoked when an async method encounters its first cold await. At that moment the state machine struct gets boxed on the heap. The state machine has a field for the builder, and the builder is typically a struct, so the builder is included within that box. Then the builder's SetStateMachine method is called so the builder gets a reference to the boxed state machine, so that it can subsequently call stateMachine.MoveNext. Given that SetStateMachine is only ever called after the state machine struct has been boxed, I think there's no benefit to what you describe. |

|

@ljw1004 makes sense. One of the issues with tasks is that they aren't very good with fine-grained awaits, which are often sync/hot awaits, since at each step you allocate a Task. I'll do the installs and see if I can understand more. |

|

[Sorry, I did not read the whole thread; it is way too long! I saw @ljw1004's tweet asking about semantics of computations over There are a couple of things that do something similar:

They all have similar syntax with some sort of computation that can asynchronously The tricky case for the semantics is if you write something like this: Or the same thing written using This iterates over another observable (I made up

I think the sequential nature of the code makes it really hard to write computations that consume push-based sources. It becomes apparent once you want to write something like So, having this over |

|

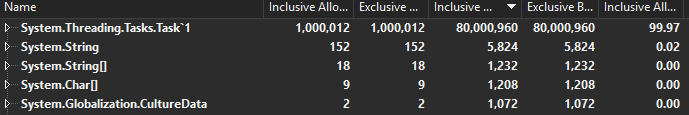

@ljw1004 I like this a lot! For https://gist.github.com/benaadams/e23dabec441a7fdf613557aba4688b33

Timing 100M ops: only the Task branch allocates (this is all for sync completes). So full async and await add some overhead, as expected since it's doing more, but it's greatly improved over |

|

The extensions seem to make the non-async paths heavier (async-path obv can't compile) Uninstalling the extensions and commenting out the async ValueTask bits But it would still be better for the common case which would be async+await; and cuts all the allocations. |

|

@benaadams, thanks for the perf analysis. I've taken your gist, augmented it a bit, and checked it into source control: https://github.com/ljw1004/roslyn/blob/features/async-return/ArbitraryAsyncReturns-sample/Performance.cs

Your last post "the extensions seem to make the non-async paths heavier" is just a case of experimental error, I'm sure. Repeat runs of the test give about 10% variation, and machines are always susceptible to hiccups. Looking at the output in ILDASM, my extensions produce exactly the same IL for the three methods in question. But I think your tests might be a bit misleading... even your

I've written up the performance results with some more explanation:

Performance analysis

Naive Task-returning async method

This is the naive code you might write with async/await if you weren't concerned about performance. This takes about 120ns on JIT, about 90ns on .NET Native, when called with

async Task<int> TestAsync(int delay)
{
    await Task.Delay(delay);
    return delay;
}

var x = await TestAsync(0);

Switch it to naive ValueTask-returning async method

The feature we're discussing lets you easily change it as below.

async ValueTask<int> TestAsync(int delay)
{
    await Task.Delay(delay);
    return delay;
}

var x = await TestAsync(0);

Manual optimization: avoid hot-paths

It's okay to await an already-completed task such as Task.Delay(0); the compiler does so with very little overhead.

async ValueTask<int> TestAsync(int delay)
{
    if (delay > 0) await Task.Delay(delay);
    return delay;
}

var t = TestAsync(0);
var x = t.IsCompletedSuccessfully ? t.Result : await t;

Ultimate manual optimization: async-elimination

We can build upon the previous optimization with the ultimate optimization: eliminating the async method entirely on the hot path.

ValueTask<int> TestAsync(int delay)
{
    if (delay > 0) return ValueTask<int>.FromTask(TestAsyncInner(delay));
    else return ValueTask<int>.FromValue(delay);
}

async Task<int> TestAsyncInner(int delay)
{
    await Task.Delay(delay);
    return delay;
}

var t = TestAsync(0);
var x = t.IsCompletedSuccessfully ? t.Result : await t;

Ballpark figures?

I think that all of these numbers (10ns up to 150ns overhead for calling into an async method) are pretty negligible so long as they're not in the innermost loop of your code. And you should never do that anyway, e.g. because locals in an async method tend to be implemented as fields in a struct. Even the 150ns will quickly be dominated by everything else. The real win of this feature and ValueTask isn't in shaving off CPU cycles as exercised in these tests. Instead it's in avoiding heap allocations for cases where you have to return an already-completed |

|

I should have looked at the counts; it did allocate, just in a smaller way (probably the cached Tasks) :) Changing to returning 10 and dropping the iterations to 1M (so the profile completes in a sane time). The Task path allocates 1M

The allocations are definitely the big player here. However, there are areas where the overheads are a significant factor, if you don't write convoluted code. Parsing and messaging on async data are the two that come to mind: parsing XML or form data, or reading messages from a WebSocket (though that has other allocation issues). Overhead would also likely be the next item for async observables/enumerables after allocations, if they were doing small transformations like a Where clause in LINQ.
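The claim that the synchronously completing Task path allocates on every call while the ValueTask path does not can be checked with a small harness. This is a sketch, assuming .NET Core 3.0 or later (for GC.GetAllocatedBytesForCurrentThread and the shipped ValueTask<T>); AllocationDemo and its methods are hypothetical names, and it returns 10 to mirror the change above, since results in the runtime's small cached range would hide the Task allocation. Exact byte counts will vary by runtime.

```csharp
using System;
using System.Threading.Tasks;

public static class AllocationDemo
{
    // Completes synchronously on the measured path. 10 is outside the small range of
    // results the runtime caches, so each call has to allocate a new Task<int>.
    public static async Task<int> ViaTask(int x)
    {
        if (x < 0) await Task.Yield(); // never taken here; keeps a real await in the body
        return x;
    }

    // Same body: a synchronous result is stored inline in the ValueTask<int>.
    public static async ValueTask<int> ViaValueTask(int x)
    {
        if (x < 0) await Task.Yield(); // never taken here
        return x;
    }

    // Bytes allocated on the current thread while invoking call(10) n times.
    static long Measure(Func<int, int> call, int n)
    {
        long before = GC.GetAllocatedBytesForCurrentThread();
        for (int i = 0; i < n; i++) _ = call(10);
        return GC.GetAllocatedBytesForCurrentThread() - before;
    }

    public static (long TaskBytes, long ValueTaskBytes) Run(int n)
    {
        // Delegates are created up front so their allocation is not measured.
        Func<int, int> viaTask = x => ViaTask(x).Result;
        Func<int, int> viaValueTask = x => ViaValueTask(x).Result;
        Measure(viaTask, 100);       // warm-up: JIT and one-time initialization
        Measure(viaValueTask, 100);
        return (Measure(viaTask, n), Measure(viaValueTask, n));
    }
}
```

Calling AllocationDemo.Run(1_000_000) and dividing each figure by the iteration count gives approximate bytes per call; the ValueTask figure should be close to zero, while the Task figure reflects one Task<int> per call.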

Agree. Otherwise we will get "Avoid async/await on the fast(est) path" as it is now with LINQ. |

|

Performance aside (I don't think it has relevance to the API surface), I'm wondering if/how cancellation tokens fit into IObservable, e.g.

async IObservable<string> Option1or2(CancellationToken cancellationToken)

or even

o.Subscribe((msg) => {}, (ex) => {}, () => {}, cancellationToken) |

|

@bbarry just to say thanks for the reminder about generic constraints. I was interested to learn that the C# compiler doesn't ever validate constraints on the well-known types and methods it uses. If someone provided a well-known type/method themselves, and placed too-strict constraints on it, the compiler would generate code that doesn't PEVerify. For this case I changed the compiler to make sure that the async method builders have exactly the expected constraints, neither more nor fewer. |

|

I'm closing this thread. I've summarized the results of this thread in the spec and design rationale documents. I have started a new discussion thread #10902 for further comments. |

Download

%userprofile%\AppData\Roaming\Microsoft\VisualStudio and %userprofile%\AppData\Local\Microsoft\VisualStudio. Doing so will reset all your VS settings to default.

Introduction

It’s an ongoing user request for async methods to return arbitrary types. We’ve heard this particularly from people who want to return value Task-like types from their async methods for efficiency. In UWP development, it would also be nice to return IAsyncAction directly rather than having to return a Task and then write a wrapper.

The initial C# prototype of async did allow async methods to return arbitrary types, but that was cut because we didn’t find a neat way to integrate it with generic type inference. Here’s a recap of the problem:

The feature was cut because we had no solution for the full generality of situations like

MyPerverse<T> or MyWeird<T>: there is no way to make the leap from the type of the operand of the return statement to the generic type arguments of the Task-like type.

Proposal: let’s just abandon the full generality cases h and k

They’re perverse and weird. Let’s say that async methods can return any type Foo (with similar semantics to Task) or can return any type Foo<T> (with similar semantics to Task<T>).

More concretely, let's say that the return type of an async method or lambda can be any task-like type. A task-like type is one that has either zero generic type parameters (like Task) or one generic type parameter (like Task<T>), and which is declared in one of these two forms, with an attribute:

All the type inference and overload resolution that the compiler does today for Task<T>, it should also do for other tasklikes. In particular it should work for target-typing of async lambdas, and it should work for overload resolution.

For backwards-compatibility, if the return type is System.Threading.Tasks.Task or Task<T> and also lacks the [TaskLike] attribute, then the compiler implicitly picks System.Runtime.CompilerServices.AsyncTaskMethodBuilder or AsyncTaskMethodBuilder<T> for the builder.

Semantics

[edited to incorporate @gafter's comments below...]

The VB spec currently explains the semantics of async methods+lambdas like this:

This would be changed to say that, by assumption, the async method's return type is a Tasklike, which has an associated builder type (specified by attribute). An object of that builder type is implicitly created by invoking the builder's static Create method, and the return value of the async method is the result of the .Task property on that builder instance (or void).

The spec goes on to explain the semantics of exiting the method:

This would be amended to say that if control flow exits through an unhandled exception ex, then .SetException(ex) is called on the builder instance associated with this method instance. Otherwise, .SetResult() is called, with or without an argument depending on the type.

The spec currently gives semantics for how an async method is started:

This would be amended to say that the builder's Start method is invoked, passing an instance that satisfies the IAsyncStateMachine interface. The async method will start to execute when the builder first calls MoveNext on that instance (which it may do inside Start for a hot task, or later for a cold task). If the builder calls MoveNext more than once (other than in response to AwaitOnCompleted) the behavior is undefined. If ever the builder's SetStateMachine method is invoked, then any future calls it makes to MoveNext must be through this new value.

Note: this allows for a builder to produce cold tasklikes.

The spec currently gives semantics for how the await operator is executed:

This would be amended to say that either builder.AwaitOnCompleted or builder.AwaitUnsafeOnCompleted is invoked. The builder is expected to synthesize a delegate such that, when the delegate is invoked, it winds up calling MoveNext on the state machine. Often the delegate restores context.

Note: this allows for a builder to be notified when a cold await operator starts, and when it finishes.

Note: the Task type is not sealed. I might choose to write MyTask<int> FooAsync() which returns a subtype of Task, but also performs additional logging or other work.

Semantics relating to overload resolution and type inference

Currently the rules for Inferred return type say that the inferred return type for an async lambda is always either Task or Task<T> for some T derived from the lambda body. This should be changed to say that the inferred return type of an async lambda can be whatever tasklike the target context expects.

Currently the overload resolution rules for Better function member say that if two overload candidates have identical parameter lists then tie-breakers such as "more specific" are used to choose between them; otherwise "better conversion from expression" is used. This should be changed to say that tie-breakers will be used when parameter lists are identical up to tasklikes.

Limitations

This scheme wouldn't be able to represent the WinRT types IAsyncOperationWithProgress or IAsyncActionWithProgress. It also wouldn't be able to represent the fact that WinRT async interfaces have a cancel method upon them. We might consider allowing the async method to access its own builder instance via some special keyword, e.g. _thisasync.cancel.ThrowIfCancellationRequested(), but that seems too hacky and I think it's not worth it.

Compilation notes and edge cases

Concrete tasklike. The following kind of thing is conceptually impossible, because the compiler needs to know the concrete type of the tasklike that's being constructed (in order to construct it).

Incomplete builder: binding. The compiler should recognize the following as an async method that doesn't need a return statement, and should bind it accordingly. There is nothing wrong with the async modifier nor the absence of a return keyword. The fact that MyTasklike's builder doesn't fulfill the pattern is an error that comes later on: it doesn't prevent the compiler from binding method f.

Wrong generic. To be a tasklike, a type must (1) have a [Tasklike] attribute on it, and (2) have arity 0 or 1. If it has the attribute but the wrong arity then it's not a Tasklike.

Incomplete builder: codegen. If the builder doesn't fulfill the pattern, well, that's an edge case. It should definitely give errors (as it does today, e.g. if you have an async Task-returning method and target .NET 4.0), but it doesn't matter whether those errors are high-quality (again, they aren't high-quality today). One unusual case of a failed builder is where the builder has the wrong constraints on its generic type parameters. As far as I can tell, constraints aren't taken into account elsewhere in the compiler (the only other well-known methods with generic parameters are below, and they're all in mscorlib, so there's no chance of them ever being wrong)
