101: NVME oF setup on RHEL7&RHEL8

This note describes how to configure NVME-oF (NVME over Fabrics) drives on RHEL7/RHEL8 using the inbox drivers and nvme_strom, for SSD-to-GPU Direct SQL over RoCE (RDMA over Converged Ethernet).

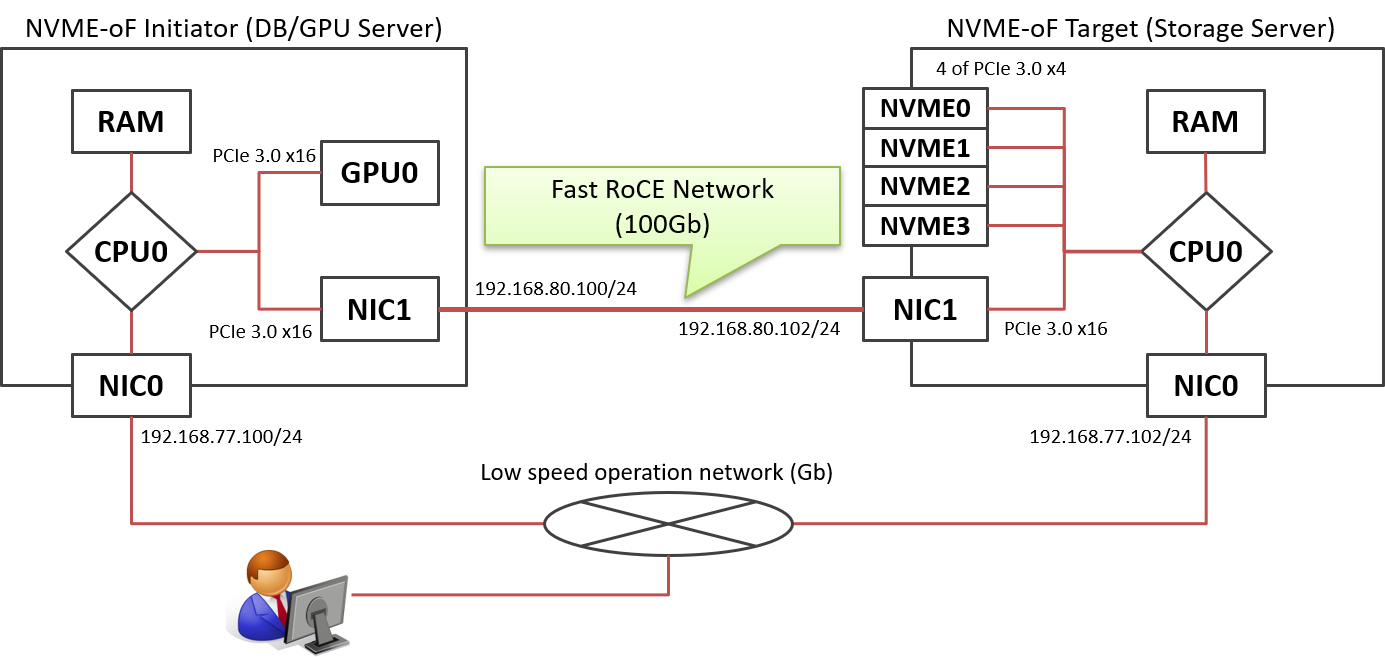

We assume the simple system landscape shown in the figure. There are two nodes: one is the NVME-oF target (generically, the storage server), and the other is the NVME-oF initiator (generically, the storage client). They are connected over a dedicated fast RoCE network (192.168.80.0/24) with no L3 gateway in between. They are also connected to the operation network (192.168.77.0/24), which applications and users use for daily operations.

The NVME-oF target has 4x NVME-SSD drives installed, connected to the same PCIe root complex as the network card on the RoCE network. We tested the configuration in this note with a Mellanox ConnectX-5, so some of the descriptions implicitly assume a Mellanox device.

The NVME-oF initiator also has a GPU device installed, connected to the same PCIe root complex as the network card. This is a hardware-level optimization for device-to-device RDMA.

On the NVME-oF target, install the rdma-core package and confirm that the mlx5_ib module is loaded.

# yum -y install rdma-core

# lsmod | grep mlx5

mlx5_ib 262895 0

:

Configure the nvmet-rdma module to be loaded at system startup, then rebuild the initramfs image of the Linux kernel.

# echo nvmet-rdma > /etc/modules-load.d/nvmet-rdma.conf

# dracut -f

The network configuration requires nothing special. Assign the IP address and related properties by editing /etc/sysconfig/network-scripts/ifcfg-IFNAME or by using the nmtui command.

In this example, the IP address is 192.168.80.102/24 and the MTU is 9000.
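As a minimal sketch of the file-editing option, the function below prints an ifcfg file carrying the address and MTU used in this note. The function name `print_roce_ifcfg` and the interface name `enp1s0f0` are hypothetical placeholders, and `TYPE=Ethernet`, `BOOTPROTO=none` and `ONBOOT=yes` are assumptions of this sketch rather than settings taken from the tested system.

```shell
#!/bin/sh
# Print an ifcfg file for the RoCE interface on stdout.
# $1: interface name (hypothetical default), $2: IPv4 address,
# $3: prefix length, $4: MTU
print_roce_ifcfg() {
    ifname="${1:-enp1s0f0}"
    ipaddr="${2:-192.168.80.102}"
    prefix="${3:-24}"
    mtu="${4:-9000}"
    cat <<EOF
DEVICE=${ifname}
TYPE=Ethernet
BOOTPROTO=none
ONBOOT=yes
IPADDR=${ipaddr}
PREFIX=${prefix}
MTU=${mtu}
EOF
}
```

For example, `print_roce_ifcfg enp1s0f0 > /etc/sysconfig/network-scripts/ifcfg-enp1s0f0` would write the file; the interface then has to be brought down and up again before the new settings apply.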

We can set up NVME-oF target devices through ordinary filesystem operations on configfs (/sys/kernel/config).

First, create a subsystem, which is the unit of devices and network ports exported via the NVME-oF protocol.

In this example, the new subsystem is named nvme-iwashi and, for simplicity, accepts connections from any host.

# cd /sys/kernel/config/nvmet/subsystems/

# mkdir -p nvme-iwashi

# cd nvme-iwashi/

# echo 1 > attr_allow_any_host

Second, create namespaces under the subsystem and associate them with the local NVME devices (/dev/nvme0n1 ... /dev/nvme3n1 in this case).

# cd namespaces/

# mkdir -p 1 2 3 4

# echo -n /dev/nvme0n1 > 1/device_path

# echo -n /dev/nvme1n1 > 2/device_path

# echo -n /dev/nvme2n1 > 3/device_path

# echo -n /dev/nvme3n1 > 4/device_path

# echo 1 > 1/enable

# echo 1 > 2/enable

# echo 1 > 3/enable

# echo 1 > 4/enable

Third, create a network port and assign its network parameters.

# cd /sys/kernel/config/nvmet/ports/

# mkdir -p 1

# echo 192.168.80.102 > 1/addr_traddr

# echo rdma > 1/addr_trtype

# echo 4420 > 1/addr_trsvcid

# echo ipv4 > 1/addr_adrfam

Finally, link the subsystem to the network port above using ln -sf. The link must be created in the subsystems/ directory of port 1.

# cd 1/subsystems/

# ln -sf ../../../subsystems/nvme-iwashi ./

That completes the setup on the NVME-oF target.
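The target-side steps can also be collected into one function. The following is a minimal sketch under stated assumptions, not the procedure used for the tested system: the function name `setup_nvmet_target` and the `NVMET_ROOT` variable are hypothetical and exist only so that the logic can be dry-run against a scratch directory. The `mkdir -p` calls on `namespaces/` and `ports/1/subsystems` are there for that dry-run case; on a real configfs those directories are created by the kernel and the calls should be no-ops.

```shell
#!/bin/sh
# Sketch: configure an NVME-oF target through configfs.
# $1: subsystem name, $2: RoCE IPv4 address, $3...: local NVME block devices
# NVMET_ROOT (hypothetical) overrides the configfs location for dry runs.
setup_nvmet_target() {
    nqn="$1"; addr="$2"; shift 2
    root="${NVMET_ROOT:-/sys/kernel/config/nvmet}"
    subsys="$root/subsystems/$nqn"

    # 1. Create the subsystem and let any host connect
    mkdir -p "$subsys" || return 1
    echo 1 > "$subsys/attr_allow_any_host" || return 1

    # 2. One namespace per device, numbered from 1
    nsid=1
    for dev in "$@"; do
        mkdir -p "$subsys/namespaces/$nsid" || return 1
        printf '%s' "$dev" > "$subsys/namespaces/$nsid/device_path" || return 1
        echo 1 > "$subsys/namespaces/$nsid/enable" || return 1
        nsid=$((nsid + 1))
    done

    # 3. Port 1: RDMA transport on the RoCE address, service id 4420
    port="$root/ports/1"
    mkdir -p "$port/subsystems" || return 1
    echo "$addr" > "$port/addr_traddr"  || return 1
    echo rdma    > "$port/addr_trtype"  || return 1
    echo 4420    > "$port/addr_trsvcid" || return 1
    echo ipv4    > "$port/addr_adrfam"  || return 1

    # 4. Export the subsystem through the port
    ln -s "$subsys" "$port/subsystems/$nqn"
}
```

With the values from this note, the call would be `setup_nvmet_target nvme-iwashi 192.168.80.102 /dev/nvme0n1 /dev/nvme1n1 /dev/nvme2n1 /dev/nvme3n1`, run as root after the nvmet-rdma module is loaded.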

The setup on the NVME-oF initiator is almost the same as on the target, except that it loads nvme-rdma instead of nvmet-rdma.

Install the nvme_strom package from the SWDC of HeteroDB. It includes a Linux kernel module that mediates SSD-to-GPU P2P RDMA, and a patched nvme-rdma module.

# yum -y install nvme_strom

As on the target, install the rdma-core package and confirm that the mlx5_ib module is loaded.

# yum -y install rdma-core

# lsmod | grep mlx5

mlx5_ib 262895 0

:

Configure the nvme-rdma module to be loaded at system startup, then rebuild the initramfs image of the Linux kernel.

# echo nvme-rdma > /etc/modules-load.d/nvme-rdma.conf

# dracut -f

The nvme_strom package replaces the inbox nvme-rdma module with a patched version that accepts physical addresses of PCIe devices for P2P RDMA. If the installation succeeded, modinfo nvme-rdma shows a kernel module stored in /lib/modules/KERNEL_VER/extra, not in /lib/modules/KERNEL_VER/kernel/drivers/nvme/host.

$ modinfo nvme-rdma

filename: /lib/modules/4.18.0-147.3.1.el8_1.x86_64/extra/nvme-rdma.ko.xz

version: 2.2

description: Enhanced nvme-rdma for SSD-to-GPU Direct SQL

license: GPL v2

rhelversion: 8.1

srcversion: 28FE12E176B093B644FA4F9

depends: nvme-fabrics,ib_core,nvme-core,rdma_cm

name: nvme_rdma

vermagic: 4.18.0-147.3.1.el8_1.x86_64 SMP mod_unload modversions

parm: register_always:Use memory registration even for contiguous memory regions (bool)
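The path check described above can be automated. The sketch below is an illustration only; the function name `is_extra_module` is hypothetical, and it tests nothing beyond whether the module file lives under an `extra` directory.

```shell
#!/bin/sh
# Return 0 when the given module file lives in an "extra" directory,
# i.e. it is not the inbox build under kernel/drivers/nvme/host.
is_extra_module() {
    case "$1" in
        /lib/modules/*/extra/*) return 0 ;;
        *)                      return 1 ;;
    esac
}

# Example (modinfo -n prints only the module's filename):
# is_extra_module "$(modinfo -n nvme-rdma)" && echo "patched nvme-rdma found"
```

A failing check suggests that the inbox module is still the one that would be loaded, in which case the nvme_strom installation is worth revisiting.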

First, try to discover the configured NVME-oF devices using the nvme command.

If the remote devices are not discovered correctly, go back to the previous steps and check the configuration, especially the network stack.

# nvme discover -t rdma -a 192.168.80.102 -s 4420

Discovery Log Number of Records 1, Generation counter 1

=====Discovery Log Entry 0======

trtype: rdma

adrfam: ipv4

subtype: nvme subsystem

treq: not specified

portid: 1

trsvcid: 4420

subnqn: nvme-iwashi

traddr: 192.168.80.102

rdma_prtype: not specified

rdma_qptype: connected

rdma_cms: rdma-cm

rdma_pkey: 0x0000
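When discovery is scripted, the fields needed for the connect step can be pulled out of this output. The filter below is a rough sketch that assumes the `key: value` layout shown above; the function name `list_discovered_subsystems` is hypothetical.

```shell
#!/bin/sh
# Read `nvme discover` output on stdin and print one line per
# discovery log entry: "<subnqn> <traddr> <trsvcid>".
list_discovered_subsystems() {
    awk '
        # Print the entry collected so far, then reset the fields
        function emit() {
            if (nqn != "") print nqn, addr, svc
            nqn = ""; addr = ""; svc = ""
        }
        /Discovery Log Entry/ { emit() }
        $1 == "trsvcid:"      { svc  = $2 }
        $1 == "subnqn:"       { nqn  = $2 }
        $1 == "traddr:"       { addr = $2 }
        END                   { emit() }
    '
}
```

A typical use would be `nvme discover -t rdma -a 192.168.80.102 -s 4420 | list_discovered_subsystems`, whose three output columns correspond to the -n, -a and -s options of nvme connect.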

Once remote NVME-oF devices are discovered, you can connect to the devices.

# nvme connect -t rdma -n nvme-iwashi -a 192.168.80.102 -s 4420

# ls -l /dev/nvme*

crw-------. 1 root root 10, 58 Nov 3 11:15 /dev/nvme-fabrics

crw-------. 1 root root 245, 0 Nov 3 11:22 /dev/nvme0

brw-rw----. 1 root disk 259, 0 Nov 3 11:22 /dev/nvme0n1

brw-rw----. 1 root disk 259, 2 Nov 3 11:22 /dev/nvme0n2

brw-rw----. 1 root disk 259, 4 Nov 3 11:22 /dev/nvme0n3

brw-rw----. 1 root disk 259, 6 Nov 3 11:22 /dev/nvme0n4

Note that the nvme command is distributed in the nvme-cli package. If it is not installed, run yum install -y nvme-cli.

Once the NVME-oF devices are connected, you can operate the drives as if they were local disks.

- Make partitions

# fdisk /dev/nvme0n1

# fdisk /dev/nvme0n2

# fdisk /dev/nvme0n3

# fdisk /dev/nvme0n4

- Make an md-raid0 volume

# mdadm -C /dev/md0 -c128 -l0 -n4 /dev/nvme0n?p1

# mdadm --detail --scan > /etc/mdadm.conf

- Format the drive

# mkfs.ext4 -LNVMEOF /dev/md0

- Mount the drive

# mount /dev/md0 /nvme/0

- Test SSD-to-GPU Direct

# ssd2gpu_test -d 0 /nvme/0/flineorder.arrow

GPU[0] Tesla V100-PCIE-32GB - file: /nvme/0/flineorder.arrow, i/o size: 681.73GB, buffer 32MB x 6

read: 681.74GB, time: 68.89sec, throughput: 9.90GB/s

nr_ram2gpu: 0, nr_ssd2gpu: 178712932, average DMA size: 128.0KB
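The partitioning, RAID, format and mount steps above can be scripted. The sketch below prints the commands by default and executes them only when `APPLY=1` is set. It swaps the interactive fdisk session for `parted -s`, which is a substitution made for this sketch and not the tool used in this note; the helper names `run` and `build_raid0` are hypothetical. It leaves out the `mdadm --detail --scan > /etc/mdadm.conf` step and assumes the mount point /nvme/0 already exists.

```shell
#!/bin/sh
# Print one command (default) or execute it (APPLY=1).
run() {
    if [ "${APPLY:-0}" = "1" ]; then "$@"; else echo "$*"; fi
}

# Partition namespaces 1..N of one NVME-oF controller and stripe them.
# $1: controller device (e.g. /dev/nvme0), $2: number of namespaces
build_raid0() {
    ctrl="$1"; count="$2"; parts=""
    n=1
    while [ "$n" -le "$count" ]; do
        # One GPT partition spanning the whole namespace
        run parted -s "${ctrl}n${n}" mklabel gpt mkpart primary 0% 100% || return 1
        parts="$parts ${ctrl}n${n}p1"
        n=$((n + 1))
    done
    # $parts is left unquoted on purpose: one argument per partition
    run mdadm -C /dev/md0 -c128 -l0 -n"$count" $parts || return 1
    run mkfs.ext4 -LNVMEOF /dev/md0 || return 1
    run mount /dev/md0 /nvme/0
}
```

Running `build_raid0 /dev/nvme0 4` without `APPLY=1` only lists the commands, which makes it easy to review them before touching the drives.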

You can shut down the NVME-oF devices as follows.

- Unmount the filesystem

# umount /nvme/0

- Shut down the md-raid0 device

# mdadm --misc --stop /dev/md0

- Disconnect from the NVME-oF target

# nvme disconnect -d /dev/nvme0

- Unlink the network port from the subsystem (this and the following steps run on the NVME-oF target)

# rm -f /sys/kernel/config/nvmet/ports/*/subsystems/*

- Remove the network port

# rmdir /sys/kernel/config/nvmet/ports/*

- Remove all the namespaces from the subsystem

# rmdir /sys/kernel/config/nvmet/subsystems/nvme-iwashi/namespaces/*

- Remove the subsystem

# rmdir /sys/kernel/config/nvmet/subsystems/*

- Author: KaiGai Kohei kaigai@heterodb.com

- Initial Date: 18-Jan-2020

- Software version: NVME-Strom v2.2