Scrapes Any LinkedIn Data

$ pip install git+git://github.com/jqueguiner/lk_scraper

$ docker-compose up -d

$ docker-compose run lk_scraper python3

First, you need to run a Selenium server:

$ docker run -d -p 4444:4444 --shm-size 2g selenium/standalone-firefox:3.141.59-20200326

After running this command, open a browser and navigate to your IP address followed by the port number and /grid/console. On the local machine, the address is http://localhost:4444/grid/console.
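The container can take a few seconds to start accepting sessions. As a rough sketch, the wait can be scripted instead of refreshing the console page by hand. The `/wd/hub/status` endpoint and the `value.ready` field are assumptions about how the Selenium 3 standalone server reports its state, and `grid_is_ready` and `wait_for_grid` are hypothetical helper names, not part of lk_scraper.

```python
import json
import time
import urllib.error
import urllib.request

# Assumed status endpoint of the Selenium 3 standalone server started above.
STATUS_URL = "http://localhost:4444/wd/hub/status"


def grid_is_ready(payload: str) -> bool:
    """Return True if a status response body reports the server as ready."""
    try:
        data = json.loads(payload)
    except json.JSONDecodeError:
        return False
    value = data.get("value") if isinstance(data, dict) else None
    return isinstance(value, dict) and value.get("ready") is True


def wait_for_grid(url: str = STATUS_URL, timeout: float = 30.0,
                  interval: float = 1.0) -> bool:
    """Poll the status endpoint until it reports ready or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=5) as response:
                if grid_is_ready(response.read().decode("utf-8")):
                    return True
        except (urllib.error.URLError, OSError):
            pass  # server is not accepting connections yet
        time.sleep(interval)
    return False
```

Calling `wait_for_grid()` before creating a `Scraper` returns `False` if the server never reports ready, which is usually a sign that the container did not start or that port 4444 is not published.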

Navigate to LinkedIn.com and log in

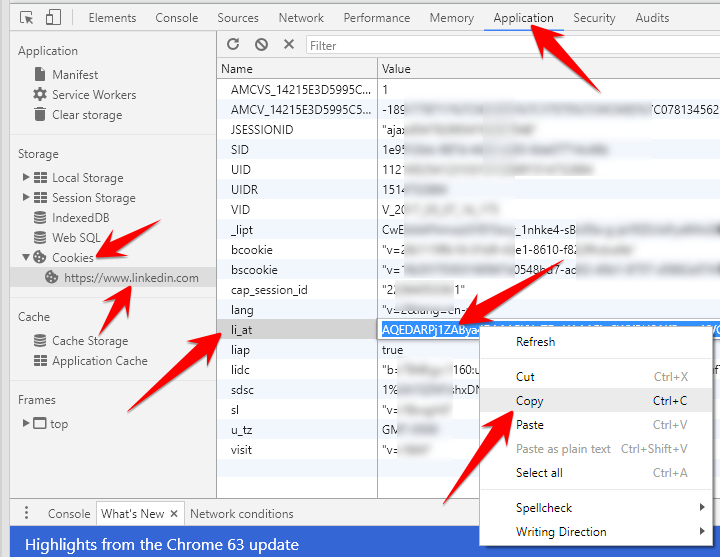

Open up the browser developer tools (Ctrl-Shift-I or right click -> inspect element)

In Chrome, select the Application tab

Under the Storage header on the left-hand menu, click the Cookies dropdown and select www.linkedin.com

Find the li_at cookie, and double-click the value to select it before copying

In Firefox, select the Storage tab

Click the Cookies dropdown and select www.linkedin.com

Find and copy the li_at value

You can add your LinkedIn li_at cookie to the config file located in your home directory (~/.lk_scraper/config.yml)

see

from lk_scraper import Scraper

li_at = "My_super_linkedin_cookie"

scraper = Scraper(li_at=li_at)

(Not implemented yet)

$ export LI_AT="My_super_linkedin_cookie"

Run the Jupyter notebook linkedin-example.ipynb
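If the installed version does not pick up the `LI_AT` environment variable by itself, one possible workaround is to read it by hand and pass it through the `li_at` parameter. This is only a sketch: `cookie_from_env` is a hypothetical helper, not part of lk_scraper.

```python
import os


def cookie_from_env(environ=None, name="LI_AT"):
    """Return the li_at cookie stored in an environment variable.

    `environ` defaults to os.environ; passing a plain dict makes the
    helper easy to exercise without touching the real environment.
    """
    env = os.environ if environ is None else environ
    value = env.get(name, "").strip()
    if not value:
        raise RuntimeError(f"{name} is not set; export it before starting Python")
    return value
```

With the variable exported in the shell, the scraper could then be created with `Scraper(li_at=cookie_from_env())`. Failing early with a clear message avoids starting a browser session with an empty cookie.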

from lk_scraper import Scraper

scraper = Scraper()

from lk_scraper import Scraper

scraper = Scraper()

company = scraper.get_object(object_name='company', object_id='apple')

from lk_scraper import Scraper

scraper = Scraper()

profil = scraper.get_object(object_name='profil', object_id='jlqueguiner')
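When several companies or profiles are needed, the same `get_object` call can be wrapped in a loop. The sketch below assumes only the `get_object(object_name=..., object_id=...)` signature shown above; `fetch_many` is a hypothetical helper, and the shape of the returned objects is left as whatever the library produces.

```python
def fetch_many(scraper, object_name, object_ids):
    """Call scraper.get_object once per id and collect the outcomes.

    Returns (results, errors): results maps each id to the scraped object,
    errors maps each failed id to the exception it raised.
    """
    results = {}
    errors = {}
    for object_id in object_ids:
        try:
            results[object_id] = scraper.get_object(
                object_name=object_name, object_id=object_id
            )
        except Exception as exc:  # broad on purpose: one bad id should not stop the run
            errors[object_id] = exc
    return results, errors
```

For example, `fetch_many(scraper, 'company', ['apple'])` would return the scraped company under the key `'apple'`, or record the exception under the same key if the lookup failed.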