
tsc --watch initial build 3x slower than tsc #34119

Comments

|

FWIW, the slowdown appears to come from the recursive application of

    export type DeepReadonlyObject<T> = {
      readonly [P in keyof T]: DeepReadonly<T[P]>
    }

And used like this:

    // ...
    : T extends {}
    ? DeepReadonlyObject<T>
    // ...

Then watch is just as fast as a regular tsc compile. Unfortunately, the editor tools don't display the type as nicely since they actually show |
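For readers who want the fragment above in context, here is a minimal sketch of a complete DeepReadonly that routes objects through the named DeepReadonlyObject helper. Only DeepReadonlyObject and the `T extends {}` branch come from the comment. The Primitive alias, the other branches, and the AppState example are assumptions made for illustration and are not the poster's actual type.

```typescript
// Hypothetical helper: value types that are returned unchanged.
type Primitive = string | number | boolean | symbol | null | undefined

// Sketch of a DeepReadonly whose object branch ends in a *named* alias.
type DeepReadonly<T> = T extends Primitive
  ? T
  : T extends (...args: any[]) => any
  ? T
  : T extends ReadonlyArray<infer U>
  ? ReadonlyArray<DeepReadonly<U>>
  : T extends {}
  ? DeepReadonlyObject<T>
  : T

// From the comment above: the named mapped type that handles objects.
// In a real module both aliases would be exported.
type DeepReadonlyObject<T> = {
  readonly [P in keyof T]: DeepReadonly<T[P]>
}

// Hypothetical state shape, used only to exercise the type.
interface AppState {
  user: { name: string; tags: string[] }
}

const state: DeepReadonly<AppState> = {
  user: { name: 'ada', tags: ['admin'] },
}

// Reads are fine; a write such as `state.user.name = 'x'` is a compile error.
const firstTag = state.user.tags[0]
```

The design point, as far as this thread suggests, is that the object branch ends in an alias with a name rather than in an inline mapped type.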

|

@aaronjensen Thanks for the feedback! I'm surprised to hear that A thousand file repro is fine, if we have access to the thousand files. 😉 They're not on GH, are they? Alternatively, we've just added a new |

They are, but in a private repo. So unless you have access to private repos or there's a way to temporarily grant it (I'd need my client's permission), then no, unfortunately.

Not that I can see. Here's my Here's the version the profiles were generated with And here are some profiles.

|

|

incremental uses ".d.ts" emit to decide what files need to be built. So please modify your tsconfig.json to remove |

|

EDIT This was with @sheetalkamat My original issue is about Here's the timing info from Note that I actually have some type errors in all of these builds that were introduced by 3.7 and I haven't taken the time to fix them yet. |

|

Yes, --watch uses the same logic as incremental (incremental just serializes to disk the partial data that --watch uses). As seen above, the issue lies with the emit time. |

|

Run again with the proper settings: And the faster one, run the same way: |

|

I suspect it's the same issue as a couple other threads - your type is all anonymous in the slow form, so everything gets printed structurally in a recursive fashion, resulting in a huge declaration file. The alternate form is faster because the printback terminates with a named type. To confirm:

|

|

@weswigham okay, will try. I've been running an incremental build with declaration: true and emitDeclarationOnly: true and it's been running for over 15 minutes... will let it keep going for a bit more but may have to come back to it later. |

|

The type emission for the fast version looks totally wrong. Here's an example property: fast: slow: This happens on 3.8 nightly and 3.6.4. The build took over 20 minutes, and it failed to emit every file it should have. I'll have to get a profile from it later. |

|

@weswigham The zip file posted above includes cpu profiles. I think you're right that it's spending too much time emitting declarations, probably because it's not using names. I'm seeing 65% of time in |

|

Specifically a lot of time in |

|

Yep, looking at the traces I see pretty much all the time spent in There's also a fair chunk of time spent in GC and in path component-related operations that might also need some optimization. I'm guessing the large number of import types we manufacture requires a large number of path calculations, which end up making a lot of garbage, so some caching may be in order here. |

|

Are you good on the CPU profiles I provided originally? Anything else you'd like me to try? In general, it'd be helpful to know the rules for when TypeScript expands a type and when it's able to give it a name. Looking at the generated types, it expands my entire Redux state everywhere it's used, which is pretty verbose. Is there anything circular referencing things that could cause that? |

|

@aaronjensen I think we have everything we need for now. @weswigham is there a mitigation we can suggest, other than rolling back to the original type? |

|

Okay. One thing that helps a little is this hack:

    type MyStateType = DeepReadonly<
      {
        foo: FooState,
        bar: BarState
      }
    >

    export interface MyState extends MyStateType {}

Is it a (separate) issue that is worth opening the

    import { DeepReadonly } from 'utility-types'

    export type Foo = DeepReadonly<{ foo: string }>

and in

    import { Foo } from './foo'

    export const useFoo = ({ foo }: { foo: Foo }) => {}

Turns into in

    export declare const useFoo: ({ foo }: {
        foo: {
            readonly foo: string;
        };
    }) => void;

I'd like/expect to see:

    export declare const useFoo: ({ foo }: {
        foo: import("./foo").Foo
    }) => void;

edit it doesn't even need to be in a different file, if

    import { DeepReadonly } from 'utility-types';

    export declare type Foo = DeepReadonly<{
        foo: string;
    }>;

    export declare const useFoo: ({ foo }: {
        foo: {
            readonly foo: string;
        };
    }) => void;

|

Okay, it turns out that:

    import { DeepReadonly } from 'utility-types'

    export type Foo = DeepReadonly<{ foo: string }>

    export const useFoo: ({ foo }: { foo: Foo }) => void = ({ foo }) => {}

Results in:

    import { DeepReadonly } from 'utility-types';

    export declare type Foo = DeepReadonly<{
        foo: string;
    }>;

    export declare const useFoo: ({ foo }: {
        foo: Foo;
    }) => void;

That's definitely surprising. I figured it out while trying to track down a "XXX has or is using name ..." issue. Is there a way/plan to properly infer the original type instead of the expanded type in this situation? I'd imagine it'd actually help the emit situation in this original issue.

edit Apparently export function

    import { DeepReadonly } from 'utility-types'

    export type Foo = DeepReadonly<{ foo: string }>

    export const useFoo: ({ foo }: { foo: Foo }) => void = ({ foo }) => {}

    export function useFoo2({ foo }: { foo: Foo }) {
      return 2
    }

    import { DeepReadonly } from 'utility-types';

    export declare type Foo = DeepReadonly<{
        foo: string;
    }>;

    export declare const useFoo: ({ foo }: {
        foo: Foo;
    }) => void;

    export declare function useFoo2({ foo }: {
        foo: Foo;
    }): number;

|

Created #34776 to track some of the optimizations we have in mind here. |

|

Thanks, @DanielRosenwasser. Any thoughts on this:

I'd be happy to open a new issue for it. Essentially, it'd be great if:

    const foo = (bar: Bar) => {}

resulted in emitting the same types as:

    function foo(bar: Bar) {}

|

It wouldn't rewrite as a function declaration, but here's what you probably have in mind: #34778 |

|

At least what I mean is that in that issue, you wouldn't have to think about the way |

|

Yes, that's what I meant, not that it would rewrite, but that it would emit with the type alias intact as it does when defined with |

|

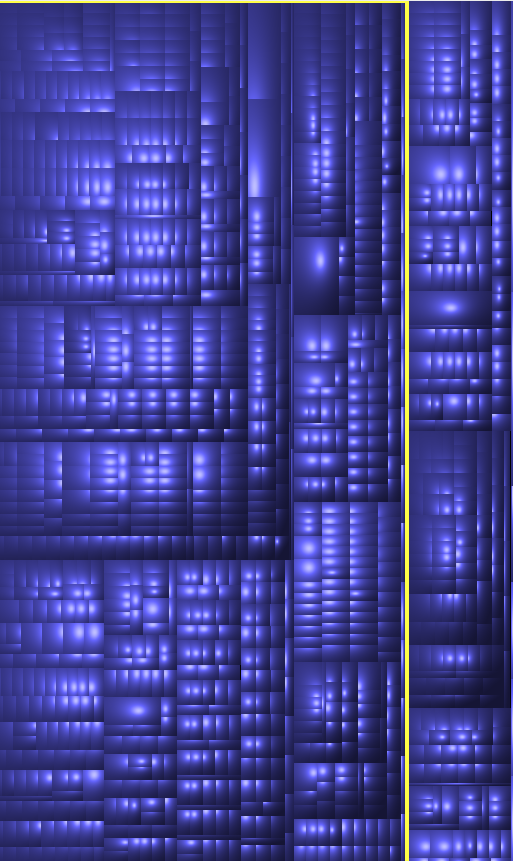

FWIW, I tracked down the bad declarations using an emit only build, and then used a file size explorer to determine which files were slowing the build down. This got my build a 3x speedup (70secs -> 20secs)
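The same search can be scripted instead of using a GUI disk explorer. Below is a minimal sketch, assuming Node.js and that an emit-only build has already written its .d.ts files into some output directory. The function names and the directory are hypothetical and are not part of the commenter's workflow.

```typescript
import * as fs from 'fs'
import * as path from 'path'

interface DeclarationSize {
  file: string
  bytes: number
}

// Recursively collect every .d.ts file under `dir` with its size in bytes.
function collectDeclarationSizes(dir: string): DeclarationSize[] {
  const results: DeclarationSize[] = []
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const fullPath = path.join(dir, entry.name)
    if (entry.isDirectory()) {
      results.push(...collectDeclarationSizes(fullPath))
    } else if (entry.isFile() && entry.name.endsWith('.d.ts')) {
      results.push({ file: fullPath, bytes: fs.statSync(fullPath).size })
    }
  }
  return results
}

// Return the `limit` largest declaration files, biggest first.
function largestDeclarations(dir: string, limit = 10): DeclarationSize[] {
  return collectDeclarationSizes(dir)
    .sort((a, b) => b.bytes - a.bytes)
    .slice(0, limit)
}
```

Calling `largestDeclarations('./declarations')` after a build with declaration and emitDeclarationOnly enabled (and the output directed to `./declarations`, an assumed location) lists the files most likely to contain structurally expanded types.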

I used Disk Inventory X and found big files and cut them down. Before: |

|

As far as I understand, switching from type aliases to interfaces seems to help reduce the emitted declaration size (and therefore speed up declaration emit time). Related Twitter thread: https://twitter.com/drosenwasser/status/1319205169918144513. However, this doesn't help in cases where the problematic types are generated dynamically using mapped types, as is the case when using tools such as io-ts or Unionize. What can we do in these cases? It seems to me that the only solution would be for TS to treat type aliases in the same way it treats interfaces. That is, always preserve the display name and never inline the structure. Related: #32287. /cc @DanielRosenwasser |

In some cases, you can mask it as well, as I'm doing for mobx-state-tree models. It's super verbose but works.
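As a generic illustration of masking a computed type behind an interface, here is a minimal sketch. It is not the commenter's mobx-state-tree code, which is not preserved in this thread. The DeepReadonly helper, TodoStateShape, TodoState, and countDone are all hypothetical names, and the pattern only applies when the computed type has statically known members.

```typescript
// Hypothetical recursive readonly helper, standing in for a library type.
type DeepReadonly<T> = T extends (infer U)[]
  ? ReadonlyArray<DeepReadonly<U>>
  : T extends object
  ? { readonly [K in keyof T]: DeepReadonly<T[K]> }
  : T

// The computed type; per this thread, declaration emit may expand it structurally.
type TodoStateShape = DeepReadonly<{
  todos: { title: string; done: boolean }[]
  filter: string
}>

// The mask: an empty interface gives the same shape a stable name.
interface TodoState extends TodoStateShape {}

// Annotating with the interface keeps the signature short and named.
function countDone(state: TodoState): number {
  return state.todos.filter((todo) => todo.done).length
}
```

The cost is one extra alias and one empty interface per masked type, which matches the "verbose but works" trade-off described above.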

|

All these obscure steps are things that would be better done by TypeScript internally.

TypeScript Version: 3.7.0-dev.20191011, 3.6.4, 3.5.2

Search Terms:

DeepReadonly

slow watch mode

Code

The slowness occurs on a codebase of around 1000 files. I can't distill it into a repro, but I can show the type that causes the slowness and an alternate type that does not.

I noticed the slowness when I replaced our implementation of DeepReadonly with the one from ts-essentials. One thing I should note, in case it is helpful, is that in our codebase DeepReadonly is only used about 80 times. It's also used nested in some instances: a DeepReadonly type is included as a property of another DeepReadonly type, for example.

Here is the type from ts-essentials:

Here is ours:

Expected behavior:

Both types, when used in our codebase, would take a similar amount of time for both a tsc and the initial build of tsc --watch.

Actual behavior:

Our original DeepReadonly takes about 47 seconds to build using tsc. The initial build with tsc --watch also takes a similar amount of time, around 49 seconds.

With the ts-essentials version, a tsc build takes around 48 seconds. The initial build with tsc --watch takes anywhere from 3-5 minutes.

Playground Link:

N/A

Related Issues:

None for sure.
