ImVoxelNet: Image to Voxels Projection for Monocular and Multi-View General-Purpose 3D Object Detection

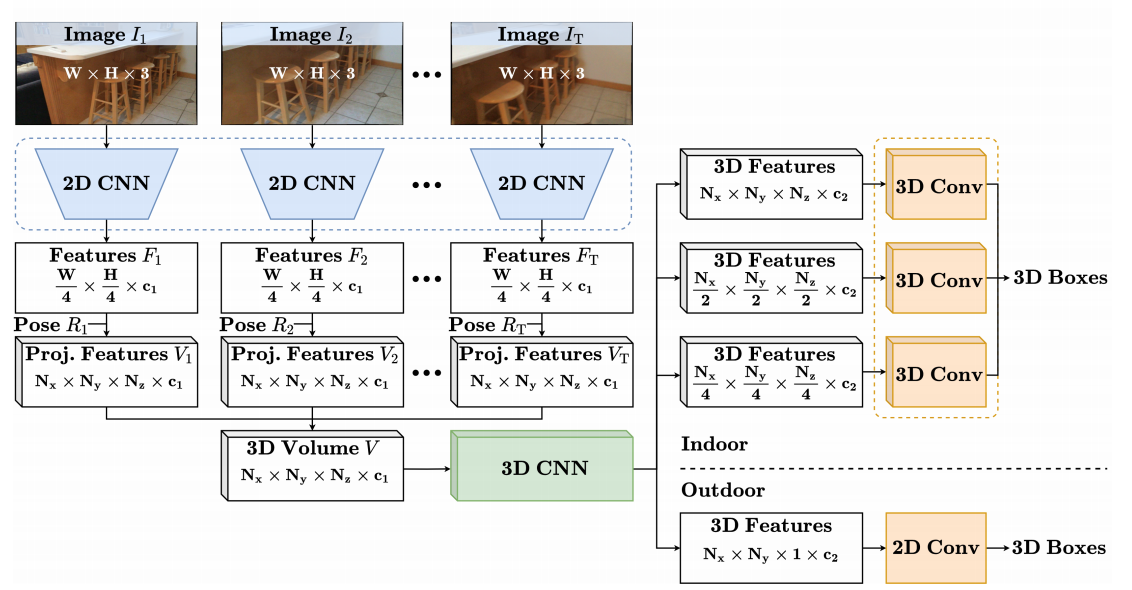

In this paper, we introduce the task of multi-view RGB-based 3D object detection as an end-to-end optimization problem. To address this problem, we propose ImVoxelNet, a novel fully convolutional method for 3D object detection based on posed monocular or multi-view RGB images. The number of monocular images in each multi-view input can vary during training and inference; in fact, this number might be unique for each multi-view input. ImVoxelNet successfully handles both indoor and outdoor scenes, which makes it general-purpose. Specifically, it achieves state-of-the-art results in car detection on the KITTI (monocular) and nuScenes (multi-view) benchmarks among all methods that accept RGB images. Moreover, it surpasses existing RGB-based 3D object detection methods on the SUN RGB-D dataset. On ScanNet, ImVoxelNet sets a new benchmark for multi-view 3D object detection.
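The image-to-voxel projection named in the title can be illustrated with a small NumPy sketch. This is not the authors' implementation: the function names `project_points` and `aggregate_views`, the nearest-pixel sampling, and the plain averaging over views are all assumptions made for illustration. It shows how 3D voxel centers could be projected into each posed image and how features could be averaged over however many views see each voxel, so the number of views can differ per input.

```python
import numpy as np


def project_points(points, intrinsic, extrinsic, height, width):
    """Project world-space points (N, 3) into one image.

    intrinsic: (3, 3) camera matrix; extrinsic: (4, 4) world-to-camera pose.
    Returns integer pixel columns u, rows v, and a boolean validity mask.
    """
    homo = np.concatenate([points, np.ones((len(points), 1))], axis=1)  # (N, 4)
    cam = (extrinsic @ homo.T)[:3]          # (3, N) points in the camera frame
    uvw = intrinsic @ cam                   # (3, N) homogeneous pixel coordinates
    depth = uvw[2]
    in_front = depth > 1e-6                 # discard points behind the camera
    safe_depth = np.where(in_front, depth, 1.0)  # avoid division by zero
    u = np.floor(uvw[0] / safe_depth).astype(int)
    v = np.floor(uvw[1] / safe_depth).astype(int)
    valid = in_front & (u >= 0) & (u < width) & (v >= 0) & (v < height)
    return u, v, valid


def aggregate_views(points, feature_maps, intrinsics, extrinsics):
    """Average per-view 2D features at the projections of each 3D point.

    feature_maps is a list of (C, H, W) arrays; its length may vary per input.
    Points seen by no view keep a zero feature vector (an assumption).
    """
    channels = feature_maps[0].shape[0]
    total = np.zeros((len(points), channels))
    count = np.zeros(len(points))
    for fmap, K, T in zip(feature_maps, intrinsics, extrinsics):
        _, h, w = fmap.shape
        u, v, valid = project_points(points, K, T, h, w)
        total[valid] += fmap[:, v[valid], u[valid]].T  # nearest-pixel lookup
        count += valid
    return total / np.maximum(count, 1)[:, None], count
```

In this sketch the monocular case is simply a list with one feature map, which is why the same code path can serve both monocular and multi-view inputs.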

We implement the monocular 3D detector ImVoxelNet and provide its results and checkpoints on the KITTI and SUN RGB-D datasets. Inference speed is measured on a single NVIDIA RTX 3090 GPU. Results for ScanNet and nuScenes are currently available in the ImVoxelNet authors' repository (based on mmdetection3d).

KITTI

| Backbone | Class | Lr schd | Mem (GB) | Inf time (fps) | mAP | Download |
|---|---|---|---|---|---|---|
| ResNet-50 | Car | 3x | 14.8 | 8.4 | 17.26 | model \| log |

SUN RGB-D

| Backbone | Lr schd | Mem (GB) | Inf time (fps) | mAP@0.25 | mAP@0.5 | Download |
|---|---|---|---|---|---|---|
| ResNet-50 | 2x | 7.2 | 22.5 | 40.96 | 13.50 | model \| log |

@inproceedings{rukhovich2021imvoxelnet,
  title={ImVoxelNet: Image to Voxels Projection for Monocular and Multi-View General-Purpose 3D Object Detection},
  author={Danila Rukhovich and Anna Vorontsova and Anton Konushin},
  booktitle={Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision},
  pages={2397--2406},
  year={2022}
}