diff --git a/.circleci/config.yml b/.circleci/config.yml

index d2125123c3..fd4dc7f12f 100644

--- a/.circleci/config.yml

+++ b/.circleci/config.yml

@@ -30,7 +30,7 @@ jobs:

name: Build documentation

command: |

source venv/bin/activate

- tox -e docs -vv

+ tox -e compile,docs -vv

- store_artifacts:

path: docs/src/_build

diff --git a/.gitignore b/.gitignore

index aef8db9736..c3ba120f37 100644

--- a/.gitignore

+++ b/.gitignore

@@ -7,6 +7,8 @@

*.o

*.so

*.pyc

+*.pyo

+*.pyd

# Packages #

############

diff --git a/.travis.yml b/.travis.yml

index 3cbccc0b0a..e8df82ceec 100644

--- a/.travis.yml

+++ b/.travis.yml

@@ -13,7 +13,10 @@ language: python

matrix:

include:

- python: '2.7'

- env: TOXENV="flake8"

+ env: TOXENV="flake8,flake8-docs"

+

+ - python: '3.6'

+ env: TOXENV="flake8,flake8-docs"

- python: '2.7'

env: TOXENV="py27-linux"

@@ -24,5 +27,13 @@ matrix:

- python: '3.6'

env: TOXENV="py36-linux"

+ - python: '3.7'

+ env:

+ - TOXENV="py37-linux"

+ - BOTO_CONFIG="/dev/null"

+ dist: xenial

+ sudo: true

+

+

install: pip install tox

script: tox -vv

diff --git a/CHANGELOG.md b/CHANGELOG.md

index 7dd69eb6de..3e51b0f8dd 100644

--- a/CHANGELOG.md

+++ b/CHANGELOG.md

@@ -1,5 +1,107 @@

Changes

===========

+## 3.6.0, 2018-09-20

+

+### :star2: New features

+* File-based training for `*2Vec` models (__[@persiyanov](https://github.com/persiyanov)__, [#2127](https://github.com/RaRe-Technologies/gensim/pull/2127) & [#2078](https://github.com/RaRe-Technologies/gensim/pull/2078) & [#2048](https://github.com/RaRe-Technologies/gensim/pull/2048))

+

+ New training mode for `*2Vec` models (word2vec, doc2vec, fasttext) that allows model training to scale linearly with the number of cores (full GIL elimination). The result of our Google Summer of Code 2018 project by Dmitry Persiyanov.

+

+ **Benchmark**

+ - Dataset: `full English Wikipedia`

+ - Cloud: `GCE`

+ - CPU: `Intel(R) Xeon(R) CPU @ 2.30GHz 32 cores`

+ - BLAS: `MKL`

+

+

+ | Model | Queue-based version [sec] | File-based version [sec] | speed up | Accuracy (queue-based) | Accuracy (file-based) |

+ |-------|------------|--------------------|----------|----------------|-----------------------|

+ | Word2Vec | 9230 | **2437** | **3.79x** | 0.754 (± 0.003) | 0.750 (± 0.001) |

+ | Doc2Vec | 18264 | **2889** | **6.32x** | 0.721 (± 0.002) | 0.683 (± 0.003) |

+ | FastText | 16361 | **10625** | **1.54x** | 0.642 (± 0.002) | 0.660 (± 0.001) |

+

+ Usage:

+

+ ```python

+ import gensim.downloader as api

+ from multiprocessing import cpu_count

+ from gensim.utils import save_as_line_sentence

+ from gensim.test.utils import get_tmpfile

+ from gensim.models import Word2Vec, Doc2Vec, FastText

+

+

+ # Convert any corpus to the needed format: 1 document per line, words delimited by " "

+ corpus = api.load("text8")

+ corpus_fname = get_tmpfile("text8-file-sentence.txt")

+ save_as_line_sentence(corpus, corpus_fname)

+

+ # Choose num of cores that you want to use (let's use all, models scale linearly now!)

+ num_cores = cpu_count()

+

+ # Train models using all cores

+ w2v_model = Word2Vec(corpus_file=corpus_fname, workers=num_cores)

+ d2v_model = Doc2Vec(corpus_file=corpus_fname, workers=num_cores)

+ ft_model = FastText(corpus_file=corpus_fname, workers=num_cores)

+

+ ```

+ [Read notebook tutorial with full description.](https://github.com/RaRe-Technologies/gensim/blob/develop/docs/notebooks/Any2Vec_Filebased.ipynb)

+

+

+### :+1: Improvements

+

+* Add scikit-learn wrapper for `FastText` (__[@mcemilg](https://github.com/mcemilg)__, [#2178](https://github.com/RaRe-Technologies/gensim/pull/2178))

+* Add multiprocessing support for `BM25` (__[@Shiki-H](https://github.com/Shiki-H)__, [#2146](https://github.com/RaRe-Technologies/gensim/pull/2146))

+* Add `name_only` option for downloader api (__[@aneesh-joshi](https://github.com/aneesh-joshi)__, [#2143](https://github.com/RaRe-Technologies/gensim/pull/2143))

+* Make `word2vec2tensor` script compatible with `python3` (__[@vsocrates](https://github.com/vsocrates)__, [#2147](https://github.com/RaRe-Technologies/gensim/pull/2147))

+* Add custom filter for `Wikicorpus` (__[@mattilyra](https://github.com/mattilyra)__, [#2089](https://github.com/RaRe-Technologies/gensim/pull/2089))

+* Make `similarity_matrix` support non-contiguous dictionaries (__[@Witiko](https://github.com/Witiko)__, [#2047](https://github.com/RaRe-Technologies/gensim/pull/2047))

+

+

+### :red_circle: Bug fixes

+

+* Fix memory consumption in `AuthorTopicModel` (__[@philipphager](https://github.com/philipphager)__, [#2122](https://github.com/RaRe-Technologies/gensim/pull/2122))

+* Correctly process empty documents in `AuthorTopicModel` (__[@probinso](https://github.com/probinso)__, [#2133](https://github.com/RaRe-Technologies/gensim/pull/2133))

+* Fix ZeroDivisionError `keywords` issue with short input (__[@LShostenko](https://github.com/LShostenko)__, [#2154](https://github.com/RaRe-Technologies/gensim/pull/2154))

+* Fix `min_count` handling in phrases detection using `npmi_scorer` (__[@lopusz](https://github.com/lopusz)__, [#2072](https://github.com/RaRe-Technologies/gensim/pull/2072))

+* Remove duplicate count from `Phraser` log message (__[@robguinness](https://github.com/robguinness)__, [#2151](https://github.com/RaRe-Technologies/gensim/pull/2151))

+* Replace `np.integer` -> `np.int` in `AuthorTopicModel` (__[@menshikh-iv](https://github.com/menshikh-iv)__, [#2145](https://github.com/RaRe-Technologies/gensim/pull/2145))

+

+

+### :books: Tutorial and doc improvements

+

+* Update docstring with new analogy evaluation method (__[@akutuzov](https://github.com/akutuzov)__, [#2130](https://github.com/RaRe-Technologies/gensim/pull/2130))

+* Improve `prune_at` parameter description for `gensim.corpora.Dictionary` (__[@yxonic](https://github.com/yxonic)__, [#2128](https://github.com/RaRe-Technologies/gensim/pull/2128))

+* Fix `default` -> `auto` prior parameter in documentation for lda-related models (__[@Laubeee](https://github.com/Laubeee)__, [#2156](https://github.com/RaRe-Technologies/gensim/pull/2156))

+* Use heading instead of bold style in `gensim.models.translation_matrix` (__[@nzw0301](https://github.com/nzw0301)__, [#2164](https://github.com/RaRe-Technologies/gensim/pull/2164))

+* Fix quote of vocabulary from `gensim.models.Word2Vec` (__[@nzw0301](https://github.com/nzw0301)__, [#2161](https://github.com/RaRe-Technologies/gensim/pull/2161))

+* Replace deprecated parameters with new in docstring of `gensim.models.Doc2Vec` (__[@xuhdev](https://github.com/xuhdev)__, [#2165](https://github.com/RaRe-Technologies/gensim/pull/2165))

+* Fix formula in Mallet documentation (__[@Laubeee](https://github.com/Laubeee)__, [#2186](https://github.com/RaRe-Technologies/gensim/pull/2186))

+* Fix minor semantic issue in docs for `Phrases` (__[@RunHorst](https://github.com/RunHorst)__, [#2148](https://github.com/RaRe-Technologies/gensim/pull/2148))

+* Fix typo in documentation (__[@KenjiOhtsuka](https://github.com/KenjiOhtsuka)__, [#2157](https://github.com/RaRe-Technologies/gensim/pull/2157))

+* Additional documentation fixes (__[@piskvorky](https://github.com/piskvorky)__, [#2121](https://github.com/RaRe-Technologies/gensim/pull/2121))

+

+### :warning: Deprecations (will be removed in the next major release)

+

+* Remove

+ - `gensim.models.wrappers.fasttext` (obsoleted by the new native `gensim.models.fasttext` implementation)

+ - `gensim.examples`

+ - `gensim.nosy`

+ - `gensim.scripts.word2vec_standalone`

+ - `gensim.scripts.make_wiki_lemma`

+ - `gensim.scripts.make_wiki_online`

+ - `gensim.scripts.make_wiki_online_lemma`

+ - `gensim.scripts.make_wiki_online_nodebug`

+ - `gensim.scripts.make_wiki` (all of these obsoleted by the new native `gensim.scripts.segment_wiki` implementation)

+ - "deprecated" functions and attributes

+

+* Move

+ - `gensim.scripts.make_wikicorpus` ➡ `gensim.scripts.make_wiki.py`

+ - `gensim.summarization` ➡ `gensim.models.summarization`

+ - `gensim.topic_coherence` ➡ `gensim.models._coherence`

+ - `gensim.utils` ➡ `gensim.utils.utils` (old imports will continue to work)

+ - `gensim.parsing.*` ➡ `gensim.utils.text_utils`

+

+

## 3.5.0, 2018-07-06

This release comprises a glorious 38 pull requests from 28 contributors. Most of the effort went into improving the documentation—hence the release code name "Docs 💬"!

@@ -202,7 +304,7 @@ Apart from the **massive overhaul of all Gensim documentation** (including docst

- `gensim.parsing.*` ➡ `gensim.utils.text_utils`

-## 3.3.0, 2018-01-02

+## 3.3.0, 2018-02-02

:star2: New features:

* Re-designed all "*2vec" implementations (__[@manneshiva](https://github.com/manneshiva)__, [#1777](https://github.com/RaRe-Technologies/gensim/pull/1777))

diff --git a/MANIFEST.in b/MANIFEST.in

index 9bfc31660f..da4b2ee47e 100644

--- a/MANIFEST.in

+++ b/MANIFEST.in

@@ -4,14 +4,29 @@ include CHANGELOG.md

include COPYING

include COPYING.LESSER

include ez_setup.py

+

include gensim/models/voidptr.h

+include gensim/models/fast_line_sentence.h

+

include gensim/models/word2vec_inner.c

include gensim/models/word2vec_inner.pyx

include gensim/models/word2vec_inner.pxd

+include gensim/models/word2vec_corpusfile.cpp

+include gensim/models/word2vec_corpusfile.pyx

+include gensim/models/word2vec_corpusfile.pxd

+

include gensim/models/doc2vec_inner.c

include gensim/models/doc2vec_inner.pyx

+include gensim/models/doc2vec_inner.pxd

+include gensim/models/doc2vec_corpusfile.cpp

+include gensim/models/doc2vec_corpusfile.pyx

+

include gensim/models/fasttext_inner.c

include gensim/models/fasttext_inner.pyx

+include gensim/models/fasttext_inner.pxd

+include gensim/models/fasttext_corpusfile.cpp

+include gensim/models/fasttext_corpusfile.pyx

+

include gensim/models/_utils_any2vec.c

include gensim/models/_utils_any2vec.pyx

include gensim/corpora/_mmreader.c

diff --git a/README.md b/README.md

index d2b9e865f5..78c2209f42 100644

--- a/README.md

+++ b/README.md

@@ -119,29 +119,23 @@ Documentation

Adopters

--------

-

-

-| Name | Logo | URL | Description |

-|----------------------------------------|--------------------------------------------------------------------------------------------------------------------------------|--------------------------------------------------------------------------------------------------|-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

-| RaRe Technologies |  | [rare-technologies.com](http://rare-technologies.com) | Machine learning & NLP consulting and training. Creators and maintainers of Gensim. |

-| Mindseye |  | [mindseye.com](http://www.mindseyesolutions.com/) | Similarities in legal documents |

-| Talentpair |  | [talentpair.com](http://talentpair.com) | Data science driving high-touch recruiting |

-| Tailwind | | [Tailwindapp.com](https://www.tailwindapp.com/)| Post interesting and relevant content to Pinterest |

-| Issuu |  | [Issuu.com](https://issuu.com/)| Gensim’s LDA module lies at the very core of the analysis we perform on each uploaded publication to figure out what it’s all about.

-| Sports Authority |  | [sportsauthority.com](https://en.wikipedia.org/wiki/Sports_Authority)| Text mining of customer surveys and social media sources |

-| Search Metrics |  | [searchmetrics.com](http://www.searchmetrics.com/)| Gensim word2vec used for entity disambiguation in Search Engine Optimisation

-| Cisco Security |  | [cisco.com](http://www.cisco.com/c/en/us/products/security/index.html)| Large-scale fraud detection

-| 12K Research | | [12k.co](https://12k.co/)| Document similarity analysis on media articles

-| National Institutes of Health |  | [github/NIHOPA](https://github.com/NIHOPA/pipeline_word2vec)| Processing grants and publications with word2vec

-| Codeq LLC |  | [codeq.com](https://codeq.com)| Document classification with word2vec

-| Mass Cognition |  | [masscognition.com](http://www.masscognition.com/) | Topic analysis service for consumer text data and general text data |

-| Stillwater Supercomputing |  | [stillwater-sc.com](http://www.stillwater-sc.com/) | Document comprehension and association with word2vec |

-| Channel 4 |  | [channel4.com](http://www.channel4.com/) | Recommendation engine |

-| Amazon |  | [amazon.com](http://www.amazon.com/) | Document similarity|

-| SiteGround Hosting |  | [siteground.com](https://www.siteground.com/) | An ensemble search engine which uses different embeddings models and similarities, including word2vec, WMD, and LDA. |

-| Juju |  | [www.juju.com](http://www.juju.com/) | Provide non-obvious related job suggestions. |

-| NLPub |  | [nlpub.org](https://nlpub.org/) | Distributional semantic models including word2vec. |

-|Capital One |  | [www.capitalone.com](https://www.capitalone.com/) | Topic modeling for customer complaints exploration. |

+| Company | Logo | Industry | Use of Gensim |

+|---------|------|----------|---------------|

+| [RARE Technologies](http://rare-technologies.com) |  | ML & NLP consulting | Creators of Gensim – this is us! |

+| [Amazon](http://www.amazon.com/) |  | Retail | Document similarity. |

+| [National Institutes of Health](https://github.com/NIHOPA/pipeline_word2vec) |  | Health | Processing grants and publications with word2vec. |

+| [Cisco Security](http://www.cisco.com/c/en/us/products/security/index.html) |  | Security | Large-scale fraud detection. |

+| [Mindseye](http://www.mindseyesolutions.com/) |  | Legal | Similarities in legal documents. |

+| [Channel 4](http://www.channel4.com/) |  | Media | Recommendation engine. |

+| [Talentpair](http://talentpair.com) |  | HR | Candidate matching in high-touch recruiting. |

+| [Juju](http://www.juju.com/) |  | HR | Provide non-obvious related job suggestions. |

+| [Tailwind](https://www.tailwindapp.com/) |  | Media | Post interesting and relevant content to Pinterest. |

+| [Issuu](https://issuu.com/) |  | Media | Gensim's LDA module lies at the very core of the analysis we perform on each uploaded publication to figure out what it's all about. |

+| [Search Metrics](http://www.searchmetrics.com/) |  | Content Marketing | Gensim word2vec used for entity disambiguation in Search Engine Optimisation. |

+| [12K Research](https://12k.co/) | | Media | Document similarity analysis on media articles. |

+| [Stillwater Supercomputing](http://www.stillwater-sc.com/) |  | Hardware | Document comprehension and association with word2vec. |

+| [SiteGround](https://www.siteground.com/) |  | Web hosting | An ensemble search engine which uses different embeddings models and similarities, including word2vec, WMD, and LDA. |

+| [Capital One](https://www.capitalone.com/) |  | Finance | Topic modeling for customer complaints exploration. |

-------

diff --git a/appveyor.yml b/appveyor.yml

index 04da45cd43..c9bbf02931 100644

--- a/appveyor.yml

+++ b/appveyor.yml

@@ -28,6 +28,11 @@ environment:

PYTHON_ARCH: "64"

TOXENV: "py36-win"

+ - PYTHON: "C:\\Python37-x64"

+ PYTHON_VERSION: "3.7.0"

+ PYTHON_ARCH: "64"

+ TOXENV: "py37-win"

+

init:

- "ECHO %PYTHON% %PYTHON_VERSION% %PYTHON_ARCH%"

- "ECHO \"%APPVEYOR_SCHEDULED_BUILD%\""

diff --git a/docs/notebooks/Any2Vec_Filebased.ipynb b/docs/notebooks/Any2Vec_Filebased.ipynb

new file mode 100644

index 0000000000..0ad4c2a282

--- /dev/null

+++ b/docs/notebooks/Any2Vec_Filebased.ipynb

@@ -0,0 +1,550 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "# *2Vec File-based Training: API Tutorial\n",

+ "\n",

+ "This tutorial introduces a new file-based training mode for **`gensim.models.{Word2Vec, FastText, Doc2Vec}`** which leads to (much) faster training on machines with many cores. Below we demonstrate how to use this new mode, with Python examples."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## In this tutorial\n",

+ "\n",

+ "1. We will show how to use the new training mode on Word2Vec, FastText and Doc2Vec.\n",

+ "2. Evaluate the performance of file-based training on the English Wikipedia and compare it to the existing queue-based training.\n",

+ "3. Show that model quality (analogy accuracy on `questions-words.txt`) is almost the same for both modes."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Motivation\n",

+ "\n",

+ "The original implementation of Word2Vec training in Gensim is already super fast (covered in [this blog series](https://rare-technologies.com/word2vec-in-python-part-two-optimizing/), see also [benchmarks against other implementations in Tensorflow, DL4J, and C](https://rare-technologies.com/machine-learning-hardware-benchmarks/)) and flexible, allowing you to train on arbitrary Python streams. We had to jump through [some serious hoops](https://www.youtube.com/watch?v=vU4TlwZzTfU) to make it so, avoiding the Global Interpreter Lock (the dreaded GIL, the main bottleneck for any serious high performance computation in Python).\n",

+ "\n",

+ "The end result worked great for modest machines (< 8 cores), but for higher-end servers, the GIL reared its ugly head again. Simply managing the input stream iterators and worker queues, which has to be done in Python holding the GIL, was becoming the bottleneck. In short, the Python implementation didn't scale linearly with cores the way the original C implementation by Tomáš Mikolov did."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ ""

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "We decided to change that. After [much](https://github.com/RaRe-Technologies/gensim/pull/2127) [experimentation](https://github.com/RaRe-Technologies/gensim/pull/2048#issuecomment-401494412) and [benchmarking](https://persiyanov.github.io/jekyll/update/2018/05/28/gsoc-first-weeks.html), including some pretty [hardcore outlandish ideas](https://github.com/RaRe-Technologies/gensim/pull/2127#issuecomment-405937741), we figured there's no way around the GIL limitations—not at the level of fine-tuned performance needed here. Remember, we're talking >500k words (training instances) per second, using highly optimized C code. Way past the naive \"vectorize with NumPy arrays\" territory.\n",

+ "\n",

+ "So we decided to introduce a new code path, which has *less flexibility* in favour of *more performance*. We call this code path **`file-based training`**, and it's realized by passing a new `corpus_file` parameter to training. The existing `sentences` parameter (queue-based training) is still available, and you can continue using it without any change: there's **full backward compatibility**."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## How it works\n",

+ "\n",

+ "\n",

+ "\n",

+ "| *code path* | *input parameter* | *advantages* | *disadvantages* |\n",

+ "| :-------- | :-------- | :--------- | :----------- |\n",

+ "| queue-based training (existing) | `sentences` (Python iterable) | Input can be generated dynamically from any storage, or even on-the-fly. | Scaling plateaus after 8 cores. |\n",

+ "| file-based training (new) | `corpus_file` (file on disk) | Scales linearly with CPU cores. | Training corpus must be serialized to disk in a specific format. |"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "When you specify `corpus_file`, the model will read and process different portions of the file with different workers. The entire bulk of work is done outside of the GIL, using no Python structures at all. The workers update the same weight matrix, but otherwise there's no communication: each worker munches on its data portion completely independently. This is the same approach the original C tool uses.\n",

+ "\n",

+ "Training with `corpus_file` yields a **significant performance boost**: for example, in the experiment below, training is 3.7x faster with 32 workers than training with the `sentences` argument. It even outperforms the original Word2Vec C tool in terms of words/sec processing speed on high-core machines.\n",

+ "\n",

+ "The limitation of this approach is that the `corpus_file` argument accepts only a path to your corpus file, which must be stored on disk in a specific format. The format is simply the well-known [gensim.models.word2vec.LineSentence](https://radimrehurek.com/gensim/models/word2vec.html#gensim.models.word2vec.LineSentence): one sentence per line, with words separated by spaces."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## How to use it\n",

+ "\n",

+ "You only need to:\n",

+ "\n",

+ "1. Save your corpus in the LineSentence format to disk (you may use [gensim.utils.save_as_line_sentence(your_corpus, your_corpus_file)](https://radimrehurek.com/gensim/utils.html#gensim.utils.save_as_line_sentence) for convenience).\n",

+ "2. Change the `sentences=your_corpus` argument to `corpus_file=your_corpus_file` in the `Word2Vec.__init__`, `Word2Vec.build_vocab`, and `Word2Vec.train` calls.\n",

+ "\n",

+ "\n",

+ "A short Word2Vec example:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 1,

+ "metadata": {},

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "1\n"

+ ]

+ }

+ ],

+ "source": [

+ "import gensim\n",

+ "import gensim.downloader as api\n",

+ "from gensim.utils import save_as_line_sentence\n",

+ "from gensim.models.word2vec import Word2Vec\n",

+ "\n",

+ "print(gensim.models.word2vec.CORPUSFILE_VERSION) # must be >= 0, i.e. optimized compiled version\n",

+ "\n",

+ "corpus = api.load(\"text8\")\n",

+ "save_as_line_sentence(corpus, \"my_corpus.txt\")\n",

+ "\n",

+ "model = Word2Vec(corpus_file=\"my_corpus.txt\", iter=5, size=300, workers=14)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "### Let's prepare the full Wikipedia dataset as training corpus\n",

+ "\n",

+ "We load the Wikipedia dump from `gensim-data`, preprocess the text with Gensim functions, and finally save the processed corpus in LineSentence format."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 3,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "CORPUS_FILE = 'wiki-en-20171001.txt'"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import itertools\n",

+ "from gensim.parsing.preprocessing import preprocess_string\n",

+ "\n",

+ "def processed_corpus():\n",

+ " raw_corpus = api.load('wiki-english-20171001')\n",

+ " for article in raw_corpus:\n",

+ " # concatenate all section titles and texts of each Wikipedia article into a single \"sentence\"\n",

+ " doc = '\\n'.join(itertools.chain.from_iterable(zip(article['section_titles'], article['section_texts'])))\n",

+ " yield preprocess_string(doc)\n",

+ "\n",

+ "# serialize the preprocessed corpus into a single file on disk, using memory-efficient streaming\n",

+ "save_as_line_sentence(processed_corpus(), CORPUS_FILE)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Word2Vec\n",

+ "\n",

+ "We train two models:\n",

+ "* With `sentences` argument\n",

+ "* With `corpus_file` argument\n",

+ "\n",

+ "\n",

+ "Then, we compare the timings and accuracy on `questions-words.txt`."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "from gensim.models.word2vec import LineSentence\n",

+ "import time\n",

+ "\n",

+ "start_time = time.time()\n",

+ "model_sent = Word2Vec(sentences=LineSentence(CORPUS_FILE), iter=5, size=300, workers=32)\n",

+ "sent_time = time.time() - start_time\n",

+ "\n",

+ "start_time = time.time()\n",

+ "model_corp_file = Word2Vec(corpus_file=CORPUS_FILE, iter=5, size=300, workers=32)\n",

+ "file_time = time.time() - start_time"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 5,

+ "metadata": {},

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Training model with `sentences` took 9494.237 seconds\n",

+ "Training model with `corpus_file` took 2566.170 seconds\n"

+ ]

+ }

+ ],

+ "source": [

+ "print(\"Training model with `sentences` took {:.3f} seconds\".format(sent_time))\n",

+ "print(\"Training model with `corpus_file` took {:.3f} seconds\".format(file_time))"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "**Training with `corpus_file` took 3.7x less time!**"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "Now, let's compare the accuracies:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 6,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "from gensim.test.utils import datapath"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 7,

+ "metadata": {},

+ "outputs": [

+ {

+ "name": "stderr",

+ "output_type": "stream",

+ "text": [

+ "/home/persiyanov/gensim/gensim/matutils.py:737: FutureWarning: Conversion of the second argument of issubdtype from `int` to `np.signedinteger` is deprecated. In future, it will be treated as `np.int64 == np.dtype(int).type`.\n",

+ " if np.issubdtype(vec.dtype, np.int):\n"

+ ]

+ },

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Word analogy accuracy with `sentences`: 75.4%\n",

+ "Word analogy accuracy with `corpus_file`: 74.8%\n"

+ ]

+ }

+ ],

+ "source": [

+ "model_sent_accuracy = model_sent.wv.evaluate_word_analogies(datapath('questions-words.txt'))[0]\n",

+ "print(\"Word analogy accuracy with `sentences`: {:.1f}%\".format(100.0 * model_sent_accuracy))\n",

+ "\n",

+ "model_corp_file_accuracy = model_corp_file.wv.evaluate_word_analogies(datapath('questions-words.txt'))[0]\n",

+ "print(\"Word analogy accuracy with `corpus_file`: {:.1f}%\".format(100.0 * model_corp_file_accuracy))"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "The accuracies are approximately the same."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## FastText"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "Short example:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 8,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import gensim.downloader as api\n",

+ "from gensim.utils import save_as_line_sentence\n",

+ "from gensim.models.fasttext import FastText\n",

+ "\n",

+ "corpus = api.load(\"text8\")\n",

+ "save_as_line_sentence(corpus, \"my_corpus.txt\")\n",

+ "\n",

+ "model = FastText(corpus_file=\"my_corpus.txt\", iter=5, size=300, workers=14)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Let's compare the timings"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "from gensim.models.word2vec import LineSentence\n",

+ "from gensim.models.fasttext import FastText\n",

+ "import time\n",

+ "\n",

+ "start_time = time.time()\n",

+ "model_corp_file = FastText(corpus_file=CORPUS_FILE, iter=5, size=300, workers=32)\n",

+ "file_time = time.time() - start_time\n",

+ "\n",

+ "start_time = time.time()\n",

+ "model_sent = FastText(sentences=LineSentence(CORPUS_FILE), iter=5, size=300, workers=32)\n",

+ "sent_time = time.time() - start_time"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 10,

+ "metadata": {},

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Training model with `sentences` took 17963.283 seconds\n",

+ "Training model with `corpus_file` took 10725.931 seconds\n"

+ ]

+ }

+ ],

+ "source": [

+ "print(\"Training model with `sentences` took {:.3f} seconds\".format(sent_time))\n",

+ "print(\"Training model with `corpus_file` took {:.3f} seconds\".format(file_time))"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "**We see a 1.67x performance boost!**"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Now, accuracies:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 11,

+ "metadata": {},

+ "outputs": [

+ {

+ "name": "stderr",

+ "output_type": "stream",

+ "text": [

+ "/home/persiyanov/gensim/gensim/matutils.py:737: FutureWarning: Conversion of the second argument of issubdtype from `int` to `np.signedinteger` is deprecated. In future, it will be treated as `np.int64 == np.dtype(int).type`.\n",

+ " if np.issubdtype(vec.dtype, np.int):\n"

+ ]

+ },

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Word analogy accuracy with `sentences`: 64.2%\n",

+ "Word analogy accuracy with `corpus_file`: 66.2%\n"

+ ]

+ }

+ ],

+ "source": [

+ "from gensim.test.utils import datapath\n",

+ "\n",

+ "model_sent_accuracy = model_sent.wv.evaluate_word_analogies(datapath('questions-words.txt'))[0]\n",

+ "print(\"Word analogy accuracy with `sentences`: {:.1f}%\".format(100.0 * model_sent_accuracy))\n",

+ "\n",

+ "model_corp_file_accuracy = model_corp_file.wv.evaluate_word_analogies(datapath('questions-words.txt'))[0]\n",

+ "print(\"Word analogy accuracy with `corpus_file`: {:.1f}%\".format(100.0 * model_corp_file_accuracy))"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Doc2Vec"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "Short example:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 12,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import gensim.downloader as api\n",

+ "from gensim.utils import save_as_line_sentence\n",

+ "from gensim.models.doc2vec import Doc2Vec\n",

+ "\n",

+ "corpus = api.load(\"text8\")\n",

+ "save_as_line_sentence(corpus, \"my_corpus.txt\")\n",

+ "\n",

+ "model = Doc2Vec(corpus_file=\"my_corpus.txt\", epochs=5, vector_size=300, workers=14)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Let's compare the timings"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "from gensim.models.doc2vec import Doc2Vec, TaggedLineDocument\n",

+ "import time\n",

+ "\n",

+ "start_time = time.time()\n",

+ "model_corp_file = Doc2Vec(corpus_file=CORPUS_FILE, epochs=5, vector_size=300, workers=32)\n",

+ "file_time = time.time() - start_time\n",

+ "\n",

+ "start_time = time.time()\n",

+ "model_sent = Doc2Vec(documents=TaggedLineDocument(CORPUS_FILE), epochs=5, vector_size=300, workers=32)\n",

+ "sent_time = time.time() - start_time"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 15,

+ "metadata": {},

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Training model with `sentences` took 20427.949 seconds\n",

+ "Training model with `corpus_file` took 3085.256 seconds\n"

+ ]

+ }

+ ],

+ "source": [

+ "print(\"Training model with `sentences` took {:.3f} seconds\".format(sent_time))\n",

+ "print(\"Training model with `corpus_file` took {:.3f} seconds\".format(file_time))"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "**A 6.6x speedup!**"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Accuracies:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 16,

+ "metadata": {},

+ "outputs": [

+ {

+ "name": "stderr",

+ "output_type": "stream",

+ "text": [

+ "/home/persiyanov/gensim/gensim/matutils.py:737: FutureWarning: Conversion of the second argument of issubdtype from `int` to `np.signedinteger` is deprecated. In future, it will be treated as `np.int64 == np.dtype(int).type`.\n",

+ " if np.issubdtype(vec.dtype, np.int):\n"

+ ]

+ },

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Word analogy accuracy with `sentences`: 71.7%\n",

+ "Word analogy accuracy with `corpus_file`: 67.8%\n"

+ ]

+ }

+ ],

+ "source": [

+ "from gensim.test.utils import datapath\n",

+ "\n",

+ "model_sent_accuracy = model_sent.wv.evaluate_word_analogies(datapath('questions-words.txt'))[0]\n",

+ "print(\"Word analogy accuracy with `sentences`: {:.1f}%\".format(100.0 * model_sent_accuracy))\n",

+ "\n",

+ "model_corp_file_accuracy = model_corp_file.wv.evaluate_word_analogies(datapath('questions-words.txt'))[0]\n",

+ "print(\"Word analogy accuracy with `corpus_file`: {:.1f}%\".format(100.0 * model_corp_file_accuracy))"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## TL;DR: Conclusion\n",

+ "\n",

+ "In case your training corpus already lives on disk, you lose nothing by switching to the new `corpus_file` training mode. Training will be much faster.\n",

+ "\n",

+ "In case your corpus is generated dynamically, you can either serialize it to disk first with `gensim.utils.save_as_line_sentence` (and then use the fast `corpus_file`), or if that's not possible continue using the existing `sentences` training mode.\n",

+ "\n",

+ "------\n",

+ "\n",

+ "This new code branch was created by [@persiyanov](https://github.com/persiyanov) as a Google Summer of Code 2018 project in the [RARE Student Incubator](https://rare-technologies.com/incubator/).\n",

+ "\n",

+ "Questions, comments? Use our Gensim [mailing list](https://groups.google.com/forum/#!forum/gensim) and [twitter](https://twitter.com/gensim_py). Happy training!"

+ ]

+ }

+ ],

+ "metadata": {

+ "kernelspec": {

+ "display_name": "Python 3",

+ "language": "python",

+ "name": "python3"

+ },

+ "language_info": {

+ "codemirror_mode": {

+ "name": "ipython",

+ "version": 3

+ },

+ "file_extension": ".py",

+ "mimetype": "text/x-python",

+ "name": "python",

+ "nbconvert_exporter": "python",

+ "pygments_lexer": "ipython3",

+ "version": "3.6.5"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 2

+}
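Reviewer note on the notebook added above: the `corpus_file` mode reads a corpus stored on disk in the line-sentence layout, that is, one document per line with tokens separated by single spaces, which is what `gensim.utils.save_as_line_sentence` writes. The sketch below reproduces that layout with the standard library only. `write_line_sentences` and the toy corpus are hypothetical names chosen for illustration, and this is not gensim's own implementation.

```python
import os
import tempfile


def write_line_sentences(corpus, path):
    """Write an iterable of token lists as one space-separated line each.

    Hypothetical helper for illustration; not part of gensim.
    """
    with open(path, "w", encoding="utf-8") as fout:
        for tokens in corpus:
            fout.write(" ".join(tokens) + "\n")


# A toy corpus: each inner list is one already-tokenized document.
toy_corpus = [["human", "interface", "computer"], ["graph", "trees"]]

path = os.path.join(tempfile.mkdtemp(), "my_corpus.txt")
write_line_sentences(toy_corpus, path)

# Read the file back to inspect the on-disk layout.
with open(path, encoding="utf-8") as fin:
    lines = fin.read().splitlines()
```

A file in this layout is what the notebook cells above pass as `corpus_file=...`.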

diff --git a/docs/notebooks/FastText_Tutorial.ipynb b/docs/notebooks/FastText_Tutorial.ipynb

index f547009215..bc964b2829 100644

--- a/docs/notebooks/FastText_Tutorial.ipynb

+++ b/docs/notebooks/FastText_Tutorial.ipynb

@@ -134,7 +134,7 @@

"cell_type": "markdown",

"metadata": {},

"source": [

- "Hyperparameters for training the model follow the same pattern as Word2Vec. FastText supports the folllowing parameters from the original word2vec - \n",

+ "Hyperparameters for training the model follow the same pattern as Word2Vec. FastText supports the following parameters from the original word2vec - \n",

" - model: Training architecture. Allowed values: `cbow`, `skipgram` (Default `cbow`)\n",

" - size: Size of embeddings to be learnt (Default 100)\n",

" - alpha: Initial learning rate (Default 0.025)\n",

diff --git a/docs/notebooks/Poincare Evaluation.ipynb b/docs/notebooks/Poincare Evaluation.ipynb

index 0d3f8bb851..d2dd4bfac5 100644

--- a/docs/notebooks/Poincare Evaluation.ipynb

+++ b/docs/notebooks/Poincare Evaluation.ipynb

@@ -1706,7 +1706,7 @@

"cell_type": "markdown",

"metadata": {},

"source": [

- "1. The model can be investigated further to understand why it doesn't produce results as good as the paper. It is possible that this might be due to training details not present in the paper, or due to us incorrectly interpreting some ambiguous parts of the paper. We have not been able to clarify all such ambiguitities in communication with the authors.\n",

+ "1. The model can be investigated further to understand why it doesn't produce results as good as the paper. It is possible that this might be due to training details not present in the paper, or due to us incorrectly interpreting some ambiguous parts of the paper. We have not been able to clarify all such ambiguities in communication with the authors.\n",

"2. Optimizing the training process further - with a model size of 50 dimensions and a dataset with ~700k relations and ~80k nodes, the Gensim implementation takes around 45 seconds to complete an epoch (~15k relations per second), whereas the open source C++ implementation takes around 1/6th the time (~95k relations per second).\n",

"3. Implementing the variant of the model mentioned in the paper for symmetric graphs and evaluating on the scientific collaboration datasets described earlier in the report."

]

diff --git a/docs/notebooks/Tensorboard_visualizations.ipynb b/docs/notebooks/Tensorboard_visualizations.ipynb

index a2d88e9619..f65083b938 100644

--- a/docs/notebooks/Tensorboard_visualizations.ipynb

+++ b/docs/notebooks/Tensorboard_visualizations.ipynb

@@ -844,7 +844,7 @@

"- **T-SNE**: The idea of T-SNE is to place the local neighbours close to each other, and almost completely ignoring the global structure. It is useful for exploring local neighborhoods and finding local clusters. But the global trends are not represented accurately and the separation between different groups is often not preserved (see the t-sne plots of our data below which testify the same).\n",

"\n",

"\n",

- "- **Custom Projections**: This is a custom bethod based on the text searches you define for different directions. It could be useful for finding meaningful directions in the vector space, for example, female to male, currency to country etc.\n",

+ "- **Custom Projections**: This is a custom method based on the text searches you define for different directions. It could be useful for finding meaningful directions in the vector space, for example, female to male, currency to country etc.\n",

"\n",

"You can refer to this [doc](https://www.tensorflow.org/get_started/embedding_viz) for instructions on how to use and navigate through different panels available in TensorBoard."

]

@@ -1112,9 +1112,10 @@

"\n",

"The above plot was generated with perplexity 11, learning rate 10 and iteration 1100. Though the results could vary on successive runs, and you may not get the exact plot as above even with same hyperparameter settings. But some small clusters will start forming as above, with different orientations.\n",

"\n",

- "I named some clusters above based on the genre of it's movies and also using the `show_topic()` to see relevant terms of the topic which was most prevelant in a cluster. Most of the clusters had doocumets belonging dominantly to a single topic. For ex. The cluster with movies belonging primarily to topic 0 could be named Fantasy/Romance based on terms displayed below for topic 0. You can play with the visualization yourself on this [link](http://projector.tensorflow.org/?config=https://raw.githubusercontent.com/parulsethi/LdaProjector/master/doc_lda_config.json) and try to conclude a label for clusters based on movies it has and their dominant topic. You can see the top 5 topics of every point by hovering over it.\n",

+ "I named some clusters above based on the genre of its movies and also using the `show_topic()` to see relevant terms of the topic which was most prevalent in a cluster. Most of the clusters had documents belonging dominantly to a single topic. For example, the cluster with movies belonging primarily to topic 0 could be named Fantasy/Romance based on terms displayed below for topic 0. You can play with the visualization yourself on this [link](http://projector.tensorflow.org/?config=https://raw.githubusercontent.com/parulsethi/LdaProjector/master/doc_lda_config.json) and try to conclude a label for clusters based on movies it has and their

+ dominant topic. You can see the top 5 topics of every point by hovering over it.\n",

"\n",

- "Now, we can notice that their are more than 10 clusters in the above image, whereas we trained our model for `num_topics=10`. It's because their are few clusters, which has documents belonging to more than one topic with an approximately close topic probability values."

+ "Now, we can notice that there are more than 10 clusters in the above image, whereas we trained our model for `num_topics=10`. This is because a few clusters contain documents that belong to more than one topic with similar topic probabilities."

]

},

{

diff --git a/docs/notebooks/Topics_and_Transformations.ipynb b/docs/notebooks/Topics_and_Transformations.ipynb

index 5a8ec7f985..b8b2ff129f 100644

--- a/docs/notebooks/Topics_and_Transformations.ipynb

+++ b/docs/notebooks/Topics_and_Transformations.ipynb

@@ -199,7 +199,7 @@

"In this particular case, we are transforming the same corpus that we used for training, but this is only incidental. Once the transformation model has been initialized, it can be used on any vectors (provided they come from the same vector space, of course), even if they were not used in the training corpus at all. This is achieved by a process called folding-in for LSA, by topic inference for LDA etc.\n",

"\n",

"> Note: \n",

- "> Calling model[corpus] only creates a wrapper around the old corpus document stream – actual conversions are done on-the-fly, during document iteration. We cannot convert the entire corpus at the time of calling corpus_transformed = model[corpus], because that would mean storing the result in main memory, and that contradicts gensim’s objective of memory-indepedence. If you will be iterating over the transformed corpus_transformed multiple times, and the transformation is costly, serialize the resulting corpus to disk first and continue using that.\n",

+ "> Calling model[corpus] only creates a wrapper around the old corpus document stream – actual conversions are done on-the-fly, during document iteration. We cannot convert the entire corpus at the time of calling corpus_transformed = model[corpus], because that would mean storing the result in main memory, and that contradicts gensim’s objective of memory-independence. If you will be iterating over the transformed corpus_transformed multiple times, and the transformation is costly, serialize the resulting corpus to disk first and continue using that.\n",

"\n",

"Transformations can also be serialized, one on top of another, in a sort of chain:"

]

@@ -332,7 +332,7 @@

"metadata": {},

"source": [

"### [Latent Semantic Indexing, LSI (or sometimes LSA)](http://en.wikipedia.org/wiki/Latent_semantic_indexing) \n",

- "LSI transforms documents from either bag-of-words or (preferrably) TfIdf-weighted space into a latent space of a lower dimensionality. For the toy corpus above we used only 2 latent dimensions, but on real corpora, target dimensionality of 200–500 is recommended as a “golden standard” [1]."

+ "LSI transforms documents from either bag-of-words or (preferably) TfIdf-weighted space into a latent space of a lower dimensionality. For the toy corpus above we used only 2 latent dimensions, but on real corpora, target dimensionality of 200–500 is recommended as a “golden standard” [1]."

]

},

{

diff --git a/docs/notebooks/WMD_tutorial.ipynb b/docs/notebooks/WMD_tutorial.ipynb

index 3a529f471e..8f627c37ce 100644

--- a/docs/notebooks/WMD_tutorial.ipynb

+++ b/docs/notebooks/WMD_tutorial.ipynb

@@ -14,7 +14,7 @@

"\n",

"WMD is a method that allows us to assess the \"distance\" between two documents in a meaningful way, even when they have no words in common. It uses [word2vec](http://rare-technologies.com/word2vec-tutorial/) [4] vector embeddings of words. It been shown to outperform many of the state-of-the-art methods in *k*-nearest neighbors classification [3].\n",

"\n",

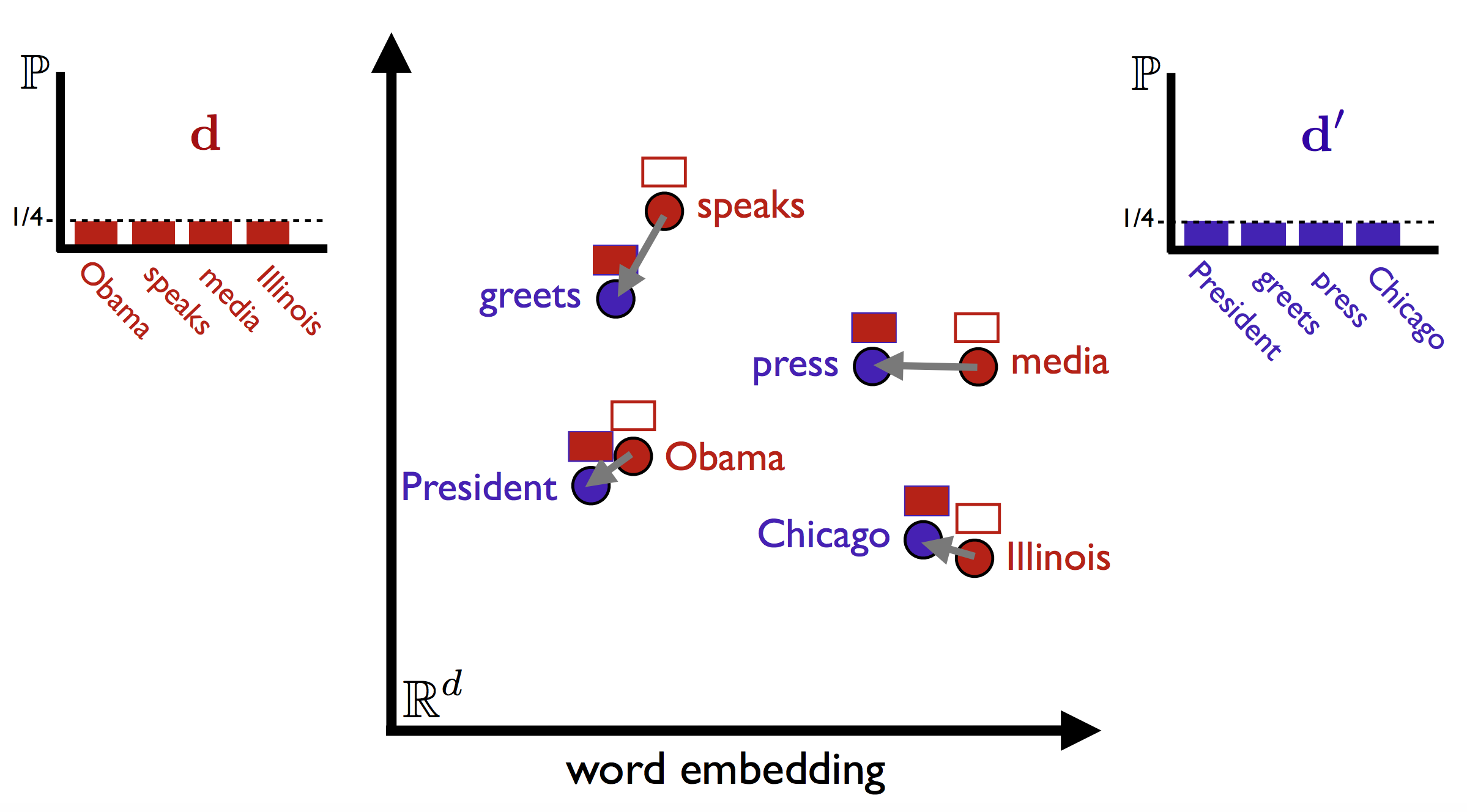

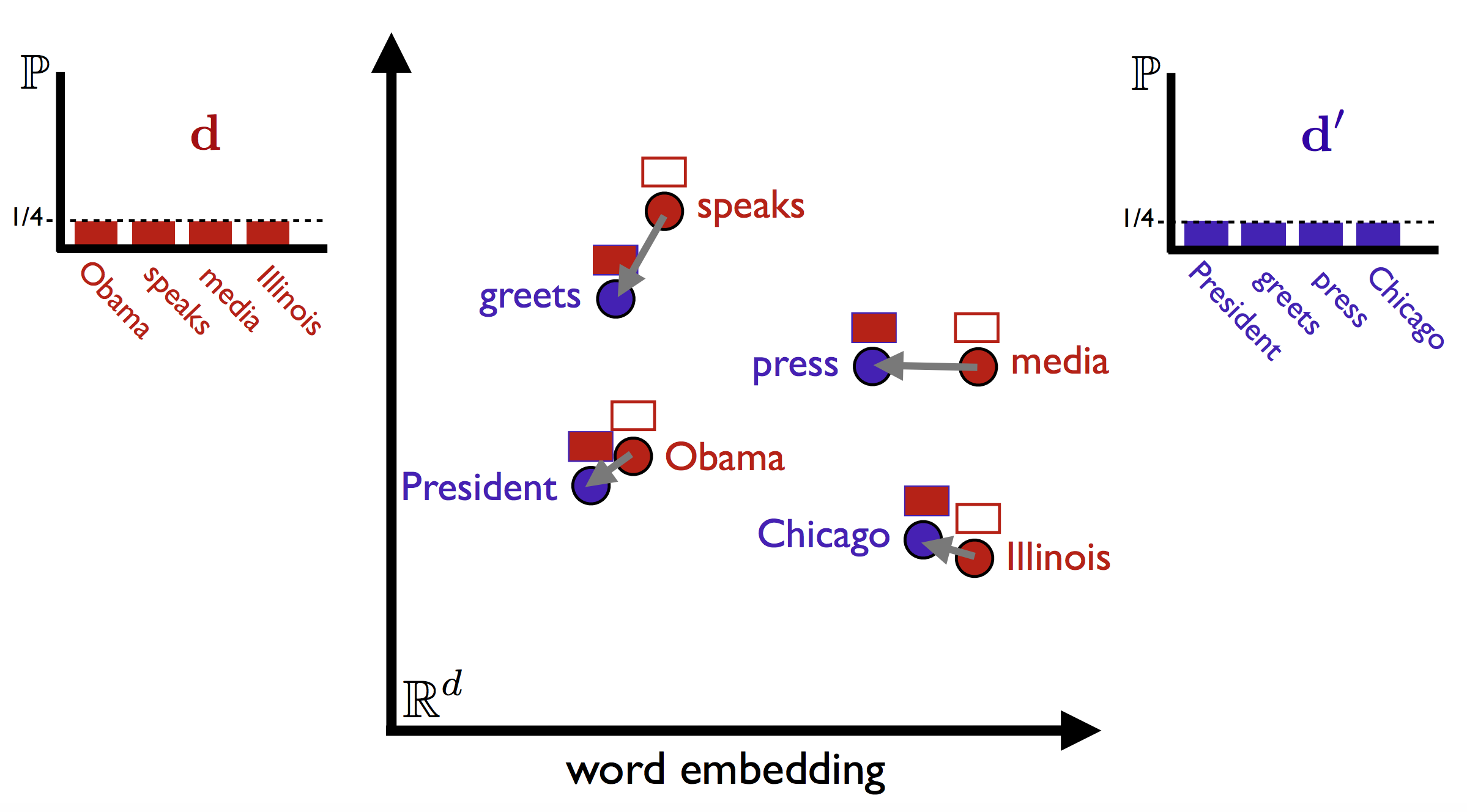

- "WMD is illustrated below for two very similar sentences (illustration taken from [Vlad Niculae's blog](http://vene.ro/blog/word-movers-distance-in-python.html)). The sentences have no words in common, but by matching the relevant words, WMD is able to accurately measure the (dis)similarity between the two sentences. The method also uses the bag-of-words representation of the documents (simply put, the word's frequencies in the documents), noted as $d$ in the figure below. The intution behind the method is that we find the minimum \"traveling distance\" between documents, in other words the most efficient way to \"move\" the distribution of document 1 to the distribution of document 2.\n",

+ "WMD is illustrated below for two very similar sentences (illustration taken from [Vlad Niculae's blog](http://vene.ro/blog/word-movers-distance-in-python.html)). The sentences have no words in common, but by matching the relevant words, WMD is able to accurately measure the (dis)similarity between the two sentences. The method also uses the bag-of-words representation of the documents (simply put, the word's frequencies in the documents), noted as $d$ in the figure below. The intuition behind the method is that we find the minimum \"traveling distance\" between documents, in other words the most efficient way to \"move\" the distribution of document 1 to the distribution of document 2.\n",

"\n",

" \n",

"\n",

@@ -639,4 +639,4 @@

},

"nbformat": 4,

"nbformat_minor": 0

-}

\ No newline at end of file

+}

diff --git a/docs/notebooks/Wordrank_comparisons.ipynb b/docs/notebooks/Wordrank_comparisons.ipynb

index 26bac2e880..a3ab167cc1 100644

--- a/docs/notebooks/Wordrank_comparisons.ipynb

+++ b/docs/notebooks/Wordrank_comparisons.ipynb

@@ -1071,7 +1071,7 @@

" # sort analogies according to their mean frequences \n",

" copy_mean_freq = sorted(copy_mean_freq.items(), key=lambda x: x[1][1])\n",

" # prepare analogies buckets according to given size\n",

- " for centre_p in xrange(bucket_size//2, len(copy_mean_freq), bucket_size):\n",

+ " for centre_p in range(bucket_size//2, len(copy_mean_freq), bucket_size):\n",

" bucket = copy_mean_freq[centre_p-bucket_size//2:centre_p+bucket_size//2]\n",

" b_acc = 0\n",

" # calculate current bucket accuracy with b_acc count\n",

@@ -1174,7 +1174,7 @@

"source": [

"This shows the results for text8(17 million tokens). Following points can be observed in this case-\n",

"\n",

- "1. For Semantic analogies, all the models perform comparitively poor on rare words and also when the word frequency is high towards the end.\n",

+ "1. For Semantic analogies, all the models perform comparatively poor on rare words and also when the word frequency is high towards the end.\n",

"2. For Syntactic Analogies, FastText performance is fairly well on rare words but then falls steeply at highly frequent words.\n",

"3. WordRank and Word2Vec perform very similar with low accuracy for rare and highly frequent words in Syntactic Analogies.\n",

"4. FastText is again better in total analogies case due to the same reason described previously. Here the total no. of Semantic analogies is 7416 and Syntactic Analogies is 10411.\n",

diff --git a/docs/notebooks/translation_matrix.ipynb b/docs/notebooks/translation_matrix.ipynb

index 2a82098752..5439005a8c 100644

--- a/docs/notebooks/translation_matrix.ipynb

+++ b/docs/notebooks/translation_matrix.ipynb

@@ -417,7 +417,7 @@

"duration = []\n",

"sizeofword = []\n",

"\n",

- "for idx in xrange(0, test_case):\n",

+ "for idx in range(0, test_case):\n",

" sub_pair = word_pair[: (idx + 1) * step]\n",

"\n",

" startTime = time.time()\n",

@@ -1450,7 +1450,7 @@

"small_train_docs = train_docs[:15000]\n",

"# train for small corpus\n",

"model1.build_vocab(small_train_docs)\n",

- "for epoch in xrange(50):\n",

+ "for epoch in range(50):\n",

" shuffle(small_train_docs)\n",

" model1.train(small_train_docs, total_examples=len(small_train_docs), epochs=1)\n",

"model.save(\"small_doc_15000_iter50.bin\")\n",

@@ -1458,7 +1458,7 @@

"large_train_docs = train_docs + test_docs\n",

"# train for large corpus\n",

"model2.build_vocab(large_train_docs)\n",

- "for epoch in xrange(50):\n",

+ "for epoch in range(50):\n",

" shuffle(large_train_docs)\n",

" model2.train(large_train_docs, total_examples=len(train_docs), epochs=1)\n",

"# save the model\n",
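Reviewer note on the `xrange` to `range` replacements in the notebook hunks above: on Python 3 `range` is lazy, so the loops keep their meaning and memory profile. The sketch below mirrors the bucket loop from `Wordrank_comparisons.ipynb` under that assumption. `bucket_slices` is a hypothetical helper name and the integer list stands in for the sorted analogy frequencies.

```python
def bucket_slices(items, bucket_size):
    """Split items into buckets centred every bucket_size positions.

    Mirrors the loop `for centre_p in range(bucket_size//2, len(items), bucket_size)`.
    Hypothetical helper for illustration only.
    """
    half = bucket_size // 2
    buckets = []
    for centre in range(half, len(items), bucket_size):
        buckets.append(items[centre - half:centre + half])
    return buckets


# Ten items with buckets of four: centres fall at positions 2 and 6,
# so the two trailing items do not form a bucket of their own.
buckets = bucket_slices(list(range(10)), 4)
```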

diff --git a/docs/notebooks/word2vec_file_scaling.png b/docs/notebooks/word2vec_file_scaling.png

new file mode 100644

index 0000000000..e4f5311736

Binary files /dev/null and b/docs/notebooks/word2vec_file_scaling.png differ

diff --git a/docs/src/_index.rst.unused b/docs/src/_index.rst.unused

new file mode 100644

index 0000000000..71390c1060

--- /dev/null

+++ b/docs/src/_index.rst.unused

@@ -0,0 +1,100 @@

+

+:github_url: https://github.com/RaRe-Technologies/gensim

+

+Gensim documentation

+===================================

+

+============

+Introduction

+============

+

+Gensim is a free Python library designed to automatically extract semantic

+topics from documents, as efficiently (computer-wise) and painlessly (human-wise) as possible.

+

+Gensim is designed to process raw, unstructured digital texts ("plain text").

+

+The algorithms in Gensim, such as **Word2Vec**, **FastText**, **Latent Semantic Analysis**, **Latent Dirichlet Allocation** and **Random Projections**, discover semantic structure of documents by examining statistical co-occurrence patterns within a corpus of training documents. These algorithms are **unsupervised**, which means no human input is necessary -- you only need a corpus of plain text documents.

+

+Once these statistical patterns are found, any plain text documents can be succinctly

+expressed in the new, semantic representation and queried for topical similarity

+against other documents, words or phrases.

+

+.. note::

+ If the previous paragraphs left you confused, you can read more about the `Vector

+ Space Model `_ and `unsupervised

+ document analysis `_ on Wikipedia.

+

+

+.. _design:

+

+Features

+--------

+

+* **Memory independence** -- there is no need for the whole training corpus to

+ reside fully in RAM at any one time (can process large, web-scale corpora).

+* **Memory sharing** -- trained models can be persisted to disk and loaded back via mmap. Multiple processes can share the same data, cutting down RAM footprint.

+* Efficient implementations for several popular vector space algorithms,

+ including Word2Vec, Doc2Vec, FastText, TF-IDF, Latent Semantic Analysis (LSI, LSA),

+ Latent Dirichlet Allocation (LDA) or Random Projection.

+* I/O wrappers and readers from several popular data formats.

+* Fast similarity queries for documents in their semantic representation.

+

+The **principal design objectives** behind Gensim are:

+

+1. Straightforward interfaces and low API learning curve for developers. Good for prototyping.

+2. Memory independence with respect to the size of the input corpus; all intermediate

+ steps and algorithms operate in a streaming fashion, accessing one document

+ at a time.

+

+.. seealso::

+

+ We built a high performance server for NLP, document analysis, indexing, search and clustering: https://scaletext.ai.

+ ScaleText is a commercial product, available both on-prem or as SaaS.

+ Reach out at info@scaletext.com if you need an industry-grade tool with professional support.

+

+.. _availability:

+

+Availability

+------------

+

+Gensim is licensed under the OSI-approved `GNU LGPLv2.1 license `_ and can be downloaded either from its `github repository `_ or from the `Python Package Index `_.

+

+.. seealso::

+

+ See the :doc:`install ` page for more info on Gensim deployment.

+

+

+.. toctree::

+ :glob:

+ :maxdepth: 1

+ :caption: Getting started

+

+ install

+ intro

+ support

+ about

+ license

+ citing

+

+

+.. toctree::

+ :maxdepth: 1

+ :caption: Tutorials

+

+ tutorial

+ tut1

+ tut2

+ tut3

+

+

+.. toctree::

+ :maxdepth: 1

+ :caption: API Reference

+

+ apiref

+

+Indices and tables

+==================

+

+* :ref:`genindex`

+* :ref:`modindex`

diff --git a/docs/src/_license.rst.unused b/docs/src/_license.rst.unused

new file mode 100644

index 0000000000..d85983aa44

--- /dev/null

+++ b/docs/src/_license.rst.unused

@@ -0,0 +1,26 @@

+:orphan:

+

+.. _license:

+

+Licensing

+---------

+

+Gensim is licensed under the OSI-approved `GNU LGPLv2.1 license `_.

+

+This means that it's free for both personal and commercial use, but if you make any

+modification to Gensim that you distribute to other people, you have to disclose

+the source code of these modifications.

+

+Apart from that, you are free to redistribute Gensim in any way you like, though you're

+not allowed to modify its license (doh!).

+

+My intent here is to **get more help and community involvement** with the development of Gensim.

+The legalese is therefore less important to me than your input and contributions.

+

+`Contact me `_ if LGPL doesn't fit your bill but you'd like the LGPL restrictions lifted.

+

+.. seealso::

+

+ We built a high performance server for NLP, document analysis, indexing, search and clustering: https://scaletext.ai.

+ ScaleText is a commercial product, available both on-prem or as SaaS.

+ Reach out at info@scaletext.com if you need an industry-grade tool with professional support.

diff --git a/docs/src/changes_080.rst b/docs/src/changes_080.rst

index b038ccb930..2786d4b71a 100644

--- a/docs/src/changes_080.rst

+++ b/docs/src/changes_080.rst

@@ -23,10 +23,12 @@ That's not as tragic as it sounds, gensim was almost there anyway. The changes a

If you stored a model that is affected by this to disk, you'll need to rename its attributes manually:

->>> lsa = gensim.models.LsiModel.load('/some/path') # load old <0.8.0 model

->>> lsa.num_terms, lsa.num_topics = lsa.numTerms, lsa.numTopics # rename attributes

->>> del lsa.numTerms, lsa.numTopics # clean up old attributes (optional)

->>> lsa.save('/some/path') # save again to disk, as 0.8.0 compatible

+.. sourcecode:: pycon

+

+ >>> lsa = gensim.models.LsiModel.load('/some/path') # load old <0.8.0 model

+ >>> lsa.num_terms, lsa.num_topics = lsa.numTerms, lsa.numTopics # rename attributes

+ >>> del lsa.numTerms, lsa.numTopics # clean up old attributes (optional)

+ >>> lsa.save('/some/path') # save again to disk, as 0.8.0 compatible

Only attributes (variables) need to be renamed; method names (functions) are not affected, due to the way `pickle` works.

@@ -41,9 +43,11 @@ and can be processed independently. In addition, documents can now be added to a

There is also a new way to query the similarity indexes:

->>> index = MatrixSimilarity(corpus) # create an index

->>> sims = index[document] # get cosine similarity of query "document" against every document in the index

->>> sims = index[chunk_of_documents] # new syntax!

+.. sourcecode:: pycon

+

+ >>> index = MatrixSimilarity(corpus) # create an index

+ >>> sims = index[document] # get cosine similarity of query "document" against every document in the index

+ >>> sims = index[chunk_of_documents] # new syntax!

Advantage of the last line (querying multiple documents at the same time) is faster execution.

@@ -69,7 +73,7 @@ Other changes (that you're unlikely to notice unless you look)

----------------------------------------------------------------------

* Improved efficiency of ``lsi[corpus]`` transformations (documents are chunked internally for better performance).

-* Large matrices (numpy/scipy.sparse, in `LsiModel`, `Similarity` etc.) are now mmapped to/from disk when doing `save/load`. The `cPickle` approach used previously was too `buggy `_ and `slow `_.

+* Large matrices (numpy/scipy.sparse, in `LsiModel`, `Similarity` etc.) are now `mmapped `_ to/from disk when doing `save/load`. The `cPickle` approach used previously was too `buggy `_ and `slow `_.

* Renamed `chunks` parameter to `chunksize` (i.e. `LsiModel(corpus, num_topics=100, chunksize=20000)`). This better reflects its purpose: size of a chunk=number of documents to be processed at once.

* Also improved memory efficiency of LSI and LDA model generation (again).

* Removed SciPy 0.6 from the list of supported SciPi versions (need >=0.7 now).
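Reviewer note on the attribute-renaming snippet in `changes_080.rst` above: the manual rename works because pickle restores instance attributes by name, while methods are looked up on the class at load time. A minimal sketch of the same rename as a reusable function follows. `LegacyModel` and `rename_attributes` are hypothetical stand-ins, not gensim classes or functions.

```python
class LegacyModel:
    """Stand-in for a model unpickled from a pre-0.8.0 file (hypothetical)."""

    def __init__(self):
        self.numTerms = 12
        self.numTopics = 2


def rename_attributes(obj, renames):
    """Move each old attribute to its new name, dropping the old one."""
    for old, new in renames.items():
        if old in obj.__dict__:
            obj.__dict__[new] = obj.__dict__.pop(old)
    return obj


model = rename_attributes(
    LegacyModel(),
    {"numTerms": "num_terms", "numTopics": "num_topics"},
)
```

After the rename, the object would be saved again so that later loads see only the new attribute names.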

diff --git a/docs/src/conf.py b/docs/src/conf.py

index d05558c540..da7d0a1994 100644

--- a/docs/src/conf.py

+++ b/docs/src/conf.py

@@ -17,7 +17,7 @@

# If extensions (or modules to document with autodoc) are in another directory,

# add these directories to sys.path here. If the directory is relative to the

# documentation root, use os.path.abspath to make it absolute, like shown here.

-sys.path.append(os.path.abspath('.'))

+sys.path.insert(0, os.path.abspath('../..'))

# -- General configuration -----------------------------------------------------

@@ -55,9 +55,9 @@

# built documents.

#

# The short X.Y version.

-version = '3.5'

+version = '3.6'

# The full version, including alpha/beta/rc tags.

-release = '3.5.0'

+release = '3.6.0'

# The language for content autogenerated by Sphinx. Refer to documentation

# for a list of supported languages.
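Reviewer note on the `sys.path` change in `conf.py` above: `insert(0, ...)` puts the directory at the front of the import search path, whereas `append(...)` puts it at the end, so the two differ whenever the same package exists in more than one place. The sketch below simulates that lookup order with plain lists. The directory names and the `resolve` helper are hypothetical, and the reading that the change is meant to make Sphinx import the checkout rather than an installed copy is an assumption.

```python
def resolve(module, search_path, contents):
    """Return the first directory on search_path that provides module."""
    for directory in search_path:
        if module in contents.get(directory, ()):
            return directory
    return None


# Two hypothetical locations that both provide a `gensim` package.
contents = {
    "/site-packages": {"gensim"},  # an installed release
    "/checkout": {"gensim"},       # the working tree being documented
}

base = ["/site-packages"]
appended = base + ["/checkout"]    # analogous to sys.path.append(...)
prepended = ["/checkout"] + base   # analogous to sys.path.insert(0, ...)

picked_when_appended = resolve("gensim", appended, contents)
picked_when_prepended = resolve("gensim", prepended, contents)
```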

diff --git a/docs/src/dist_lda.rst b/docs/src/dist_lda.rst

index baf2d28aba..a8d0cb9816 100644

--- a/docs/src/dist_lda.rst

+++ b/docs/src/dist_lda.rst

@@ -19,7 +19,9 @@ Running LDA

____________

Run LDA like you normally would, but turn on the `distributed=True` constructor

-parameter::

+parameter

+

+.. sourcecode:: pycon

>>> # extract 100 LDA topics, using default parameters

>>> lda = LdaModel(corpus=mm, id2word=id2word, num_topics=100, distributed=True)

@@ -34,7 +36,9 @@ In distributed mode with four workers (Linux, Xeons of 2Ghz, 4GB RAM

with `ATLAS `_), the wallclock time taken drops to 3h20m.

To run standard batch LDA (no online updates of mini-batches) instead, you would similarly

-call::

+call

+

+.. sourcecode:: pycon

>>> lda = LdaModel(corpus=mm, id2word=id2token, num_topics=100, update_every=0, passes=20, distributed=True)

using distributed version with 4 workers

@@ -50,7 +54,7 @@ and then, some two days later::

iteration 19, dispatching documents up to #3199665/3199665

reached the end of input; now waiting for all remaining jobs to finish

-::

+.. sourcecode:: pycon

>>> lda.print_topics(20)

topic #0: 0.007*disease + 0.006*medical + 0.005*treatment + 0.005*cells + 0.005*cell + 0.005*cancer + 0.005*health + 0.005*blood + 0.004*patients + 0.004*drug

diff --git a/docs/src/dist_lsi.rst b/docs/src/dist_lsi.rst

index 15dfb41f9c..45c79cb222 100644

--- a/docs/src/dist_lsi.rst

+++ b/docs/src/dist_lsi.rst

@@ -58,16 +58,20 @@ ____________

So let's test our setup and run one computation of distributed LSA. Open a Python

shell on one of the five machines (again, this can be done on any computer

in the same `broadcast domain `_,

-our choice is incidental) and try::

+our choice is incidental) and try:

- >>> from gensim import corpora, models, utils

+.. sourcecode:: pycon

+

+ >>> from gensim import corpora, models

>>> import logging

+ >>>

>>> logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)

-

- >>> corpus = corpora.MmCorpus('/tmp/deerwester.mm') # load a corpus of nine documents, from the Tutorials

+ >>>

+ >>> corpus = corpora.MmCorpus('/tmp/deerwester.mm') # load a corpus of nine documents, from the Tutorials

>>> id2word = corpora.Dictionary.load('/tmp/deerwester.dict')

-

- >>> lsi = models.LsiModel(corpus, id2word=id2word, num_topics=200, chunksize=1, distributed=True) # run distributed LSA on nine documents

+ >>>

+ >>> # run distributed LSA on nine documents

+ >>> lsi = models.LsiModel(corpus, id2word=id2word, num_topics=200, chunksize=1, distributed=True)

This uses the corpus and feature-token mapping created in the :doc:`tut1` tutorial.

If you look at the log in your Python session, you should see a line similar to::

@@ -76,7 +80,9 @@ If you look at the log in your Python session, you should see a line similar to:

which means all went well. You can also check the logs coming from your worker and dispatcher

processes --- this is especially helpful in case of problems.

-To check the LSA results, let's print the first two latent topics::

+To check the LSA results, let's print the first two latent topics:

+

+.. sourcecode:: pycon

>>> lsi.print_topics(num_topics=2, num_words=5)

topic #0(3.341): 0.644*"system" + 0.404*"user" + 0.301*"eps" + 0.265*"time" + 0.265*"response"

@@ -86,13 +92,15 @@ Success! But a corpus of nine documents is no challenge for our powerful cluster

In fact, we had to lower the job size (`chunksize` parameter above) to a single document

at a time, otherwise all documents would be processed by a single worker all at once.

-So let's run LSA on **one million documents** instead::

+So let's run LSA on **one million documents** instead

+

+.. sourcecode:: pycon

>>> # inflate the corpus to 1M documents, by repeating its documents over&over

>>> corpus1m = utils.RepeatCorpus(corpus, 1000000)

>>> # run distributed LSA on 1 million documents

>>> lsi1m = models.LsiModel(corpus1m, id2word=id2word, num_topics=200, chunksize=10000, distributed=True)

-

+ >>>

>>> lsi1m.print_topics(num_topics=2, num_words=5)

topic #0(1113.628): 0.644*"system" + 0.404*"user" + 0.301*"eps" + 0.265*"time" + 0.265*"response"

topic #1(847.233): 0.623*"graph" + 0.490*"trees" + 0.451*"minors" + 0.274*"survey" + -0.167*"system"

@@ -118,25 +126,31 @@ Distributed LSA on Wikipedia

++++++++++++++++++++++++++++++

First, download and prepare the Wikipedia corpus as per :doc:`wiki`, then load

-the corpus iterator with::

+the corpus iterator with

- >>> import logging, gensim

- >>> logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)

+.. sourcecode:: pycon

+ >>> import logging

+ >>> import gensim

+ >>>

+ >>> logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)

+ >>>

>>> # load id->word mapping (the dictionary)

>>> id2word = gensim.corpora.Dictionary.load_from_text('wiki_en_wordids.txt')

>>> # load corpus iterator

>>> mm = gensim.corpora.MmCorpus('wiki_en_tfidf.mm')

>>> # mm = gensim.corpora.MmCorpus('wiki_en_tfidf.mm.bz2') # use this if you compressed the TFIDF output

-

+ >>>

>>> print(mm)

MmCorpus(3199665 documents, 100000 features, 495547400 non-zero entries)

-Now we're ready to run distributed LSA on the English Wikipedia::

+Now we're ready to run distributed LSA on the English Wikipedia:

+

+.. sourcecode:: pycon

>>> # extract 400 LSI topics, using a cluster of nodes

>>> lsi = gensim.models.lsimodel.LsiModel(corpus=mm, id2word=id2word, num_topics=400, chunksize=20000, distributed=True)

-

+ >>>

>>> # print the most contributing words (both positively and negatively) for each of the first ten topics

>>> lsi.print_topics(10)

2010-11-03 16:08:27,602 : INFO : topic #0(200.990): -0.475*"delete" + -0.383*"deletion" + -0.275*"debate" + -0.223*"comments" + -0.220*"edits" + -0.213*"modify" + -0.208*"appropriate" + -0.194*"subsequent" + -0.155*"wp" + -0.117*"notability"
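Reviewer note on the one-million-document example in `dist_lsi.rst` above: the nine-document corpus is inflated by cycling over it, and `chunksize` controls how many documents go into each job sent to a worker. The sketch below shows both ideas with the standard library. `RepeatedDocs` is a hypothetical stand-in written for illustration, not the implementation of `utils.RepeatCorpus`, and the toy documents are made up.

```python
import itertools
import math


class RepeatedDocs:
    """Yield documents from a small corpus over and over, `total` times in all."""

    def __init__(self, docs, total):
        self.docs = docs
        self.total = total

    def __iter__(self):
        # A fresh cycle per iteration, so the corpus can be streamed repeatedly.
        return itertools.islice(itertools.cycle(self.docs), self.total)

    def __len__(self):
        return self.total


# Nine toy documents in bag-of-words form: lists of (token_id, weight) pairs.
nine_docs = [[(i, 1.0)] for i in range(9)]
corpus1m = RepeatedDocs(nine_docs, 1000000)

# With chunksize=10000, one million documents split into this many jobs.
num_jobs = math.ceil(len(corpus1m) / 10000)

# The tenth document wraps around to the first one.
first_ten = list(itertools.islice(iter(corpus1m), 10))
```

This also illustrates why the nine-document run needed `chunksize=1`: with a larger chunk, all nine documents would fit into a single job.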

diff --git a/docs/src/intro.rst b/docs/src/intro.rst

index bcb60efa27..b686a23a49 100644

--- a/docs/src/intro.rst

+++ b/docs/src/intro.rst

@@ -30,7 +30,7 @@ Features

* **Memory independence** -- there is no need for the whole training corpus to

reside fully in RAM at any one time (can process large, web-scale corpora).

-* **Memory sharing** -- trained models can be persisted to disk and loaded back via mmap. Multiple processes can share the same data, cutting down RAM footprint.

+* **Memory sharing** -- trained models can be persisted to disk and loaded back via `mmap `_. Multiple processes can share the same data, cutting down RAM footprint.

* Efficient implementations for several popular vector space algorithms,

including :class:`~gensim.models.word2vec.Word2Vec`, :class:`~gensim.models.doc2vec.Doc2Vec`, :class:`~gensim.models.fasttext.FastText`,

TF-IDF, Latent Semantic Analysis (LSI, LSA, see :class:`~gensim.models.lsimodel.LsiModel`),

diff --git a/docs/src/simserver.rst b/docs/src/simserver.rst

index 1b0d2b4396..49b26ab5d4 100644

--- a/docs/src/simserver.rst

+++ b/docs/src/simserver.rst

@@ -20,20 +20,20 @@ Conceptually, a service that lets you :

2. index arbitrary documents using this semantic model

3. query the index for similar documents (the query can be either an id of a document already in the index, or an arbitrary text)

-

->>> from simserver import SessionServer

->>> server = SessionServer('/tmp/my_server') # resume server (or create a new one)

-

->>> server.train(training_corpus, method='lsi') # create a semantic model

->>> server.index(some_documents) # convert plain text to semantic representation and index it

->>> server.find_similar(query) # convert query to semantic representation and compare against index

->>> ...

->>> server.index(more_documents) # add to index: incremental indexing works

->>> server.find_similar(query)

->>> ...

->>> server.delete(ids_to_delete) # incremental deleting also works

->>> server.find_similar(query)

->>> ...

+ .. sourcecode:: pycon

+

+ >>> from simserver import SessionServer

+ >>> server = SessionServer('/tmp/my_server') # resume server (or create a new one)

+ >>>

+ >>> server.train(training_corpus, method='lsi') # create a semantic model

+ >>> server.index(some_documents) # convert plain text to semantic representation and index it

+ >>> server.find_similar(query) # convert query to semantic representation and compare against index

+ >>>

+ >>> server.index(more_documents) # add to index: incremental indexing works

+ >>> server.find_similar(query)

+ >>>

+ >>> server.delete(ids_to_delete) # incremental deleting also works

+ >>> server.find_similar(query)

.. note::

"Semantic" here refers to semantics of the crude, statistical type --

@@ -89,19 +89,23 @@ version 4.8 as of this writing)::

$ sudo easy_install Pyro4

.. note::

- Don't forget to initialize logging to see logging messages::

+ Don't forget to initialize logging to see logging messages:

+

+ .. sourcecode:: pycon

- >>> import logging

- >>> logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)

+ >>> import logging

+ >>> logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)

What is a document?

-------------------

-In case of text documents, the service expects::

+In case of text documents, the service expects:

->>> document = {'id': 'some_unique_string',

->>> 'tokens': ['content', 'of', 'the', 'document', '...'],

->>> 'other_fields_are_allowed_but_ignored': None}

+.. sourcecode:: pycon

+

+ >>> document = {'id': 'some_unique_string',

+ >>> 'tokens': ['content', 'of', 'the', 'document', '...'],

+ >>> 'other_fields_are_allowed_but_ignored': None}

This format was chosen because it coincides with plain JSON and is therefore easy to serialize and send over the wire, in almost any language.

All strings involved must be utf8-encoded.
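
As a minimal sketch of the serialization point above, assuming only Python's standard `json` module (this is an illustration, not part of simserver itself), a document in this format round-trips through JSON and UTF-8 unchanged. The field values are placeholders.

```python
import json

# A document in the format described above; the values are illustrative only.
document = {
    'id': 'some_unique_string',
    'tokens': ['content', 'of', 'the', 'document'],
    'other_fields_are_allowed_but_ignored': None,
}

# Serialize to JSON text, then to UTF-8 bytes, as one might before sending it over the wire.
payload = json.dumps(document).encode('utf8')

# Decoding on the receiving side recovers an equal dictionary.
restored = json.loads(payload.decode('utf8'))
```

Because the format uses only strings, lists and `None`, no custom encoder is needed.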

@@ -113,23 +117,29 @@ What is a corpus?

A sequence of documents. Anything that supports the `for document in corpus: ...`

iterator protocol. Generators are ok. Plain lists are also ok (but consume more memory).

->>> from gensim import utils

->>> texts = ["Human machine interface for lab abc computer applications",

->>> "A survey of user opinion of computer system response time",

->>> "The EPS user interface management system",

->>> "System and human system engineering testing of EPS",

->>> "Relation of user perceived response time to error measurement",

->>> "The generation of random binary unordered trees",

->>> "The intersection graph of paths in trees",

->>> "Graph minors IV Widths of trees and well quasi ordering",

->>> "Graph minors A survey"]

->>> corpus = [{'id': 'doc_%i' % num, 'tokens': utils.simple_preprocess(text)}

->>> for num, text in enumerate(texts)]

+.. sourcecode:: pycon

+

+ >>> from gensim import utils

+ >>>

+ >>> texts = ["Human machine interface for lab abc computer applications",

+ >>> "A survey of user opinion of computer system response time",

+ >>> "The EPS user interface management system",

+ >>> "System and human system engineering testing of EPS",

+ >>> "Relation of user perceived response time to error measurement",

+ >>> "The generation of random binary unordered trees",

+ >>> "The intersection graph of paths in trees",

+ >>> "Graph minors IV Widths of trees and well quasi ordering",

+ >>> "Graph minors A survey"]

+ >>>

+ >>> corpus = [{'id': 'doc_%i' % num, 'tokens': utils.simple_preprocess(text)}

+ >>> for num, text in enumerate(texts)]
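
The list above holds every document in memory at once. A generator-based corpus, which the text says is also acceptable, might look like the sketch below. The name `iter_corpus` and the regex tokenizer are hypothetical stand-ins chosen to keep the example self-contained; the original uses `utils.simple_preprocess`.

```python
import re


def iter_corpus(texts):
    """Yield one document dict at a time instead of building a full list."""
    for num, text in enumerate(texts):
        # Hypothetical stand-in tokenizer: lowercase runs of ASCII letters.
        tokens = re.findall(r'[a-z]+', text.lower())
        yield {'id': 'doc_%i' % num, 'tokens': tokens}


texts = ["Graph minors A survey", "The EPS user interface management system"]
docs = list(iter_corpus(texts))  # materialized here only to inspect the result
```

A generator is exhausted after a single pass, so a corpus that must be iterated more than once is better wrapped in a class that defines `__iter__`.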

Since corpora are allowed to be arbitrarily large, it is

recommended that the client split them into smaller chunks before uploading them to the server:

->>> utils.upload_chunked(server, corpus, chunksize=1000) # send 1k docs at a time

+.. sourcecode:: pycon

+

+ >>> utils.upload_chunked(server, corpus, chunksize=1000) # send 1k docs at a time
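
To illustrate what splitting a corpus into chunks means, here is a minimal sketch using `itertools.islice`. It is not gensim's implementation of `upload_chunked`; the helper name `chunked` is an assumption made for this example, and it works on any iterable, including generators.

```python
from itertools import islice


def chunked(iterable, chunksize):
    """Yield successive lists of at most `chunksize` items from `iterable`."""
    it = iter(iterable)
    while True:
        chunk = list(islice(it, chunksize))
        if not chunk:
            # The underlying iterator is exhausted.
            return
        yield chunk


# Seven items in chunks of three: the last chunk is shorter.
chunks = list(chunked(range(7), 3))
```

Only one chunk is held in memory at a time, which is the point of chunked uploads for large corpora.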

Wait, upload what, where?

-------------------------

@@ -141,11 +151,13 @@ option, not a necessity.

Document similarity can also act as a long-running service, a daemon process on a separate machine. In that

case, I'll call the service object a *server*.

-But let's start with a local object. Open your `favourite shell `_ and::

+But let's start with a local object. Open your `favourite shell `_ and:

->>> from gensim import utils

->>> from simserver import SessionServer

->>> service = SessionServer('/tmp/my_server/') # or wherever

+.. sourcecode:: pycon

+

+ >>> from simserver import SessionServer

+ >>>

+ >>> service = SessionServer('/tmp/my_server/') # or wherever

That initialized a new service, located in `/tmp/my_server` (you need write access rights to that directory).

@@ -162,14 +174,18 @@ Model training

We can start indexing right away:

->>> service.index(corpus)

-AttributeError: must initialize model for /tmp/my_server/b before indexing documents

+.. sourcecode:: pycon

+

+ >>> service.index(corpus)

+ AttributeError: must initialize model for /tmp/my_server/b before indexing documents

Oops, we cannot. The service indexes documents in a semantic representation, which

is different to the plain text we give it. We must teach the service how to convert

-between plain text and semantics first::

+between plain text and semantics first:

->>> service.train(corpus, method='lsi')

+.. sourcecode:: pycon

+

+ >>> service.train(corpus, method='lsi')

That was easy. The `method='lsi'` parameter meant that we trained a model for

`Latent Semantic Indexing `_

@@ -188,19 +204,25 @@ on a corpus that is:

Indexing documents

------------------

->>> service.index(corpus) # index the same documents that we trained on...

+.. sourcecode:: pycon

+

+ >>> service.index(corpus) # index the same documents that we trained on...

Indexing can happen over any documents, but I'm too lazy to create another example corpus, so we index the same 9 docs used for training.

-Delete documents with::

+Delete documents with:

- >>> service.delete(['doc_5', 'doc_8']) # supply a list of document ids to be removed from the index

+.. sourcecode:: pycon

+

+ >>> service.delete(['doc_5', 'doc_8']) # supply a list of document ids to be removed from the index

When you pass documents that have the same id as some already indexed document,

the indexed document is overwritten by the new input (=only the latest counts;

-document ids are always unique per service)::

+document ids are always unique per service):

+

+.. sourcecode:: pycon

- >>> service.index(corpus[:3]) # overall index size unchanged (just 3 docs overwritten)

+ >>> service.index(corpus[:3]) # overall index size unchanged (just 3 docs overwritten)

The index/delete/overwrite calls can be arbitrarily interspersed with queries.

You don't have to index **all** documents first to start querying, indexing can be incremental.

@@ -212,26 +234,26 @@ There are two types of queries:

1. by id:

- .. code-block:: python

-

- >>> print(service.find_similar('doc_0'))

- [('doc_0', 1.0, None), ('doc_2', 0.30426699, None), ('doc_1', 0.25648531, None), ('doc_3', 0.25480536, None)]

+ .. sourcecode:: pycon

- >>> print(service.find_similar('doc_5')) # we deleted doc_5 and doc_8, remember?

- ValueError: document 'doc_5' not in index

+ >>> print(service.find_similar('doc_0'))

+ [('doc_0', 1.0, None), ('doc_2', 0.30426699, None), ('doc_1', 0.25648531, None), ('doc_3', 0.25480536, None)]

+ >>>

+ >>> print(service.find_similar('doc_5')) # we deleted doc_5 and doc_8, remember?

+ ValueError: document 'doc_5' not in index