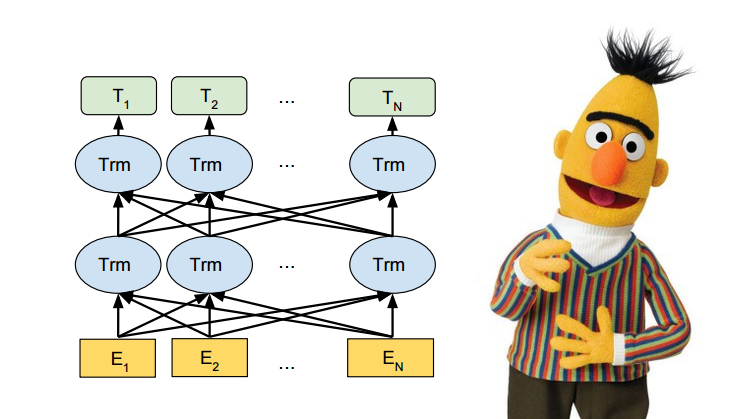

Fill-in-the-BERT is a fill-in-the-blanks model trained to predict the missing word in a sentence. This demo uses the pre-trained bert-base-uncased model for prediction. If you are new to BERT, please read BERT-Explained and Visual Guide to BERT.

$> python3 app.py

Open index.html in the browser and start typing 💬

- Model - bert-base-uncased

- Pre-trained Task - MaskedLM
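For readers who want to see what masked-word prediction with this model looks like in code, here is a minimal sketch. It assumes the Hugging Face `transformers` package and PyTorch are installed; the function names `top_k_tokens` and `predict_masked` are hypothetical and are not taken from this repo's `app.py`, which may do things differently.

```python
import math


def top_k_tokens(logits, vocab, k=5):
    """Return the k most probable (token, probability) pairs from raw logits."""
    # Softmax with max-subtraction for numerical stability.
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    ranked = sorted(zip(vocab, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def predict_masked(text, k=5):
    """Predict the first [MASK] token in `text` with bert-base-uncased.

    Hypothetical helper: downloads the pre-trained weights on first use.
    """
    # Imported lazily so top_k_tokens works without these packages.
    import torch
    from transformers import BertForMaskedLM, BertTokenizer

    tokenizer = BertTokenizer.from_pretrained("bert-base-uncased")
    model = BertForMaskedLM.from_pretrained("bert-base-uncased")
    model.eval()

    inputs = tokenizer(text, return_tensors="pt")
    # Position of the first [MASK] token in the encoded sequence.
    is_mask = inputs["input_ids"][0] == tokenizer.mask_token_id
    mask_pos = is_mask.nonzero()[0].item()

    with torch.no_grad():
        # Logits over the vocabulary at the masked position.
        logits = model(**inputs).logits[0, mask_pos]

    vocab = tokenizer.convert_ids_to_tokens(list(range(logits.shape[0])))
    return top_k_tokens(logits.tolist(), vocab, k)
```

Calling `predict_masked("The capital of France is [MASK].")` would return the five highest-scoring candidate tokens with their probabilities. The ranking step is kept in plain Python so it can be checked without loading the model.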

P.S. The attention visualisation shows layer 3, with the attention weights averaged across all attention heads. Read more about heads and what they mean at Visualizing inner workings of attention.
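The head-averaging step can be illustrated with a small sketch. It uses plain nested lists so it runs without any dependencies; `average_heads` is a hypothetical name, and the toy weights are made up for illustration. With Hugging Face `transformers`, the per-layer attention tensors (available when the model is called with `output_attentions=True`) have shape `(batch, heads, seq_len, seq_len)`, so the same reduction on one sentence would be a `.mean(dim=0)` over the heads axis. Whether "layer 3" means index 2 or index 3 in that tuple is not stated here, so treat the layer choice as an assumption.

```python
def average_heads(layer_attention):
    """Average per-head attention matrices into one seq_len x seq_len matrix.

    layer_attention: list of heads, each a seq_len x seq_len list of floats.
    """
    num_heads = len(layer_attention)
    seq_len = len(layer_attention[0])
    return [
        [
            sum(head[i][j] for head in layer_attention) / num_heads
            for j in range(seq_len)
        ]
        for i in range(seq_len)
    ]


# Toy example (made-up weights): 2 heads over a 2-token sequence.
heads = [
    [[1.0, 0.0], [0.5, 0.5]],
    [[0.0, 1.0], [0.5, 0.5]],
]
averaged = average_heads(heads)
```

Each row of `averaged` still sums to 1, since every head's row is a probability distribution and the mean of distributions is a distribution.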

- PyTorch

- HTML/Bootstrap

- Flask