diff --git a/.github/workflows/ci-tests.yml b/.github/workflows/ci-tests.yml

index cee98dc33d..81f5132ccf 100644

--- a/.github/workflows/ci-tests.yml

+++ b/.github/workflows/ci-tests.yml

@@ -36,8 +36,12 @@ jobs:

matrix:

os: ["ubuntu-latest"]

python-version: ["3.10"]

- session: ["tests", "doctest", "gallery", "linkcheck"]

+ session: ["doctest", "gallery", "linkcheck"]

include:

+ - os: "ubuntu-latest"

+ python-version: "3.10"

+ session: "tests"

+ coverage: "--coverage"

- os: "ubuntu-latest"

python-version: "3.9"

session: "tests"

@@ -133,4 +137,8 @@ jobs:

env:

PY_VER: ${{ matrix.python-version }}

run: |

- nox --session ${{ matrix.session }} -- --verbose

+ nox --session ${{ matrix.session }} -- --verbose ${{ matrix.coverage }}

+

+ - name: Upload coverage report

+ uses: codecov/codecov-action@v3

+ if: ${{ matrix.coverage }}
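The workflow now passes `${{ matrix.coverage }}` through to nox as an extra positional argument. It expands to `--coverage` only for the one matrix entry that defines it, and to an empty string (no argument) for every other entry. How the `tests` session consumes that flag lives in the noxfile, which this diff does not show. The following is a hypothetical sketch of the argument handling only. It assumes the session translates `--coverage` into pytest-cov's `--cov`/`--cov-report` options; the helper name `build_pytest_args` and the `lib/iris` target are illustrative assumptions.

```python
from typing import List, Sequence


def build_pytest_args(posargs: Sequence[str]) -> List[str]:
    """Translate nox positional arguments into a pytest command line.

    Hypothetical helper: ``--coverage`` is consumed here and replaced by
    pytest-cov options, while every other argument is forwarded unchanged.
    """
    args = ["pytest"]
    forwarded = [arg for arg in posargs if arg != "--coverage"]
    if "--coverage" in posargs:
        # Assumed coverage target; an XML report is what upload tools
        # such as the Codecov action typically look for.
        args += ["--cov=lib/iris", "--cov-report=xml"]
    return args + forwarded


# Matrix entries without ``coverage`` only forward ``--verbose``.
print(build_pytest_args(["--verbose"]))
# The single coverage entry gets the extra pytest-cov options.
print(build_pytest_args(["--verbose", "--coverage"]))
```

Keeping the flag in the matrix, rather than adding a separate job, means only one Python version pays the coverage overhead. It also lets the upload step reuse the same `if: ${{ matrix.coverage }}` condition.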

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

index ad5a9d4626..7c95eeaca3 100644

--- a/.pre-commit-config.yaml

+++ b/.pre-commit-config.yaml

@@ -29,7 +29,7 @@ repos:

- id: no-commit-to-branch

- repo: https://github.com/psf/black

- rev: 22.12.0

+ rev: 23.1.0

hooks:

- id: black

pass_filenames: false

@@ -43,18 +43,17 @@ repos:

args: [--config=./setup.cfg]

- repo: https://github.com/pycqa/isort

- rev: 5.11.4

+ rev: 5.12.0

hooks:

- id: isort

types: [file, python]

args: [--filter-files]

- repo: https://github.com/asottile/blacken-docs

- rev: v1.12.1

+ rev: 1.13.0

hooks:

- id: blacken-docs

types: [file, rst]

- additional_dependencies: [black==21.6b0]

- repo: https://github.com/aio-libs/sort-all

rev: v1.2.0

diff --git a/README.md b/README.md

index ac2781f469..67c4399116 100644

--- a/README.md

+++ b/README.md

@@ -19,6 +19,9 @@

+

+

+

+

@@ -54,3 +57,24 @@ For documentation see the

developer version or the most recent released

stable version.

+

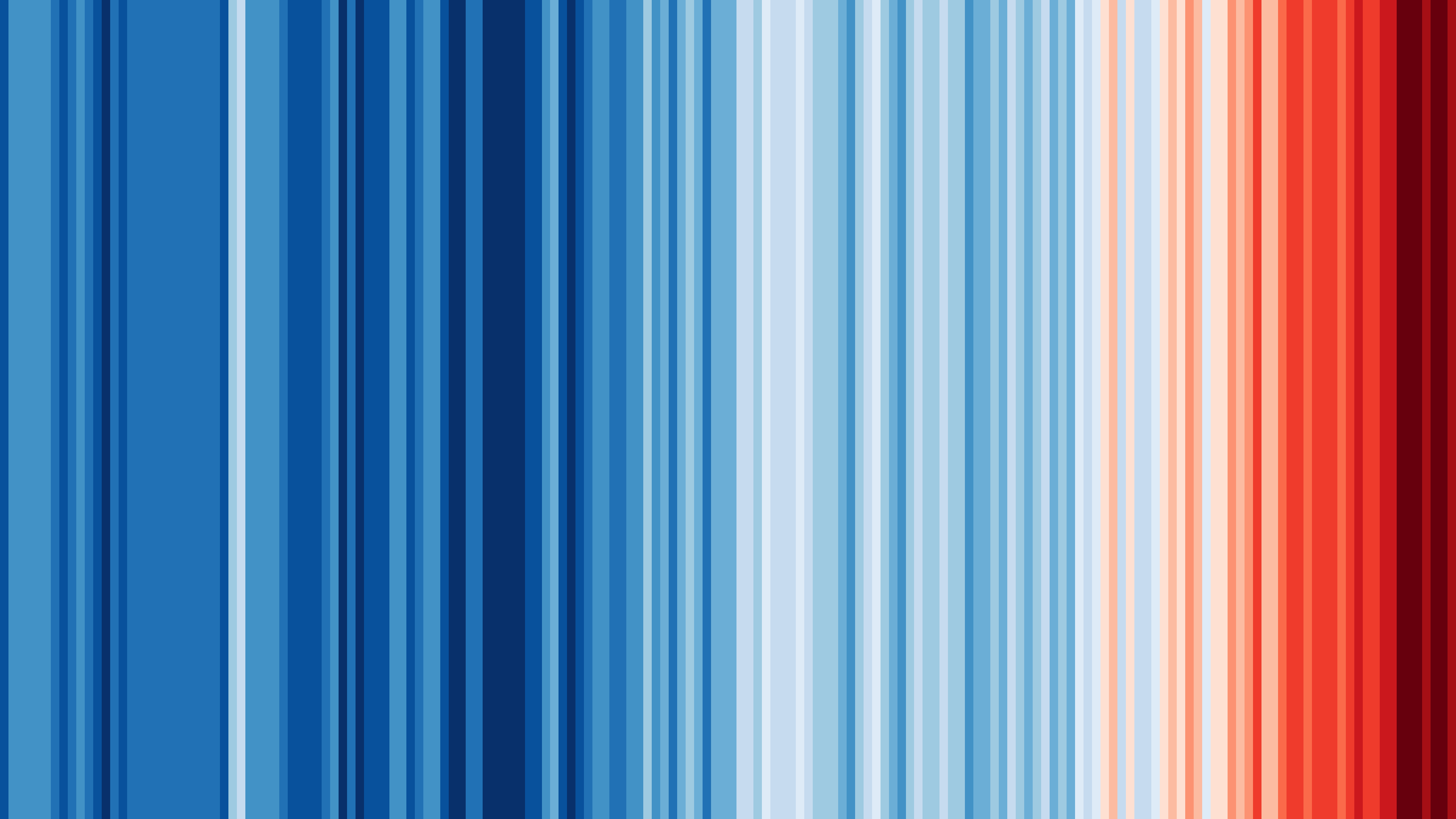

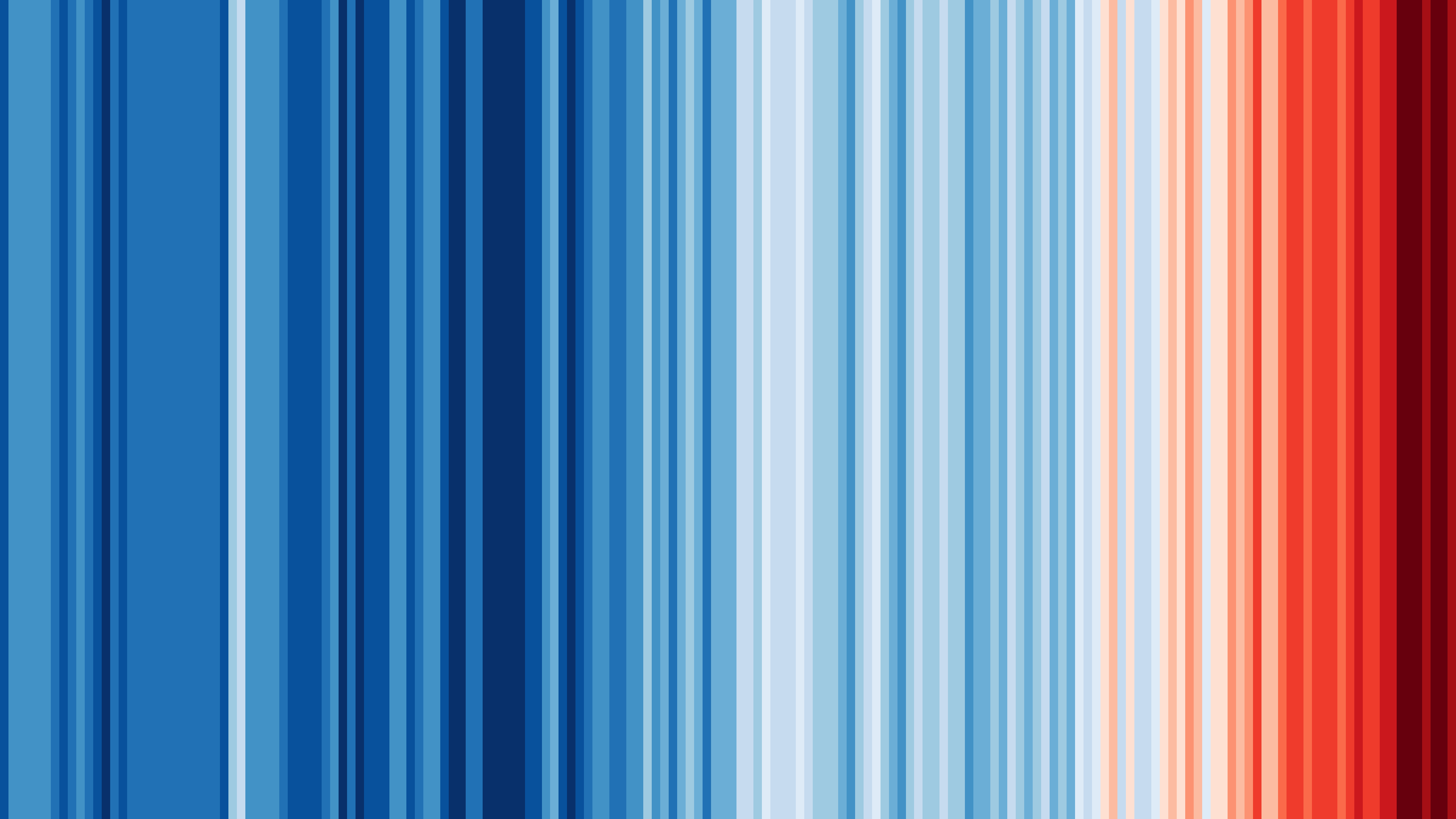

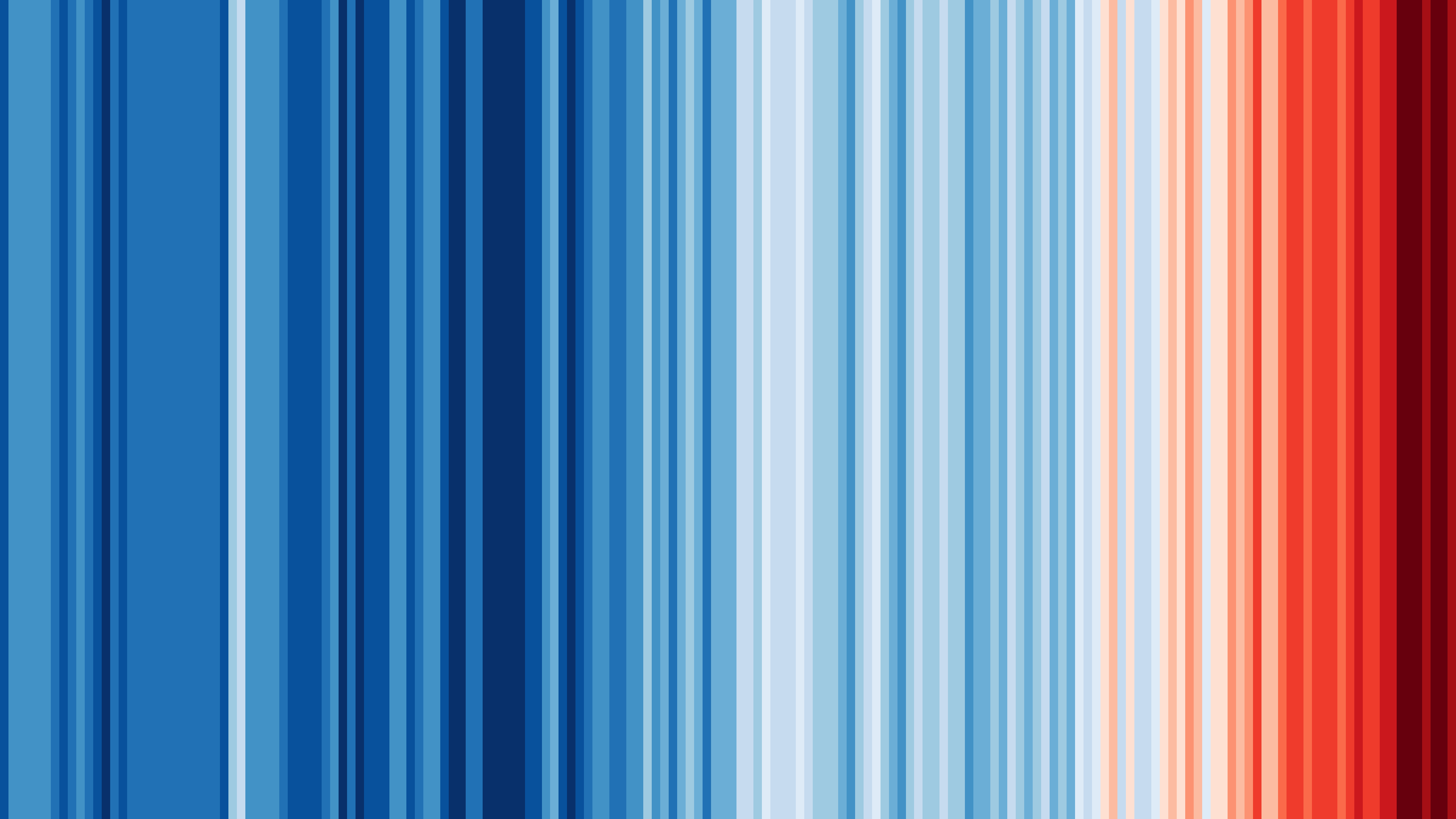

+## [#ShowYourStripes](https://showyourstripes.info/s/globe)

+

+

+

+  +

+

+

+**Graphics and Lead Scientist**: [Ed Hawkins](http://www.met.reading.ac.uk/~ed/home/index.php), National Centre for Atmospheric Science, University of Reading.

+

+**Data**: Berkeley Earth, NOAA, UK Met Office, MeteoSwiss, DWD, SMHI, UoR, Meteo France & ZAMG.

+

+

+#ShowYourStripes is distributed under a

+Creative Commons Attribution 4.0 International License

+

+  +

+

+

diff --git a/benchmarks/benchmarks/experimental/ugrid/__init__.py b/benchmarks/benchmarks/experimental/ugrid/__init__.py

index 2f9bb04e35..2e40c525a6 100644

--- a/benchmarks/benchmarks/experimental/ugrid/__init__.py

+++ b/benchmarks/benchmarks/experimental/ugrid/__init__.py

@@ -50,7 +50,7 @@ def time_create(self, *params):

class Connectivity(UGridCommon):

def setup(self, n_faces):

- self.array = np.zeros([n_faces, 3], dtype=np.int)

+ self.array = np.zeros([n_faces, 3], dtype=int)

super().setup(n_faces)

def create(self):
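NumPy deprecated the `np.int` alias (a plain synonym for the built-in `int`) in 1.20 and removed it in 1.24. Code that still uses it now fails with `AttributeError`, which is why the benchmark switches to `dtype=int`. A minimal check of the replacement, using plain NumPy with an illustrative `n_faces` value:

```python
import numpy as np

n_faces = 4
# Built-in ``int`` maps to NumPy's default integer type for the platform,
# which is exactly what the removed ``np.int`` alias used to mean.
array = np.zeros([n_faces, 3], dtype=int)
print(array.shape, array.dtype)

# A fixed-width dtype is an alternative when platform independence matters.
array32 = np.zeros([n_faces, 3], dtype=np.int32)
print(array32.dtype)  # int32
```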

diff --git a/docs/Makefile b/docs/Makefile

index 47f3e740fa..fcb0ec0116 100644

--- a/docs/Makefile

+++ b/docs/Makefile

@@ -20,11 +20,6 @@ html-quick:

echo "make html-quick in $$i..."; \

(cd $$i; $(MAKE) $(MFLAGS) $(MYMAKEFLAGS) html-quick); done

-spelling:

- @for i in $(SUBDIRS); do \

- echo "make spelling in $$i..."; \

- (cd $$i; $(MAKE) $(MFLAGS) $(MYMAKEFLAGS) spelling); done

-

all:

@for i in $(SUBDIRS); do \

echo "make all in $$i..."; \

diff --git a/docs/gallery_code/general/plot_custom_aggregation.py b/docs/gallery_code/general/plot_custom_aggregation.py

index 5fba3669b6..6ef6075fb3 100644

--- a/docs/gallery_code/general/plot_custom_aggregation.py

+++ b/docs/gallery_code/general/plot_custom_aggregation.py

@@ -72,7 +72,7 @@ def main():

# Make an aggregator from the user function.

SPELL_COUNT = Aggregator(

- "spell_count", count_spells, units_func=lambda units: 1

+ "spell_count", count_spells, units_func=lambda units, **kwargs: 1

)

# Define the parameters of the test.
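Adding `**kwargs` to the lambda makes it tolerant of extra keyword arguments. The following is a minimal pure-Python sketch of why that matters. It assumes, as this change suggests, that the aggregator may now call `units_func(units, **kwargs)`. `call_units_func` is a hypothetical stand-in for that caller, not the Iris implementation, and `threshold` is just an example keyword.

```python
def call_units_func(units_func, units, **kwargs):
    """Hypothetical caller that forwards aggregation keywords to units_func."""
    return units_func(units, **kwargs)


old_style = lambda units: 1  # accepts only the units argument
new_style = lambda units, **kwargs: 1  # silently ignores extra keywords

# Both signatures work when no extra keywords are forwarded...
assert call_units_func(old_style, "K") == 1
assert call_units_func(new_style, "K") == 1

# ...but only the new one survives keywords such as a threshold.
print(call_units_func(new_style, "K", threshold=280))  # 1
try:
    call_units_func(old_style, "K", threshold=280)
except TypeError as err:
    print("old-style units_func failed:", err)
```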

diff --git a/docs/gallery_code/general/plot_lineplot_with_legend.py b/docs/gallery_code/general/plot_lineplot_with_legend.py

index 78401817ba..aad7906acd 100644

--- a/docs/gallery_code/general/plot_lineplot_with_legend.py

+++ b/docs/gallery_code/general/plot_lineplot_with_legend.py

@@ -24,7 +24,6 @@ def main():

)

for cube in temperature.slices("longitude"):

-

# Create a string label to identify this cube (i.e. latitude: value).

cube_label = "latitude: %s" % cube.coord("latitude").points[0]

diff --git a/docs/gallery_code/general/plot_projections_and_annotations.py b/docs/gallery_code/general/plot_projections_and_annotations.py

index 75122591b9..2cf42e66e0 100644

--- a/docs/gallery_code/general/plot_projections_and_annotations.py

+++ b/docs/gallery_code/general/plot_projections_and_annotations.py

@@ -26,7 +26,6 @@

def make_plot(projection_name, projection_crs):

-

# Create a matplotlib Figure.

plt.figure()

diff --git a/docs/gallery_code/general/plot_zonal_means.py b/docs/gallery_code/general/plot_zonal_means.py

index 08a9578e63..195f8b4bb0 100644

--- a/docs/gallery_code/general/plot_zonal_means.py

+++ b/docs/gallery_code/general/plot_zonal_means.py

@@ -16,7 +16,6 @@

def main():

-

# Loads air_temp.pp and "collapses" longitude into a single, average value.

fname = iris.sample_data_path("air_temp.pp")

temperature = iris.load_cube(fname)

diff --git a/docs/gallery_code/meteorology/plot_lagged_ensemble.py b/docs/gallery_code/meteorology/plot_lagged_ensemble.py

index 5cd2752f39..e15aa0e6ef 100644

--- a/docs/gallery_code/meteorology/plot_lagged_ensemble.py

+++ b/docs/gallery_code/meteorology/plot_lagged_ensemble.py

@@ -86,7 +86,6 @@ def main():

# Iterate over all possible latitude longitude slices.

for cube in last_timestep.slices(["latitude", "longitude"]):

-

# Get the ensemble member number from the ensemble coordinate.

ens_member = cube.coord("realization").points[0]

diff --git a/docs/src/Makefile b/docs/src/Makefile

index 37c2e9e3e6..a75da5371b 100644

--- a/docs/src/Makefile

+++ b/docs/src/Makefile

@@ -62,11 +62,6 @@ html-quick:

@echo

@echo "Build finished. The HTML (no gallery or api docs) pages are in $(BUILDDIR)/html"

-spelling:

- $(SPHINXBUILD) -b spelling $(SRCDIR) $(BUILDDIR)

- @echo

- @echo "Build finished. The HTML (no gallery) pages are in $(BUILDDIR)/html"

-

dirhtml:

$(SPHINXBUILD) -b dirhtml $(ALLSPHINXOPTS) $(BUILDDIR)/dirhtml

@echo

@@ -156,4 +151,5 @@ doctest:

"results in $(BUILDDIR)/doctest/output.txt."

show:

- @python -c "import webbrowser; webbrowser.open_new_tab('file://$(shell pwd)/$(BUILDDIR)/html/index.html')"

\ No newline at end of file

+ @python -c "import webbrowser; webbrowser.open_new_tab('file://$(shell pwd)/$(BUILDDIR)/html/index.html')"

+

diff --git a/docs/src/common_links.inc b/docs/src/common_links.inc

index 530ebc4877..4d03a92715 100644

--- a/docs/src/common_links.inc

+++ b/docs/src/common_links.inc

@@ -9,7 +9,7 @@

.. _conda: https://docs.conda.io/en/latest/

.. _contributor: https://github.com/SciTools/scitools.org.uk/blob/master/contributors.json

.. _core developers: https://github.com/SciTools/scitools.org.uk/blob/master/contributors.json

-.. _generating sss keys for GitHub: https://docs.github.com/en/github/authenticating-to-github/adding-a-new-ssh-key-to-your-github-account

+.. _generating ssh keys for GitHub: https://docs.github.com/en/github/authenticating-to-github/adding-a-new-ssh-key-to-your-github-account

.. _GitHub Actions: https://docs.github.com/en/actions

.. _GitHub Help Documentation: https://docs.github.com/en/github

.. _GitHub Discussions: https://github.com/SciTools/iris/discussions

@@ -40,22 +40,26 @@

.. _CF-UGRID: https://ugrid-conventions.github.io/ugrid-conventions/

.. _issues on GitHub: https://github.com/SciTools/iris/issues?q=is%3Aopen+is%3Aissue+sort%3Areactions-%2B1-desc

.. _python-stratify: https://github.com/SciTools/python-stratify

+.. _iris-esmf-regrid: https://github.com/SciTools-incubator/iris-esmf-regrid

+.. _netCDF4: https://github.com/Unidata/netcdf4-python

.. comment

- Core developers (@github names) in alphabetical order:

+ Core developers and prolific contributors (@github names) in alphabetical order:

.. _@abooton: https://github.com/abooton

.. _@alastair-gemmell: https://github.com/alastair-gemmell

.. _@ajdawson: https://github.com/ajdawson

.. _@bjlittle: https://github.com/bjlittle

.. _@bouweandela: https://github.com/bouweandela

+.. _@bsherratt: https://github.com/bsherratt

.. _@corinnebosley: https://github.com/corinnebosley

.. _@cpelley: https://github.com/cpelley

.. _@djkirkham: https://github.com/djkirkham

.. _@DPeterK: https://github.com/DPeterK

.. _@ESadek-MO: https://github.com/ESadek-MO

.. _@esc24: https://github.com/esc24

+.. _@HGWright: https://github.com/HGWright

.. _@jamesp: https://github.com/jamesp

.. _@jonseddon: https://github.com/jonseddon

.. _@jvegasbsc: https://github.com/jvegasbsc

diff --git a/docs/src/community/index.rst b/docs/src/community/index.rst

new file mode 100644

index 0000000000..114cb96fe9

--- /dev/null

+++ b/docs/src/community/index.rst

@@ -0,0 +1,58 @@

+.. include:: ../common_links.inc

+

+.. todo:

+ consider scientific-python.org

+ consider scientific-python.org/specs/

+

+Iris in the Community

+=====================

+

+Iris aims to be a valuable member of the open source scientific Python

+community.

+

+We listen out for developments in our dependencies and neighbouring projects,

+and we reach out to them when we can solve problems together; please feel free

+to reach out to us!

+

+We are aware of our place in the user's wider 'toolbox' - offering unique

+functionality and interoperating smoothly with other packages.

+

+We welcome contributions from all, whether that's an opinion, a 1-line

+clarification, or a whole new feature 🙂

+

+Quick Links

+-----------

+

+* `GitHub Discussions`_

+* :ref:`Getting involved`

+* `Twitter `_

+

+Interoperability

+----------------

+

+There's a big choice of Python tools out there! Each one has strengths and

+weaknesses in different areas, so we don't want to force a single choice for your

+whole workflow - we'd much rather make it easy for you to choose the right tool

+for the moment, switching whenever you need. Below are our ongoing efforts at

+smoother interoperability:

+

+.. not using toctree due to combination of child pages and cross-references.

+

+* The :mod:`iris.pandas` module

+* :doc:`iris_xarray`

+

+.. toctree::

+ :maxdepth: 1

+ :hidden:

+

+ iris_xarray

+

+Plugins

+-------

+

+Iris can be extended with **plugins**! See below for further information:

+

+.. toctree::

+ :maxdepth: 2

+

+ plugins

diff --git a/docs/src/community/iris_xarray.rst b/docs/src/community/iris_xarray.rst

new file mode 100644

index 0000000000..859597da78

--- /dev/null

+++ b/docs/src/community/iris_xarray.rst

@@ -0,0 +1,154 @@

+.. include:: ../common_links.inc

+

+======================

+Iris ❤️ :term:`Xarray`

+======================

+

+There is a lot of overlap between Iris and :term:`Xarray`, but some important

+differences too. Below is a summary of the most important differences, so that

+you can be prepared, and to help you choose the best package for your use case.

+

+Overall Experience

+------------------

+

+Iris is the more specialised package, focussed on making it as easy

+as possible to work with meteorological and climatological data. Iris

+is built to natively handle many key concepts, such as the CF conventions,

+coordinate systems and bounded coordinates. Iris offers a smaller toolkit of

+operations compared to Xarray, particularly in its API for sophisticated

+computation such as array manipulation and multi-processing.

+

+Xarray's more generic data model and community-driven development give it a

+richer range of operations and broader possible uses. Using Xarray

+specifically for meteorology/climatology may require deeper knowledge

+than using Iris, and you may prefer to add Xarray plugins

+such as :ref:`cfxarray` to get the best experience. Advanced users can likely

+achieve better performance with Xarray than with Iris.

+

+Conversion

+----------

+There are multiple ways to convert between Iris and Xarray objects.

+

+* Xarray includes the :meth:`~xarray.DataArray.to_iris` and

+ :meth:`~xarray.DataArray.from_iris` methods - detailed in the

+ `Xarray IO notes on Iris`_. Since Iris evolves independently of Xarray, be

+ vigilant for concepts that may be lost during the conversion.

+* Because both packages are closely linked to the :term:`NetCDF Format`, it is

+ feasible to save a NetCDF file using one package then load that file using

+ the other package. This will be lossy in places, as both Iris and Xarray

+ are opinionated on how certain NetCDF concepts relate to their data models.

+* The Iris development team are exploring an improved 'bridge' between the two

+ packages. Follow the conversation on GitHub: `iris#4994`_. This project is

+ expressly intended to be as lossless as possible.

+

+Regridding

+----------

+Iris and Xarray offer a range of regridding methods - both natively and via

+additional packages such as `iris-esmf-regrid`_ and `xESMF`_ - which overlap

+in places

+but tend to cover a different set of use cases (e.g. Iris handles unstructured

+meshes but offers access to fewer ESMF methods). The behaviour of these

+regridders also differs slightly (even between different regridders attached to

+the same package) so the appropriate package to use depends highly on the

+particulars of the use case.

+

+Plotting

+--------

+Xarray and Iris have a large overlap of functionality when creating

+:term:`Matplotlib` plots and both support the plotting of multidimensional

+coordinates. This means the experience is largely similar using either package.

+

+Xarray supports further plotting backends through external packages (e.g. Bokeh through `hvPlot`_),

+and users already familiar with `pandas`_ will recognise its plotting

+interface. It also supports some plot types that Iris does not, so it can be

+used for a wider variety of plots, and it makes quick, "out of the box"

+customisations easier. However, further customisation still requires

+knowledge of Matplotlib.

+

+Both packages can use :term:`Cartopy`. Iris does more work

+automatically for the user here, creating Cartopy

+:class:`~cartopy.mpl.geoaxes.GeoAxes` for latitude and longitude coordinates,

+whereas the user has to do this manually in Xarray.

+

+Statistics

+----------

+Both libraries are broadly comparable, with similar capabilities,

+performance and laziness. Iris is more specialised in some cases, offering

+some unique functions and tolerating masked data in most statistics.

+Xarray is arguably more approachable, with less specialised but more

+convenient solutions (these tend to be wrappers around :term:`Dask` functions).

+

+Laziness and Multi-Processing with :term:`Dask`

+-----------------------------------------------

+Iris and Xarray both support lazy data and out-of-core processing through

+utilisation of Dask.

+

+While both Iris and Xarray expose :term:`NumPy` conveniences at the API level

+(e.g. the ``ndim`` property), only Xarray exposes Dask conveniences. For

+example, :attr:`xarray.DataArray.chunks` reports the underlying Dask array

+chunks and :meth:`xarray.DataArray.chunk` lets the user change them directly.

+The Iris API instead takes control of such concepts, and user control is only

+possible by manipulating the underlying Dask array directly (accessed via

+:meth:`iris.cube.Cube.core_data`).

+

+:class:`xarray.DataArray`\ s comply with `NEP-18`_, allowing NumPy arrays to be

+based on them, and they also include the necessary extra members for Dask

+arrays to be based on them too. Neither of these is currently possible with

+Iris :class:`~iris.cube.Cube`\ s, although this is an ambition for the future.

+

+NetCDF File Control

+-------------------

+(More info: :term:`NetCDF Format`)

+

+Unlike Iris, Xarray generally provides full control of major file structures,

+i.e. dimensions + variables, including their order in the file. It mostly

+respects these in a file input, and can reproduce them on output.

+However, attribute handling is not so complete: like Iris, it interprets and

+modifies some recognised aspects, and can add some extra attributes not in the

+input.

+

+.. todo:

+ More detail on dates and fill values (@pp-mo suggestion).

+

+The handling of dates and fill values poses some special problems here.

+

+Ultimately, nearly everything wanted in a particular desired result file can

+be achieved in Xarray, via provided override mechanisms (`loading keywords`_

+and the '`encoding`_' dictionaries).

+

+Missing Data

+------------

+Xarray uses :data:`numpy.nan` to represent missing values and this will support

+many simple use cases assuming the data are floats. Iris enables more

+sophisticated missing data handling by representing missing values as masks

+(:class:`numpy.ma.MaskedArray` for real data and :class:`dask.array.Array`

+for lazy data) which allows data to be any data type and to include either/both

+a mask and :data:`~numpy.nan`\ s.

+

+.. _cfxarray:

+

+`cf-xarray`_

+-------------

+Iris has a data model entirely based on :term:`CF Conventions`. Xarray has a

+data model based on :term:`NetCDF Format`, with cf-xarray acting as a

+translation layer into CF. Xarray/cf-xarray methods can be called and data

+accessed with CF-like arguments (e.g. axis, standard name), and there are

+some CF-specific utilities (similar to Iris utilities). Iris tends to cover

+more of CF and to be stricter about it.

+

+

+.. seealso::

+

+ * `Xarray IO notes on Iris`_

+ * `Xarray notes on other NetCDF libraries`_

+

+.. _Xarray IO notes on Iris: https://docs.xarray.dev/en/stable/user-guide/io.html#iris

+.. _Xarray notes on other NetCDF libraries: https://docs.xarray.dev/en/stable/getting-started-guide/faq.html#what-other-netcdf-related-python-libraries-should-i-know-about

+.. _loading keywords: https://docs.xarray.dev/en/stable/generated/xarray.open_dataset.html#xarray.open_dataset

+.. _encoding: https://docs.xarray.dev/en/stable/user-guide/io.html#writing-encoded-data

+.. _xESMF: https://github.com/pangeo-data/xESMF/

+.. _seaborn: https://seaborn.pydata.org/

+.. _hvPlot: https://hvplot.holoviz.org/

+.. _pandas: https://pandas.pydata.org/

+.. _NEP-18: https://numpy.org/neps/nep-0018-array-function-protocol.html

+.. _cf-xarray: https://github.com/xarray-contrib/cf-xarray

+.. _iris#4994: https://github.com/SciTools/iris/issues/4994
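The Missing Data contrast described in `iris_xarray.rst` can be seen with NumPy alone. A NaN sentinel needs a floating-point array, whereas a mask works for any dtype and can coexist with genuine NaNs. The following is a minimal sketch using plain NumPy; no Iris or Xarray objects are involved.

```python
import numpy as np

# NaN-based missing data requires a float dtype.
floats = np.array([1.0, np.nan, 3.0])
print(np.nanmean(floats))  # 2.0

# An integer array cannot store NaN at all.
ints = np.array([1, 2, 3])
try:
    ints[1] = np.nan
except ValueError as err:
    print("NaN rejected by integer array:", err)

# A mask marks missing points without changing the dtype.
masked_ints = np.ma.masked_array([1, 2, 3], mask=[False, True, False])
print(masked_ints.dtype, masked_ints.mean())  # mean of 1 and 3

# A masked float array can carry both a mask and a real NaN.
mixed = np.ma.masked_array([1.0, np.nan, 3.0, 4.0], mask=[False, False, True, False])
print(mixed.count())  # 3 unmasked values, one of which is NaN
```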

diff --git a/docs/src/community/plugins.rst b/docs/src/community/plugins.rst

new file mode 100644

index 0000000000..0d79d64623

--- /dev/null

+++ b/docs/src/community/plugins.rst

@@ -0,0 +1,68 @@

+.. _namespace package: https://packaging.python.org/en/latest/guides/packaging-namespace-packages/

+

+.. _community_plugins:

+

+Plugins

+=======

+

+Iris supports **plugins** under the ``iris.plugins`` `namespace package`_.

+This allows packages that extend Iris' functionality to be developed and

+maintained independently, while still being installed into ``iris.plugins``

+instead of a separate package. For example, a plugin may provide loaders or

+savers for additional file formats, or alternative visualisation methods.

+

+

+Using plugins

+-------------

+

+Once a plugin is installed, it can be used either via the

+:func:`iris.use_plugin` function, or by importing it directly:

+

+.. code-block:: python

+

+ import iris

+

+ iris.use_plugin("my_plugin")

+ # OR

+ import iris.plugins.my_plugin

+

+

+Creating plugins

+----------------

+

+The choice of a `namespace package`_ makes writing a plugin relatively

+straightforward: it simply needs to appear as a folder within ``iris/plugins``,

+then can be distributed in the same way as any other package. An example

+repository layout:

+

+.. code-block:: text

+

+ + lib

+ + iris

+ + plugins

+ + my_plugin

+ - __init__.py

+ - (more code...)

+ - README.md

+ - pyproject.toml

+ - setup.cfg

+ - (other project files...)

+

+In particular, note that there must **not** be any ``__init__.py`` files at

+higher levels than the plugin itself.

+

+The package name - how it is referred to by PyPI/conda, specified by

+``metadata.name`` in ``setup.cfg`` - should ideally include both "iris" and

+the plugin name. Continuing this example, its ``setup.cfg`` should include, at

+minimum:

+

+.. code-block:: ini

+

+ [metadata]

+ name = iris-my-plugin

+

+ [options]

+ packages = find_namespace:

+

+ [options.packages.find]

+ where = lib
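The namespace-package mechanism is standard Python (PEP 420), so it can be demonstrated without Iris. The sketch below builds the repository layout described above in a temporary directory, with no `__init__.py` above the plugin folder. It then imports the plugin by name via `importlib`. To avoid clashing with a real Iris installation, it uses a hypothetical top-level name, `demo_iris`. The `use_plugin` helper here is an assumption about roughly what such a function needs to do, not a copy of `iris.use_plugin`.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def use_plugin(plugin_name: str, namespace: str = "demo_iris.plugins"):
    """Import a plugin by name from a namespace package (hypothetical helper)."""
    return importlib.import_module(f"{namespace}.{plugin_name}")


with tempfile.TemporaryDirectory() as tmp:
    # Mirror the layout: lib/<host>/plugins/<plugin>/__init__.py, with NO
    # __init__.py in lib/<host> or lib/<host>/plugins.
    lib_dir = Path(tmp, "lib")
    plugin_dir = lib_dir / "demo_iris" / "plugins" / "my_plugin"
    plugin_dir.mkdir(parents=True)
    (plugin_dir / "__init__.py").write_text('GREETING = "hello from my_plugin"\n')

    sys.path.insert(0, str(lib_dir))
    importlib.invalidate_caches()
    try:
        plugin = use_plugin("my_plugin")
        print(plugin.GREETING)  # hello from my_plugin
        # The intermediate package is a namespace package, so it has no file.
        print(getattr(sys.modules["demo_iris.plugins"], "__file__", None))
    finally:
        sys.path.remove(str(lib_dir))
```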

diff --git a/docs/src/conf.py b/docs/src/conf.py

index 499d50116b..d2a204d3b3 100644

--- a/docs/src/conf.py

+++ b/docs/src/conf.py

@@ -158,9 +158,6 @@ def _dotv(version):

"sphinx_copybutton",

"sphinx.ext.napoleon",

"sphinx_panels",

- "sphinx_togglebutton",

- # TODO: Spelling extension disabled until the dependencies can be included

- # "sphinxcontrib.spelling",

"sphinx_gallery.gen_gallery",

"matplotlib.sphinxext.mathmpl",

"matplotlib.sphinxext.plot_directive",

@@ -199,16 +196,6 @@ def _dotv(version):

napoleon_use_keyword = True

napoleon_custom_sections = None

-# -- spellingextension --------------------------------------------------------

-# See https://sphinxcontrib-spelling.readthedocs.io/en/latest/customize.html

-spelling_lang = "en_GB"

-# The lines in this file must only use line feeds (no carriage returns).

-spelling_word_list_filename = ["spelling_allow.txt"]

-spelling_show_suggestions = False

-spelling_show_whole_line = False

-spelling_ignore_importable_modules = True

-spelling_ignore_python_builtins = True

-

# -- copybutton extension -----------------------------------------------------

# See https://sphinx-copybutton.readthedocs.io/en/latest/

copybutton_prompt_text = r">>> |\.\.\. "

@@ -241,6 +228,7 @@ def _dotv(version):

"python": ("https://docs.python.org/3/", None),

"scipy": ("https://docs.scipy.org/doc/scipy/", None),

"pandas": ("https://pandas.pydata.org/docs/", None),

+ "dask": ("https://docs.dask.org/en/stable/", None),

}

# The name of the Pygments (syntax highlighting) style to use.

@@ -289,7 +277,8 @@ def _dotv(version):

# See https://pydata-sphinx-theme.readthedocs.io/en/latest/user_guide/configuring.html

html_theme_options = {

- "footer_items": ["copyright", "sphinx-version", "custom_footer"],

+ "footer_start": ["copyright", "sphinx-version"],

+ "footer_end": ["custom_footer"],

"collapse_navigation": True,

"navigation_depth": 3,

"show_prev_next": True,

@@ -381,8 +370,6 @@ def _dotv(version):

"http://www.esrl.noaa.gov/psd/data/gridded/conventions/cdc_netcdf_standard.shtml",

"http://www.nationalarchives.gov.uk/doc/open-government-licence",

"https://www.metoffice.gov.uk/",

- # TODO: try removing this again in future - was raising an SSLError.

- "http://www.ecmwf.int/",

]

# list of sources to exclude from the build.

diff --git a/docs/src/developers_guide/contributing_documentation_full.rst b/docs/src/developers_guide/contributing_documentation_full.rst

index 390f2eeea7..a470def683 100755

--- a/docs/src/developers_guide/contributing_documentation_full.rst

+++ b/docs/src/developers_guide/contributing_documentation_full.rst

@@ -113,18 +113,6 @@ adding it to the ``linkcheck_ignore`` array that is defined in the

If this fails check the output for the text **broken** and then correct

or ignore the url.

-.. comment

- Finally, the spelling in the documentation can be checked automatically via the

- command::

-

- make spelling

-

- The spelling check may pull up many technical abbreviations and acronyms. This

- can be managed by using an **allow** list in the form of a file. This file,

- or list of files is set in the `conf.py`_ using the string list

- ``spelling_word_list_filename``.

-

-

.. note:: In addition to the automated `Iris GitHub Actions`_ build of all the

documentation build options above, the

https://readthedocs.org/ service is also used. The configuration

diff --git a/docs/src/developers_guide/gitwash/forking.rst b/docs/src/developers_guide/gitwash/forking.rst

index 247e3cf678..baeb243c86 100644

--- a/docs/src/developers_guide/gitwash/forking.rst

+++ b/docs/src/developers_guide/gitwash/forking.rst

@@ -18,7 +18,7 @@ Set up and Configure a Github Account

If you don't have a github account, go to the github page, and make one.

You then need to configure your account to allow write access, see

-the `generating sss keys for GitHub`_ help on `github help`_.

+the `generating ssh keys for GitHub`_ help on `github help`_.

Create Your own Forked Copy of Iris

diff --git a/docs/src/developers_guide/gitwash/set_up_fork.rst b/docs/src/developers_guide/gitwash/set_up_fork.rst

index d5c5bc5c44..5318825488 100644

--- a/docs/src/developers_guide/gitwash/set_up_fork.rst

+++ b/docs/src/developers_guide/gitwash/set_up_fork.rst

@@ -15,7 +15,7 @@ Overview

git clone git@github.com:your-user-name/iris.git

cd iris

- git remote add upstream git://github.com/SciTools/iris.git

+ git remote add upstream git@github.com:SciTools/iris.git

In Detail

=========

diff --git a/docs/src/developers_guide/release.rst b/docs/src/developers_guide/release.rst

index de7aa6c719..bae77a7d21 100644

--- a/docs/src/developers_guide/release.rst

+++ b/docs/src/developers_guide/release.rst

@@ -277,6 +277,11 @@ Post Release Steps

#. On main, make a new ``latest.rst`` from ``latest.rst.template`` and update

the include statement and the toctree in ``index.rst`` to point at the new

``latest.rst``.

+#. Consider updating ``docs/src/userguide/citation.rst`` on ``main`` to include

+ the version number, date and `Zenodo DOI `_

+ of the new release. Ideally this would be updated before the release, but

+ the DOI for the new version is only available once the release has been

+ created in GitHub.

.. _SciTools/iris: https://github.com/SciTools/iris

diff --git a/docs/src/further_topics/metadata.rst b/docs/src/further_topics/metadata.rst

index de1afb15af..4c55047d4c 100644

--- a/docs/src/further_topics/metadata.rst

+++ b/docs/src/further_topics/metadata.rst

@@ -389,10 +389,10 @@ instances. Normally, this would cause issues. For example,

.. doctest:: richer-metadata

- >>> simply = {"one": np.int(1), "two": np.array([1.0, 2.0])}

+ >>> simply = {"one": np.int32(1), "two": np.array([1.0, 2.0])}

>>> simply

{'one': 1, 'two': array([1., 2.])}

- >>> fruity = {"one": np.int(1), "two": np.array([1.0, 2.0])}

+ >>> fruity = {"one": np.int32(1), "two": np.array([1.0, 2.0])}

>>> fruity

{'one': 1, 'two': array([1., 2.])}

>>> simply == fruity

@@ -419,7 +419,7 @@ However, metadata class equality is rich enough to handle this eventuality,

>>> metadata1

CubeMetadata(standard_name='air_temperature', long_name=None, var_name='air_temperature', units=Unit('K'), attributes={'one': 1, 'two': array([1., 2.])}, cell_methods=(CellMethod(method='mean', coord_names=('time',), intervals=('6 hour',), comments=()),))

- >>> metadata2 = cube.metadata._replace(attributes={"one": np.int(1), "two": np.array([1000.0, 2000.0])})

+ >>> metadata2 = cube.metadata._replace(attributes={"one": np.int32(1), "two": np.array([1000.0, 2000.0])})

>>> metadata2

CubeMetadata(standard_name='air_temperature', long_name=None, var_name='air_temperature', units=Unit('K'), attributes={'one': 1, 'two': array([1000., 2000.])}, cell_methods=(CellMethod(method='mean', coord_names=('time',), intervals=('6 hour',), comments=()),))

>>> metadata1 == metadata2
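Plain dictionaries fail in the `simply == fruity` case because dict equality compares values pairwise. Comparing two multi-element NumPy arrays yields a boolean array whose truth value is ambiguous. Below is a minimal reproduction with plain NumPy, plus one possible element-wise workaround. The `attributes_equal` helper is a hypothetical illustration, not how Iris metadata classes implement their rich comparison.

```python
import numpy as np

simply = {"one": np.int32(1), "two": np.array([1.0, 2.0])}
fruity = {"one": np.int32(1), "two": np.array([1.0, 2.0])}

try:
    simply == fruity  # compares the "two" arrays and then calls bool() on the result
except ValueError as err:
    print("dict comparison failed:", err)


def attributes_equal(lhs: dict, rhs: dict) -> bool:
    """Compare attribute dicts, treating array values element-wise."""
    if lhs.keys() != rhs.keys():
        return False
    return all(np.array_equal(lhs[key], rhs[key]) for key in lhs)


print(attributes_equal(simply, fruity))  # True
print(attributes_equal(simply, {"one": 1, "two": np.array([1000.0, 2000.0])}))  # False
```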

diff --git a/docs/src/further_topics/ugrid/partner_packages.rst b/docs/src/further_topics/ugrid/partner_packages.rst

index 8e36f4ffc2..75b54b037f 100644

--- a/docs/src/further_topics/ugrid/partner_packages.rst

+++ b/docs/src/further_topics/ugrid/partner_packages.rst

@@ -1,3 +1,5 @@

+.. include:: ../../common_links.inc

+

.. _ugrid partners:

Iris' Mesh Partner Packages

@@ -97,4 +99,3 @@ Applications

.. _GeoVista: https://github.com/bjlittle/geovista

.. _PyVista: https://docs.pyvista.org/index.html

-.. _iris-esmf-regrid: https://github.com/SciTools-incubator/iris-esmf-regrid

diff --git a/docs/src/index.rst b/docs/src/index.rst

index c5d654ed31..531c0e0b26 100644

--- a/docs/src/index.rst

+++ b/docs/src/index.rst

@@ -136,6 +136,15 @@ The legacy support resources:

developers_guide/contributing_getting_involved

+.. toctree::

+ :caption: Community

+ :maxdepth: 1

+ :name: community_index

+ :hidden:

+

+ Community <community/index>

+

+

.. toctree::

:caption: Iris API

:maxdepth: 1

diff --git a/docs/src/installing.rst b/docs/src/installing.rst

index 6a2d2f6131..b2481973c0 100644

--- a/docs/src/installing.rst

+++ b/docs/src/installing.rst

@@ -14,7 +14,7 @@ Subsystem for Linux). This is a great option to get started with Iris

for users and developers. Be aware that we do not currently test against

any WSL_ distributions.

-.. _WSL: https://docs.microsoft.com/en-us/windows/wsl/install-win10

+.. _WSL: https://learn.microsoft.com/en-us/windows/wsl/install

.. note:: Iris is currently supported and tested against |python_support|

running on Linux. We do not currently actively test on other

diff --git a/docs/src/spelling_allow.txt b/docs/src/spelling_allow.txt

deleted file mode 100644

index ed883ac3bf..0000000000

--- a/docs/src/spelling_allow.txt

+++ /dev/null

@@ -1,361 +0,0 @@

-Admin

-Albers

-Arakawa

-Arg

-Args

-Autoscale

-Biggus

-CF

-CI

-Cartopy

-Checklist

-Color

-Conda

-Constraining

-DAP

-Dask

-Debian

-Duchon

-EO

-Eos

-Exner

-Fieldsfile

-Fieldsfiles

-FillValue

-Gb

-GeogCS

-Hovmoller

-Jul

-Jun

-Jupyter

-Lanczos

-Mappables

-Matplotlib

-Mb

-Modeling

-Mollweide

-NetCDF

-Nino

-PPfield

-PPfields

-Perez

-Proj

-Quickplot

-Regrids

-Royer

-Scitools

-Scitools

-Sep

-Stehfest

-Steroegraphic

-Subsetting

-TestCodeFormat

-TestLicenseHeaders

-Torvalds

-Trans

-Trenberth

-Tri

-URIs

-URLs

-Ubuntu

-Ugrid

-Unidata

-Vol

-Vuuren

-Workflow

-Yury

-Zaytsev

-Zorder

-abf

-abl

-advection

-aggregator

-aggregators

-alphap

-ancils

-antimeridian

-ap

-arg

-args

-arithmetic

-arraylike

-atol

-auditable

-aux

-basemap

-behaviour

-betap

-bhulev

-biggus

-blev

-boolean

-boundpoints

-branchname

-broadcastable

-bugfix

-bugfixes

-builtin

-bulev

-carrée

-cartesian

-celsius

-center

-centrepoints

-cf

-cftime

-chunksizes

-ci

-clabel

-cmap

-cmpt

-codebase

-color

-colorbar

-colorbars

-complevel

-conda

-config

-constraining

-convertor

-coord

-coords

-cs

-datafiles

-datatype

-datetime

-datetimes

-ddof

-deepcopy

-deprecations

-der

-dewpoint

-dict

-dicts

-diff

-discontiguities

-discontiguous

-djf

-docstring

-docstrings

-doi

-dom

-dropdown

-dtype

-dtypes

-dx

-dy

-edgecolor

-endian

-endianness

-equirectangular

-eta

-etc

-fh

-fieldsfile

-fieldsfiles

-fileformat

-fileformats

-filename

-filenames

-filepath

-filespec

-fullname

-func

-geolocations

-github

-gregorian

-grib

-gribapi

-gridcell

-griddata

-gridlines

-hPa

-hashable

-hindcast

-hyperlink

-hyperlinks

-idiff

-ieee

-ifunc

-imagehash

-inc

-init

-inline

-inplace

-int

-interable

-interpolator

-ints

-io

-isosurfaces

-iterable

-jja

-jupyter

-kwarg

-kwargs

-landsea

-lat

-latlon

-latlons

-lats

-lbcode

-lbegin

-lbext

-lbfc

-lbft

-lblrec

-lbmon

-lbmond

-lbnrec

-lbrsvd

-lbtim

-lbuser

-lbvc

-lbyr

-lbyrd

-lh

-lhs

-linewidth

-linted

-linting

-lon

-lons

-lt

-mam

-markup

-matplotlib

-matplotlibrc

-max

-mdtol

-meaned

-mercator

-metadata

-min

-mpl

-nanmask

-nc

-ndarray

-neighbor

-ness

-netCDF

-netcdf

-netcdftime

-nimrod

-np

-nsigma

-numpy

-nx

-ny

-online

-orog

-paramId

-params

-parsable

-pcolormesh

-pdf

-placeholders

-plugin

-png

-proj

-ps

-pseudocolor

-pseudocolour

-pseudocoloured

-py

-pyplot

-quickplot

-rST

-rc

-rd

-reST

-reStructuredText

-rebase

-rebases

-rebasing

-regrid

-regridded

-regridder

-regridders

-regridding

-regrids

-rel

-repo

-repos

-reprojecting

-rh

-rhs

-rst

-rtol

-scipy

-scitools

-seekable

-setup

-sines

-sinh

-spec

-specs

-src

-ssh

-st

-stashcode

-stashcodes

-stats

-std

-stdout

-str

-subcube

-subcubes

-submodule

-submodules

-subsetting

-sys

-tanh

-tb

-testcases

-tgt

-th

-timepoint

-timestamp

-timesteps

-todo

-tol

-tos

-traceback

-travis

-tripolar

-tuple

-tuples

-txt

-udunits

-ufunc

-ugrid

-ukmo

-un

-unhandled

-unicode

-unittest

-unrotate

-unrotated

-uris

-url

-urls

-util

-var

-versioning

-vmax

-vmin

-waypoint

-waypoints

-whitespace

-wildcard

-wildcards

-windspeeds

-withnans

-workflow

-workflows

-xN

-xx

-xxx

-zeroth

-zlev

-zonal

diff --git a/docs/src/userguide/citation.rst b/docs/src/userguide/citation.rst

index 0a3a85fb89..1498b9dfe1 100644

--- a/docs/src/userguide/citation.rst

+++ b/docs/src/userguide/citation.rst

@@ -15,11 +15,12 @@ For example::

@manual{Iris,

author = {{Met Office}},

- title = {Iris: A Python package for analysing and visualising meteorological and oceanographic data sets},

- edition = {v1.2},

- year = {2010 - 2013},

+ title = {Iris: A powerful, format-agnostic, and community-driven Python package for analysing and visualising Earth science data },

+ edition = {v3.4},

+ year = {2010 - 2022},

address = {Exeter, Devon },

- url = {http://scitools.org.uk/}

+ url = {http://scitools.org.uk/},

+ doi = {10.5281/zenodo.7386117}

}

@@ -33,7 +34,7 @@ Suggested format::

For example::

- Iris. v1.2. 28-Feb-2013. Met Office. UK. https://github.com/SciTools/iris/archive/v1.2.0.tar.gz 01-03-2013

+ Iris. v3.4. 1-Dec-2022. Met Office. UK. https://doi.org/10.5281/zenodo.7386117 22-12-2022

********************

@@ -46,7 +47,7 @@ Suggested format::

For example::

- Iris. Met Office. git@github.com:SciTools/iris.git 06-03-2013

+ Iris. Met Office. git@github.com:SciTools/iris.git 22-12-2022

.. _How to cite and describe software: https://software.ac.uk/how-cite-software

diff --git a/docs/src/userguide/cube_maths.rst b/docs/src/userguide/cube_maths.rst

index 9c0898b62c..56a2041bd3 100644

--- a/docs/src/userguide/cube_maths.rst

+++ b/docs/src/userguide/cube_maths.rst

@@ -5,8 +5,8 @@ Cube Maths

==========

-The section :doc:`navigating_a_cube` highlighted that

-every cube has a data attribute;

+The section :doc:`navigating_a_cube` highlighted that

+every cube has a data attribute;

this attribute can then be manipulated directly::

cube.data -= 273.15

@@ -37,7 +37,7 @@ Let's load some air temperature which runs from 1860 to 2100::

filename = iris.sample_data_path('E1_north_america.nc')

air_temp = iris.load_cube(filename, 'air_temperature')

-We can now get the first and last time slices using indexing

+We can now get the first and last time slices using indexing

(see :ref:`cube_indexing` for a reminder)::

t_first = air_temp[0, :, :]

@@ -50,8 +50,8 @@ We can now get the first and last time slices using indexing

t_first = air_temp[0, :, :]

t_last = air_temp[-1, :, :]

-And finally we can subtract the two.

-The result is a cube of the same size as the original two time slices,

+And finally we can subtract the two.

+The result is a cube of the same size as the original two time slices,

but with the data representing their difference:

>>> print(t_last - t_first)

@@ -70,8 +70,8 @@ but with the data representing their difference:

.. note::

- Notice that the coordinates "time" and "forecast_period" have been removed

- from the resultant cube;

+ Notice that the coordinates "time" and "forecast_period" have been removed

+ from the resultant cube;

this is because these coordinates differed between the two input cubes.

@@ -174,15 +174,15 @@ broadcasting behaviour::

Combining Multiple Phenomena to Form a New One

----------------------------------------------

-Combining cubes of potential-temperature and pressure we can calculate

+Combining cubes of potential-temperature and pressure we can calculate

the associated temperature using the equation:

.. math::

-

+

T = \theta (\frac{p}{p_0}) ^ {(287.05 / 1005)}

-Where :math:`p` is pressure, :math:`\theta` is potential temperature,

-:math:`p_0` is the potential temperature reference pressure

+Where :math:`p` is pressure, :math:`\theta` is potential temperature,

+:math:`p_0` is the potential temperature reference pressure

and :math:`T` is temperature.

First, let's load pressure and potential temperature cubes::

@@ -191,7 +191,7 @@ First, let's load pressure and potential temperature cubes::

phenomenon_names = ['air_potential_temperature', 'air_pressure']

pot_temperature, pressure = iris.load_cubes(filename, phenomenon_names)

-In order to calculate :math:`\frac{p}{p_0}` we can define a coordinate which

+In order to calculate :math:`\frac{p}{p_0}` we can define a coordinate which

represents the standard reference pressure of 1000 hPa::

import iris.coords

@@ -205,7 +205,7 @@ the :meth:`iris.coords.Coord.convert_units` method::

p0.convert_units(pressure.units)

-Now we can combine all of this information to calculate the air temperature

+Now we can combine all of this information to calculate the air temperature

using the equation above::

temperature = pot_temperature * ( (pressure / p0) ** (287.05 / 1005) )

@@ -219,12 +219,12 @@ The result could now be plotted using the guidance provided in the

.. only:: html

- A very similar example to this can be found in

+ A very similar example to this can be found in

:ref:`sphx_glr_generated_gallery_meteorology_plot_deriving_phenomena.py`.

.. only:: latex

- A very similar example to this can be found in the examples section,

+ A very similar example to this can be found in the examples section,

with the title "Deriving Exner Pressure and Air Temperature".

.. _cube_maths_combining_units:

@@ -249,7 +249,7 @@ unit (if ``a`` had units ``'m2'`` then ``a ** 0.5`` would result in a cube

with units ``'m'``).

Iris inherits units from `cf_units `_

-which in turn inherits from `UDUNITS `_.

+which in turn inherits from `UDUNITS `_.

As well as the units UDUNITS provides, cf units also provides the units

``'no-unit'`` and ``'unknown'``. A unit of ``'no-unit'`` means that the

associated data is not suitable for describing with a unit, cf units
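The cube arithmetic in the hunks above evaluates T = θ (p/p0)^(287.05/1005) elementwise across whole cubes. The sketch below is plain Python with no Iris, and the helper name `temperature_from_theta` is hypothetical. It applies the same formula to scalars. It assumes p0 = 1000 hPa expressed in Pa, so both pressures share units before the ratio is taken; this mirrors the `convert_units` step in the guide.

```python
# Exponent from the user-guide equation: 287.05 / 1005 (R / c_p for dry air).
KAPPA = 287.05 / 1005


def temperature_from_theta(theta_k, pressure_pa, p0_pa=100000.0):
    """Return temperature (K) from potential temperature (K) and pressure (Pa).

    Hypothetical helper: p0 defaults to 1000 hPa written in Pa, so the
    pressure ratio is dimensionless, as after ``p0.convert_units(...)``.
    """
    return theta_k * (pressure_pa / p0_pa) ** KAPPA


# At the reference pressure, temperature equals potential temperature.
print(temperature_from_theta(300.0, 100000.0))
# At 500 hPa, a parcel with a potential temperature of 300 K is roughly 246 K.
print(round(temperature_from_theta(300.0, 50000.0), 1))
```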

diff --git a/docs/src/userguide/cube_statistics.rst b/docs/src/userguide/cube_statistics.rst

index 08297c2a51..9dc21f91b5 100644

--- a/docs/src/userguide/cube_statistics.rst

+++ b/docs/src/userguide/cube_statistics.rst

@@ -14,7 +14,7 @@ Cube Statistics

Collapsing Entire Data Dimensions

---------------------------------

-.. testsetup::

+.. testsetup:: collapsing

import iris

filename = iris.sample_data_path('uk_hires.pp')

@@ -125,7 +125,7 @@ in order to calculate the area of the grid boxes::

These areas can now be passed to the ``collapsed`` method as weights:

-.. doctest::

+.. doctest:: collapsing

>>> new_cube = cube.collapsed(['grid_longitude', 'grid_latitude'], iris.analysis.MEAN, weights=grid_areas)

>>> print(new_cube)

@@ -141,8 +141,8 @@ These areas can now be passed to the ``collapsed`` method as weights:

altitude - x

Scalar coordinates:

forecast_reference_time 2009-11-19 04:00:00

- grid_latitude 1.5145501 degrees, bound=(0.14430022, 2.8848) degrees

- grid_longitude 358.74948 degrees, bound=(357.494, 360.00497) degrees

+ grid_latitude 1.5145501 degrees, bound=(0.13755022, 2.89155) degrees

+ grid_longitude 358.74948 degrees, bound=(357.48724, 360.01172) degrees

surface_altitude 399.625 m, bound=(-14.0, 813.25) m

Cell methods:

mean grid_longitude, grid_latitude

@@ -155,6 +155,50 @@ Several examples of area averaging exist in the gallery which may be of interest

including an example on taking a :ref:`global area-weighted mean

`.

+In addition to plain arrays, weights can also be given as cubes or (names of)

+:meth:`~iris.cube.Cube.coords`, :meth:`~iris.cube.Cube.cell_measures`, or

+:meth:`~iris.cube.Cube.ancillary_variables`.

+This has the advantage of correct unit handling, e.g., for area-weighted sums

+the units of the resulting cube are multiplied by an area unit:

+

+.. doctest:: collapsing

+

+ >>> from iris.coords import CellMeasure

+ >>> cell_areas = CellMeasure(

+ ... grid_areas,

+ ... standard_name='cell_area',

+ ... units='m2',

+ ... measure='area',

+ ... )

+ >>> cube.add_cell_measure(cell_areas, (0, 1, 2, 3))

+ >>> area_weighted_sum = cube.collapsed(

+ ... ['grid_longitude', 'grid_latitude'],

+ ... iris.analysis.SUM,

+ ... weights='cell_area'

+ ... )

+ >>> print(area_weighted_sum)

+ air_potential_temperature / (m2.K) (time: 3; model_level_number: 7)

+ Dimension coordinates:

+ time x -

+ model_level_number - x

+ Auxiliary coordinates:

+ forecast_period x -

+ level_height - x

+ sigma - x

+ Derived coordinates:

+ altitude - x

+ Scalar coordinates:

+ forecast_reference_time 2009-11-19 04:00:00

+ grid_latitude 1.5145501 degrees, bound=(0.13755022, 2.89155) degrees

+ grid_longitude 358.74948 degrees, bound=(357.48724, 360.01172) degrees

+ surface_altitude 399.625 m, bound=(-14.0, 813.25) m

+ Cell methods:

+ sum grid_longitude, grid_latitude

+ Attributes:

+ STASH m01s00i004

+ source 'Data from Met Office Unified Model'

+ um_version '7.3'

+

.. _cube-statistics-aggregated-by:

Partially Reducing Data Dimensions

@@ -338,3 +382,44 @@ from jja-2006 to jja-2010:

mam 2010

jja 2010

+Moreover, :meth:`Cube.aggregated_by ` supports

+weighted aggregation.

+For example, this is helpful for an aggregation over a monthly time

+coordinate that consists of months with different numbers of days.

+Similar to :meth:`Cube.collapsed `, weights can be

+given as arrays, cubes, or as (names of) :meth:`~iris.cube.Cube.coords`,

+:meth:`~iris.cube.Cube.cell_measures`, or

+:meth:`~iris.cube.Cube.ancillary_variables`.

+When weights are not given as arrays, units are correctly handled for weighted

+sums, i.e., the original unit of the cube is multiplied by the units of the

+weights.

+The following example shows a weighted sum (notice the change of the units):

+

+.. doctest:: aggregation

+

+ >>> from iris.coords import AncillaryVariable

+ >>> time_weights = AncillaryVariable(

+ ... cube.coord("time").bounds[:, 1] - cube.coord("time").bounds[:, 0],

+ ... long_name="Time Weights",

+ ... units="hours",

+ ... )

+ >>> cube.add_ancillary_variable(time_weights, 0)

+ >>> seasonal_sum = cube.aggregated_by("clim_season", iris.analysis.SUM, weights="Time Weights")

+ >>> print(seasonal_sum)

+ surface_temperature / (3600 s.K) (-- : 4; latitude: 18; longitude: 432)

+ Dimension coordinates:

+ latitude - x -

+ longitude - - x

+ Auxiliary coordinates:

+ clim_season x - -

+ forecast_reference_time x - -

+ season_year x - -

+ time x - -

+ Scalar coordinates:

+ forecast_period 0 hours

+ Cell methods:

+ mean month, year

+ sum clim_season

+ Attributes:

+ Conventions 'CF-1.5'

+ STASH m01s00i024
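The weighted `aggregated_by` doctest above derives time weights from the interval lengths `bounds[:, 1] - bounds[:, 0]` and then sums within each season. The sketch below shows that arithmetic in plain Python. It is not Iris code: the helper `weighted_sum_by_group` and the sample bounds and temperatures are hypothetical.

```python
from collections import defaultdict


def weighted_sum_by_group(values, groups, weights):
    """Sum ``value * weight`` within each group label (hypothetical helper)."""
    totals = defaultdict(float)
    for value, group, weight in zip(values, groups, weights):
        totals[group] += value * weight
    return dict(totals)


# Monthly time bounds in hours: Jan (31 days), Feb (28 days), Mar (31 days).
time_bounds = [(0, 744), (744, 1416), (1416, 2160)]
# Weights are the interval lengths, as in the AncillaryVariable example.
time_weights = [upper - lower for lower, upper in time_bounds]

temperatures_k = [270.0, 272.0, 278.0]
seasons = ["djf", "djf", "mam"]

# Each sum carries units of K multiplied by hours, which corresponds to
# the "(3600 s.K)" units reported by the weighted SUM in the doctest.
sums = weighted_sum_by_group(temperatures_k, seasons, time_weights)
print(sums)
```

Unequal month lengths give February less weight than January or March, which is the use case the guide mentions.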

diff --git a/docs/src/userguide/glossary.rst b/docs/src/userguide/glossary.rst

index 818ef0c7ad..5c24f03372 100644

--- a/docs/src/userguide/glossary.rst

+++ b/docs/src/userguide/glossary.rst

@@ -1,3 +1,5 @@

+.. include:: ../common_links.inc

+

.. _glossary:

Glossary

@@ -125,7 +127,7 @@ Glossary

of formats.

| **Related:** :term:`CartoPy` **|** :term:`NumPy`

- | **More information:** `Matplotlib `_

+ | **More information:** `matplotlib`_

|

Metadata

@@ -143,9 +145,11 @@ Glossary

When Iris loads this format, it also especially recognises and interprets data

encoded according to the :term:`CF Conventions`.

+ __ `NetCDF4`_

+

| **Related:** :term:`Fields File (FF) Format`

**|** :term:`GRIB Format` **|** :term:`Post Processing (PP) Format`

- | **More information:** `NetCDF-4 Python Git `_

+ | **More information:** `NetCDF-4 Python Git`__

|

NumPy

diff --git a/docs/src/userguide/plotting_examples/1d_with_legend.py b/docs/src/userguide/plotting_examples/1d_with_legend.py

index 9b9fd8a49d..626335af45 100644

--- a/docs/src/userguide/plotting_examples/1d_with_legend.py

+++ b/docs/src/userguide/plotting_examples/1d_with_legend.py

@@ -13,7 +13,6 @@

temperature = temperature[5:9, :]

for cube in temperature.slices("longitude"):

-

# Create a string label to identify this cube (i.e. latitude: value)

cube_label = "latitude: %s" % cube.coord("latitude").points[0]

diff --git a/docs/src/whatsnew/1.0.rst b/docs/src/whatsnew/1.0.rst

index b226dc609b..c256c33566 100644

--- a/docs/src/whatsnew/1.0.rst

+++ b/docs/src/whatsnew/1.0.rst

@@ -147,8 +147,7 @@ the surface pressure. In return, it provides a virtual "pressure"

coordinate whose values are derived from the given components.

This facility is utilised by the GRIB2 loader to automatically provide

-the derived "pressure" coordinate for certain data [#f1]_ from the

-`ECMWF `_.

+the derived "pressure" coordinate for certain data [#f1]_ from the ECMWF.

.. [#f1] Where the level type is either 105 or 119, and where the

surface pressure has an ECMWF paramId of

diff --git a/docs/src/whatsnew/2.1.rst b/docs/src/whatsnew/2.1.rst

index 18c562d3da..33f3a013b1 100644

--- a/docs/src/whatsnew/2.1.rst

+++ b/docs/src/whatsnew/2.1.rst

@@ -1,3 +1,5 @@

+.. include:: ../common_links.inc

+

v2.1 (06 Jun 2018)

******************

@@ -67,7 +69,7 @@ Incompatible Changes

as an alternative.

* This release of Iris contains a number of updated metadata translations.

- See this

+ See this

`changelist `_

for further information.

@@ -84,7 +86,7 @@ Internal

calendar.

* Iris updated its time-handling functionality from the

- `netcdf4-python `_

+ `netcdf4-python`__

``netcdftime`` implementation to the standalone module

`cftime `_.

cftime is entirely compatible with netcdftime, but some issues may

@@ -92,6 +94,8 @@ Internal

In this situation, simply replacing ``netcdftime.datetime`` with

``cftime.datetime`` should be sufficient.

+__ `netCDF4`_

+

* Iris now requires version 2 of Matplotlib, and ``>=1.14`` of NumPy.

Full requirements can be seen in the `requirements `_

directory of the Iris' the source.

diff --git a/docs/src/whatsnew/3.4.rst b/docs/src/whatsnew/3.4.rst

index 1ad676c049..02fc574e51 100644

--- a/docs/src/whatsnew/3.4.rst

+++ b/docs/src/whatsnew/3.4.rst

@@ -26,15 +26,29 @@ This document explains the changes made to Iris for this release

* We have **begun refactoring Iris' regridding**, which has already improved

performance and functionality, with more potential in future!

* We have made several other significant `🚀 Performance Enhancements`_.

- * Please note that **Iris cannot currently work with the latest NetCDF4

- releases**. The pin is set to ``` if you have

any issues or feature requests for improving Iris. Enjoy!

+v3.4.1 (21 Feb 2023)

+====================

+

+.. dropdown:: :opticon:`alert` v3.4.1 Patches

+ :container: + shadow

+ :title: text-primary text-center font-weight-bold

+ :body: bg-light

+ :animate: fade-in

+

+ The patches in this release of Iris include:

+

+ #. `@trexfeathers`_ and `@pp-mo`_ made Iris' use of the `netCDF4`_ library

+ thread-safe. (:pull:`5095`)

+

+ #. `@trexfeathers`_ and `@pp-mo`_ removed the netCDF4 pin mentioned in

+ `🔗 Dependencies`_ point 3. (:pull:`5095`)

+

+

📢 Announcements

================

diff --git a/docs/src/whatsnew/latest.rst b/docs/src/whatsnew/latest.rst

index 22ec7a00b8..92153f902c 100644

--- a/docs/src/whatsnew/latest.rst

+++ b/docs/src/whatsnew/latest.rst

@@ -10,7 +10,7 @@ This document explains the changes made to Iris for this release

The highlights for this major/minor release of Iris include:

- * N/A

+ * We're so proud to fully support `@ed-hawkins`_ and `#ShowYourStripes`_ ❤️

And finally, get in touch with us on :issue:`GitHub` if you have

any issues or feature requests for improving Iris. Enjoy!

@@ -20,18 +20,35 @@ This document explains the changes made to Iris for this release

================

#. Congratulations to `@ESadek-MO`_ who has become a core developer for Iris! 🎉

+#. Welcome and congratulations to `@HGWright`_ for making his first contribution to Iris! 🎉

✨ Features

===========

-#. N/A

+#. `@bsherratt`_ added support for plugins - see the corresponding

+ :ref:`documentation page` for further information.

+ (:pull:`5144`)

+

+#. `@rcomer`_ enabled lazy evaluation of :obj:`~iris.analysis.RMS` calculations

+ with weights. (:pull:`5017`)

+

+#. `@schlunma`_ allowed the usage of cubes, coordinates, cell measures, or

+ ancillary variables as weights for cube aggregations

+ (:meth:`iris.cube.Cube.collapsed`, :meth:`iris.cube.Cube.aggregated_by`, and

+ :meth:`iris.cube.Cube.rolling_window`). This automatically adapts cube units

+ if necessary. (:pull:`5084`)

🐛 Bugs Fixed

=============

-#. N/A

+#. `@trexfeathers`_ and `@pp-mo`_ made Iris' use of the `netCDF4`_ library

+ thread-safe. (:pull:`5095`)

+

+#. `@ESadek-MO`_ removed the check and error raised when saving

+ cubes with a masked :class:`iris.coords.CellMeasure`.

+ (:issue:`5147`, :pull:`5181`)

💣 Incompatible Changes

@@ -66,10 +83,29 @@ This document explains the changes made to Iris for this release

the light version (not dark) while we make the docs dark mode friendly

(:pull:`5129`)

+#. `@jonseddon`_ updated the citation to a more recent version of Iris. (:pull:`5116`)

+

#. `@rcomer`_ linked the :obj:`~iris.analysis.PERCENTILE` aggregator from the

:obj:`~iris.analysis.MEDIAN` docstring, noting that the former handles lazy

data. (:pull:`5128`)

+#. `@trexfeathers`_ updated the WSL link to Microsoft's latest documentation,

+ and removed an ECMWF link in the ``v1.0`` What's New that was failing the

+ linkcheck CI. (:pull:`5109`)

+

+#. `@trexfeathers`_ added a new top-level :doc:`/community/index` section,

+ as a one-stop place to find out about getting involved, and how we relate

+ to other projects. (:pull:`5025`)

+

+#. The **Iris community**, with help from the **Xarray community**, produced

+ the :doc:`/community/iris_xarray` page, highlighting the similarities and

+ differences between the two packages. (:pull:`5025`)

+

+#. `@bjlittle`_ added a new section to the `README.md`_ to show our support

+ for the outstanding work of `@ed-hawkins`_ et al. for `#ShowYourStripes`_.

+ (:pull:`5141`)

+

+#. `@HGWright`_ fixed some typos in Gitwash. (:pull:`5145`)

💼 Internal

===========

@@ -80,14 +116,22 @@ This document explains the changes made to Iris for this release

#. `@rcomer`_ removed some old infrastructure that printed test timings.

(:pull:`5101`)

+#. `@lbdreyer`_ and `@trexfeathers`_ (reviewer) added coverage testing. This

+ can be enabled by using the ``--coverage`` flag when running the tests with

+ nox, i.e. ``nox --session tests -- --coverage``. (:pull:`4765`)

+

+#. `@lbdreyer`_ and `@trexfeathers`_ (reviewer) removed the ``--coding-tests``

+ option from Iris' test runner. (:pull:`4765`)

.. comment

Whatsnew author names (@github name) in alphabetical order. Note that,

core dev names are automatically included by the common_links.inc:

.. _@fnattino: https://github.com/fnattino

-

+.. _@ed-hawkins: https://github.com/ed-hawkins

.. comment

Whatsnew resources in alphabetical order:

+.. _#ShowYourStripes: https://showyourstripes.info/s/globe/

+.. _README.md: https://github.com/SciTools/iris#-----

diff --git a/lib/iris/__init__.py b/lib/iris/__init__.py

index 896b850541..38465472ee 100644

--- a/lib/iris/__init__.py

+++ b/lib/iris/__init__.py

@@ -91,6 +91,7 @@ def callback(cube, field, filename):

import contextlib

import glob

+import importlib

import itertools

import os.path

import pathlib

@@ -129,6 +130,7 @@ def callback(cube, field, filename):

"sample_data_path",

"save",

"site_configuration",

+ "use_plugin",

]

@@ -175,7 +177,6 @@ def __init__(self, datum_support=False, pandas_ndim=False):

self.__dict__["pandas_ndim"] = pandas_ndim

def __repr__(self):

-

# msg = ('Future(example_future_flag={})')

# return msg.format(self.example_future_flag)

msg = "Future(datum_support={}, pandas_ndim={})"

@@ -471,3 +472,22 @@ def sample_data_path(*path_to_join):

"appropriate for general file access.".format(target)

)

return target

+

+

+def use_plugin(plugin_name):

+ """

+ Convenience function to import a plugin.

+

+ For example::

+

+ use_plugin("my_plugin")

+

+ is equivalent to::

+

+ import iris.plugins.my_plugin

+

+ This is useful for plugins that are not used directly, but instead do all

+ their setup on import. In this case, style checkers would not know the

+ significance of the import statement and warn that it is an unused import.

+ """

+ importlib.import_module(f"iris.plugins.{plugin_name}")
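`use_plugin` replaces an import statement, which linters would flag as unused, with a call to `importlib.import_module` on a dotted name. The sketch below generalises that idea. The helper `use_submodule` is hypothetical, and the standard-library `json.decoder` stands in for an `iris.plugins.*` module. Unlike `iris.use_plugin`, which returns nothing, this sketch returns the module so it can be inspected.

```python
import importlib


def use_submodule(package, name):
    """Import ``package.name`` for its side effects and return the module.

    Hypothetical generalisation of ``iris.use_plugin``, which always imports
    from the ``iris.plugins`` namespace and does not return the module.
    """
    return importlib.import_module(f"{package}.{name}")


# Equivalent to ``import json.decoder``, but without a bare import statement.
module = use_submodule("json", "decoder")
print(module.__name__)
```

Because `importlib.import_module` caches modules in `sys.modules`, repeated calls return the same module object and run the plugin's setup code only once.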

diff --git a/lib/iris/_lazy_data.py b/lib/iris/_lazy_data.py

index ac7ae34511..e0566fc8f2 100644

--- a/lib/iris/_lazy_data.py

+++ b/lib/iris/_lazy_data.py

@@ -39,7 +39,7 @@ def is_lazy_data(data):

"""

Return whether the argument is an Iris 'lazy' data array.

- At present, this means simply a Dask array.

+ At present, this means simply a :class:`dask.array.Array`.

We determine this by checking for a "compute" property.

"""

@@ -67,7 +67,8 @@ def _optimum_chunksize_internals(

* shape (tuple of int):

The full array shape of the target data.

* limit (int):

- The 'ideal' target chunk size, in bytes. Default from dask.config.

+ The 'ideal' target chunk size, in bytes. Default from

+ :mod:`dask.config`.

* dtype (np.dtype):

Numpy dtype of target data.

@@ -77,7 +78,7 @@ def _optimum_chunksize_internals(

.. note::

The purpose of this is very similar to

- `dask.array.core.normalize_chunks`, when called as

+ :func:`dask.array.core.normalize_chunks`, when called as

`(chunks='auto', shape, dtype=dtype, previous_chunks=chunks, ...)`.

Except, the operation here is optimised specifically for a 'c-like'

dimension order, i.e. outer dimensions first, as for netcdf variables.

@@ -174,13 +175,13 @@ def _optimum_chunksize(

def as_lazy_data(data, chunks=None, asarray=False):

"""

- Convert the input array `data` to a dask array.

+ Convert the input array `data` to a :class:`dask.array.Array`.

Args:

* data (array-like):

An indexable object with 'shape', 'dtype' and 'ndim' properties.

- This will be converted to a dask array.

+ This will be converted to a :class:`dask.array.Array`.

Kwargs:

@@ -192,7 +193,7 @@ def as_lazy_data(data, chunks=None, asarray=False):

Set to False (default) to pass passed chunks through unchanged.

Returns:

- The input array converted to a dask array.

+ The input array converted to a :class:`dask.array.Array`.

.. note::

The result chunk size is a multiple of 'chunks', if given, up to the

@@ -284,15 +285,16 @@ def multidim_lazy_stack(stack):

"""

Recursively build a multidimensional stacked dask array.

- This is needed because dask.array.stack only accepts a 1-dimensional list.

+ This is needed because :func:`dask.array.stack` only accepts a

+ 1-dimensional list.

Args:

* stack:

- An ndarray of dask arrays.

+ An ndarray of :class:`dask.array.Array`.

Returns:

- The input array converted to a lazy dask array.

+ The input array converted to a lazy :class:`dask.array.Array`.

"""

if stack.ndim == 0:
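The `is_lazy_data` docstring above states that lazy data is detected by checking for a "compute" property, rather than by an explicit type check. The duck-typing sketch below illustrates that approach. `FakeLazyArray` is a hypothetical stand-in for a `dask.array.Array`, and the function body is an illustration of the described check, not a copy of the Iris source.

```python
class FakeLazyArray:
    """Hypothetical stand-in for a dask array: only ``compute`` matters here."""

    def __init__(self, values):
        self._values = list(values)

    def compute(self):
        # Realise the "lazy" values as a concrete list.
        return list(self._values)


def is_lazy_data(data):
    # Duck typing: any object exposing a ``compute`` attribute counts as lazy.
    return hasattr(data, "compute")


print(is_lazy_data(FakeLazyArray([1, 2, 3])))
print(is_lazy_data([1, 2, 3]))
```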

diff --git a/lib/iris/_merge.py b/lib/iris/_merge.py

index bc12080523..5ca5f31a8e 100644

--- a/lib/iris/_merge.py

+++ b/lib/iris/_merge.py

@@ -1418,6 +1418,7 @@ def _define_space(self, space, positions, indexes, function_matrix):

participates in a functional relationship.

"""

+

# Heuristic reordering of coordinate defintion indexes into

# preferred dimension order.

def axis_and_name(name):

diff --git a/lib/iris/analysis/__init__.py b/lib/iris/analysis/__init__.py

index 55d5d5d93e..173487cfb0 100644

--- a/lib/iris/analysis/__init__.py

+++ b/lib/iris/analysis/__init__.py

@@ -39,8 +39,10 @@

from collections.abc import Iterable

import functools

from functools import wraps

+from inspect import getfullargspec

import warnings

+from cf_units import Unit

import dask.array as da

import numpy as np

import numpy.ma as ma

@@ -55,7 +57,9 @@

)

from iris.analysis._regrid import CurvilinearRegridder, RectilinearRegridder

import iris.coords

+from iris.coords import _DimensionalMetadata

from iris.exceptions import LazyAggregatorError

+import iris.util

__all__ = (

"Aggregator",

@@ -296,7 +300,6 @@ def _dimensional_metadata_comparison(*cubes, object_get=None):

# for coordinate groups

for cube, coords in zip(cubes, all_coords):

for coord in coords:

-

# if this coordinate has already been processed, then continue on

# to the next one

if id(coord) in processed_coords:

@@ -468,11 +471,13 @@ def __init__(

Kwargs:

* units_func (callable):

- | *Call signature*: (units)

+ | *Call signature*: (units, \**kwargs)

If provided, called to convert a cube's units.

Returns an :class:`cf_units.Unit`, or a

value that can be made into one.

+ To ensure backwards-compatibility, also accepts a callable with

+ call signature (units).

* lazy_func (callable or None):

An alternative to :data:`call_func` implementing a lazy

@@ -480,7 +485,8 @@ def __init__(

main operation, but should raise an error in unhandled cases.

Additional kwargs::

- Passed through to :data:`call_func` and :data:`lazy_func`.

+ Passed through to :data:`call_func`, :data:`lazy_func`, and

+ :data:`units_func`.

Aggregators are used by cube aggregation methods such as

:meth:`~iris.cube.Cube.collapsed` and

@@ -626,7 +632,11 @@ def update_metadata(self, cube, coords, **kwargs):

"""

# Update the units if required.

if self.units_func is not None:

- cube.units = self.units_func(cube.units)

+ argspec = getfullargspec(self.units_func)

+ if argspec.varkw is None: # old style

+ cube.units = self.units_func(cube.units)

+ else: # new style (preferred)

+ cube.units = self.units_func(cube.units, **kwargs)

def post_process(self, collapsed_cube, data_result, coords, **kwargs):

"""

@@ -694,13 +704,13 @@ class PercentileAggregator(_Aggregator):

"""

def __init__(self, units_func=None, **kwargs):

- """

+ r"""

Create a percentile aggregator.

Kwargs:

* units_func (callable):

- | *Call signature*: (units)

+ | *Call signature*: (units, \**kwargs)

If provided, called to convert a cube's units.

Returns an :class:`cf_units.Unit`, or a

@@ -935,13 +945,13 @@ class WeightedPercentileAggregator(PercentileAggregator):

"""

def __init__(self, units_func=None, lazy_func=None, **kwargs):

- """

+ r"""

Create a weighted percentile aggregator.

Kwargs:

* units_func (callable):

- | *Call signature*: (units)

+ | *Call signature*: (units, \**kwargs)

If provided, called to convert a cube's units.

Returns an :class:`cf_units.Unit`, or a

@@ -1173,8 +1183,112 @@ def post_process(self, collapsed_cube, data_result, coords, **kwargs):

return result

+class _Weights(np.ndarray):

+ """Class for handling weights for weighted aggregation.

+

+ This subclasses :class:`numpy.ndarray`; thus, all methods and properties of

+ :class:`numpy.ndarray` (e.g., `shape`, `ndim`, `view()`, etc.) are

+ available.

+

+ Details on subclassing :class:`numpy.ndarray` are given here:

+ https://numpy.org/doc/stable/user/basics.subclassing.html

+

+ """

+

+ def __new__(cls, weights, cube, units=None):

+ """Create class instance.

+

+ Args:

+

+ * weights (Cube, string, _DimensionalMetadata, array-like):

+ If given as a :class:`iris.cube.Cube`, use its data and units. If

+ given as a :obj:`str` or :class:`iris.coords._DimensionalMetadata`,

+ assume this is (the name of) a

+ :class:`iris.coords._DimensionalMetadata` object of the cube (i.e.,

+ one of :meth:`iris.cube.Cube.coords`,

+ :meth:`iris.cube.Cube.cell_measures`, or

+ :meth:`iris.cube.Cube.ancillary_variables`). If given as an

+ array-like object, use this directly and assume units of `1`. If

+ `units` is given, ignore all units derived above and use the ones

+ given by `units`.

+ * cube (Cube):

+ Input cube for aggregation. If weights is given as :obj:`str` or

+ :class:`iris.coords._DimensionalMetadata`, try to extract the

+ :class:`iris.coords._DimensionalMetadata` object and corresponding

+ dimensional mappings from this cube. Otherwise, this argument is

+ ignored.

+ * units (string, Unit):

+ If ``None``, use units derived from `weights`. Otherwise, overwrite

+ the units derived from `weights` and use `units`.

+

+ """

+ # `weights` is a cube

+ # Note: to avoid circular imports of Cube we use duck typing using the

+ # "hasattr" syntax here

+ # --> Extract data and units from cube

+ if hasattr(weights, "add_aux_coord"):

+ obj = np.asarray(weights.data).view(cls)

+ obj.units = weights.units

+

+ # `weights` is a string or _DimensionalMetadata object

+ # --> Extract _DimensionalMetadata object from cube, broadcast it to

+ # correct shape using the corresponding dimensional mapping, and use

+ # its data and units

+ elif isinstance(weights, (str, _DimensionalMetadata)):

+ dim_metadata = cube._dimensional_metadata(weights)

+ arr = dim_metadata._values

+ if dim_metadata.shape != cube.shape:

+ arr = iris.util.broadcast_to_shape(

+ arr,

+ cube.shape,

+ dim_metadata.cube_dims(cube),

+ )

+ obj = np.asarray(arr).view(cls)

+ obj.units = dim_metadata.units

+

+ # Remaining types (e.g., np.ndarray): try to convert to ndarray.

+ else:

+ obj = np.asarray(weights).view(cls)

+ obj.units = Unit("1")

+

+ # Overwrite units from units argument if necessary

+ if units is not None:

+ obj.units = units

+

+ return obj

+

+ def __array_finalize__(self, obj):

+ """See https://numpy.org/doc/stable/user/basics.subclassing.html.

+

+ Note

+ ----

+ `obj` cannot be `None` here since ``_Weights.__new__`` does not call

+ ``super().__new__`` explicitly.

+

+ """

+ self.units = getattr(obj, "units", Unit("1"))

+

+ @classmethod

+ def update_kwargs(cls, kwargs, cube):

+ """Update ``weights`` keyword argument in-place.

+

+ Args:

+

+ * kwargs (dict):

+ Keyword arguments that will be updated in-place if a `weights`

+ keyword is present which is not ``None``.

+ * cube (Cube):

+ Input cube for aggregation. If weights is given as :obj:`str` or

+ :class:`iris.coords._DimensionalMetadata`, try to extract the

+ :class:`iris.coords._DimensionalMetadata` object and corresponding

+ dimensional mappings from this cube. Otherwise, this argument is

+ ignored.

+

+ """

+ if kwargs.get("weights") is not None:

+ kwargs["weights"] = cls(kwargs["weights"], cube)

+

+

def create_weighted_aggregator_fn(aggregator_fn, axis, **kwargs):

- """Return an aggregator function that can explicitely handle weights.

+ """Return an aggregator function that can explicitly handle weights.

Args:
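`_Weights.__new__` resolves `weights` in a fixed order. Named metadata on the cube supplies both values and units. A plain array-like is used directly with units of `1`. An explicit `units` argument overrides either result. The sketch below mirrors only that precedence. It is hypothetical: `resolve_weights` takes a dict of name to (values, units) in place of the cube's coords, cell measures, and ancillary variables, and it omits the cube-as-weights case and the broadcasting to the cube's shape.

```python
def resolve_weights(weights, named_metadata, units=None):
    """Return (values, units) following the precedence of ``_Weights.__new__``.

    Hypothetical stand-in: ``named_metadata`` maps names to (values, units),
    playing the role of the cube's dimensional metadata.
    """
    if isinstance(weights, str):
        # Name of dimensional metadata: use its values and units.
        values, resolved_units = named_metadata[weights]
    else:
        # Plain array-like: use it directly and assume dimensionless units.
        values, resolved_units = list(weights), "1"
    if units is not None:
        # An explicit ``units`` argument overrides the derived units.
        resolved_units = units
    return values, resolved_units


metadata = {"cell_area": ([2.0, 3.0], "m2")}
print(resolve_weights("cell_area", metadata))
print(resolve_weights([1.0, 1.0], metadata))
print(resolve_weights("cell_area", metadata, units="km2"))
```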

@@ -1399,7 +1513,7 @@ def _weighted_quantile_1D(data, weights, quantiles, **kwargs):

array or float. Calculated quantile values (set to np.nan wherever sum

of weights is zero or masked)

"""

- # Return np.nan if no useable points found

+ # Return np.nan if no usable points found

if np.isclose(weights.sum(), 0.0) or ma.is_masked(weights.sum()):

return np.resize(np.array(np.nan), len(quantiles))

# Sort the data

@@ -1536,7 +1650,7 @@ def _proportion(array, function, axis, **kwargs):

# Otherwise, it is possible for numpy to return a masked array that has

# a dtype for its data that is different to the dtype of the fill-value,

# which can cause issues outside this function.

- # Reference - tests/unit/analyis/test_PROPORTION.py Test_masked.test_ma

+ # Reference - tests/unit/analysis/test_PROPORTION.py Test_masked.test_ma

numerator = _count(array, axis=axis, function=function, **kwargs)

result = ma.asarray(numerator / total_non_masked)

@@ -1584,27 +1698,19 @@ def _lazy_max_run(array, axis=-1, **kwargs):

def _rms(array, axis, **kwargs):

- # XXX due to the current limitations in `da.average` (see below), maintain

- # an explicit non-lazy aggregation function for now.

- # Note: retaining this function also means that if weights are passed to

- # the lazy aggregator, the aggregation will fall back to using this

- # non-lazy aggregator.

- rval = np.sqrt(ma.average(np.square(array), axis=axis, **kwargs))

- if not ma.isMaskedArray(array):

- rval = np.asarray(rval)

+ rval = np.sqrt(ma.average(array**2, axis=axis, **kwargs))

+

return rval

-@_build_dask_mdtol_function

def _lazy_rms(array, axis, **kwargs):

- # XXX This should use `da.average` and not `da.mean`, as does the above.

- # However `da.average` current doesn't handle masked weights correctly

- # (see https://github.com/dask/dask/issues/3846).

- # To work around this we use da.mean, which doesn't support weights at

- # all. Thus trying to use this aggregator with weights will currently

- # raise an error in dask due to the unexpected keyword `weights`,

- # rather than silently returning the wrong answer.

- return da.sqrt(da.mean(array**2, axis=axis, **kwargs))

+ # Note that, since we specifically need the ma version of average to handle

+ # weights correctly with masked data, we cannot rely on NEP13/18 and need

+ # to implement a separate lazy RMS function.

+

+ rval = da.sqrt(da.ma.average(array**2, axis=axis, **kwargs))

+

+ return rval
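With weights, the RMS described in the RMS docstring becomes sqrt(sum(w_i * x_i**2) / sum(w_i)), and masked points must drop out of both sums. The NumPy-only sketch below shows why `ma.average` is used. It zeroes the weights of masked elements, whereas a plain average over the underlying data does not. The `weighted_rms` name is hypothetical. The lazy path in this diff relies on `da.ma.average` for the same behaviour.

```python
import numpy as np
import numpy.ma as ma


def weighted_rms(array, weights=None, axis=None):
    """Hypothetical helper: sqrt(sum(w * x**2) / sum(w)) over unmasked points."""
    # ma.average sets the weight of each masked element to zero, so masked
    # points are excluded from both the numerator and the denominator.
    return np.sqrt(ma.average(array**2, axis=axis, weights=weights))


data = ma.masked_array([3.0, 4.0, 100.0], mask=[False, False, True])
w = np.array([1.0, 1.0, 1.0])

print(weighted_rms(data, weights=w))  # uses only 3.0 and 4.0
# For contrast, ignoring the mask lets the masked 100.0 dominate:
print(np.sqrt(np.average(data.data**2, weights=w)))
```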

def _sum(array, **kwargs):

@@ -1639,6 +1745,18 @@ def _sum(array, **kwargs):

return rvalue

+def _sum_units_func(units, **kwargs):

+ """Multiply original units with weight units if possible."""

+ weights = kwargs.get("weights")

+ if weights is None: # no weights given or weights are None

+ result = units

+ elif hasattr(weights, "units"): # weights are _Weights

+ result = units * weights.units

+ else: # weights are regular np.ndarrays

+ result = units

+ return result

+

+
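`_sum_units_func` multiplies the cube units by the weight units only when the weights object has a ``units`` attribute. Plain arrays and ``None`` leave the units unchanged. The self-contained sketch below shows that dispatch. It uses hypothetical `Unit` and `Weights` stand-ins, not `cf_units.Unit` or iris's own classes, so it runs without iris.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Unit:
    """Hypothetical stand-in for a units object supporting multiplication."""

    symbol: str

    def __mul__(self, other):
        # Represent a product of units by joining their symbols.
        return Unit(f"{self.symbol} {other.symbol}")


@dataclass
class Weights:
    """Hypothetical stand-in for a weights wrapper that carries units."""

    array: list
    units: Unit


def sum_units_func(units, **kwargs):
    weights = kwargs.get("weights")
    if weights is not None and hasattr(weights, "units"):
        # Weighted sum: result units are data units times weight units.
        return units * weights.units
    # No weights, or raw arrays without units: keep the original units.
    return units


kg = Unit("kg")
print(sum_units_func(kg, weights=Weights([1.0, 2.0], Unit("m2"))))
```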

def _peak(array, **kwargs):

def column_segments(column):

nan_indices = np.where(np.isnan(column))[0]

@@ -1754,7 +1872,7 @@ def interp_order(length):

COUNT = Aggregator(

"count",

_count,

- units_func=lambda units: 1,

+ units_func=lambda units, **kwargs: 1,

lazy_func=_build_dask_mdtol_function(_count),

)

"""

@@ -1786,7 +1904,7 @@ def interp_order(length):

MAX_RUN = Aggregator(

None,

iris._lazy_data.non_lazy(_lazy_max_run),

- units_func=lambda units: 1,

+ units_func=lambda units, **kwargs: 1,

lazy_func=_build_dask_mdtol_function(_lazy_max_run),

)

"""

@@ -2030,7 +2148,11 @@ def interp_order(length):

"""

-PROPORTION = Aggregator("proportion", _proportion, units_func=lambda units: 1)

+PROPORTION = Aggregator(

+ "proportion",

+ _proportion,

+ units_func=lambda units, **kwargs: 1,

+)

"""

An :class:`~iris.analysis.Aggregator` instance that calculates the

proportion, as a fraction, of :class:`~iris.cube.Cube` data occurrences

@@ -2072,14 +2194,16 @@ def interp_order(length):

the root mean square over a :class:`~iris.cube.Cube`, as computed by

((x0**2 + x1**2 + ... + xN-1**2) / N) ** 0.5.

-Additional kwargs associated with the use of this aggregator:

+Parameters

+----------

-* weights (float ndarray):

+weights : array-like, optional

Weights matching the shape of the cube or the length of the window for

rolling window operations. The weights are applied to the squares when

taking the mean.

-**For example**:

+Example

+-------

To compute the zonal root mean square over the *longitude* axis of a cube::

@@ -2129,6 +2253,7 @@ def interp_order(length):

SUM = WeightedAggregator(

"sum",

_sum,

+ units_func=_sum_units_func,

lazy_func=_build_dask_mdtol_function(_sum),

)

"""

@@ -2166,7 +2291,7 @@ def interp_order(length):

VARIANCE = Aggregator(

"variance",

ma.var,

- units_func=lambda units: units * units,

+ units_func=lambda units, **kwargs: units * units,

lazy_func=_build_dask_mdtol_function(da.var),

ddof=1,

)
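The ``units_func`` lambdas above gain ``**kwargs``, which suggests the aggregators now pass their keyword arguments (such as ``weights``) through to ``units_func``. The sketch below shows the failure mode this avoids. It assumes a hypothetical caller, `update_units`, that forwards keywords in that way. The exact calling code in iris is not part of this diff.

```python
def update_units(units_func, units, **kwargs):
    # Hypothetical caller: forwards every aggregation keyword to units_func.
    return units_func(units, **kwargs)


old_style = lambda units: 1  # previous COUNT-style signature
new_style = lambda units, **kwargs: 1  # signature used in this diff

print(update_units(new_style, "K", weights=[1, 2]))  # extra keywords accepted
try:
    update_units(old_style, "K", weights=[1, 2])
except TypeError as err:
    # The old signature cannot accept the forwarded ``weights`` keyword.
    print("old style fails:", err)
```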

@@ -2808,7 +2933,7 @@ def __init__(self, mdtol=1):

Both sourge and target cubes must have an XY grid defined by

separate X and Y dimensions with dimension coordinates.

All of the XY dimension coordinates must also be bounded, and have

- the same cooordinate system.

+ the same coordinate system.

"""

if not (0 <= mdtol <= 1):

diff --git a/lib/iris/analysis/_area_weighted.py b/lib/iris/analysis/_area_weighted.py

index edbfd41ef9..3b728e9a43 100644

--- a/lib/iris/analysis/_area_weighted.py

+++ b/lib/iris/analysis/_area_weighted.py

@@ -853,7 +853,7 @@ def _calculate_regrid_area_weighted_weights(

cached_x_bounds = []

cached_x_indices = []

max_x_indices = 0

- for (x_0, x_1) in grid_x_bounds:

+ for x_0, x_1 in grid_x_bounds:

if grid_x_decreasing:

x_0, x_1 = x_1, x_0

x_bounds, x_indices = _cropped_bounds(src_x_bounds, x_0, x_1)

diff --git a/lib/iris/analysis/_interpolation.py b/lib/iris/analysis/_interpolation.py

index 2a7dfa6e62..f5e89a9e51 100644

--- a/lib/iris/analysis/_interpolation.py

+++ b/lib/iris/analysis/_interpolation.py

@@ -268,7 +268,7 @@ def _account_for_circular(self, points, data):

"""

from iris.analysis.cartography import wrap_lons

- for (circular, modulus, index, dim, offset) in self._circulars:

+ for circular, modulus, index, dim, offset in self._circulars:

if modulus:

# Map all the requested values into the range of the source

# data (centred over the centre of the source data to allow

diff --git a/lib/iris/analysis/_scipy_interpolate.py b/lib/iris/analysis/_scipy_interpolate.py

index fc64249729..bfa070c7c7 100644

--- a/lib/iris/analysis/_scipy_interpolate.py

+++ b/lib/iris/analysis/_scipy_interpolate.py

@@ -225,7 +225,6 @@ def compute_interp_weights(self, xi, method=None):

prepared = (xi_shape, method) + self._find_indices(xi.T)