
Automated Jenkins Testing

The WRF repository is linked to a Jenkins server that runs on AWS. Whenever a pull request (PR) is opened, or a commit is added to an existing PR, a suite of tests is run. The tests take about 30 minutes to complete. This page describes the tests that are conducted and helps the user interpret the test results.

The Jenkins server was developed and is maintained by ScalaComputing.

The automated tests are referred to as regression tests. A successful completion of the testing indicates that the source code modification has no side effects that break other WRF options (at least the other options that were tested). The testing does not validate the results from the proposed modification. The developer is entirely responsible for demonstrating that the new code performs as expected.

The following table lists the broad test areas (currently 19) and the parallel build types for each area; across the 19 areas there are 48 builds in total. Note that Tests 1 and 11-18 are all em_real cases. The additional tests are simply bins for wider physics coverage. Splitting the physics across several separate tests allows the testing to complete much more quickly.

| Test Number | Mnemonic | Description | Type |
|---|---|---|---|
| 1 | em_real | ARW real data | S O M |
| 2 | nmm_hwrf | NMM HWRF data | - - M |
| 3 | em_chem | Chemistry real | S - M |
| 4 | em_quarter_ss | Ideal Supercell | S O M |
| 5 | em_b_wave | Ideal Baroclinic Wave | S O M |
| 6 | em_real8 | ARW real 8-byte float | S O M |
| 7 | em_quarter_ss8 | Supercell 8-byte float | S O M |
| 8 | em_move | Moving Nest | - - M |
| 9 | em_fire | Ideal Ground Fire | S O M |
| 10 | em_hill2d_x | Ideal 2d Hill | S - - |
| 11 | em_realA | ARW real data | S O M |
| 12 | em_realB | ARW real data | S O M |
| 13 | em_realC | ARW real data | S O M |
| 14 | em_realD | ARW real data | S O M |
| 15 | em_realE | ARW real data | S O M |
| 16 | em_realF | ARW real data | S O M |
| 17 | em_realG | ARW real data | S O M |
| 18 | em_realH | ARW real data | S - M |
| 19 | em_chem_kpp | Chemistry KPP real | S - M |

Type refers to the requested parallel option:

- S = Serial

- O = OpenMP

- M = MPI

Therefore "S O M" refers to a test that is built for Serial, OpenMP, and MPI. The "S - -" entry refers to a test that is only built for Serial processing.
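The total of 48 builds can be cross-checked by counting the S, O, and M letters in the Type column. The following is a minimal sketch and is not part of the WRF test scripts: `count_builds` and `build_table` are hypothetical helper names, and the data are transcribed from the table above under the assumption that test 18 (em_realH) is built for Serial and MPI only.

```shell
#!/bin/sh
# Hypothetical helper: count parallel builds from lines of the form
#   <mnemonic> <S|-> <O|-> <M|->
# read on stdin. Each S, O, or M in columns 2-4 is one build.
count_builds() {
    awk '{ for (i = 2; i <= 4; i++) if ($i ~ /^[SOM]$/) n++ }
         END { print n + 0 }'
}

# Type column transcribed from the table of test areas.
# Assumption: em_realH is "S - M" (Serial and MPI only).
build_table() {
    cat <<'EOF'
em_real S O M
nmm_hwrf - - M
em_chem S - M
em_quarter_ss S O M
em_b_wave S O M
em_real8 S O M
em_quarter_ss8 S O M
em_move - - M
em_fire S O M
em_hill2d_x S - -
em_realA S O M
em_realB S O M
em_realC S O M
em_realD S O M
em_realE S O M
em_realF S O M
em_realG S O M
em_realH S - M
em_chem_kpp S - M
EOF
}

build_table | count_builds    # prints 48
```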

The regression tests are short. For each of the 48 builds (for example em_real using MPI), one or more simulations are performed. The simulations are approximately 10 time steps in length.

The WRF model requires both the netCDF directory structure (lib, include, and bin), and an MPI implementation (OpenMPI is selected). The compiler is GNU, using both gfortran and gcc. To keep a uniform environment for the testing, the WRF source code, test data, and built libraries are placed inside a Docker container (one container for each of the 48 builds). The ARW builds require GNU 9, while the NMM build uses GNU 8. This is handled transparently with no user interaction.

The simulation choices for each of the 48 builds are controlled by a script that assigns namelists.

| Test Name | Namelists Tested |
|---|---|
| em_real | 3dtke conus rap tropical |
| nmm_hwrf | 1NE 2NE 3NE |
| em_chem | 1 2 5 |
| em_quarter_ss | 02NE 03 03NE 04 |
| em_b_wave | 1NE 2 2NE 3 |
| em_real8 | 14 17AD |
| em_quarter_ss8 | 06 08 09 |
| em_move | 01 |
| em_fire | 01 |
| em_hill2d_x | 01 |
| em_realA | 03 03DF 03FD 06 07NE |
| em_realB | 10 11 14 16 16DF |
| em_realC | 17 17AD 18 20 20NE |
| em_realD | 38 48 49 50 51 |
| em_realE | 52 52DF 52FD 60 60NE |
| em_realF | 65DF 66FD 71 78 79 |
| em_realG | kiaps1NE kiaps2 |
| em_realH | cmt solaraNE |
| em_chem_kpp | 120 |

The complete set of namelist options is available on GitHub: directory of WRF namelist settings.

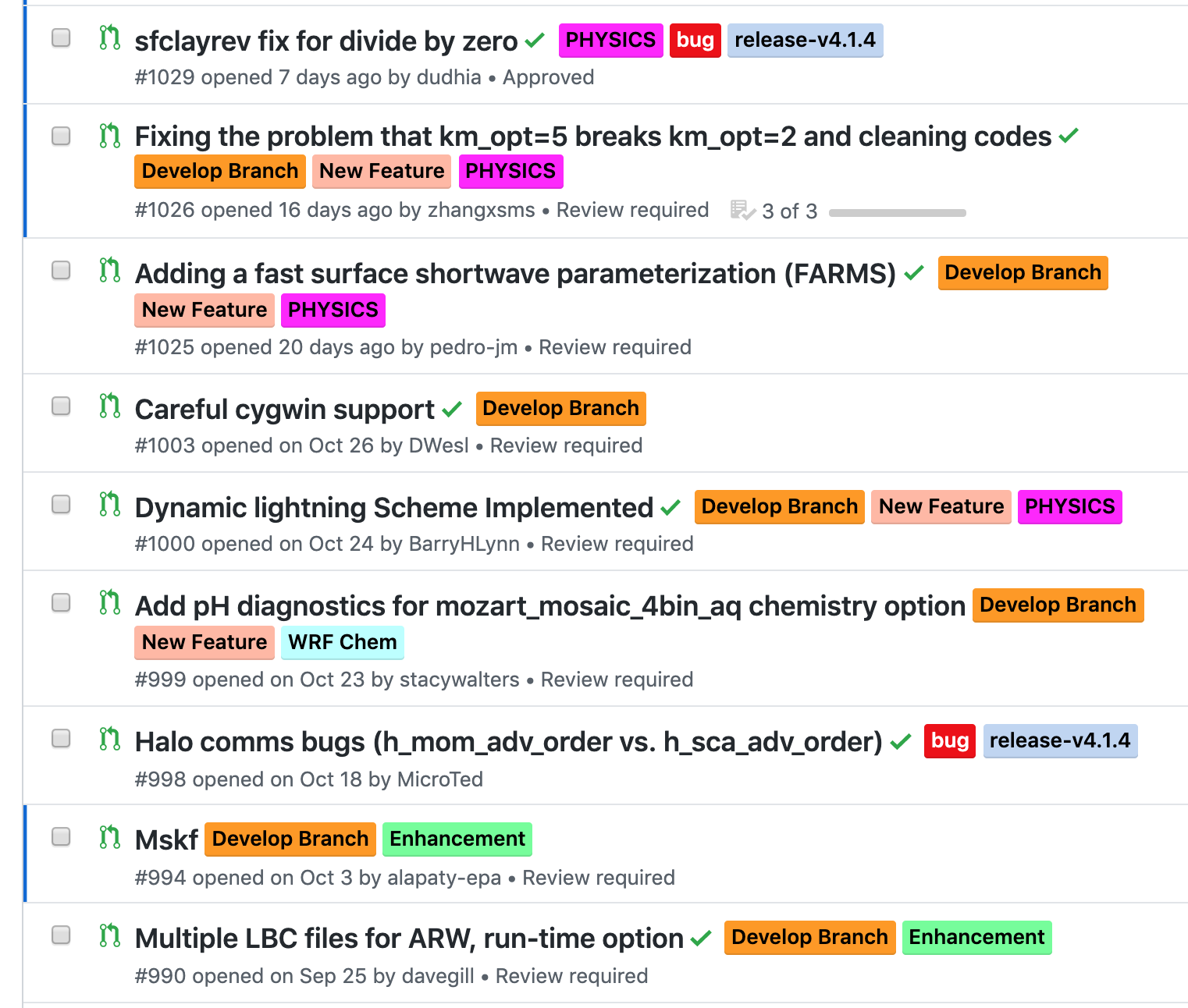

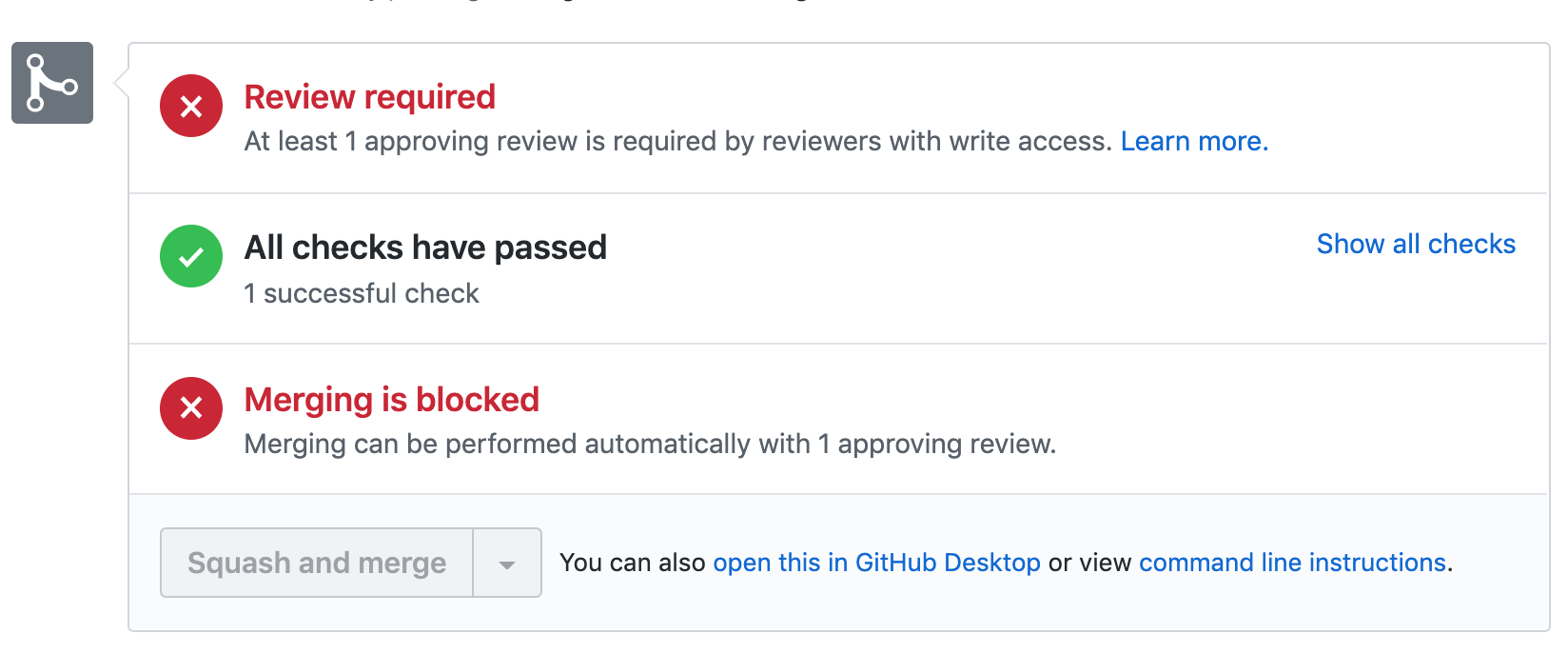

Once a PR is proposed and for each subsequent commit to that PR, automated testing is performed via the Jenkins server (for security purposes, this server is not generally accessible).

When the automated tests are all complete, and if the tests were successful, then multiple green checkmarks appear. There is a large green checkmark at the end of the conversations for the PR:

A number of indicators are reviewed to determine SUCCESS vs FAIL, depending on the stage of the automated testing.

- Build SUCCESS
  - Both wrf.exe and the associated pre-processor exist and have executable privilege
- Individual Simulation Run SUCCESS
  - Pre-processor print output has SUCCESS message
  - Pre-processor netCDF output exists (for each domain that was run)
  - WRF model print output has SUCCESS message
  - WRF model netCDF output exists (for each domain that was run)
  - Two time periods exist in each WRF model netCDF output file (for each domain that was run)
  - No occurrence of "NaN" in any WRF model netCDF output file (for each domain that was run)
- Parallel Comparison SUCCESS (assuming same namelist configuration)
  - If Serial and OpenMP types exist, the results must be bit-wise identical
  - If Serial and MPI types exist, the results must be bit-wise identical
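The first few checks in the list above can be sketched in shell. This is only an illustration of the kind of test described; the scripts that Jenkins actually runs are not shown on this page. The function names `build_ok` and `run_ok` are hypothetical, and all file paths are supplied by the caller. The checks for two time periods and for "NaN" need netCDF tools and are left out of this sketch.

```shell
#!/bin/sh
# Hypothetical sketch of the Build and Run SUCCESS checks.

# build_ok WRF_EXE PREPROC_EXE
# Succeeds only if both files exist as regular files and are executable.
build_ok() {
    [ -f "$1" ] && [ -x "$1" ] && [ -f "$2" ] && [ -x "$2" ]
}

# run_ok PRINT_OUTPUT NETCDF_FILE...
# Succeeds only if the print output contains the word SUCCESS and
# every listed netCDF file (one per domain) exists and is non-empty.
run_ok() {
    log=$1
    shift
    grep -aq "SUCCESS" "$log" || return 1
    for f in "$@"; do
        [ -s "$f" ] || return 1
    done
    return 0
}
```

A call such as `build_ok main/wrf.exe main/real.exe && echo "build SUCCESS"` would report on a single build; the paths in that call are examples only.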

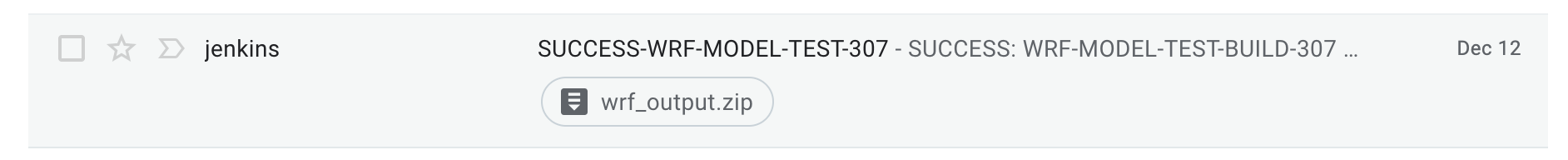

Approximately half an hour after the PR is opened or the commit is pushed, an email arrives. The email is sent from user jenkins and, because of its contents, many email servers identify the message as spam.

Here is an example of a successful commit as reported by jenkins. The values in the Expected column (the number of items anticipated to be completed successfully) match those in the Received column (the actual results from the tests). If there are any failures, the broken tests are listed.

Test Type | Expected | Received | Failed

= = = = = = = = = = = = = = = = = = = = = = = = = = = =

Number of Tests : 19 19

Number of Builds : 48 48

Number of Simulations : 164 164 0

Number of Comparisons : 104 104 0

Failed Simulations are:

None

Which comparisons are not bit-for-bit:

None
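The Expected versus Received comparison can also be done mechanically. The sketch below assumes the summary lines have the layout shown in the example above (`Number of ... : expected received [failed]`); `summary_ok` is a hypothetical helper name and not something the Jenkins server provides.

```shell
#!/bin/sh
# Hypothetical helper: read the email summary (from files given as
# arguments, or from stdin) and succeed only if, on every
# "Number of ..." line, Expected equals Received and the optional
# Failed column is zero.
summary_ok() {
    awk -F: '
        /^ *Number of / {
            n = split($2, v, " ")      # v[1]=Expected v[2]=Received v[3]=Failed
            seen++
            if (n < 2 || v[1] != v[2]) bad++
            if (n >= 3 && v[3] != 0)   bad++
        }
        END { if (seen > 0 && bad == 0) exit 0; else exit 1 }
    ' "$@"
}
```

For example, `summary_ok email_01.txt && echo "all counts match"` prints the message only when every count matches; this assumes the summary is present in that file.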

The email message contains enough information to identify the PR and commit to which the test refers, and it also provides the GitHub ID of the person proposing the change (usually the email recipient).

The email contains an attachment: wrf_output.zip.

If you are trying to determine the cause of the failures, the attachment is a good place to start. If all of the tests were successful, there is no need to bother with the attachment to the email.

- Download the file wrf_output.zip.

- Inflate (decompress) this zip'ed file

unzip wrf_output.zip

- A new directory is created; change into that directory

cd output_testcase

- There are 20 files (the 19 tests plus a file called email_01.txt). The 19 output files are associated with the 19 broad area tests that are conducted. For example, output_1 refers to em_real, output_2 refers to nmm_hwrf, etc.

ls -ls

total 12080

16 -rw-r--r--@ 1 gill 1500 4263 Apr 8 18:18 email_01.txt

480 -rw-r--r--@ 1 gill 1500 243981 Apr 8 18:09 output_1

416 -rw-r--r--@ 1 gill 1500 210561 Apr 8 17:58 output_10

664 -rw-r--r--@ 1 gill 1500 339414 Apr 8 18:13 output_11

656 -rw-r--r--@ 1 gill 1500 335501 Apr 8 18:13 output_12

592 -rw-r--r--@ 1 gill 1500 300965 Apr 8 18:10 output_13

568 -rw-r--r--@ 1 gill 1500 287701 Apr 8 18:10 output_14

696 -rw-r--r--@ 1 gill 1500 355992 Apr 8 18:15 output_15

656 -rw-r--r--@ 1 gill 1500 333935 Apr 8 18:14 output_16

328 -rw-r--r--@ 1 gill 1500 166776 Apr 8 18:07 output_17

312 -rw-r--r--@ 1 gill 1500 158984 Apr 8 18:04 output_18

312 -rw-r--r--@ 1 gill 1500 158984 Apr 8 18:04 output_19

328 -rw-r--r--@ 1 gill 1500 164845 Apr 8 18:16 output_2

4112 -rw-r--r--@ 1 gill 1500 2101787 Apr 8 18:18 output_3

640 -rw-r--r--@ 1 gill 1500 325108 Apr 8 18:04 output_4

496 -rw-r--r--@ 1 gill 1500 250766 Apr 8 18:04 output_5

280 -rw-r--r--@ 1 gill 1500 140195 Apr 8 18:05 output_6

472 -rw-r--r--@ 1 gill 1500 240026 Apr 8 18:03 output_7

96 -rw-r--r--@ 1 gill 1500 45261 Apr 8 18:00 output_8

272 -rw-r--r--@ 1 gill 1500 136700 Apr 8 18:06 output_9
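Because `ls` sorts the names alphabetically (output_10 follows output_1), it can help to translate a file name into its test mnemonic. The sketch below uses the test numbering from the table of test areas and assumes that output_19 corresponds to em_chem_kpp; `test_name` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print the test mnemonic for an output file
# name (output_7) or a bare test number (7). Fails for anything
# outside 1-19.
test_name() {
    echo "em_real nmm_hwrf em_chem em_quarter_ss em_b_wave em_real8 \
em_quarter_ss8 em_move em_fire em_hill2d_x em_realA em_realB em_realC \
em_realD em_realE em_realF em_realG em_realH em_chem_kpp" |
    awk -v n="${1#output_}" '
        n ~ /^[0-9]+$/ && n >= 1 && n <= NF { print $n; found = 1 }
        END { exit !found }'
}

# Example use inside the unzipped directory:
#   for f in output_*; do echo "$f $(test_name "$f")"; done
```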

Each file contains a number of sections, which can be searched to display aggregated information. The -a option tells grep to treat the file as ASCII text, even if binary characters are detected.

- There should be 48 total builds that are attempted:

grep -a " START" * | grep -av "CLEAN START" | wc -l

48

- There are 104 bit-for-bit comparisons:

grep -a "status = " * | wc -l

104

All should have status = 0 (success), so NONE should have any other status:

grep -a "status = " * | grep -av "status = 0" | wc -l

0

- There are 164 total simulations:

grep -a " = STATUS" * | wc -l

164

All have status = 0, so NONE have a nonzero status:

grep -a " = STATUS" * | grep -av "0 = STATUS" | wc -l

0
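The grep commands above can be collected into one function that prints all of the counts at once. This is a convenience sketch only: `summarize_outputs` and `count_lines` are hypothetical names, the patterns are exactly the ones used above, and the function assumes it is pointed at the unzipped directory.

```shell
#!/bin/sh
# Hypothetical helper: count lines on stdin, without the padding
# that some versions of wc add.
count_lines() {
    wc -l | tr -d ' '
}

# summarize_outputs [DIR]
# Prints the build, comparison, and simulation counts for all files
# in DIR (default: current directory). Returns nonzero if any
# comparison or simulation has a nonzero status.
summarize_outputs() {
    dir=${1:-.}
    builds=$(grep -a " START" "$dir"/* | grep -av "CLEAN START" | count_lines)
    comps=$(grep -a "status = " "$dir"/* | count_lines)
    comps_bad=$(grep -a "status = " "$dir"/* | grep -av "status = 0" | count_lines)
    sims=$(grep -a " = STATUS" "$dir"/* | count_lines)
    sims_bad=$(grep -a " = STATUS" "$dir"/* | grep -av "0 = STATUS" | count_lines)
    echo "builds=$builds comparisons=$comps (failed $comps_bad) simulations=$sims (failed $sims_bad)"
    [ "$comps_bad" -eq 0 ] && [ "$sims_bad" -eq 0 ]
}
```

For a fully successful run as described above, `summarize_outputs output_testcase` would be expected to report 48 builds, 104 comparisons, and 164 simulations, with no failures.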

- The top part of each output file contains the print out related to creating the Docker container. This starts with:

Sending build context to Docker daemon 289.3kB

Step 1/6 : FROM davegill/wrf-coop:thirteenthtry

thirteenthtry: Pulling from davegill/wrf-coop

and ends with something similar to:

Step 6/6 : CMD ["/bin/tcsh"]

---> Running in e911e10a911a

Removing intermediate container e911e10a911a

---> b1233e0731e8

Successfully built b1233e0731e8

- The second part of each file is the output of the real / ideal program and the WRF model, for each of the namelist choices, for each of the parallel build types. The parallel build sections begin with:

==============================================================

==============================================================

SERIAL START

==============================================================

or

==============================================================

==============================================================

OPENMP START

==============================================================

or

==============================================================

==============================================================

MPI START

==============================================================

Within each of these larger sections, the namelists are looped over (32 is the serial build for GNU, 33 = OpenMP, 34 = MPI):

grep -a "32" output_1 | grep -a "NML = "

RUN WRF test_001s for em_real 32 em_real, NML = 3dtke

RUN WRF test_001s for em_real 32 em_real, NML = conus

RUN WRF test_001s for em_real 32 em_real, NML = rap

RUN WRF test_001s for em_real 32 em_real, NML = tropical
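A plain search for "32" also matches any other line that happens to contain those two digits. The sketch below is a stricter variant that requires the build code to appear as a whole word and prints only the namelist names. It assumes the `RUN WRF ... NML = name` layout shown above; `namelists_for` is a hypothetical helper name.

```shell
#!/bin/sh
# namelists_for FILE CODE
# Hypothetical helper: print the namelist name from every
# "NML = " line in FILE in which CODE (32 = serial, 33 = OpenMP,
# 34 = MPI) appears as a separate whitespace-delimited word.
namelists_for() {
    grep -a "NML = " "$1" |
    awk -v code="$2" '{
        for (i = 1; i <= NF; i++)
            if ($i == code) { print $NF; break }
    }'
}

# Example use: namelists_for output_1 34
```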

- The third and final section is provided only for tests that support multiple parallel build types (for example, serial and MPI). The third section details the comparisons between the different parallel builds, and the compared results should always be bit-wise identical.

tail output_1

SUCCESS_RUN_WRF_d01_em_real_32_em_real_3dtke vs SUCCESS_RUN_WRF_d01_em_real_33_em_real_3dtke status = 0

SUCCESS_RUN_WRF_d01_em_real_32_em_real_3dtke vs SUCCESS_RUN_WRF_d01_em_real_34_em_real_3dtke status = 0

SUCCESS_RUN_WRF_d01_em_real_32_em_real_conus vs SUCCESS_RUN_WRF_d01_em_real_33_em_real_conus status = 0

SUCCESS_RUN_WRF_d01_em_real_32_em_real_conus vs SUCCESS_RUN_WRF_d01_em_real_34_em_real_conus status = 0

SUCCESS_RUN_WRF_d01_em_real_32_em_real_rap vs SUCCESS_RUN_WRF_d01_em_real_33_em_real_rap status = 0

SUCCESS_RUN_WRF_d01_em_real_32_em_real_rap vs SUCCESS_RUN_WRF_d01_em_real_34_em_real_rap status = 0

SUCCESS_RUN_WRF_d01_em_real_32_em_real_tropical vs SUCCESS_RUN_WRF_d01_em_real_33_em_real_tropical status = 0

SUCCESS_RUN_WRF_d01_em_real_32_em_real_tropical vs SUCCESS_RUN_WRF_d01_em_real_34_em_real_tropical status = 0

Thu Apr 9 00:08:58 UTC 2020
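When a comparison fails, it is useful to list only the offending pairs. A minimal sketch, assuming the `... vs ... status = N` layout shown above, where `failed_comparisons` is a hypothetical helper name:

```shell
#!/bin/sh
# failed_comparisons FILE...
# Hypothetical helper: print every comparison line whose final
# field (the status value) is not zero. Prints nothing when all
# comparisons are bit-wise identical.
failed_comparisons() {
    grep -a " vs .* status = " "$@" | awk '$NF != 0'
}

# Example use: failed_comparisons output_*
```

Each name in a reported pair encodes the domain, the test, the build code (32, 33, or 34), and the namelist, so a single line identifies which two builds disagree.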

Here are the steps to construct a Docker container that has the directory structure, data, libraries, and the executable ready for conducting a test run. This testing uses 46 machines (there are 46 separate builds, 101 through 146).

- Log into the classroom as "150".
- Get to the right location

cd /classroom/dave

- Copy the file run_template to RUN, and edit the RUN file. The cron job looks for this RUN file every 5 minutes, so give yourself time to edit the four lines.

cp run_template RUN

vi RUN

Here is what a typical run_template file looks like:

Email: gill@ucar.edu

Fork: https://github.com/wrf-model

Repo: WRF

Branch: release-v4.1.1

Here are the interpreted meanings of those entries:

Email: where you want the regression test results mailed, single person

example: weiwang@ucar.edu

example: dudhia@ucar.edu

Fork: which github fork

example: https://github.com/kkeene44

example: https://github.com/smileMchen

Repo: There are still people who have a repo that is named WRF-1

example: WRF

example: WRF-1

Branch: which branch to checkout

example: develop

example: master

example: release-v4.1.2
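Since the cron job may pick up the RUN file while it is still being edited, one alternative is to write the four lines to a temporary file and rename it to RUN in a single step. This is only a sketch: `write_run_file` is a hypothetical helper name, and it assumes the cron job accepts the same `Key: value` layout as the run_template example above.

```shell
#!/bin/sh
# write_run_file EMAIL FORK REPO BRANCH [TARGET]
# Hypothetical helper: write the four entries to a temporary file
# next to TARGET (default: RUN), then rename it, so that a complete
# file appears under the final name in one step.
write_run_file() {
    if [ "$#" -lt 4 ]; then
        echo "usage: write_run_file EMAIL FORK REPO BRANCH [TARGET]" >&2
        return 1
    fi
    target=${5:-RUN}
    tmp=$target.tmp.$$
    printf 'Email: %s\nFork: %s\nRepo: %s\nBranch: %s\n' \
        "$1" "$2" "$3" "$4" > "$tmp" &&
    mv "$tmp" "$target"
}

# Example use, from /classroom/dave:
#   write_run_file gill@ucar.edu https://github.com/wrf-model WRF release-v4.1.1
```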