(Updated 02/17) New research: ControlNet - Adding Conditional Control to Text-to-Image Diffusion Models #7732

Replies: 13 comments 11 replies

-

This needs to be given more attention, as I personally believe it can supercharge the webui. Here are some more examples of what a lot of people may be interested in as well. Also, the depth-to-image model is apparently better than SD 2.0's, as the mask part is trained at a higher resolution.

-

I tested it and it's amazing! Each tool is very powerful and produces results that are faithful to the input image and pose. In particular, pose2image was able to capture poses much better and create accurate images compared to depth models.

-

I tried to test it but got an OOM error. I guess 6 GB of VRAM isn't enough. Maybe if it's an extension, it'll benefit from auto's optimizations?

-

Looks great.

-

And merging with other models is also possible!

-

I need this, popular or not!

-

Just a PoC: I wrote a simple extension that brings ControlNet into the WebUI. Not all features are supported, but at least it works.

-

Thanks to @Mikubill ❤ OpenPose ControlNet now works with Colab 🥳 I will add the others.

-

|

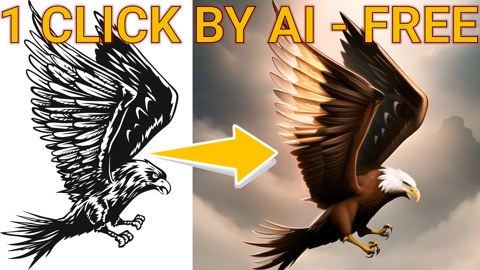

this thing is just revolutionary just released my tutorial for controlnet based on an extension 16.) Automatic1111 Web UI |

-

Two similar papers came out shortly after ControlNet did. https://github.com/arpitbansal297/Universal-Guided-Diffusion (https://arxiv.org/abs/2302.07121)

Edit: T2I-Adapter integration has now been merged with the ControlNet extension. Mikubill/sd-webui-controlnet#140

-

ControlNet - Adding Conditional Control to Text-to-Image Diffusion Models

Paper: https://arxiv.org/abs/2302.05543

GitHub: https://github.com/lllyasviel/ControlNet

According to the abstract, ControlNet provides a way to train for task-specific conditions even with small datasets (<50k samples), and training is as fast as fine-tuning a diffusion model, so it can be done locally (one of the examples presented is a depth model that took only a week of training on a single 3090 Ti).

Several pre-trained models are provided, and training code is included in the GitHub repo so you can make your own. I highly recommend taking a look at the paper to understand what it's capable of.
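For anyone curious why training on a small dataset doesn't wreck the base model: the paper keeps the original weights locked, trains a copy of them, and connects the copy through "zero convolutions" (1x1 convolutions initialized to zero). Below is a toy, scalar-only sketch of that idea as I understand it. It is not code from the ControlNet repo, and every name in it (`frozen_block`, `ZeroConv`, `ControlledBlock`) is made up for illustration.

```python
# Toy sketch of the zero-convolution idea. All names are hypothetical;
# real ControlNet operates on U-Net feature maps, not short lists of floats.

def frozen_block(x):
    """Stand-in for a locked, pre-trained block (its weights never change)."""
    return [2.0 * v + 1.0 for v in x]


class ZeroConv:
    """Stand-in for a 1x1 convolution whose weight and bias start at zero."""

    def __init__(self):
        self.weight = 0.0
        self.bias = 0.0

    def __call__(self, x):
        return [self.weight * v + self.bias for v in x]


class ControlledBlock:
    """Frozen block plus a trainable copy that receives the extra condition."""

    def __init__(self):
        self.zero_in = ZeroConv()   # injects the condition into the copy
        self.zero_out = ZeroConv()  # adds the copy's output to the frozen path
        self.copy_scale = 2.0       # the copy starts from the frozen weights
        self.copy_shift = 1.0

    def trainable_copy(self, x):
        return [self.copy_scale * v + self.copy_shift for v in x]

    def __call__(self, x, condition):
        injected = [a + b for a, b in zip(x, self.zero_in(condition))]
        residual = self.zero_out(self.trainable_copy(injected))
        return [a + b for a, b in zip(frozen_block(x), residual)]


x = [0.5, -1.0, 3.0]
condition = [1.0, 1.0, 1.0]  # e.g. a flattened depth or pose map

block = ControlledBlock()
untrained = block(x, condition)

# Pretend a few training steps moved the zero-conv weights away from zero.
block.zero_in.weight = 0.5
block.zero_out.weight = 0.1
trained = block(x, condition)

print(untrained == frozen_block(x))  # True: no effect before training
print(trained == frozen_block(x))    # False: the condition now steers the output
```

The point of the sketch is only that the control branch contributes nothing at initialization, so training starts from exactly the base model's behavior and the condition's influence grows gradually.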

Update

This is now supported in the webui via the extensions below (independent of each other):

https://github.com/Mikubill/sd-webui-controlnet

https://github.com/ThereforeGames/unprompted

A kind user also shared a Blender project to create openpose-like images, so you can skip the preprocessor step for the openpose controlnet. https://toyxyz.gumroad.com/l/ciojz

Update 2

T2I-Adapter integration has now been merged with the ControlNet extension. Mikubill/sd-webui-controlnet#140

Related: Style2Paints V5 preview (using a currently unreleased sketch-to-illustration model, based on the same research): https://github.com/lllyasviel/style2paints/tree/master/V5_preview
