
Final DMC energy varies with equilibration strategy #2330

Hoping to narrow this bug/feature: if you do a restart, does the problem persist? Also, to help plan debugging: how long does this example take to run?

Attention @camelto2. Until resolved, this issue would preclude a smarter equilibration strategy.

This is for T-moves version 0. Trying a restart is a good idea. Right now, we are performing reruns on CADES with slightly smaller error bars for 20-, 10-, and 5-atom cells, as the initial runs gave ambiguous results for the smaller cells. This should tell us whether the issue is reproducible and whether it appears for smaller systems as well. The 20-atom runs take about a day on 8 Skylake nodes.

It is possibly worth mentioning that @aannabe has seen similar problems with an independent code (QWalk) using T-moves. The T-moves used there would be equivalent to T-moves v0 in QMCPACK.

The DMC propagator needs E_trial and the variance of E_local for things like adjusting the population size and determining whether the walkers are stepping into weird places. Depending on how these values are estimated and carried over between simulations, there could be measurable consequences, especially at these energy scales.
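To make the role of E_trial concrete, here is a minimal sketch of the textbook population-control feedback and symmetric branching factor used in many DMC codes. This is a generic form under stated assumptions (the function names and the `generations` damping parameter are hypothetical), not QMCPACK's `SimpleFixedNodeBranch` implementation.

```python
import math


def trial_energy(e_est: float, weight: float, target_weight: float,
                 tau: float, generations: int = 10) -> float:
    """Textbook population-control feedback for the DMC trial energy (hypothetical helper).

    E_T = E_est - ln(W / W_target) / (generations * tau)

    If the total walker weight W exceeds the target, E_T is lowered so that
    the branching factors below shrink the population on later steps.
    """
    return e_est - math.log(weight / target_weight) / (generations * tau)


def branching_factor(e_old: float, e_new: float, e_trial: float, tau: float) -> float:
    """Symmetric branching factor exp(-tau * ((E_L_old + E_L_new) / 2 - E_T))."""
    return math.exp(-tau * (0.5 * (e_old + e_new) - e_trial))


# A walker whose local energy equals E_T keeps unit weight; an error in E_T
# rescales every walker's weight by the same factor exp(tau * error) per step.
print(branching_factor(-10.0, -10.0, -10.0, 0.002))  # 1.0
```

Because E_T enters every walker's weight, any bias carried into it from a previous section feeds directly into the population dynamics until the feedback washes it out.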

@rcclay, but these choices should fade out rapidly and should be no worse than starting from VMC (at least in principle), right?

I need to dig into SimpleFixedNodeBranch and see precisely how, and how often, we update this stuff.

Another thing to investigate is whether or not you can recover the correct behavior using smaller jumps in the timestep. Rather than going from 0.02 to 0.002, try a few intermediate values to see if the problem is as drastic.
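One way to pick the intermediate timesteps is geometric spacing, so each DMC section shrinks the timestep by the same factor. This is a sketch only; the spacing choice and the `timestep_ladder` helper are assumptions, not something settled in this thread.

```python
def timestep_ladder(tau_start: float, tau_end: float, n_steps: int) -> list[float]:
    """Geometrically spaced timesteps from tau_start down to tau_end, inclusive (hypothetical helper)."""
    ratio = (tau_end / tau_start) ** (1.0 / n_steps)
    return [tau_start * ratio**k for k in range(n_steps + 1)]


# Three equal-ratio jumps instead of a single factor-of-10 jump.
print([round(t, 5) for t in timestep_ladder(0.02, 0.002, 3)])
# [0.02, 0.00928, 0.00431, 0.002]
```

Each value would then become the timestep of one DMC section in the series.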

A complicating factor is that at the entry point to each DMC sub-run, warmup is performed with stochastic reconfiguration. In this case (and in others), we have observed that the trial energy behaves erratically (rapid fluctuations on the scale of the VMC-DMC energy difference) before settling down immediately after the warmup. If a large error were introduced into the trial energy at this point, and if the moving average of the trial energy retained this initial bias for a long time, it could affect the population dynamics for an extended period. I suppose this would formally appear as a more pronounced (and transient) population control bias.
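How long an early transient lingers depends on how the trial energy is averaged. The sketch below compares a cumulative (all-history) mean with an exponentially weighted average on a hypothetical trace whose first 10 steps are biased. It illustrates the mechanism hypothesized above; it is not how QMCPACK actually averages E_trial.

```python
def cumulative_mean(values):
    """Running mean over all samples so far: every early sample keeps weight 1/n."""
    out, total = [], 0.0
    for n, v in enumerate(values, start=1):
        total += v
        out.append(total / n)
    return out


def exponential_average(values, alpha):
    """Exponentially weighted average: an early sample's weight decays as (1 - alpha)**n."""
    out, avg = [], values[0]
    for v in values:
        avg = (1.0 - alpha) * avg + alpha * v
        out.append(avg)
    return out


# Hypothetical trace: 10 warmup steps biased by +0.5 Ha, then 990 steps at the true value 0.0.
trace = [0.5] * 10 + [0.0] * 990
print(cumulative_mean(trace)[-1])           # 0.005: the bias decays only as 1/n
print(exponential_average(trace, 0.05)[-1])  # vanishingly small: the transient is forgotten
```

With a cumulative mean, a 0.5 Ha warmup error still shifts the estimate by 5 mHa after 1000 steps, which is the scale of difference at issue here.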

@camelto2 I agree that this is a good thing to investigate. I had planned to move in this direction once we have fully settled reproducibility. Restarts are another good thing to try early on.

In view of the upcoming weekend, it would be sensible to queue several independent series of runs that will tell us the most with the least amount of human effort. For example:

Perhaps this has already been done, but I think it is worth fishing for significant problems in existing data. Unlikely to be successful but low effort:


Chandler is performing a series of runs. He's been able to reproduce the problem in the 10 atom cell, so we can now get conclusive results in 12 hours on 4 nodes. Runs we have planned:

Additional observations:

@jtkrogel @prckent I'm writing what I found today because it seems to be related to this issue. These DMC results are for 2D GeSe, starting from 0.1, 0.05, and 0.01 a.u. time steps and going down to 0.005 a.u., using v3 T-moves. I first thought the DMC total energy was converged enough at 0.005 a.u., but when I restart with stored walkers at the same final time step of 0.005 a.u., it gives a different equilibrium total energy. I think it is the same issue as here.

Hyeondeok, interesting. The stored walkers that you used for the restart: were they stored during the 0.005 a.u. timestep in the first run?

@mcbennet Yes. They came from the first run, at the same 0.005 a.u. time step as the restart run.

@Hyeondeok-Shin Have you checked that VMC restarts correctly and consistently in this system? Clearly it would be very difficult for it not to, but we thought DMC was safe as well...

@prckent I didn't check a VMC restart run, but I can check it quickly. I'll post here when the VMC check is done.

I quickly did a restart test for VMC and it seems fine. The VMC test compared a single run of 400 blocks against 200 blocks plus 200 restart blocks (400 blocks in total).

| Run | Series | LocalEnergy | Variance | ratio |
|---|---|---|---|---|
| 400 blocks total (GeSe-S4-dmc) | 1 | -105.390069 +/- 0.001767 | 2.487108 +/- 0.081797 | 0.0236 |
| First 200 + 200 restart blocks | 2 | -105.391064 +/- 0.001996 | 2.507822 +/- 0.072885 | 0.0238 |

They are equivalent, so restart works correctly for VMC (or a single QMC section).
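"Equivalent" can be quantified by expressing the energy difference in units of the combined error bar, assuming the two estimates are statistically independent. A minimal sketch using the numbers from the table above (the `z_score` helper is hypothetical):

```python
import math


def z_score(a: float, err_a: float, b: float, err_b: float) -> float:
    """Difference of two independent estimates in units of their combined 1-sigma error."""
    return (a - b) / math.hypot(err_a, err_b)


# Single 400-block run vs. 200 + 200 restart blocks.
z = z_score(-105.390069, 0.001767, -105.391064, 0.001996)
print(f"{z:.2f}")  # 0.37
```

A |z| well below 2 is what you expect from two samplings of the same distribution. Note that the two runs here are not fully independent (the restart shares its first 200 blocks' history), so this is only a rough check.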

@mcbennet @jtkrogel assuming that the weekend runs have not flagged a clear culprit, I have an additional request: compile the code without MPI and run the smaller cell with OpenMP threads on one node only. Do not use the MPI build with one task for this test. The run should use exactly the same total number of walkers as the 4-node runs so that it can be compared directly. This will rule out any problems with either our MPI implementation or the one on CADES.

The weekend runs have not flagged a clear culprit; however, we do have some new information:

We can run without MPI. Chandler is currently trying to compile v3.6.0 as a historical reference. He will also soon check whether the effect is visible in pseudopotential diamond; if so, we can more easily compare, e.g., QMCPACK and QWalk in the near future (good for isolating a code issue from an algorithm issue).

@jtkrogel could you provide the DMC input sections and the full QMCPACK printout corresponding to your run with two time steps?

@ye-luo attached here

@mcbennet Could you rerun one_ts and two_ts with warmupSteps=1000 in every DMC section?

Yes, I can have a look at that.

Interesting results so far. Can you confirm that the locality approximation shows the problem? I suggest focusing on it, since all the different QMC codes implement it consistently.

I see the following stochastic results for diamond with develop plus the modification mentioned above (DevMod). Also, for SCO, the modification appears to have resolved AoS.

- One TS Ref: -449.351323 +/- 0.001461
- AoS LocalEnergy = -449.350831 +/- 0.001897

I am submitting a WIP PR so that others can test my modification, scrutinize it, etc.

Thanks Chandler. So with your fix, diamond now looks clean with AoS/SoA, but there remains an additional (and possibly unrelated) bug in SoA, as evidenced by your SCO results, correct?

Yes, diamond looks clean to me. However, I am not yet sure why my stochastic tests show failures for 1704 and Devel in AoS while the deterministic tests pass. SCO also looks clean for AoS, but SoA is still off. I do not know the nature of the SoA error in SCO.

Good to fix the manifesting issue, anyway. The remaining SoA issue was introduced prior to 3.8.0, right? I guess a return to the bisection search with SoA should be revealing.

Follow-up regarding deterministic tests. @Hyeondeok-Shin, I modified your tests by increasing the number of VMC walkers to 20 and then removing the targetwalkers variables in the DMC sections, i.e.,

After doing so, I see that this deterministic test agrees with my stochastic tests.

This is an important update to the deterministic test. It's also consistent with the idea that only some trajectories (a subset of the phase space) are affected.

|

More observations worth noting regarding the above deterministic test.

Note that the VMC driver will round up the number of walkers to a multiple of the thread count. There is no way to change this, because the existing VMC drivers require an equal number of walkers per thread; this makes sense for efficiency, although a route to more flexibility would be ideal. Therefore, when specifying walkers, you need to be careful about the number of threads you use.
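The rounding rule described above can be written as a one-line ceiling to the next multiple of the thread count. This sketch mirrors the behavior as described in this comment; `rounded_walker_count` is a hypothetical name, not a QMCPACK function.

```python
def rounded_walker_count(requested: int, threads: int) -> int:
    """Round a requested walker count up to a multiple of the thread count (hypothetical helper)."""
    if threads < 1:
        raise ValueError("threads must be >= 1")
    # -(-a // b) is integer ceiling division.
    return -(-requested // threads) * threads


print(rounded_walker_count(20, 1))  # 20: one thread leaves the request unchanged
print(rounded_walker_count(20, 8))  # 24: eight threads force 3 walkers each
print(rounded_walker_count(2, 16))  # 16: a small request is inflated to one walker per thread
```

This is why the same input can produce different total walker counts on machines with different thread counts, and why the deterministic tests above use 1 thread.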

For all of my tests of diamond 1x1x1, I have used 1 thread and 1 MPI task.

Does the discussion above still reflect the current status? If anyone has an update, please post.

Some discussion has continued within #2349. That WIP PR corresponds to the modifications that fix AoS (mentioned above in the present thread), though SoA remains broken.

For comparison purposes, I am sharing a plot analogous to the diamond 1x1x1 figure above, but for SCO. This again suggests an underlying issue with SoA, while AoS appears clean for version 3.8.0, then a bias in #1704 and beyond. The suggested modification in #2349 again appears to remove the bias in AoS.

UPDATE (4/8/2020): There was a mistake in the figure above. The SoA builds were all from the development branch. See the corrected plot further down this thread.

Submitted earlier today. Will prepend to the plot when complete.

Retracted comment.

The following figure contains corrected SrCoO3 data. In my previous figure, all SoA builds corresponded to the development branch by mistake. From the statistical tests here, we see that a bug was introduced in #1704 and remains in the current develop branch. #2369 fixes this bug. This test is consistent with the statistical test of diamond.

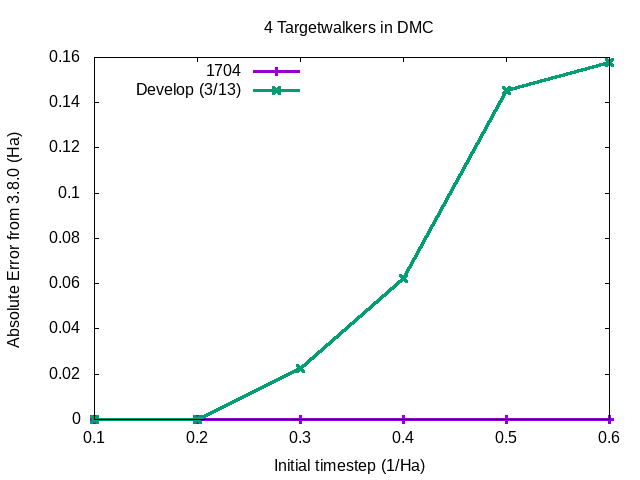

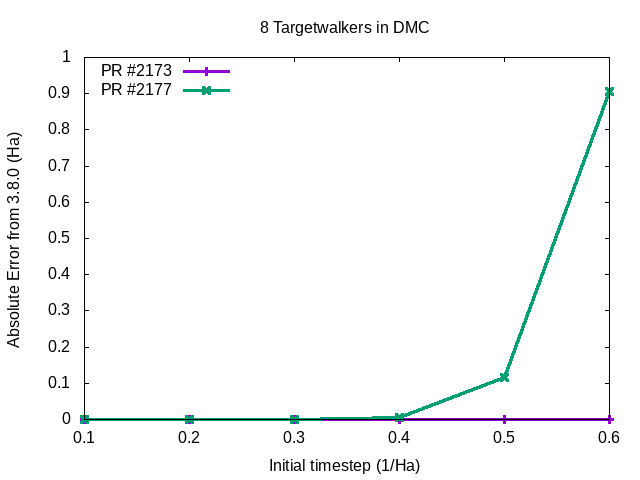

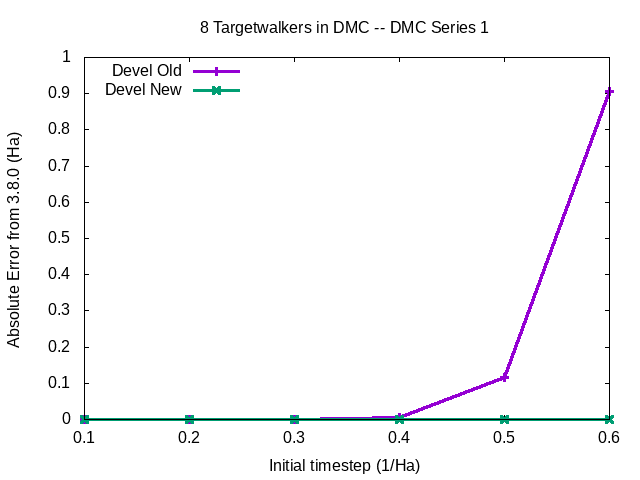

Regarding the deterministic test, here I focus on the absolute errors in the first DMC series. I have taken Hyeondeok's test and varied two parameters independently: (1) the number of target walkers in the DMC and (2) the timestep in the initial DMC. The plots below show the series 1 errors in total energy of 1704 and Develop (pulled 3/13) w.r.t. 3.8.0. For all variations, it appears that 1704 is clean on this front (if 3.8.0 is assumed "correct"). For Develop, there is clearly a dependence on both the timestep and the number of target walkers chosen; also, from the case where targetwalkers=2, it is clear that the absolute error is not necessarily monotonic in the timestep. Note: the targetwalkers=2 case is exactly Hyeondeok's test but with varied timestep. Note: all runs below correspond to SoA builds.

After the additional fix of #2377, develop should now be cured of this important problem. We can check the nightlies tomorrow afternoon for the results of the deterministic test, but I think we also need confirmation here that the problem is fixed in SrCoO3 and that no other problems have crept in.

@mcbennet could you verify that develop no longer diverges from v3.8.0? Thank you.

From the above, I think we can happily mark this as closed. Thanks all.

The final DMC energy for a 20 atom cell of SrCoO3 differs when QMCPACK is run in two ways that should be equivalent:

In this case, a difference of 6.6(7) mHa/f.u. is observed in the final DMC energy using the CPU code on Theta (case 1) and CADES (cases 1 and 2), with Theta and CADES agreeing for case 1.
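The 6.6(7) figure uses concise value(uncertainty) notation, where the parenthesized digits apply to the last digits of the value. The sketch below parses that notation, reports the significance of the difference, and converts it to a per-cell number, assuming 4 formula units in the 20-atom cell (SrCoO3 has 5 atoms per formula unit). `parse_paren_uncertainty` is a hypothetical helper.

```python
import re


def parse_paren_uncertainty(text: str) -> tuple[float, float]:
    """Parse concise notation such as '6.6(7)' into (6.6, 0.7) (hypothetical helper)."""
    m = re.fullmatch(r"(-?\d+)(?:\.(\d+))?\((\d+)\)", text.strip())
    if m is None:
        raise ValueError(f"not in value(uncertainty) form: {text!r}")
    int_part, frac_part, unc_digits = m.groups()
    frac_part = frac_part or ""
    value = float(f"{int_part}.{frac_part}" if frac_part else int_part)
    # The uncertainty digits line up with the last decimal places of the value.
    uncertainty = int(unc_digits) / 10 ** len(frac_part)
    return value, uncertainty


d_e, err = parse_paren_uncertainty("6.6(7)")  # mHa per formula unit
formula_units = 20 // 5  # 20-atom SrCoO3 cell, 5 atoms per formula unit
print(f"significance: {d_e / err:.1f} sigma")  # significance: 9.4 sigma
print(f"per cell: {formula_units * d_e:.1f}({formula_units * err:.1f}) mHa")  # per cell: 26.4(2.8) mHa
```

At roughly 9 sigma, the discrepancy cannot plausibly be a statistical fluctuation.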

A plot of the 2-timestep data with differing numbers of equilibration blocks removed, seems to show a very long and slow transient. For comparison, a full equilibration from VMC to the DMC 0.002/Ha equilibrium occurs within 100 blocks. Starting from the 0.02/Ha equilibrium, the new 0.002/Ha equilibrium is not reached even after 1000 blocks.
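The "differing numbers of equilibration blocks removed" analysis amounts to recomputing the mean after discarding the first n blocks and watching whether it stops moving. A minimal sketch on hypothetical, noiseless block energies with a slow 400-block transient (not the actual SrCoO3 data); the error bar here is the naive one that assumes uncorrelated blocks.

```python
import math
import statistics


def mean_after_discard(blocks, n_discard):
    """Mean and naive standard error of block energies after dropping the first n_discard blocks."""
    kept = blocks[n_discard:]
    mean = statistics.fmean(kept)
    err = statistics.stdev(kept) / math.sqrt(len(kept))  # assumes uncorrelated blocks
    return mean, err


# Hypothetical block energies (Ha): equilibrium -1.0 plus a slowly decaying
# +10 mHa offset, as if relaxing from a different equilibrium.
blocks = [-1.0 + 0.01 * math.exp(-k / 400.0) for k in range(2000)]
for n in (0, 100, 500, 1000):
    m, e = mean_after_discard(blocks, n)
    print(n, f"{m:.5f}", f"{e:.1e}")
```

If the mean keeps drifting by more than its error bar as more blocks are discarded, the transient has not finished, which is the signature described above.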

Given the rapid equilibration from the high-energy VMC state, it is unclear what mechanism could be responsible for the lack of equilibration from the lower-energy, and presumably closer, DMC 0.02/Ha state. More investigation of this effect is warranted.

The code's inability to relax to the same minimum from different initial states casts doubt on the equilibrium found in the standard single-shot calculations performed in production.

Issue identified by @mcbennet.
