
[BREAK] Require OPLOG/REPLICASET to run Rocket.Chat #14227

Why was this done? I have no need to run this on a cluster and now I have to install all this extra crap?

This is incredible. WHY was this done? Who needs clusters and whatnot? Our entire Rocket.Chat installation is now messed up because of this!!!! And the medium.com link is so old. Why didn't you provide installation/upgrade instructions for this? Unbelievable!

I managed to get replication set up on MongoDB, and Rocket.Chat still won't start because it says there is no oplog (even though there is one). My installation is completely broken! EDIT: Working after 2.5 hours of frantic reading and trying things. I don't know why Rocket.Chat decided to do this (it's even called [BREAK]), but this was really irresponsible.

I finally got this working again, but it was a lot of work. I'm including my steps below in the hope that they will help someone else. Please note: my installation uses Synology Docker, and MongoDB is only there for Rocket.Chat, so security is completely turned off.

bash:

mongo command client:

NOTE: The `createUser` step is only necessary if you're using authentication with MongoDB.

In my case, since I don't have any MongoDB security whatsoever:

With authentication:
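For the authenticated case, the two connection strings might look like the docker-compose fragment below. This is a sketch, not Lee's exact configuration: it assumes an application user `rocketchat` created in the `rocketchat` database, a separate `oploguser` created in the `admin` database with the `read` role on `local`, and a replica set named `rs0`. The user names and `CHANGE_ME` passwords are hypothetical placeholders.

```yaml
# Sketch only: user names, passwords and the replica set name are placeholders.
services:
  rocketchat:
    environment:
      # App user authenticates against the "rocketchat" database (the default authSource).
      - MONGO_URL=mongodb://rocketchat:CHANGE_ME@mongo:27017/rocketchat?replicaSet=rs0
      # Oplog user was created in "admin", so authSource must point there; it reads "local".
      - MONGO_OPLOG_URL=mongodb://oploguser:CHANGE_ME@mongo:27017/local?authSource=admin&replicaSet=rs0
```

Without `authSource=admin`, the driver authenticates against the database in the URL path (`local` here), so a user created in `admin` would most likely fail to log in.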

@LeeThompson Thank you!!! My install is broken too :( I'll give your solution a try.

@LeeThompson Do you have any suggestions for docker-compose? This is my file:

@franckadil I haven't used docker-compose, but maybe this: NOTE: Back up your data first if you care about it, in case this goes horribly wrong. Once your MongoDB is running with the `--replSet` option, you will need to attach a terminal to the Mongo container and use the "

docker-compose snippets:
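Lee's compose snippets were not preserved here. As a rough sketch of the same idea, the MongoDB service only needs its command changed so that `mongod` starts with `--replSet`. The image tag `mongo:4.0`, the volume path and the set name `rs0` are assumptions; adjust them to your stack.

```yaml
services:
  mongo:
    image: mongo:4.0
    restart: unless-stopped
    volumes:
      - ./data/db:/data/db
    # --replSet puts this single mongod in replica set mode, which enables the oplog.
    # --oplogSize caps the oplog at 128 MB.
    command: mongod --oplogSize 128 --replSet rs0
    # After the first start, run rs.initiate() once from a mongo shell in this
    # container (e.g. "docker-compose exec mongo mongo") to create the set.
```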

@LeeThompson Thank you very much, I finally got it working! If somebody could update the docker-compose documentation …

Which docker-compose file did you use? The one here in the repo actually has the oplog configured: https://github.com/RocketChat/Rocket.Chat/blob/develop/docker-compose.yml Just to be clear: this does not require an actual replica set. It only requires that the single node be configured in replica set mode so that the oplog is enabled. In addition, make sure you set `MONGO_OPLOG_URL` so that Rocket.Chat is aware of it.
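On the Rocket.Chat side, a minimal docker-compose fragment along those lines might look like this. It assumes the Mongo service is named `mongo` and already runs as a one-member replica set; the `ROOT_URL` value and the image tag are placeholders.

```yaml
services:
  rocketchat:
    image: rocketchat/rocket.chat:latest
    restart: unless-stopped
    environment:
      - PORT=3000
      - ROOT_URL=http://localhost:3000
      # Normal application database.
      - MONGO_URL=mongodb://mongo:27017/rocketchat
      # The oplog is stored in the "local" database of the replica set member.
      - MONGO_OPLOG_URL=mongodb://mongo:27017/local
    depends_on:
      - mongo
    ports:
      # Quoted so YAML does not misread "3000:3000" as a base-60 number.
      - "3000:3000"
```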

@geekgonecrazy I created my own stack after searching the official Rocket.Chat documentation and Google. I had to customize docker-compose to automate Let's Encrypt certificates for nginx by building a custom Dockerfile for nginx/certbot. Would you be interested in receiving the complete stack to update the official documentation for people new to Docker and Rocket.Chat?

@franckadil Please send us your docker-compose file; we can add some parts to ours or create a new file for a more complete stack. Thanks!

Any chance you'll change your mind and make this optional? I don't even understand what this does.

Hi, I wouldn't have expected a change between a v1.0.0 release candidate and v1.0.0 to completely break my simple Rocket.Chat install. I eventually fixed it with the instructions provided by Lee Thompson above, but it would have been really great if this had been communicated more clearly. Thanks. I used the hack of editing Docker's `config.v2.json` to restart the mongo container with `--replSet rs0` and get back up and running.

The OPLOG/REPLICASET requirement also breaks Rocket.Chat deployments on Heroku. Would it be possible to keep OPLOG/REPLICASET optional?

I can't get this to work :( I initiated the replica set with the following commands:

I stopped the container and then typed `docker-compose up`. I'm now getting the following error...

My docker-compose.yml file looks like this:

Can someone please tell me what I did wrong?

Even using @franckadil's simple compose file, I'm still getting the same:

Doing this (#6963 (comment)) worked. Thanks @franckadil and @geekgonecrazy!

@rockneverdies55 Yes, sorry, I forgot to mention that you should delete your old containers first, and in some rare cases do a

@franckadil Big thanks for your help. I have modified the docker-compose.yml file, but it didn't work for me. My commands:

Failure code:

@exitsoundhh This should work, but first delete your containers:

The error message you get

# If you don't use docker services the

Note: Once your stack is correctly launched, I would recommend stopping the stack and then running it in the background with

@franckadil Big thanks for your help. I have modified the docker-compose.yml file.

@franckadil Thank you, man, for your help. I think my last error is that I must migrate my data for Mongo version 4? Do you have any idea? I think the configuration is now correct.

@exitsoundhh Yes, indeed. But isn't there a database upgrade script from Rocket.Chat?

@franckadil I downgraded Rocket.Chat to 0.74.2, the last version that worked for me. I fixed my migration problem with this command:

Thank you @exitsoundhh! I will test this ASAP.

@franckadil Thank you for your help! FYI, I am using AWS to deploy my Rocket.Chat server with the docker-compose file you created.

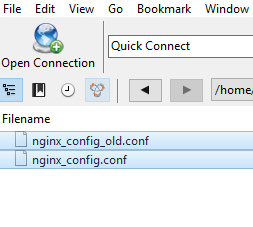

@hkbnman Hi! A 504 error indicates that the server, while acting as a gateway or proxy, did not get a timely response from the upstream server. This can happen for multiple reasons. Off the top of my head, I would ask you to check the NGINX conf files: if you followed my steps, you should edit those files and replace the site URL with yours. The whole idea of using NGINX is to avoid having to add the port suffix to your URL (http://test.com:3000) and to get SSL termination (HTTPS). If that's already done, I would need more details:

Having more information about your setup will be helpful!

Thanks for your kind assistance!

While I haven't tested on AWS, it should normally work unless there is a security issue at the instance or VPC level. I will run some tests on my end as soon as I can. Checking your logs:

From a terminal outside your instance, check whether ports 80/443 are correctly allowed for inbound traffic. You're supposed to get something similar to this:

If you get an error, can you check your security group's inbound and outbound rules? For testing purposes, you can also allow the following rule for inbound traffic:

ALL Traffic | ALL | ALL | ::/0 | ALLOW

Hi @franckadil

- `sudo ufw status`: 22/tcp ALLOW Anywhere

404 Not Found nginx/1.15.12

Thanks! @franckadil

Thanks for sharing this. It seems like an issue with the Nginx config files or with the security group preventing access to port 3000. Have you tried testing the security group with these rules? I'm investigating.

@hkbnman So here is what worked for me on Amazon AWS:

After getting the Rocket.Chat screen:

Wait ~15 minutes, then browse to your site; it should be up and running:

Great job, @franckadil! But there are 2 problems:

2. Testing for live.chat in the Android app for new messages:

I know this may not be related to the Rocket.Chat installation issue, but please help test whether these 2 problems also occur in your Rocket.Chat installation or only in my case. Thank you for your kind assistance!

@hkbnman I am happy you got your Rocket successfully launched 👍

Then stop the stack.

Reference: https://rocket.chat/docs/bots/running-a-hubot-bot/

I can guarantee that my installation is free of these errors after I set up the site. But you may want to report your issue in the Rocket.Chat mobile app section; this will help everyone having the same errors and provide valuable feedback to the developers. I am happy I could help!

Thanks, @franckadil!

Hi team, I am trying to run Rocket.Chat on Kubernetes on the IBM Cloud platform, using the latest version, 1.0.3. My Mongo database is running in the container {redacted}:27017, replica set name: mongodb-7cbd69f547. I mapped it in MONGO_URL and MONGO_OPLOG_URL as below, but the error still persists. Can someone guide me here?

"spec": {
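For comparison, a container `env` section of a Kubernetes Deployment that sets both variables might look like the sketch below (in YAML rather than the JSON used above). The Service name `mongodb`, the replica set name `rs0` and the image tag are assumptions. One thing that may be worth checking: a name like `mongodb-7cbd69f547` resembles a Kubernetes ReplicaSet generated by a Deployment, whereas the `replicaSet` option in a MongoDB connection string must match the name `mongod` itself was started with via `--replSet`.

```yaml
# Fragment of a Deployment's pod template (spec.template.spec); names are placeholders.
containers:
  - name: rocketchat
    image: rocketchat/rocket.chat:1.0.3
    ports:
      - containerPort: 3000
    env:
      # "mongodb" is assumed to be an internal (ClusterIP) Service, not a public IP.
      - name: MONGO_URL
        value: mongodb://mongodb:27017/rocketchat?replicaSet=rs0
      - name: MONGO_OPLOG_URL
        value: mongodb://mongodb:27017/local?replicaSet=rs0
```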

@Srinivasan2017 Please don't paste public IPs like that. I've redacted it. Please also secure your Mongo server; it should not be exposed to the world on a public IP. Everyone in this thread has already seen the information, so please make sure at least your Mongo server's IP is changed. I'd also recommend getting support on our forum or in #support on our community server.

Could someone please add a proper docker-compose.yaml to the AWS deployment documentation, step 7? https://rocket.chat/docs/installation/paas-deployments/aws/

Open a bug against the documentation. Even better, make a fix and open a pull request: https://github.com/RocketChat/docs/blob/master/installation/paas-deployments/aws/README.md

Expanding on @LeeThompson's godsend post for authenticated instances: make sure you create the

If you don't, you might get an authentication error when starting Rocket.Chat. Also, when following the official docs, you might want to change the env variable to have

When MongoDB says you are not on the primary replica, revert the changes made to

Last but not least, when you cannot create the

From a cluster perspective, I am strongly concerned about potential security implications. One of my projects is multi-tenant: for each customer there's an independent Rocket.Chat setup, which connects to a dedicated database with a dedicated set of credentials. All databases run on a shared cluster, though. It all looks fairly isolated from a security viewpoint... until we introduce oplog access, which (as far as I understand) is shared across all logical databases. So now, if a customer's Rocket.Chat is hacked into executing arbitrary code (e.g. due to a vulnerability in Node or whatever), an attacker can gain read access to the shared oplog and steal information about all customer databases. Not good... :( I'd strongly vote to make this feature optional ASAP.

If you use a username/password for each database, you will be unable to subscribe to events from another database.

How to use Rocket.Chat with Oplog

mongo mongo/rocketchat --eval "rs.initiate({ _id: 'rs0', members: [ { _id: 0, host: 'localhost:27017' } ]})"

Docker Compose

To start a MongoDB replica set via Docker Compose, you need an additional job that executes the `rs.initiate` command, and you need to add the `MONGO_OPLOG_URL` env var to the Rocket.Chat config:
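A sketch of such a job, modeled on the `mongo-init-replica` service that the repository's docker-compose.yml used around this time, is shown below; merge it into your existing `services`. It assumes a `mongo` service already started with `--replSet rs0`. Because `depends_on` only waits for the container to start, not for `mongod` to accept connections, the command retries up to 30 times. Inside the single-quoted YAML string, `''` is YAML's escape for one `'` (so MongoDB receives `'rs0'`), and `$$` escapes `$` from Compose variable interpolation.

```yaml
services:
  mongo-init-replica:
    image: mongo:4.0
    # One-shot job: initiate the single-member replica set "rs0", retrying while
    # mongod is still starting, then exit. It does not stay running.
    command: 'bash -c "for i in `seq 1 30`; do mongo mongo/rocketchat --eval \"rs.initiate({ _id: ''rs0'', members: [ { _id: 0, host: ''localhost:27017'' } ]})\" && s=$$? && break || s=$$?; echo \"Tried $$i times. Waiting 5 secs...\"; sleep 5; done; (exit $$s)"'
    depends_on:
      - mongo

  rocketchat:
    environment:
      - MONGO_URL=mongodb://mongo:27017/rocketchat
      # Enables oplog tailing; points at the "local" database of the set member.
      - MONGO_OPLOG_URL=mongodb://mongo:27017/local
    depends_on:
      - mongo
```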