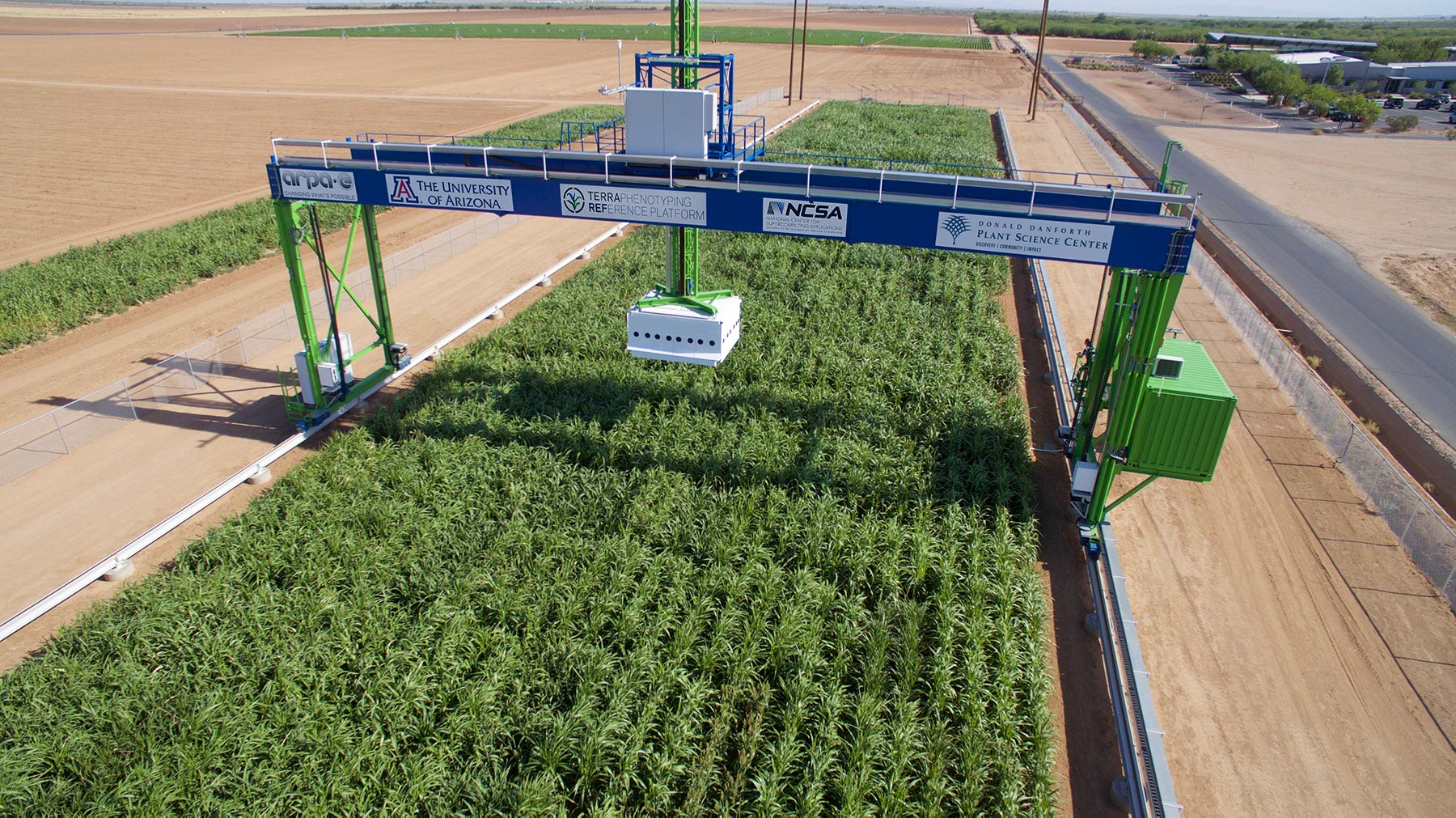

Data in the SGxP benchmark comes from the TERRA-REF Project and field, shown here with sorghum growing.

Large-scale field phenotyping has the potential to answer important questions about the relationship between plant genotype and plant phenotype. Computational approaches to measuring the phenotype (the observable plant features) are required to address the problem at scale, but machine learning approaches to extracting phenotypes from sensor data have been hampered by limited access to (a) sufficiently large, organized multi-sensor datasets, (b) large-scale field trials with a significant number of genotypes, (c) full genetic sequencing of the plants in those trials, and (d) datasets organized well enough that algorithm-centered researchers can directly address the real biological problems.

To address this, we present SGxP, a novel benchmark dataset from a large-scale field trial. It includes the complete genotypes of over 300 sorghum varieties and time series of RGB and laser 3D scanner imagery from several field plots growing each variety. To lower the barrier to entry and facilitate further development, we provide well-organized multi-sensor imagery and the corresponding genomic data. We implement deep-learning-based phenotyping approaches to establish baseline results, for individual sensors and for multi-sensor fusion, on detecting genetic mutations with known impacts. We also provide and support an open-ended challenge by identifying thousands of genetic mutations whose phenotypic impacts are currently unknown. A web interface where machine learning researchers and practitioners can share approaches, visualizations and hypotheses supports engagement with plant biologists to further the understanding of the sorghum genotype × phenotype relationship.

This repository includes code to load data from the SGxP benchmark and to reproduce the baseline results reported in the paper.

The full dataset, leaderboard (including baseline results) and discussion forums can be found at http://sorghumsnpbenchmark.com.

To quickly get started with the sample dataset, follow these steps:

- Clone the repository to your local machine and change into it:

  ```bash
  git clone https://github.com/SLUVisLab/sorghum_snp_prediction.git
  cd sorghum_snp_prediction
  ```

- Install the required packages:

  ```bash
  pip install -r requirements_dataset_tools.txt
  ```

- Download the sample dataset:

  ```python
  from dataset_tools import SorghumSNPDataset

  # sample_ds=True selects the sample dataset; download=True downloads it
  ds = SorghumSNPDataset('path/to/the/dataset', sample_ds=True, sensor='rgb', train=True, download=True)
  ```

Details: Sample dataset | Image metadata README

For the full dataset, note that because of the size of the images, the code does not download the images directly. Follow these steps instead:

- Download the images: images.tar.gz (230GB)

- Organize the images: place the downloaded file `images.tar.gz` under the dataset folder and untar it (for example, run `tar -xzf images.tar.gz` from inside that folder). The folder structure should look like this:

  ```
  path/to/dataset/images/[sensor]/[cultivar]/[image]
  ```

- Download all the labels: after setting up the images, use the provided code to download all the labels:

  ```python
  from dataset_tools import SorghumSNPDataset

  ds = SorghumSNPDataset('path/to/dataset/', marker='known', sensor='rgb', train=True, download=True)
  ```
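To double-check the image layout described in the steps above, you can use the minimal sketch below. It is not part of `dataset_tools` and uses only the Python standard library. It unpacks `images.tar.gz` (equivalent to running `tar -xzf`) and then counts image files per cultivar for each sensor folder, assuming the `images/[sensor]/[cultivar]/[image]` structure. `extract_archive` and `summarize_layout` are hypothetical helper names, and `path/to/dataset` is a placeholder.

```python
"""Unpack images.tar.gz and summarize the images/[sensor]/[cultivar]/[image] layout.

Hypothetical helpers, not part of dataset_tools; standard library only.
"""
from __future__ import annotations

import tarfile
from collections import Counter
from pathlib import Path


def extract_archive(archive: Path, dest: Path) -> None:
    """Extract a gzipped tar archive into dest (like `tar -xzf archive -C dest`)."""
    with tarfile.open(archive, "r:gz") as tar:
        if hasattr(tarfile, "data_filter"):
            # Python versions with extraction filters: refuse unsafe members.
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)


def summarize_layout(dataset_dir: Path) -> dict[str, Counter]:
    """Return {sensor: Counter({cultivar: number_of_files})} under dataset_dir/images."""
    images_dir = dataset_dir / "images"
    if not images_dir.is_dir():
        raise FileNotFoundError(f"expected an 'images' folder in {dataset_dir}")
    summary: dict[str, Counter] = {}
    for sensor_dir in sorted(p for p in images_dir.iterdir() if p.is_dir()):
        counts: Counter = Counter()
        for cultivar_dir in sorted(p for p in sensor_dir.iterdir() if p.is_dir()):
            # Count regular files only; nested folders are ignored.
            counts[cultivar_dir.name] = sum(1 for f in cultivar_dir.iterdir() if f.is_file())
        summary[sensor_dir.name] = counts
    return summary


# Usage, with the placeholder path from the steps above:
# dataset_dir = Path("path/to/dataset")
# extract_archive(dataset_dir / "images.tar.gz", dataset_dir)
# for sensor, counts in summarize_layout(dataset_dir).items():
#     print(f"{sensor}: {len(counts)} cultivars, {sum(counts.values())} files")
```

A sensor folder with no cultivars, or a cultivar with zero files, usually means the archive was extracted into the wrong directory.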

Once you have the dataset downloaded and organized, you can obtain image paths and labels specific to a marker by either its name or index:

```python
# By marker name
img_paths, labels = ds['sobic_001G269200_1_51589435']

# By marker index
img_paths, labels = ds[0]
```

Once you have downloaded the dataset (full or sample), you can use the following code to obtain paths to image pairs and labels for the marker-specific multimodal dataset:

```python
from dataset_tools import SorghumSNPMultimodalDataset

ds = SorghumSNPMultimodalDataset('path/to/the/dataset', marker='known', sample_ds=False, train=True)

img_pair_paths, labels = ds[0]
```

For all of the tasks included in the SGxP benchmark, we report the performance of a baseline model that was pretrained on TERRA-REF imagery from a different season (see the paper for more details).

You can download the pre-trained weights for each sensor: baseline_model_and_ebd.tar.gz

To reproduce the baseline results using these models, first clone this repository and then follow these steps:

- Install the required packages:

  ```bash
  pip install -r requirements.txt
  ```

- Put the pretrained models under `results/model/`.
- Run `notebooks/gene_embeddings.ipynb` to get the image embeddings.
- Run `known_gene_pred.ipynb` to compute the accuracy for each genetic marker.
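The notebooks above report accuracy for each genetic marker. To get a quick per-marker number for predictions from your own model, you can use the minimal sketch below. It is not code from the repository. It assumes that, for each marker, you already have the true labels and your predicted labels as equal-length sequences. `per_marker_report` and the marker name in the usage comment are hypothetical. The majority-class accuracy is an optional sanity check: a marker whose labels are dominated by one class can give high accuracy even for a trivial predictor.

```python
"""Per-marker accuracy with a majority-class sanity check (hypothetical helpers)."""
from __future__ import annotations

from collections import Counter
from collections.abc import Hashable, Mapping, Sequence


def accuracy(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Fraction of positions where the prediction equals the true label."""
    if len(y_true) == 0 or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def majority_accuracy(y_true: Sequence[Hashable]) -> float:
    """Accuracy of always predicting the most frequent true label."""
    if len(y_true) == 0:
        raise ValueError("y_true must be non-empty")
    _, count = Counter(y_true).most_common(1)[0]
    return count / len(y_true)


def per_marker_report(
    results: Mapping[str, tuple[Sequence[Hashable], Sequence[Hashable]]],
) -> dict[str, tuple[float, float]]:
    """Map each marker name to (model accuracy, majority-class accuracy)."""
    return {
        marker: (accuracy(y_true, y_pred), majority_accuracy(y_true))
        for marker, (y_true, y_pred) in results.items()
    }


# Usage with made-up labels for a hypothetical marker:
# per_marker_report({"marker_a": ([0, 1, 1, 0], [0, 1, 0, 0])})
# -> {"marker_a": (0.75, 0.5)}
```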

If you prefer to train your own model, we provide imagery of entirely different sorghum lines grown in a different season under the TERRA-REF gantry, so there is no risk of data leakage into the SGxP benchmark tasks. You can download the imagery here: genetic_marker_pretrain_dataset.tar.gz (70GB).
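Because the pretraining imagery comes from different sorghum lines, no cultivar should appear in both image sets. The hypothetical check below compares the per-cultivar subfolder names of two sensor folders and returns any names they share. It assumes that `genetic_marker_pretrain_dataset.tar.gz`, once unpacked, also uses one subfolder per cultivar. This holds for the benchmark images (`images/[sensor]/[cultivar]/[image]`) but is not documented here for the pretraining archive. The helper names and paths are placeholders.

```python
"""Check that two image folders share no cultivar subfolder names (hypothetical helpers)."""
from __future__ import annotations

from pathlib import Path


def cultivar_names(sensor_dir: Path) -> set[str]:
    """Names of the immediate subfolders of sensor_dir (assumed: one per cultivar)."""
    return {p.name for p in sensor_dir.iterdir() if p.is_dir()}


def shared_cultivars(benchmark_dir: Path, pretrain_dir: Path) -> set[str]:
    """Cultivar folder names present in both trees; expected to be empty."""
    return cultivar_names(benchmark_dir) & cultivar_names(pretrain_dir)


# Usage (both paths are placeholders; the pretraining layout is an assumption):
# overlap = shared_cultivars(Path("path/to/dataset/images/<sensor>"),
#                            Path("path/to/pretrain_images/<sensor>"))
# print(sorted(overlap) or "no shared cultivar names")
```

An empty result only means that no folder names match. If the two sets name cultivars differently, real overlap would not show up, so treat this as a quick sanity check rather than proof that the sets are disjoint.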

After you have downloaded the images and cloned this repository, follow these steps:

- Modify the dataset folder location in `tasks/s9_pretrain_rgb_jpg_res50_512_softmax_ebd.py` and `tasks/s9_pretrain_scnr3d_jpg_res50_512_softmax_ebd.py` to point to the location where you downloaded the imagery.
- Run the fine-tuning for each sensor:

  ```bash
  # RGB model fine-tuning
  python -m tasks.s9_pretrain_rgb_jpg_res50_512_softmax_ebd
  # 3D scanner model fine-tuning
  python -m tasks.s9_pretrain_scnr3d_jpg_res50_512_softmax_ebd
  ```
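To launch both fine-tuning runs back to back, for example from a batch job, a small driver script is one option. This minimal sketch is not part of the repository. It wraps the two `python -m` commands above with the standard-library `subprocess` module and uses the current interpreter by default. It stops at the first run that exits with an error. It assumes you start it from the repository root after editing the dataset paths. `build_commands` and `run_all` are hypothetical names.

```python
"""Run both fine-tuning tasks one after another (hypothetical driver script)."""
from __future__ import annotations

import subprocess
import sys

# Module names taken from the commands above.
TASK_MODULES = (
    "tasks.s9_pretrain_rgb_jpg_res50_512_softmax_ebd",  # RGB
    "tasks.s9_pretrain_scnr3d_jpg_res50_512_softmax_ebd",  # 3D scanner
)


def build_commands(modules=TASK_MODULES, python: str = sys.executable) -> list[list[str]]:
    """One `python -m <module>` command per task, using the current interpreter by default."""
    return [[python, "-m", module] for module in modules]


def run_all(commands: list[list[str]]) -> None:
    """Run commands in order; check=True raises CalledProcessError on the first failure."""
    for cmd in commands:
        print("running:", " ".join(cmd), flush=True)
        subprocess.run(cmd, check=True)


# Usage, from the repository root after editing the dataset paths:
# run_all(build_commands())
```

Running each task as a separate process, rather than importing it, keeps its behavior the same as invoking `python -m` by hand.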

The paper that details the SGxP Benchmark, "SG×P : A Sorghum Genotype × Phenotype Prediction Dataset and Benchmark", is currently under review at NeurIPS 2023 and can be found at https://openreview.net/pdf?id=dOeBYjxSoq.