Explore the Pro version of Prowler at prowler.pro

Prowler is an Open Source security tool to perform AWS security best practices assessments, audits, incident response, continuous monitoring, hardening and forensics readiness. It contains more than 240 controls covering CIS, PCI-DSS, ISO27001, GDPR, HIPAA, FFIEC, SOC2, AWS FTR, ENS and custom security frameworks.

- Description

- Features

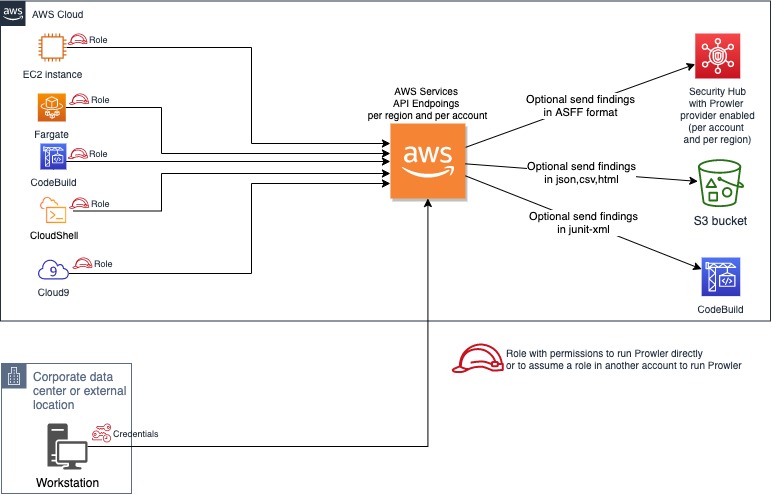

- High level architecture

- Requirements and Installation

- Usage

- Screenshots

- Advanced Usage

- Security Hub integration

- CodeBuild deployment

- Allowlist

- Inventory

- Fix

- Troubleshooting

- Extras

- Forensics Ready Checks

- GDPR Checks

- HIPAA Checks

- Trust Boundaries Checks

- Multi Account and Continuous Monitoring

- Custom Checks

- Third Party Integrations

- Full list of checks and groups

- License

Prowler is a command line tool that helps you with AWS security assessment, auditing, hardening and incident response.

It follows the guidelines of the CIS Amazon Web Services Foundations Benchmark (49 checks) and has more than 190 additional checks, including checks related to GDPR, HIPAA, PCI-DSS, ISO-27001, FFIEC, SOC2 and others.

Read more about CIS Amazon Web Services Foundations Benchmark v1.2.0 - 05-23-2018

More than 240 checks covering security best practices across all AWS regions and most AWS services, organized into the following groups:

- Identity and Access Management [group1]

- Logging [group2]

- Monitoring [group3]

- Networking [group4]

- CIS Level 1 [cislevel1]

- CIS Level 2 [cislevel2]

- Extras, see the Extras section [extras]

- Forensics related group of checks [forensics-ready]

- GDPR [gdpr] Read more here

- HIPAA [hipaa] Read more here

- Trust Boundaries [trustboundaries] Read more here

- Secrets

- Internet exposed resources

- EKS-CIS

- Also includes PCI-DSS, ISO-27001, FFIEC, SOC2, ENS (Esquema Nacional de Seguridad of Spain).

- AWS FTR [FTR] Read more here

With Prowler you can:

- Get a direct colorful or monochrome report

- Get an HTML, CSV, JUnit, JSON or JSON ASFF (Security Hub) format report

- Send findings directly to Security Hub

- Run specific checks and groups or create your own

- Check multiple AWS accounts in parallel or sequentially

- Get an inventory of your AWS resources

- And more! Read examples below

You can run Prowler from your workstation, an EC2 instance, Fargate or any other container, CodeBuild, CloudShell and Cloud9.

Prowler is written in bash, uses AWS-CLI underneath, and works on Linux, macOS, or Windows with Cygwin or virtualization. It also requires jq and detect-secrets to work properly.

- Make sure the latest version of AWS-CLI and the other required components are installed, with Python pip already available. Prowler works with either AWS-CLI v1 or v2; however, the latest v2 is recommended if you use new regions, since they require STS v2 tokens.

- For Amazon Linux (`yum` based Linux distributions and AWS CLI v2):

      sudo yum update -y
      sudo yum remove -y awscli
      curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
      unzip awscliv2.zip
      sudo ./aws/install
      sudo yum install -y python3 jq git
      sudo pip3 install detect-secrets==1.0.3
      git clone https://github.com/prowler-cloud/prowler

- For Ubuntu Linux (`apt` based Linux distributions and AWS CLI v2):

      sudo apt update
      sudo apt install python3 python3-pip jq git zip
      pip install detect-secrets==1.0.3
      curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
      unzip awscliv2.zip
      sudo ./aws/install
      git clone https://github.com/prowler-cloud/prowler

  NOTE: The Yelp version of detect-secrets is no longer supported; the one from IBM is maintained now. Use the IBM version shown below, or the specific Yelp version 1.0.3 (`pip install detect-secrets==1.0.3`), to make sure it works as expected:

      pip install "git+https://github.com/ibm/detect-secrets.git@master#egg=detect-secrets"

  AWS-CLI can also be installed using other methods; refer to the official documentation for more details: https://aws.amazon.com/cli/. However, `detect-secrets` has to be installed using `pip` or `pip3`.

- Once the Prowler repository is cloned, change into its folder and run it:

      cd prowler
      ./prowler

- Since Prowler uses AWS CLI under the hood, you can follow any authentication method as described here. Make sure you have properly configured your AWS-CLI with a valid Access Key and Region, or declare the AWS variables properly (or use an instance profile/role):

      aws configure

  or

      export AWS_ACCESS_KEY_ID="ASXXXXXXX"
      export AWS_SECRET_ACCESS_KEY="XXXXXXXXX"
      export AWS_SESSION_TOKEN="XXXXXXXXX"

- Those credentials must be associated with a user or role that has the proper permissions to run all checks. To make sure, add the AWS managed policies SecurityAudit and ViewOnlyAccess to the user or role being used. The policy ARNs are:

      arn:aws:iam::aws:policy/SecurityAudit
      arn:aws:iam::aws:policy/job-function/ViewOnlyAccess

  Additional permissions needed: to make sure Prowler can scan all services included in the Extras group, also attach the custom policy prowler-additions-policy.json to the role you are using. If you want Prowler to send findings to AWS Security Hub, also attach the custom policy prowler-security-hub.json.

- Run the `prowler` command without options. It will use your environment variable credentials if they exist, or default to the `~/.aws/credentials` file, and run checks over all regions when needed. The default region is us-east-1:

      ./prowler

  Use `-l` to list all available checks and the groups (sections) that reference them. To list all groups use `-L`, and to list the content of a group use `-l -g <groupname>`.

  If you want to avoid installing dependencies, run it using Docker:

      docker run -ti --rm --name prowler --env AWS_ACCESS_KEY_ID --env AWS_SECRET_ACCESS_KEY --env AWS_SESSION_TOKEN toniblyx/prowler:latest

  If you want to keep the reports created by Prowler, use the Docker volume option as in the example below:

      docker run -ti --rm -v /your/local/output:/prowler/output --name prowler --env AWS_ACCESS_KEY_ID --env AWS_SECRET_ACCESS_KEY --env AWS_SESSION_TOKEN toniblyx/prowler:latest -g hipaa -M csv,json,html

- For a custom AWS-CLI profile and region, use the following (it will use your custom profile and run checks over all regions when needed):

      ./prowler -p custom-profile -r us-east-1

- For a single check use option `-c`:

      ./prowler -c check310

  With Docker:

      docker run -ti --rm --name prowler --env AWS_ACCESS_KEY_ID --env AWS_SECRET_ACCESS_KEY --env AWS_SESSION_TOKEN toniblyx/prowler:latest "-c check310"

  or multiple checks separated by commas:

      ./prowler -c check310,check722

  or all checks except some of them:

      ./prowler -E check42,check43

  or for a custom profile and region:

      ./prowler -p custom-profile -r us-east-1 -c check11

  or for a group of checks use the group name:

      ./prowler -g group1 # for iam related checks

  or exclude some checks in the group:

      ./prowler -g group4 -E check42,check43

Valid check numbers are based on the AWS CIS Benchmark guide, so 1.1 is check11 and 3.10 is check310
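The numbering rule above can be sketched as a small shell function. This is only an illustration: `cis_to_check_id` is a hypothetical helper, not something Prowler ships, and it assumes control numbers always have the form `<digits>.<digits>`.

```shell
# Hypothetical helper (not part of Prowler): convert a CIS control
# number such as "3.10" into the check ID form used with -c.
cis_to_check_id() {
  case "$1" in
    '' | *[!0-9.]* | *.*.* | .* | *.)
      # Empty, non-numeric, more than one dot, or a dot at either end.
      echo "invalid CIS control number: $1" >&2
      return 1 ;;
    *.*)
      # Drop the dot and add the "check" prefix.
      printf 'check%s\n' "$(printf '%s' "$1" | tr -d '.')" ;;
    *)
      # No dot at all, so not a section.control number.
      echo "invalid CIS control number: $1" >&2
      return 1 ;;
  esac
}

cis_to_check_id 1.1    # prints check11
cis_to_check_id 3.10   # prints check310
```

The result could then be passed on, for example as `./prowler -c "$(cis_to_check_id 3.10)"`.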

By default, Prowler scans all available opt-in regions, which may take a long time depending on the number of resources and regions used. The same applies to GovCloud or China regions. See the Advanced Usage section below for examples.

Prowler has two parameters related to regions: `-r`, which sets the region used to query AWS service API endpoints (it uses us-east-1 by default and is required for GovCloud or China), and `-f`, which filters the regions you want to scan. For example, to scan Dublin only use `-f eu-west-1`, and to scan Dublin and N. Virginia use `-f eu-west-1,us-east-1`. Note that the regions are separated by commas (the quoted form `-f 'eu-west-1,us-east-1'` also works).

-

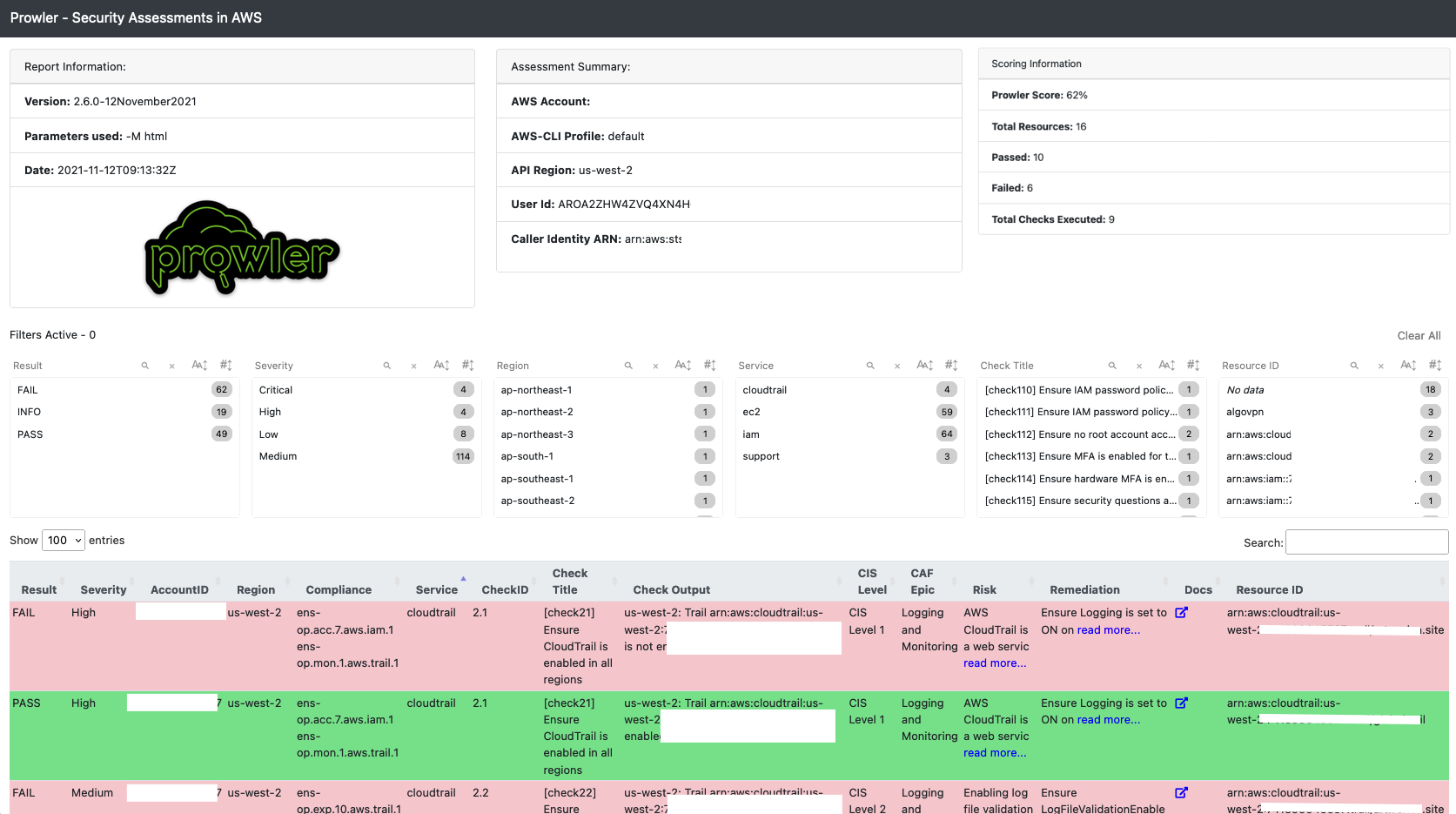

Sample screenshot of default console report first lines of command

./prowler:

-

Sample screenshot of the html output

-M html:

-

Sample screenshot of the Quicksight dashboard, see quicksight-security-dashboard.workshop.aws:

-

Sample screenshot of the junit-xml output in CodeBuild

-M junit-xml:

- If you want to save your report for later analysis, there are several ways to do it. Natively, Prowler supports the text, mono, csv, json, json-asff, junit-xml and html formats (see the note below for more info):

      ./prowler -M csv

  or with multiple formats at the same time:

      ./prowler -M csv,json,json-asff,html

  or just a group of checks in multiple formats:

      ./prowler -g gdpr -M csv,json,json-asff

  or if you want a sorted and dynamic HTML report:

      ./prowler -M html

  Now `-M` creates a file inside the prowler `output` directory named `prowler-output-AWSACCOUNTID-YYYYMMDDHHMMSS.format`. You don't have to specify anything else: no pipes, no redirects.

  You can also just save the output to a file, as below:

      ./prowler -M mono > prowler-report.txt

  To generate JUnit report files, include the junit-xml format. This can be combined with any other format. Files are written to a directory named `junit-reports` inside the prowler root directory:

      ./prowler -M text,junit-xml

  Note about output formats to use with `-M`: "text" is the default one, with colors; "mono" is like the default one but monochrome; "csv" is comma-separated values; "json" is plain basic JSON (without commas between lines); and "json-asff" is also JSON, in the AWS Security Finding Format, which you can ship to Security Hub using `-S`.

  To save your report in an S3 bucket, use `-B` to define a custom output bucket along with `-M` to define the output format that is going to be uploaded to S3:

      ./prowler -M csv -B my-bucket/folder/

  If you want to use the initial credentials rather than the assumed role credentials to put the reports into the S3 bucket, use `-D` instead of `-B`. Make sure that the credentials used have s3:PutObject permissions on the S3 path where the reports are going to be uploaded.

  When generating multiple formats and running using Docker, bind a local directory to the container to retrieve the reports, e.g.:

      docker run -ti --rm --name prowler --volume "$(pwd)":/prowler/output --env AWS_ACCESS_KEY_ID --env AWS_SECRET_ACCESS_KEY --env AWS_SESSION_TOKEN toniblyx/prowler:latest -M csv,json

- To perform an assessment based on CIS Profile Definitions you can use cislevel1 or cislevel2 with the `-g` flag (more information about this here, page 8):

      ./prowler -g cislevel1

- If you want to run Prowler to check multiple AWS accounts in parallel (the example below runs up to 4 simultaneously with `-P 4`), use the command below. You may want to read the Advanced Usage section further down to do so assuming a role:

      grep -E '^\[([0-9A-Za-z_-]+)\]' ~/.aws/credentials | tr -d '][' | shuf | \
      xargs -n 1 -L 1 -I @ -r -P 4 ./prowler -p @ -M csv 2> /dev/null >> all-accounts.csv

- For help about usage run:

      ./prowler -h

You can send Prowler's output to different databases (right now only PostgreSQL is supported).

Jump into the section for the database provider you want to use and follow the required steps to configure it.

Install psql:

- Mac -> `brew install libpq`

- Ubuntu -> `sudo apt-get install postgresql-client`

- RHEL/Centos -> `sudo yum install postgresql10`

### Audit ID Field

Prowler can add an optional audit_id field to identify each audit that has been made in the database. You can do this by adding the -u audit_id flag to the prowler command.

There are two options to pass the PostgreSQL credentials to Prowler:

- Configure a `~/.pgpass` file in the home directory of the user that is going to launch Prowler (pgpass file doc), including an extra field at the end of the line, separated by `:`, to name the table, using the following format:

      hostname:port:database:username:password:table

- Configure the following environment variables:

  - `POSTGRES_HOST`
  - `POSTGRES_PORT`
  - `POSTGRES_USER`
  - `POSTGRES_PASSWORD`
  - `POSTGRES_DB`
  - `POSTGRES_TABLE`

Note: If you are using a schema other than postgres, include it at the beginning of the `POSTGRES_TABLE` variable, like:

    export POSTGRES_TABLE=prowler.findings
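To make the extended `~/.pgpass` line format from the first option more concrete, here is a minimal sketch that splits one such line into its six fields. The function name `parse_pgpass_line`, the `pg_*` variable names and the sample values are all assumptions made for illustration; this is not how Prowler itself reads the file.

```shell
# Hypothetical helper (not part of Prowler): split one extended
# ~/.pgpass line (hostname:port:database:username:password:table)
# into six shell variables.
parse_pgpass_line() {
  # Limitation of this sketch: a real .pgpass entry may escape ":"
  # as "\:", which is not handled here.
  IFS=: read -r pg_host pg_port pg_db pg_user pg_password pg_table <<EOF
$1
EOF
  # The sixth field (the table name) is the extension to the standard format.
  if [ -z "$pg_table" ]; then
    echo "missing table field" >&2
    return 1
  fi
}

# Made-up values for illustration only.
parse_pgpass_line 'localhost:5432:prowler_db:prowler:secret:prowler_findings'
echo "host=$pg_host port=$pg_port db=$pg_db table=$pg_table"
# prints host=localhost port=5432 db=prowler_db table=prowler_findings
```

A line with only the five standard `.pgpass` fields is rejected, since the table name would be missing.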

You also need to have the uuid PostgreSQL extension enabled. To enable it:

CREATE EXTENSION IF NOT EXISTS "uuid-ossp";

Create a table in your PostgreSQL database to store Prowler's data. You can use the following SQL statement to create the table:

CREATE TABLE IF NOT EXISTS prowler_findings (

id uuid,

audit_id uuid ,

profile text,

account_number text,

region text,

check_id text,

result text,

item_scored text,

item_level text,

check_title text,

result_extended text,

check_asff_compliance_type text,

severity text,

service_name text,

check_asff_resource_type text,

check_asff_type text,

risk text,

remediation text,

documentation text,

check_caf_epic text,

resource_id text,

account_details_email text,

account_details_name text,

account_details_arn text,

account_details_org text,

account_details_tags text,

prowler_start_time text

);

- Execute Prowler with the `-d` flag, for example:

      ./prowler -M csv -d postgresql

  Note: This command creates a `csv` output file and stores the Prowler output in the configured PostgreSQL DB. It is only an example; the `-d` flag does not require `-M` to run.

Prowler natively supports the following output formats:

- CSV

- JSON

- JSON-ASFF

- HTML

- JUNIT-XML

The structure of each of them is shown below.

| PROFILE | ACCOUNT_NUM | REGION | TITLE_ID | CHECK_RESULT | ITEM_SCORED | ITEM_LEVEL | TITLE_TEXT | CHECK_RESULT_EXTENDED | CHECK_ASFF_COMPLIANCE_TYPE | CHECK_SEVERITY | CHECK_SERVICENAME | CHECK_ASFF_RESOURCE_TYPE | CHECK_ASFF_TYPE | CHECK_RISK | CHECK_REMEDIATION | CHECK_DOC | CHECK_CAF_EPIC | CHECK_RESOURCE_ID | PROWLER_START_TIME | ACCOUNT_DETAILS_EMAIL | ACCOUNT_DETAILS_NAME | ACCOUNT_DETAILS_ARN | ACCOUNT_DETAILS_ORG | ACCOUNT_DETAILS_TAGS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

{

"Profile": "ENV",

"Account Number": "1111111111111",

"Control": "[check14] Ensure access keys are rotated every 90 days or less",

"Message": "us-west-2: user has not rotated access key 2 in over 90 days",

"Severity": "Medium",

"Status": "FAIL",

"Scored": "",

"Level": "CIS Level 1",

"Control ID": "1.4",

"Region": "us-west-2",

"Timestamp": "2022-05-18T10:33:48Z",

"Compliance": "ens-op.acc.1.aws.iam.4 ens-op.acc.5.aws.iam.3",

"Service": "iam",

"CAF Epic": "IAM",

"Risk": "Access keys consist of an access key ID and secret access key which are used to sign programmatic requests that you make to AWS. AWS users need their own access keys to make programmatic calls to AWS from the AWS Command Line Interface (AWS CLI)- Tools for Windows PowerShell- the AWS SDKs- or direct HTTP calls using the APIs for individual AWS services. It is recommended that all access keys be regularly rotated.",

"Remediation": "Use the credential report to ensure access_key_X_last_rotated is less than 90 days ago.",

"Doc link": "https://docs.aws.amazon.com/IAM/latest/UserGuide/id_credentials_getting-report.html",

"Resource ID": "terraform-user",

"Account Email": "",

"Account Name": "",

"Account ARN": "",

"Account Organization": "",

"Account tags": ""

}

NOTE: Each finding is a `json` object.

{

"SchemaVersion": "2018-10-08",

"Id": "prowler-1.4-1111111111111-us-west-2-us-west-2_user_has_not_rotated_access_key_2_in_over_90_days",

"ProductArn": "arn:aws:securityhub:us-west-2::product/prowler/prowler",

"RecordState": "ACTIVE",

"ProductFields": {

"ProviderName": "Prowler",

"ProviderVersion": "2.9.0-13April2022",

"ProwlerResourceName": "user"

},

"GeneratorId": "prowler-check14",

"AwsAccountId": "1111111111111",

"Types": [

"ens-op.acc.1.aws.iam.4 ens-op.acc.5.aws.iam.3"

],

"FirstObservedAt": "2022-05-18T10:33:48Z",

"UpdatedAt": "2022-05-18T10:33:48Z",

"CreatedAt": "2022-05-18T10:33:48Z",

"Severity": {

"Label": "MEDIUM"

},

"Title": "iam.[check14] Ensure access keys are rotated every 90 days or less",

"Description": "us-west-2: user has not rotated access key 2 in over 90 days",

"Resources": [

{

"Type": "AwsIamUser",

"Id": "user",

"Partition": "aws",

"Region": "us-west-2"

}

],

"Compliance": {

"Status": "FAILED",

"RelatedRequirements": [

"ens-op.acc.1.aws.iam.4 ens-op.acc.5.aws.iam.3"

]

}

}

NOTE: Each finding is a `json` object.

Prowler uses the AWS CLI underneath, so it uses the same authentication methods. However, there are a few ways to run Prowler against multiple accounts using the IAM Assume Role feature, depending on each use case. You can set up a custom profile inside `~/.aws/config` with all the needed information about the role to assume, then call it with `./prowler -p your-custom-profile`. Additionally, you can use `-A 123456789012` and `-R RemoteRoleToAssume`, and Prowler will get temporary credentials using `aws sts assume-role`, set them up as environment variables and run against that account. To make it easier to create a role to assume in multiple accounts, either as a CFN Stack or StackSet, look at this CloudFormation template and adapt it.

    ./prowler -A 123456789012 -R ProwlerRole

    ./prowler -A 123456789012 -R ProwlerRole -I 123456

NOTE 1 about Session Duration: By default Prowler gets credentials valid for 1 hour (3600 seconds). Depending on the number of checks you run and the size of your infrastructure, Prowler may require more than 1 hour to finish. Use option `-T <seconds>` to allow up to 12h (43200 seconds). To allow more than 1h you need to modify "Maximum CLI/API session duration" for that particular role, read more here.

NOTE 2 about Session Duration: Bear in mind that if you are using roles assumed by role chaining there is a hard limit of 1 hour, so consider not using role chaining if possible. Read more about that in footnote 1 below the table here.
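As a rough sketch of the limits described in the two notes above, the following function converts a number of hours into a value suitable for `-T` and rejects anything outside the 1h to 12h range. `session_seconds` is a hypothetical helper and not a Prowler feature; restricting the input to whole hours is a simplification made for this example.

```shell
# Hypothetical helper (not part of Prowler): convert whole hours into
# seconds for -T, enforcing the 1h default and the 12h maximum
# mentioned in the notes above.
session_seconds() {
  hours="$1"
  case "$hours" in
    '' | 0* | *[!0-9]*)
      # Empty, zero or leading zero, or not a plain positive integer.
      echo "hours must be a whole number from 1 to 12" >&2
      return 1 ;;
  esac
  if [ "$hours" -gt 12 ]; then
    echo "maximum session duration is 12h (43200 seconds)" >&2
    return 1
  fi
  echo $((hours * 3600))
}

session_seconds 1    # prints 3600
session_seconds 12   # prints 43200
```

It could be combined with the commands above as `./prowler -A 123456789012 -R ProwlerRole -T "$(session_seconds 4)"`. The role's "Maximum CLI/API session duration" still has to allow the requested value, and role chaining remains limited to 1 hour.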

For example, to get only the fails in CSV format from all checks regarding RDS, without the banner, from the AWS account 123456789012, assuming the role RemoteRoleToAssume with a fixed session duration of 1h:

    ./prowler -A 123456789012 -R RemoteRoleToAssume -T 3600 -b -M csv -q -g rds

or with a given External ID:

    ./prowler -A 123456789012 -R RemoteRoleToAssume -T 3600 -I 123456 -b -M csv -q -g rds

If you want to run Prowler, or just a check or a group, across all accounts of AWS Organizations, you can do this:

First get a list of accounts that are not suspended:

    ACCOUNTS_IN_ORGS=$(aws organizations list-accounts --query 'Accounts[?Status==`ACTIVE`].Id' --output text)

Then run Prowler to assume a role (same in all members) per each account, in this example it is just running one particular check:

for accountId in $ACCOUNTS_IN_ORGS; do ./prowler -A $accountId -R RemoteRoleToAssume -c extra79; done

The same for loop can scan a list of accounts held in a variable, such as ACCOUNTS_LIST='11111111111 2222222222 333333333'.
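Before launching a long multi-account run, it can help to preview the commands the loop would execute. The sketch below is a dry run only: `print_scan_commands` is a hypothetical helper that echoes each command instead of calling Prowler, reusing the placeholder role and check names from the text above.

```shell
# Hypothetical dry-run helper (not part of Prowler): print the command
# that would be run for each account ID instead of executing it.
print_scan_commands() {
  role="$1"
  check="$2"
  shift 2
  for account_id in "$@"; do
    echo "./prowler -A $account_id -R $role -c $check"
  done
}

ACCOUNTS_LIST='11111111111 2222222222 333333333'
# $ACCOUNTS_LIST is left unquoted on purpose so that it is split
# into one argument per account ID.
print_scan_commands RemoteRoleToAssume extra79 $ACCOUNTS_LIST
```

This prints one `./prowler -A ... -R RemoteRoleToAssume -c extra79` line per account, which can be reviewed before running the real loop.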

From Prowler v2.8, you can get additional information about the scanned account in the CSV and JSON outputs. When scanning a single account you get the Account ID as part of the output. If you have AWS Organizations and are scanning multiple accounts using the assume role functionality, Prowler can also get account details such as Account Name, Email, ARN, Organization ID and Tags, and you will have them next to every finding in the CSV and JSON outputs.

To do that, use the new option `-O <management account id>`. It requires `-R <role to assume>` and also needs the permissions `organizations:ListAccounts*` and `organizations:ListTagsForResource`. See the following sample command:

./prowler -R ProwlerScanRole -A 111111111111 -O 222222222222 -M json,csv

With that command, Prowler scans the account 111111111111 assuming the role ProwlerScanRole, gets the account details from the AWS Organizations management account 222222222222 assuming the same role ProwlerScanRole, and creates two reports with those details, in JSON and CSV.

In the (redacted) JSON output below you can see the tags encoded in base64, which prevents them from breaking the CSV or JSON format:

    "Account Email": "my-prod-account@domain.com",
    "Account Name": "my-prod-account",
    "Account ARN": "arn:aws:organizations::222222222222:account/o-abcde1234/111111111111",
    "Account Organization": "o-abcde1234",
    "Account tags": "\"eyJUYWdzIjpasf0=\""

The additional fields in the CSV header output are as follows:

ACCOUNT_DETAILS_EMAIL,ACCOUNT_DETAILS_NAME,ACCOUNT_DETAILS_ARN,ACCOUNT_DETAILS_ORG,ACCOUNT_DETAILS_TAGS
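Since the tags are stored base64-encoded, they need decoding before they can be read. The sketch below shows one possible way to do it; `decode_account_tags` is a hypothetical helper, and the tag data is made up because the value shown in the text is redacted. It assumes a `base64` command that accepts `--decode`.

```shell
# Hypothetical helper (not part of Prowler): strip the surrounding
# (possibly backslash-escaped) double quotes from an "Account tags"
# value and decode the base64 payload.
decode_account_tags() {
  printf '%s' "$1" | tr -d '"\\' | base64 --decode
}

# Round trip with made-up tag data, wrapped in quotes like the
# value in the JSON output.
encoded="\"$(printf '%s' '{"Tags":[{"Key":"env","Value":"prod"}]}' | base64)\""
decode_account_tags "$encoded"
echo
```

The decoded text can then be processed further, for example with `jq`, which Prowler already requires.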

Prowler runs in GovCloud regions as well. To make sure it points to the right API endpoint, set `-r` to either us-gov-west-1 or us-gov-east-1. If no region filter is used, it will look for resources in both GovCloud regions by default:

    ./prowler -r us-gov-west-1

For Security Hub integration, see the Security Hub section below.

The flag `-x /my/own/checks` includes any check in that directory (file names must start with check). To see how to write checks, see the Add Custom Checks section.

S3 URIs are also supported as folders for custom checks, e.g. s3://bucket/prefix/checks. Prowler will download the folder locally and run the checks as they are called: with default execution, `-c` or `-g`.

Make sure that the used credentials have s3:GetObject permissions in the S3 path where the custom checks are located.

To remove noise and get only FAIL findings, use the `-q` flag, which makes Prowler show and log only FAILs.

It can be combined with any other option.

Prowler will still show WARNINGs when a resource is excluded, so take that into consideration.

    # -q option combined with -M csv -b
    ./prowler -q -M csv -b

The environment variable BASE64_LIMIT sets the entropy limit for high-entropy base64 strings. The value must be between 0.0 and 8.0; the default is 4.5.

The environment variable HEX_LIMIT sets the entropy limit for high-entropy hex strings. The value must be between 0.0 and 8.0; the default is 3.0.

    export BASE64_LIMIT=4.5
    export HEX_LIMIT=3.0

An easy way to run Prowler to scan your account is using AWS CloudShell. Read more and learn how to do it here.

Since October 30th 2020 (version v2.3RC5), Prowler natively supports sending findings to AWS Security Hub as an official integration. This integration allows Prowler to import its findings into AWS Security Hub. With Security Hub, you have a single place that aggregates, organizes, and prioritizes your security alerts, or findings, from multiple AWS services, such as Amazon GuardDuty, Amazon Inspector, Amazon Macie, AWS Identity and Access Management (IAM) Access Analyzer, and AWS Firewall Manager, as well as from AWS Partner solutions and from Prowler for free.

Before sending findings to Security Hub, you need to perform the following steps:

- Since Security Hub is a region-based service, enable it in the region or regions you require. Use the AWS Management Console or, if you have enough permissions, the AWS CLI with this command:

      aws securityhub enable-security-hub --region <region>

- Enable Prowler as a partner integration. Use the AWS Management Console or, if you have enough permissions, the AWS CLI with this command:

- As mentioned in the section "Custom IAM Policy", to allow Prowler to import its findings into AWS Security Hub you need to add the policy below to the role or user running Prowler:

Once it is enabled, it is as simple as running the command below (for all regions):

    ./prowler -M json-asff -S

or for only one filtered region, like eu-west-1:

    ./prowler -M json-asff -q -S -f eu-west-1

Note 1: It is recommended to send only fails to Security Hub, which is possible by adding `-q` to the command.

Note 2: Since Prowler performs checks in all regions by default, you may need to filter by region when running the Security Hub integration, as shown in the example above. Remember to enable Security Hub in the region or regions you need by calling `aws securityhub enable-security-hub --region <region>` and run Prowler with the option `-f <region>` (if no region is given, it will try to push findings to the hubs in all regions).

Note 3: To keep findings in Security Hub up to date, you have to run Prowler periodically, for example once a day or every few hours.

Once you run Prowler for the first time, you will be able to see its findings in the Findings section:

To use Prowler and Security Hub integration in GovCloud there is an additional requirement, usage of -r is needed to point the API queries to the right API endpoint. Here is a sample command that sends only failed findings to Security Hub in region us-gov-west-1:

./prowler -r us-gov-west-1 -f us-gov-west-1 -S -M csv,json-asff -q

To use Prowler and Security Hub integration in China regions there is an additional requirement, usage of -r is needed to point the API queries to the right API endpoint. Here is a sample command that sends only failed findings to Security Hub in region cn-north-1:

./prowler -r cn-north-1 -f cn-north-1 -q -S -M csv,json-asff

Whether you want to run Prowler once or on a schedule, this template makes it pretty straightforward. The template creates a CodeBuild environment and runs Prowler directly, leaving all reports in a bucket and also creating a report inside CodeBuild based on the JUnit output from Prowler. Scheduling can be cron based, like `cron(0 22 * * ? *)`, or rate based, like `rate(5 hours)`, since CloudWatch Events rules (or EventBridge) are used here.

The CloudFormation template that helps you do that is here.

This is a simple solution to monitor one account. For multiple accounts see Multi Account and Continuous Monitoring.

Sometimes you may find resources that are intentionally configured in a way that is normally considered bad practice but is acceptable in your case, for example an S3 bucket open to the internet hosting a web site, or a security group with an open port needed in your use case. You can use `-w allowlist_sample.txt` and add your resources as `checkID:resourcename`, as in this command:

./prowler -w allowlist_sample.txt

S3 URIs are also supported as allowlist file, e.g. s3://bucket/prefix/allowlist_sample.txt

Make sure that the used credentials have s3:GetObject permissions in the S3 path where the allowlist file is located.

DynamoDB table ARNs are also supported as allowlist file, e.g. arn:aws:dynamodb:us-east-1:111111222222:table/allowlist

Make sure that the table has `account_id` as partition key and `rule` as sort key, and that the credentials used have `dynamodb:PartiQLSelect` permissions on the table.

The field `account_id` can contain either an account ID or an `*` (which applies to all the accounts that use this table as an allowlist). As in the traditional allowlist file, the `rule` field must contain the `checkID:resourcename` pattern.

Allowlist option works along with other options and adds a WARNING instead of INFO, PASS or FAIL to any output format except for json-asff.
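To illustrate the `checkID:resourcename` entry format used by allowlist files, here is a minimal sketch that looks up a pair in a local file. `is_allowlisted` is a hypothetical helper, the entries are made up, and the exact whole-line matching is an assumption of this example; Prowler's own matching logic may behave differently.

```shell
# Hypothetical helper (not part of Prowler): succeed if the pair
# checkID:resourcename appears as a whole line in a local allowlist file.
# Exact, fixed-string matching is an assumption of this sketch.
is_allowlisted() {
  allowlist_file="$1"
  check_id="$2"
  resource="$3"
  grep -Fxq -- "${check_id}:${resource}" "$allowlist_file"
}

# Made-up entries for illustration only.
tmp_allowlist="$(mktemp)"
printf '%s\n' 'check310:example-resource' 'extra79:another-resource' > "$tmp_allowlist"

if is_allowlisted "$tmp_allowlist" check310 example-resource; then
  echo "allowlisted"
fi
rm -f "$tmp_allowlist"
```

A check like this can be handy for validating an allowlist file before passing it to `-w`.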

With Prowler you can get an inventory of your AWS resources. To do so, run ./prowler -i to see what AWS resources you have deployed in your AWS account. This feature lists almost all resources in all regions based on this API call. Note that it does not cover 100% of resource types.

The inventory will be stored in an output csv file by default, under common Prowler output folder, with the following format: prowler-inventory-${ACCOUNT_NUM}-${OUTPUT_DATE}.csv

Check your report and fix the issues following all specific guidelines per check in https://d0.awsstatic.com/whitepapers/compliance/AWS_CIS_Foundations_Benchmark.pdf

If you are using an STS token for AWS-CLI and your session has expired, you will probably get this error:

    A client error (ExpiredToken) occurred when calling the GenerateCredentialReport operation: The security token included in the request is expired

To fix it, renew your token by authenticating again to the AWS API. See the next section below if you use MFA.

To run Prowler using a profile that requires MFA, you just need to get the session token beforehand. Use this command:

    aws --profile <YOUR_AWS_PROFILE> sts get-session-token --duration 129600 --serial-number <ARN_OF_MFA> --token-code <MFA_TOKEN_CODE> --output text

Once you get your token, you can export it as environment variables:

    export AWS_PROFILE=YOUR_AWS_PROFILE
    export AWS_SESSION_TOKEN=YOUR_NEW_TOKEN
    export AWS_SECRET_ACCESS_KEY=YOUR_SECRET
    export AWS_ACCESS_KEY_ID=YOUR_KEY

or manually set up your `~/.aws/credentials` file properly.

There are some helpful tools to save time in this process, like aws-mfa-script or aws-cli-mfa.

- Use case: This user can view a list of AWS resources and basic metadata in the account across all services. The user cannot read resource content or metadata that goes beyond the quota and list information for resources.

- Policy description: This policy grants List*, Describe*, Get*, View*, and Lookup* access to resources for most AWS services. To see what actions this policy includes for each service, see ViewOnlyAccess Permissions

- Use case: This user monitors accounts for compliance with security requirements. This user can access logs and events to investigate potential security breaches or potential malicious activity.

- Policy description: This policy grants permissions to view configuration data for many AWS services and to review their logs. To see what actions this policy includes for each service, see SecurityAudit Permissions

Some new and specific checks require Prowler to have more permissions than SecurityAudit and ViewOnlyAccess to work properly. In addition to the AWS managed policies "SecurityAudit" and "ViewOnlyAccess", the user or role you use for checks may need to be granted a custom policy with a few more read-only permissions (mostly to support additional services). Here is an example policy with the additional rights, "Prowler-Additions-Policy" (see the bootstrap script below to set it up):

Allows Prowler to import its findings to AWS Security Hub. More information in Security Hub integration:

Quick bash script to set up a "prowler" IAM user and a "Prowler" group with the required permissions ("SecurityAudit", "ViewOnlyAccess" and "Prowler-Additions-Policy"). To run the script below, you need a user with administrative permissions; set AWS_DEFAULT_PROFILE to use that account:

export AWS_DEFAULT_PROFILE=default

export ACCOUNT_ID=$(aws sts get-caller-identity --query 'Account' | tr -d '"')

aws iam create-group --group-name Prowler

aws iam create-policy --policy-name Prowler-Additions-Policy --policy-document file://$(pwd)/iam/prowler-additions-policy.json

aws iam attach-group-policy --group-name Prowler --policy-arn arn:aws:iam::aws:policy/SecurityAudit

aws iam attach-group-policy --group-name Prowler --policy-arn arn:aws:iam::aws:policy/job-function/ViewOnlyAccess

aws iam attach-group-policy --group-name Prowler --policy-arn arn:aws:iam::${ACCOUNT_ID}:policy/Prowler-Additions-Policy

aws iam create-user --user-name prowler

aws iam add-user-to-group --user-name prowler --group-name Prowler

aws iam create-access-key --user-name prowler

unset ACCOUNT_ID AWS_DEFAULT_PROFILE

The `aws iam create-access-key` command will output the secret access key and the key ID; keep these somewhere safe, and add them to `~/.aws/credentials` with an appropriate profile name to use them with Prowler. This is the only time the secret key will be shown. If you lose it, you will need to generate a replacement.

This CloudFormation template may also help you on that task.

We are adding additional checks to improve the information gathered from each account. These checks are outside the scope of the CIS benchmark for AWS, but we consider them very helpful for getting to know each AWS account's setup and finding issues in it.

Some of these checks look for publicly facing resources that may not actually be fully public due to other layered controls like S3 Bucket Policies, Security Groups or Network ACLs.

To list all existing checks in the extras group run the command below:

./prowler -l -g extrasThere are some checks not included in that list, they are experimental or checks that take long to run like

extra759andextra760(search for secrets in Lambda function variables and code).

To check all extras in one command:

./prowler -g extrasor to run just one of the checks:

./prowler -c extraNUMBERor to run multiple extras in one go:

./prowler -c extraNumber,extraNumberWith this group of checks, Prowler looks if each service with logging or audit capabilities has them enabled to ensure all needed evidences are recorded and collected for an eventual digital forensic investigation in case of incident. List of checks part of this group (you can also see all groups with ./prowler -L). The list of checks can be seen in the group file at:

The forensics-ready group of checks uses existing and extra checks. To get a forensics readiness report, run this command:

./prowler -g forensics-ready

With this group of checks, Prowler shows the results of checks related to GDPR, more information here. The list of checks can be seen in the group file at:

The gdpr group of checks uses existing and extra checks. To get a GDPR report, run this command:

./prowler -g gdpr

With this group of checks, Prowler shows the results of checks related to the AWS Foundational Technical Review, more information here. The list of checks can be seen in the group file at:

The ftr group of checks uses existing and extra checks. To get an AWS FTR report, run this command:

./prowler -g ftr

With this group of checks, Prowler shows the results of controls related to the "Security Rule" of the Health Insurance Portability and Accountability Act (HIPAA), as defined in 45 CFR Subpart C - Security Standards for the Protection of Electronic Protected Health Information within PART 160 - GENERAL ADMINISTRATIVE REQUIREMENTS and Subpart A and Subpart C of PART 164 - SECURITY AND PRIVACY.

More information on the original PR is here.

Under the HIPAA regulations, cloud service providers (CSPs) such as AWS are considered business associates. The Business Associate Addendum (BAA) is an AWS contract that is required under HIPAA rules to ensure that AWS appropriately safeguards protected health information (PHI). The BAA also serves to clarify and limit, as appropriate, the permissible uses and disclosures of PHI by AWS, based on the relationship between AWS and our customers, and the activities or services being performed by AWS. Customers may use any AWS service in an account designated as a HIPAA account, but they should only process, store, and transmit protected health information (PHI) in the HIPAA-eligible services defined in the Business Associate Addendum (BAA). For the latest list of HIPAA-eligible AWS services, see HIPAA Eligible Services Reference.

More information on AWS & HIPAA can be found here

The checks in this group are mostly relevant for Subsections 164.306 Security standards: General rules and 164.312 Technical safeguards. Prowler is only able to make checks in the spirit of the technical requirements outlined in these Subsections, and cannot cover all the procedural controls required. The list of checks can be found in the group file at:

The hipaa group of checks uses existing and extra checks. To get a HIPAA report, run this command:

./prowler -g hipaa

The term "trust boundary" originates from the threat modelling process. Adam Shostack, its most popular contributor and the author of "Threat Modeling: Designing for Security", defines it as follows (reference):

Trust boundaries are perhaps the most subjective of all: these represent the border between trusted and untrusted elements. Trust is complex. You might trust your mechanic with your car, your dentist with your teeth, and your banker with your money, but you probably don't trust your dentist to change your spark plugs.

AWS is made to be flexible for service links within and between different AWS accounts, we all know that.

This group of checks helps to analyse a particular AWS account (subject) on existing links to other AWS accounts across various AWS services, in order to identify untrusted links.

To give it a quick shot just call:

./prowler -g trustboundaries

Currently, this check group supports two different scenarios:

- Single account environment: no action required, the configuration happens automatically for you.

- Multi account environment: if your environment has multiple trusted and known AWS accounts, you may want to add them manually to groups/group16_trustboundaries as a space separated list in the GROUP_TRUSTBOUNDARIES_TRUSTED_ACCOUNT_IDS variable, then just run prowler.
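For the multi account case, the value is a space separated list of account IDs. The sketch below builds such an assignment line and rejects anything that is not a 12-digit account ID. The helper name trusted_accounts_line, the placeholder IDs and the single-quoted form of the assignment are assumptions; check the group file for the exact syntax it uses.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the space separated value for
# GROUP_TRUSTBOUNDARIES_TRUSTED_ACCOUNT_IDS from a list of account IDs.
trusted_accounts_line() {
  local id ids=""
  for id in "$@"; do
    # AWS account IDs are 12-digit numbers; reject anything else.
    case "$id" in
      [0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9]) ;;
      *) echo "invalid account id: $id" >&2; return 1 ;;
    esac
    # Append with a single space between IDs.
    ids="${ids:+$ids }$id"
  done
  printf "GROUP_TRUSTBOUNDARIES_TRUSTED_ACCOUNT_IDS='%s'\n" "$ids"
}

# Placeholder account IDs for illustration.
trusted_accounts_line 111111111111 222222222222
```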

Current coverage of Amazon Web Services (AWS) taken from here:

| Topic | Service | Trust Boundary |
|---|---|---|
| Networking and Content Delivery | Amazon VPC | VPC endpoints connections (extra786) |
| Networking and Content Delivery | Amazon VPC | VPC endpoints allowlisted principals (extra787) |

All ideas or recommendations to extend this group are very welcome here.

The diagrams depict two common scenarios, single account and multi account environments. Every circle represents one AWS account. The dashed line represents the trust boundary that separates trusted and untrusted AWS accounts. The arrow simply describes the direction of the trust; however, the data can potentially flow in both directions.

A Single Account environment assumes that only the AWS account subject to this analysis is trusted. However, two VPCs may exist within that one AWS account; they are still trusted as a self reference.

A Multi Account environment assumes a minimum of two trusted or known accounts. For this particular example all trusted and known accounts will be tested. Therefore the GROUP_TRUSTBOUNDARIES_TRUSTED_ACCOUNT_IDS variable in groups/group16_trustboundaries should include all trusted accounts (Account #A, Account #B, Account #C and Account #D) in order to finally flag Account #E and Account #F as untrusted or unknown.
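The classification described for the multi account example can be pictured with a short sketch. It is illustrative only and does not reflect how Prowler implements the checks; the account letters stand in for real account IDs, and classify_account is a hypothetical name.

```shell
#!/usr/bin/env bash
# Illustrative only: flag accounts that are not in the trusted list.
# Letters stand in for real 12-digit AWS account IDs.
TRUSTED="A B C D"

classify_account() {
  local account="$1" trusted
  # Compare the account against each entry of the space separated list.
  for trusted in $TRUSTED; do
    if [ "$account" = "$trusted" ]; then
      echo "trusted"
      return 0
    fi
  done
  echo "untrusted"
}

# Accounts seen on the other side of a link, as in the example above.
for account in A B C D E F; do
  printf 'Account #%s: %s\n' "$account" "$(classify_account "$account")"
done
```

With the trusted list above, only Account #E and Account #F come out as untrusted.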

Using ./prowler -c extra9999 -a you can build your own on-the-fly custom check by specifying the AWS CLI command to execute.

Omit the "aws" command and pass only its parameters, within quotes. Do not nest quotes inside the aws parameters. The --output text option is already included in the check.
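These rules can be checked before handing a string to -a. The function below is a hypothetical pre-flight helper, not part of Prowler; it only tests for a leading "aws", nested quotes and a redundant --output option.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check for the string passed to -a.
# Returns 0 when the argument follows the rules above, 1 otherwise.
valid_custom_check_arg() {
  local arg="$1"
  case "$arg" in
    aws\ *)     echo "omit the leading 'aws'" >&2; return 1 ;;
    *\'*|*\"*)  echo "do not nest quotes" >&2; return 1 ;;
    *--output*) echo "--output text is already included" >&2; return 1 ;;
  esac
  return 0
}

# A parameter string that follows all three rules.
valid_custom_check_arg 'ec2 describe-vpcs --query Vpcs[*].VpcId' && echo "ok"
```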

Here is an example of a check to find SGs with inbound port 80:

./prowler -c extra9999 -a 'ec2 describe-security-groups --filters Name=ip-permission.to-port,Values=80 --query SecurityGroups[*].GroupId[]'

To add a new check, feel free to create a new extra check in the extras group or in another group. To do so, follow these steps:

- Follow the structure in the file checks/check_sample

- Name your check with a number that is part of an existing group or a new one

- Save changes and run it as ./prowler -c extraNN

- Send me a pull request! :)

To add a new group, follow these steps:

- Follow the structure in the file groups/groupN_sample

- Name your group with a number that does not exist yet

- Save changes and run it as ./prowler -g extraNN

- Send me a pull request! :)

- You can also create a group with only the checks that you want to perform in your company, for instance a group named group9_mycompany with only the list of checks that you care about or that apply to your particular compliance requirements.

Javier Pecete has done an awesome job integrating Prowler with Telegram. More details here: https://github.com/i4specete/ServerTelegramBot

The SecurityFTW team has added Prowler to their Cloud Security Suite along with other cool security tools: https://github.com/SecurityFTW/cs-suite

Prowler is licensed as Apache License 2.0 as specified in each file. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0

I am not affiliated in any way with the CIS organization. I just write and maintain Prowler to help companies all over the world make their cloud infrastructure more secure.

If you want to contact me, visit https://blyx.com/contact or follow me on Twitter at https://twitter.com/prowler-cloud. My DMs are open.