-

Notifications

You must be signed in to change notification settings - Fork 3.6k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

ARROW-11362:[Rust][DataFusion] Use iterator APIs in to_array_of_size to improve performance #9305

Conversation

…on script Closes apache#9247 from kszucs/mimalloc-windows-verification Authored-by: Krisztián Szűcs <szucs.krisztian@gmail.com> Signed-off-by: Sutou Kouhei <kou@clear-code.com>

Because we require .NET 3 or later since 3.0.0. Closes apache#9254 from kou/release-verify-macos-csharp Authored-by: Sutou Kouhei <kou@clear-code.com> Signed-off-by: Sutou Kouhei <kou@clear-code.com>

Closes apache#9260 from kou/release-debian-buster-arm64-add-missing-gir-gandiva Authored-by: Sutou Kouhei <kou@clear-code.com> Signed-off-by: Krisztián Szűcs <szucs.krisztian@gmail.com>

…ages Closes apache#9259 from kou/release-verify-arm64 Authored-by: Sutou Kouhei <kou@clear-code.com> Signed-off-by: Krisztián Szűcs <szucs.krisztian@gmail.com>

Closes apache#6302 from mrkn/ARROW-7633 Lead-authored-by: Kenta Murata <mrkn@mrkn.jp> Co-authored-by: Antoine Pitrou <antoine@python.org> Signed-off-by: Antoine Pitrou <antoine@python.org>

I am getting a bug with an error: Unexpected accumulator state, but It's not possible to understand what value was passed when the exception is done on the user's side. I add type to the error message to make investigation of the bug more easy. Closes apache#9201 from ovr/unexpected-accumulator-state Authored-by: Dmitry Patsura <zaets28rus@gmail.com> Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

…s to benchmarks I think it would be great to test some compilation options individually on the benchmarks to see the impact. Here are some examples of the impact it can have on the queries: --features "": ``` Query 5 iteration 0 took 655.3 ms Query 5 iteration 1 took 648.9 ms Query 5 iteration 2 took 640.3 ms Query 5 iteration 3 took 658.7 ms Query 5 iteration 4 took 646.3 ms Query 5 iteration 5 took 684.1 ms Query 5 iteration 6 took 642.8 ms Query 5 iteration 7 took 656.9 ms Query 5 iteration 8 took 646.0 ms Query 5 iteration 9 took 669.1 ms Query 5 avg time: 654.85 ms ``` --features "snmalloc" ``` Query 5 iteration 0 took 525.4 ms Query 5 iteration 1 took 478.8 ms Query 5 iteration 2 took 485.7 ms Query 5 iteration 3 took 486.6 ms Query 5 iteration 4 took 482.6 ms Query 5 iteration 5 took 473.1 ms Query 5 iteration 6 took 494.4 ms Query 5 iteration 7 took 483.5 ms Query 5 iteration 8 took 493.1 ms Query 5 iteration 9 took 479.4 ms Query 5 avg time: 488.26 ms ``` --features "" ``` Query 12 iteration 0 took 241.4 ms Query 12 iteration 1 took 234.8 ms Query 12 iteration 2 took 229.8 ms Query 12 iteration 3 took 229.5 ms Query 12 iteration 4 took 228.3 ms Query 12 iteration 5 took 230.0 ms Query 12 iteration 6 took 228.3 ms Query 12 iteration 7 took 229.3 ms Query 12 iteration 8 took 229.9 ms Query 12 iteration 9 took 230.1 ms Query 12 avg time: 231.13 ms ``` --features "simd" ``` Query 12 iteration 0 took 157.7 ms Query 12 iteration 1 took 159.3 ms Query 12 iteration 2 took 156.9 ms Query 12 iteration 3 took 163.0 ms Query 12 iteration 4 took 157.5 ms Query 12 iteration 5 took 157.6 ms Query 12 iteration 6 took 156.6 ms Query 12 iteration 7 took 157.4 ms Query 12 iteration 8 took 158.6 ms Query 12 iteration 9 took 157.0 ms Query 12 avg time: 158.16 ms ``` Closes apache#9206 from Dandandan/custom_alloc Lead-authored-by: Daniël Heres <danielheres@gmail.com> Co-authored-by: Heres, Daniel <danielheres@gmail.com> Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

…quet tables While profiling a DataFusion query I found that the code spends a lot of time in reading data from parquet files. Predicate / filter push-down is a commonly used performance optimization, where statistics data stored in parquet files (such as min / max values for columns in a parquet row group) is evaluated against query filters to determine which row groups could contain data requested by a query. In this way, by pushing down query filters all the way to the parquet data source, entire row groups or even parquet files can be skipped often resulting in significant performance improvements. I have been working on an implementation for a few weeks and initial results look promising - with predicate push-down, DataFusion is now faster than Apache Spark (`140ms for DataFusion vs 200ms for Spark`) for the same query against the same parquet files. Without predicate push-down into parquet, DataFusion takes about 2 - 3s (depending on concurrency) for the same query, because the data is ordered and most files don't contain data that satisfies the query filters, but are still loaded and processed in vain. This work is based on the following key ideas: * predicate-push down is implemented by filtering row group metadata entries to only those which could contain data that could satisfy query filters * it's best to reuse the existing code for evaluating physical expressions already implemented in DataFusion * filter expressions pushed down to a parquet table are rewritten to use parquet statistics (instead of the actual column data), for example `(column / 2) = 4` becomes `(column_min / 2) <= 4 && 4 <= (column_max / 2)` - this is done once for all files in a parquet table * for each parquet file, a RecordBatch containing all required statistics columns ( [`column_min`, `column_max`] in the example above) is produced, and the predicate expression from the previous step is evaluated, producing a binary array which is finally used to filter the row groups in each parquet file This is still work in progress - more tests left to write; I am publishing this now to gather feedback. @andygrove let me know what you think Closes apache#9064 from yordan-pavlov/parquet_predicate_push_down Authored-by: Yordan Pavlov <yordan.pavlov@outlook.com> Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

…ng levels See [Intel Compiler warning flag documentation](https://software.intel.com/content/www/us/en/develop/documentation/cpp-compiler-developer-guide-and-reference/top/compiler-reference/error-handling-1/warnings-errors-and-remarks.html). Closes apache#9266 from jcmuel/master Authored-by: Johannes Müller <JohannesMueller@fico.com> Signed-off-by: Antoine Pitrou <antoine@python.org>

…m, and rtrim There is one obvious loose end in this PR, which is where to generate the `std::set` based on the `TrimOptions` (now in the ctor of UTF8TrimBase). I'm not sure what the lifetime guarantees are for this object (TrimOptions), where it makes sense to initialize this set, and when an (utf8 decoding) error occurs, how/where to report this. Although this is not a costly operation, assuming people don't pass in a billion characters to trim, I do wonder what the best approach here is in general. It does not make much sense to create the `std::set` at each `Exec` call, but that is what happens now. (This also seems to happen in `TransformMatchSubstring` for creating the `prefix_table` btw.) Maybe a good place to put per-kernel pre-compute results are the `*Options` objects, but I'm not sure if that makes sense in the current architecture. Another idea is to explore alternatives to the `std::set`. It seem that (based on the TrimManyAscii benchmark), `std::unordered_set` seemed a bit slower, and simply using a linear search: `std::find(options.characters.begin(), options.characters.end(), c) != options.characters.end()` in the predicate instead of the set doesn't seem to affect performance that much. In CPython, a bloom filter is used, I could explore to see if that makes sense, but the implementation in Arrow lives under the parquet namespace. Closes apache#8621 from maartenbreddels/ARROW-9128 Authored-by: Maarten A. Breddels <maartenbreddels@gmail.com> Signed-off-by: Antoine Pitrou <antoine@python.org>

…t-rowcount binary Closes apache#9249 from jhorstmann/ARROW-11305-parquet-rowcount-argument-confusion Authored-by: Jörn Horstmann <joern.horstmann@signavio.com> Signed-off-by: Jorge C. Leitao <jorgecarleitao@gmail.com>

This PR refactors `MutableBuffer::extend_from_slice` to remove the need to use `to_byte_slice` on every call, thereby removing its level of indirection, that does not allow the compiler to optimize out some code. This is the second performance improvement originally presented in apache#8796 and, together with apache#9027 , brings the performance of "MutableBuffer" to the same level as `Vec<u8>`, in particular to building buffers on the fly. Basically, when converting to a byte slice `&[u8]`, the compiler loses the type size information, and thus needs to perform extra checks and can't just optimize out the code. This PR adopts the same API as `Vec<T>::extend_from_slice`, but since our buffers are in `u8` (i.e. a la `Vec<u8>`), I made the signature ``` pub fn extend_from_slice<T: ToByteSlice>(&mut self, items: &[T]) pub fn push<T: ToByteSlice>(&mut self, item: &T) ``` i.e. it consumes something that can be converted to a byte slice, but internally makes the conversion to bytes (as `to_byte_slice` was doing). Credits for the root cause analysis that lead to this PR go to @Dandandan, [originally fielded here](apache#9016 (comment)). > [...] current conversion to a byte slice may add some overhead? - @Dandandan Benches (against master, so, both this PR and apache#9044 ): ``` Switched to branch 'perf_buffer' Your branch and 'origin/perf_buffer' have diverged, and have 6 and 1 different commits each, respectively. (use "git pull" to merge the remote branch into yours) Compiling arrow v3.0.0-SNAPSHOT (/Users/jorgecarleitao/projects/arrow/rust/arrow) Finished bench [optimized] target(s) in 1m 00s Running /Users/jorgecarleitao/projects/arrow/rust/target/release/deps/buffer_create-915da5f1abaf0471 Gnuplot not found, using plotters backend mutable time: [463.11 us 463.57 us 464.07 us] change: [-19.508% -18.571% -17.526%] (p = 0.00 < 0.05) Performance has improved. Found 10 outliers among 100 measurements (10.00%) 1 (1.00%) high mild 9 (9.00%) high severe mutable prepared time: [527.84 us 528.46 us 529.14 us] change: [-13.356% -12.522% -11.790%] (p = 0.00 < 0.05) Performance has improved. Found 12 outliers among 100 measurements (12.00%) 5 (5.00%) high mild 7 (7.00%) high severe Benchmarking from_slice: Warming up for 3.0000 s Warning: Unable to complete 100 samples in 5.0s. You may wish to increase target time to 6.1s, enable flat sampling, or reduce sample count to 60. from_slice time: [1.1968 ms 1.1979 ms 1.1991 ms] change: [-6.8697% -6.2029% -5.5812%] (p = 0.00 < 0.05) Performance has improved. Found 10 outliers among 100 measurements (10.00%) 3 (3.00%) high mild 7 (7.00%) high severe from_slice prepared time: [917.49 us 918.89 us 920.60 us] change: [-6.5111% -5.9102% -5.3038%] (p = 0.00 < 0.05) Performance has improved. Found 10 outliers among 100 measurements (10.00%) 4 (4.00%) high mild 6 (6.00%) high severe ``` Closes apache#9076 from jorgecarleitao/perf_buffer Authored-by: Jorge C. Leitao <jorgecarleitao@gmail.com> Signed-off-by: Jorge C. Leitao <jorgecarleitao@gmail.com>

I find myself trying to remember the exact incantation to create a `StringDictionaryBuilder` so I figured I would add it as a doc example Closes apache#9169 from alamb/alamb/doc-example Authored-by: Andrew Lamb <andrew@nerdnetworks.org> Signed-off-by: Jorge C. Leitao <jorgecarleitao@gmail.com>

I think the feature to be able to repartition an in memory table is useful, as the repartitioning only needs to be applied once, and repartition itself is cheap (at the same node). Doing this when loading data is very useful for in-memory analytics as we can benefit from mutliple cores after loading the data. The speed up from repartitioning is very big (mainly on aggregates), on my (8-core machine): ~5-7x on query 1 and 12 versus a single partition, and a smaller (~30%) difference for query 5 when using 16 partition. q1/q12 also have very high cpu utilization. @jorgecarleitao maybe this is of interest to you, as you mentioned you are looking into multi-threading. I think this would be a "high level" way to get more parallelism, also in the logical plan. I think in some optimizer rules and/or dynamically we can do repartitions, similar to what's described here https://issues.apache.org/jira/browse/ARROW-9464 Benchmarks after repartitioning (16 partitions): PR (16 partitions) ``` Query 12 iteration 0 took 33.9 ms Query 12 iteration 1 took 34.3 ms Query 12 iteration 2 took 36.9 ms Query 12 iteration 3 took 33.6 ms Query 12 iteration 4 took 35.1 ms Query 12 iteration 5 took 38.8 ms Query 12 iteration 6 took 35.8 ms Query 12 iteration 7 took 34.4 ms Query 12 iteration 8 took 34.2 ms Query 12 iteration 9 took 35.3 ms Query 12 avg time: 35.24 ms ``` Master (1 partition): ``` Query 12 iteration 0 took 245.6 ms Query 12 iteration 1 took 246.4 ms Query 12 iteration 2 took 246.1 ms Query 12 iteration 3 took 247.9 ms Query 12 iteration 4 took 246.5 ms Query 12 iteration 5 took 248.2 ms Query 12 iteration 6 took 247.8 ms Query 12 iteration 7 took 246.4 ms Query 12 iteration 8 took 246.6 ms Query 12 iteration 9 took 246.5 ms Query 12 avg time: 246.79 ms ``` PR (16 partitions): ``` Query 1 iteration 0 took 138.6 ms Query 1 iteration 1 took 142.2 ms Query 1 iteration 2 took 125.8 ms Query 1 iteration 3 took 102.4 ms Query 1 iteration 4 took 105.9 ms Query 1 iteration 5 took 107.0 ms Query 1 iteration 6 took 109.3 ms Query 1 iteration 7 took 109.9 ms Query 1 iteration 8 took 108.8 ms Query 1 iteration 9 took 112.0 ms Query 1 avg time: 116.19 ms ``` Master (1 partition): ``` Query 1 iteration 0 took 640.6 ms Query 1 iteration 1 took 640.0 ms Query 1 iteration 2 took 632.9 ms Query 1 iteration 3 took 634.6 ms Query 1 iteration 4 took 630.7 ms Query 1 iteration 5 took 630.7 ms Query 1 iteration 6 took 631.9 ms Query 1 iteration 7 took 635.5 ms Query 1 iteration 8 took 639.0 ms Query 1 iteration 9 took 638.3 ms Query 1 avg time: 635.43 ms ``` PR (16 partitions) ``` Query 5 iteration 0 took 465.8 ms Query 5 iteration 1 took 428.0 ms Query 5 iteration 2 took 435.0 ms Query 5 iteration 3 took 407.3 ms Query 5 iteration 4 took 435.7 ms Query 5 iteration 5 took 437.4 ms Query 5 iteration 6 took 411.2 ms Query 5 iteration 7 took 432.0 ms Query 5 iteration 8 took 436.8 ms Query 5 iteration 9 took 435.6 ms Query 5 avg time: 432.47 ms ``` Master (1 partition) ``` Query 5 iteration 0 took 660.6 ms Query 5 iteration 1 took 634.4 ms Query 5 iteration 2 took 626.4 ms Query 5 iteration 3 took 628.0 ms Query 5 iteration 4 took 635.3 ms Query 5 iteration 5 took 631.1 ms Query 5 iteration 6 took 631.3 ms Query 5 iteration 7 took 639.4 ms Query 5 iteration 8 took 634.3 ms Query 5 iteration 9 took 639.0 ms Query 5 avg time: 635.97 ms ``` Closes apache#9214 from Dandandan/mem_table_repartition Lead-authored-by: Heres, Daniel <danielheres@gmail.com> Co-authored-by: Daniël Heres <danielheres@gmail.com> Signed-off-by: Jorge C. Leitao <jorgecarleitao@gmail.com>

FYI @jorgecarleitao I think some change on master and maybe some parquet related changes caused a compilation error on master. This fixes the compilation error. Closes apache#9269 from Dandandan/fix_datafusion Authored-by: Heres, Daniel <danielheres@gmail.com> Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

Create hashes vectorized in hash join This is one step for a fully vectorized hash join: https://issues.apache.org/jira/browse/ARROW-11112 The idea of the PR is as follows: * We still use a `HashMap` but rather than using the row data as key we use a hash value ( `u64`) both as key and as hash. We use a custom `Hasher` to avoid (re)computing hashes in the hash map and while doing lookups. * Only the hash value creation is in this PR vectorized, the rest is still on a row basis. * A test for hash collision detection needs to be added. TCPH 12 is without the remaining part ~10% faster than the other PR: ~180ms vs ~200ms. TCPH 5 is >40% faster (332ms vs 624ms). Closes apache#9116 from Dandandan/vectorized_hashing Authored-by: Heres, Daniel <danielheres@gmail.com> Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

`size_hint` should return the remaining items, not the total number of items. Closes apache#9258 from jorgecarleitao/fix_size_hint Authored-by: Jorge C. Leitao <jorgecarleitao@gmail.com> Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

… problems fixed The major change of [flatbuffers 0.8.1](https://docs.rs/flatbuffers/0.8.1/flatbuffers/index.html) since 0.8.0 is google/flatbuffers#6393, which fixed some possible memory alignment issues. In this PR, the ipc/gen/*.rs files are generated by `regen.sh` as before, without any manual change. Closes apache#9176 from mqy/flatbuffers-0.8.1 Authored-by: mqy <meng.qingyou@gmail.com> Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

…nsts

```c++

/tmp/apache-arrow-20210116-6233-1jyrhk8/apache-arrow-3.0.0/cpp/src/arrow/dataset/expression.cc

/tmp/apache-arrow-20210116-6233-1jyrhk8/apache-arrow-3.0.0/cpp/src/arrow/dataset/expression.cc:684:30:

error: default initialization of an object of const type 'const arrow::Datum' without a user-provided default constructor

static const Datum ignored_input;

```

Datum defines a default constructor but it doesn't seem to be found for const/constexpr decls

Closes apache#9267 from bkietz/11277-Fix-compilation-error-in-

Authored-by: Benjamin Kietzman <bengilgit@gmail.com>

Signed-off-by: Neal Richardson <neal.p.richardson@gmail.com>

Closes apache#9270 from maxburke/rust_memory_public Authored-by: Max Burke <max@urbanlogiq.com> Signed-off-by: Jorge C. Leitao <jorgecarleitao@gmail.com>

The Int96 timestamp was not using the specialised timestamp builder that takes the timezone as a paramenter. This changes that to use the builder that preserves timezones. I tested this change with the test file provided in the JIRA. It looks like we don't have a way of writing int96 from the arrow writer, so there isn't an easy way to add a testcase. Closes apache#9253 from nevi-me/ARROW-11269 Authored-by: Neville Dipale <nevilledips@gmail.com> Signed-off-by: Neville Dipale <nevilledips@gmail.com>

Writes leaves immediately after calculating array levels to reduce array level memory usage by the number of rows in a row group. Closes apache#9222 from TurnOfACard/parquet-memory Authored-by: Ryan Jennings <ryan@ryanj.net> Signed-off-by: Neville Dipale <nevilledips@gmail.com>

…stamp types

I found this while removing `test::format_batches` (PR to come shortly); The Record batch pretty printing code was printing numbers rather than dates.

Before this PR, when date/time columns were printed they were printed as numbers:

```

[

"+----------+",

"| f |",

"+----------+",

"| 11111111 |",

"| |",

"+----------+",

]

```

After this PR, they are printed (via chrono) as dates:

```

[

"+---------------------+",

"| f |",

"+---------------------+",

"| 1970-05-09 14:25:11 |",

"| |",

"+---------------------+",

]

```

Closes apache#9263 from alamb/alamb/pretty_print_datetimes

Authored-by: Andrew Lamb <andrew@nerdnetworks.org>

Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

The functions `unset_bit` and `unset_bit_raw` were toggling, not unsetting, bits, which was obviously wrong. This PR also changes the test for `set_bit` to also make sure that it does not toggle bits. Closes apache#9257 from jorgecarleitao/fix_unset Authored-by: Jorge C. Leitao <jorgecarleitao@gmail.com> Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

This PR removes the risk of boolean values to be converted to bytes via `ToByteSlice` by explicitly making `ArrowNativeType` be only used in types whose in-memory representation in Rust equates to the in-memory representation in Arrow. `bool` in Rust is a byte and in Arrow it is a bit. Overall, the direction of this PR is to have the traits represent one aspect of the type. In this case, `ArrowNativeType` is currently * a type that has the same in memory representation (ToByteSlice is implemented for it) * a json serializable type * something that can be casted to/from `usize`. This poses a problem because: 1. bools are serializable, not castable to usize, have different memory representation 2. fixed size (iX, uX) are serializable, castable to usize, have the same memory representation 3. fixed floating (f32, f64) are serializable, not castable to usize, have the same memory representation however, they all implement `ArrowNativeType`. This PR focus on splitting the json-serializable part of it. Closes apache#9212 from jorgecarleitao/fix_trait Authored-by: Jorge C. Leitao <jorgecarleitao@gmail.com> Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

…sue with high number of groups Currently, we loop to the hashmap for every key. However, as we receive a batch, if we a lot of groups in the group by expression (or receive sorted data, etc.) then we could create a lot of empty batches and call `update_batch` for each of the key already in the hashmap. In the PR we keep track of which keys we received in the batch and only update the accumulators with the same keys instead of all accumulators. On the db-benchmark h2oai/db-benchmark#182 this is the difference (mainly q3 and q5, others seem to be noise). It doesn't seem to completely solve the problem, but it reduces the problem already quite a bit. This PR: ``` q1 took 340 ms q2 took 1768 ms q3 took 10975 ms q4 took 337 ms q5 took 13529 ms ``` Master: ``` q1 took 330 ms q2 took 1648 ms q3 took 16408 ms q4 took 335 ms q5 took 21074 ms ``` Closes apache#9234 from Dandandan/hash_agg_speed2 Authored-by: Heres, Daniel <danielheres@gmail.com> Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

…_batch_empty Previously `build_empty_list_array` was declared inside Parquet (`array_reader`), but I will use this function inside DataFushion's `create_batch_empty` (it's used inside hash_aggregate to make an empty batch from the provided schema that contains type for columns). I moved it to Arrow (because it's common and useful) and made `build_empty_large_list_array` (for large lists) on top of macros with different implementation than build_empty_list_array. Closes apache#9114 from ovr/issue-11149 Authored-by: Dmitry Patsura <zaets28rus@gmail.com> Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

This speeds up development by avoiding rebuilding the whole library when any file in the package directory is touched. Closes apache#9277 from mbrubeck/build Authored-by: Matt Brubeck <mbrubeck@limpet.net> Signed-off-by: Jorge C. Leitao <jorgecarleitao@gmail.com>

Introduce support in DataFushion for GROUP BY on boolean values. Boolean type in Rust implements Eq and Hash traits which allow us to use GroupByScalar. Closes apache#9174 from ovr/issue-11220 Authored-by: Dmitry Patsura <zaets28rus@gmail.com> Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

…ssion walking ## Problem: * There are several places in the DataFusion codebase where a walk of an Expression tree is needed * The logic of how to walk the tree is replicated * Adding new expression types often require many mechanically different but semantically the same changes in many places where no special treatment of such types is needed This PR introduces a `ExpressionVisitor` trait and the `Expr::accept` function to consolidate this walking of the expression tree. It does not intend to change any functionality. If folks like this pattern, I have ideas for a similar type of trait `ExpressionRewriter` which can be used to rewrite expressions (much like `clone_with_replacement`) as a subsquent PR. I think this was mentioned by @Dandandan in the [Rust roadmap](https://docs.google.com/document/d/1qspsOM_dknOxJKdGvKbC1aoVoO0M3i6x1CIo58mmN2Y/edit#heading=h.kstb571j5g5j) cc @jorgecarleitao @Dandandan and @andygrove Closes apache#9278 from alamb/alamb/expression_visitor Authored-by: Andrew Lamb <andrew@nerdnetworks.org> Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

Codecov Report

@@ Coverage Diff @@

## master #9305 +/- ##

==========================================

- Coverage 81.89% 81.85% -0.05%

==========================================

Files 215 215

Lines 52988 53036 +48

==========================================

+ Hits 43392 43410 +18

- Misses 9596 9626 +30

Continue to review full report at Codecov.

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM.

There is a fmt issue somewhere.

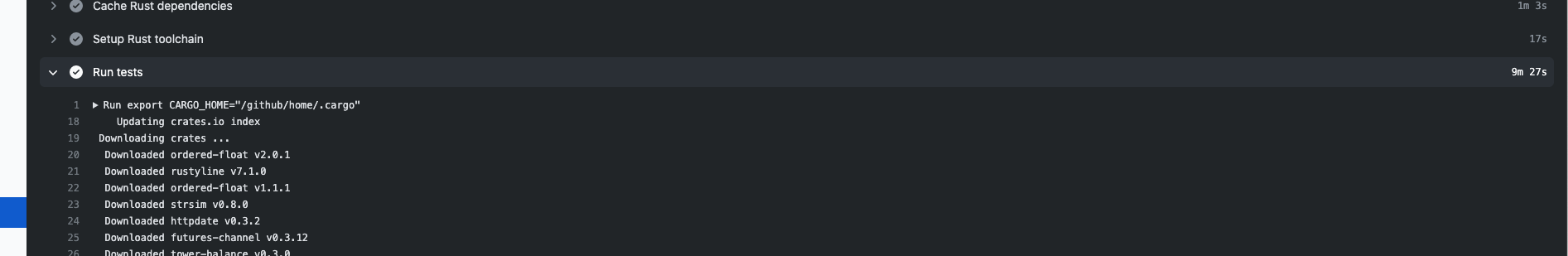

This PR changes the Rust CI job to include the prettyprint feature. (so we have CI coverage of that feature) As @paddyhoran noted, passing in feature flags to the root workspace doesn't do anything, and as it turns out we already invoke `cargo test` for the `arrow` crate separately. Prior to this PR I think that just ran the same tests again with the same features. After this PR, the second run of the arrow tests are run with the `prettyprint` feature Here is evidence that the tests are running twice. I picked a PR (randomly) https://github.com/apache/arrow/pull/9258/checks?check_run_id=1725967358 If you look at the logs for the run-tests action  You can see that the same test is run twice: ``` 2021-01-19T07:02:49.9476111Z test compute::kernels::boolean::tests::test_nonnull_array_is_not_null ... ok 2021-01-19T07:02:49.9476854Z test compute::kernels::boolean::tests::test_nonnull_array_is_null ... ok 2021-01-19T07:02:49.9477616Z test compute::kernels::boolean::tests::test_nonnull_array_with_offset_is_not_null ... ok 2021-01-19T07:02:49.9478427Z test compute::kernels::boolean::tests::test_nonnull_array_with_offset_is_null ... ok 2021-01-19T07:02:49.9479207Z test compute::kernels::boolean::tests::test_nullable_array_is_null ... ok 2021-01-19T07:02:49.9479948Z test compute::kernels::boolean::tests::test_nullable_array_is_not_null ... ok 2021-01-19T07:02:49.9480744Z test compute::kernels::boolean::tests::test_nullable_array_with_offset_is_not_null ... ok 2021-01-19T07:02:49.9481640Z test compute::kernels::boolean::tests::test_nullable_array_with_offset_is_null ... ok 2021-01-19T07:02:49.9487019Z test compute::kernels::boolean::tests::test_nullif_int_array_offset ... ok 2021-01-19T07:02:49.9487773Z test compute::kernels::boolean::tests::test_nullif_int_array ... ok ... 2021-01-19T07:07:23.4568865Z test compute::kernels::boolean::tests::test_nonnull_array_is_not_null ... ok 2021-01-19T07:07:23.4569576Z test compute::kernels::boolean::tests::test_nonnull_array_is_null ... ok 2021-01-19T07:07:23.4570337Z test compute::kernels::boolean::tests::test_nonnull_array_with_offset_is_not_null ... ok 2021-01-19T07:07:23.4571133Z test compute::kernels::boolean::tests::test_nonnull_array_with_offset_is_null ... ok 2021-01-19T07:07:23.4571885Z test compute::kernels::boolean::tests::test_nullable_array_is_not_null ... ok 2021-01-19T07:07:23.4572614Z test compute::kernels::boolean::tests::test_nullable_array_is_null ... ok 2021-01-19T07:07:23.4573383Z test compute::kernels::boolean::tests::test_nullable_array_with_offset_is_not_null ... ok 2021-01-19T07:07:23.4574183Z test compute::kernels::boolean::tests::test_nullable_array_with_offset_is_null ... ok 2021-01-19T07:07:23.4574929Z test compute::kernels::boolean::tests::test_nullif_int_array ... ok 2021-01-19T07:07:23.4575636Z test compute::kernels::boolean::tests::test_nullif_int_array_offset ... ok ``` Closes apache#9262 from alamb/alamb/run_prettyprint_ci Authored-by: Andrew Lamb <andrew@nerdnetworks.org> Signed-off-by: Jorge C. Leitao <jorgecarleitao@gmail.com>

|

I noticed that this is also being used in |

|

@jorgecarleitao yes, but those are on |

|

CI failures don't seem to be related to the changes. |

…ull) values The idea of this PR is to have a function `from_iter_values` that (just like `from_iter`) creates an array based on an iterator, but from `T` instead of `Option<T>`. I have seen some places in DataFusion (especially `to_array_of_size`) where an `Array` is generated from a `Vec` of items, which could be replaced by this. The other iterators have some memory / time overhead in both creating and manipulating the null buffer (and in the case of `Vec` for allocating / dropping the Vec) Closes apache#9293 from Dandandan/array_iter_non_null Authored-by: Heres, Daniel <danielheres@gmail.com> Signed-off-by: Jorge C. Leitao <jorgecarleitao@gmail.com>

rust/datafusion/src/scalar.rs

Outdated

| Arc::new(StringArray::from_iter_values(repeat(value).take(size))) | ||

| } | ||

| None => { | ||

| Arc::new(repeat(e.as_deref()).take(size).collect::<StringArray>()) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

any reason to use e.as_deref instead of None? Just curious.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I couldn't get the None compiling here.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Updated.

|

The clippy failures were fixed in #9314 - a quick rebase can probably get this PR green |

|

PR is updated against master @jorgecarleitao @alamb |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I reviewed this PR and it looks good to me.

|

@Dandandan there is a missing license in |

|

Whoa. This PR needed to be rebased (https://github.com/apache/arrow/pull/9305/commits) |

|

I am really sorry, @wesm . What were the consequences? From what I see, the main problem is that the list of co-authors is rather large on the commit message. |

|

I think it was still the recent rebase after 3.0 on master (which I think introduced some weirdness on the master branch?) causing this, I missed that it still had those commits in here. |

|

All the more reason not to be rebasing master going forward I would say |

…to improve performance This function `to_array_of_size` is about 8.3% of total instructions in the db-benchmark (aggregation) queries. This uses the PR apache#9293 The case of converting an int32 to an array improved by ~5x according to the microbenchmark: ``` to_array_of_size 100000 time: [55.501 us 55.627 us 55.809 us] change: [-82.457% -82.384% -82.299%] (p = 0.00 < 0.05) Performance has improved. Found 3 outliers among 100 measurements (3.00%) 2 (2.00%) high mild 1 (1.00%) high severe ``` And on TCPH query 1 (SF=1, 16 partitions). PR: ``` Query 1 iteration 0 took 90.8 ms Query 1 iteration 1 took 106.6 ms Query 1 iteration 2 took 101.1 ms Query 1 iteration 3 took 101.5 ms Query 1 iteration 4 took 96.9 ms Query 1 iteration 5 took 100.3 ms Query 1 iteration 6 took 99.6 ms Query 1 iteration 7 took 100.4 ms Query 1 iteration 8 took 104.2 ms Query 1 iteration 9 took 100.3 ms Query 1 avg time: 100.18 ms ``` Master: ``` Query 1 iteration 0 took 121.1 ms Query 1 iteration 1 took 123.4 ms Query 1 iteration 2 took 121.0 ms Query 1 iteration 3 took 121.0 ms Query 1 iteration 4 took 123.0 ms Query 1 iteration 5 took 121.7 ms Query 1 iteration 6 took 121.7 ms Query 1 iteration 7 took 120.2 ms Query 1 iteration 8 took 119.7 ms Query 1 iteration 9 took 121.4 ms Query 1 avg time: 121.43 ms ``` Closes apache#9305 from Dandandan/to_array_of_size_perf Lead-authored-by: Heres, Daniel <danielheres@gmail.com> Co-authored-by: Jorge C. Leitao <jorgecarleitao@gmail.com> Co-authored-by: Andrew Lamb <andrew@nerdnetworks.org> Co-authored-by: Sutou Kouhei <kou@clear-code.com> Co-authored-by: Neville Dipale <nevilledips@gmail.com> Co-authored-by: Dmitry Patsura <zaets28rus@gmail.com> Co-authored-by: Yibo Cai <yibo.cai@arm.com> Co-authored-by: Daniël Heres <danielheres@gmail.com> Co-authored-by: Kenta Murata <mrkn@mrkn.jp> Co-authored-by: Mahmut Bulut <vertexclique@gmail.com> Co-authored-by: Yordan Pavlov <yordan.pavlov@outlook.com> Co-authored-by: Max Burke <max@urbanlogiq.com> Co-authored-by: Ryan Jennings <ryan@ryanj.net> Co-authored-by: Benjamin Kietzman <bengilgit@gmail.com> Co-authored-by: Jörn Horstmann <joern.horstmann@signavio.com> Co-authored-by: Krisztián Szűcs <szucs.krisztian@gmail.com> Co-authored-by: Joris Van den Bossche <jorisvandenbossche@gmail.com> Co-authored-by: Johannes Müller <JohannesMueller@fico.com> Co-authored-by: mqy <meng.qingyou@gmail.com> Co-authored-by: Maarten A. Breddels <maartenbreddels@gmail.com> Co-authored-by: Antoine Pitrou <antoine@python.org> Co-authored-by: Matt Brubeck <mbrubeck@limpet.net> Co-authored-by: Weston Pace <weston.pace@gmail.com> Signed-off-by: Jorge C. Leitao <jorgecarleitao@gmail.com>

…to improve performance This function `to_array_of_size` is about 8.3% of total instructions in the db-benchmark (aggregation) queries. This uses the PR apache#9293 The case of converting an int32 to an array improved by ~5x according to the microbenchmark: ``` to_array_of_size 100000 time: [55.501 us 55.627 us 55.809 us] change: [-82.457% -82.384% -82.299%] (p = 0.00 < 0.05) Performance has improved. Found 3 outliers among 100 measurements (3.00%) 2 (2.00%) high mild 1 (1.00%) high severe ``` And on TCPH query 1 (SF=1, 16 partitions). PR: ``` Query 1 iteration 0 took 90.8 ms Query 1 iteration 1 took 106.6 ms Query 1 iteration 2 took 101.1 ms Query 1 iteration 3 took 101.5 ms Query 1 iteration 4 took 96.9 ms Query 1 iteration 5 took 100.3 ms Query 1 iteration 6 took 99.6 ms Query 1 iteration 7 took 100.4 ms Query 1 iteration 8 took 104.2 ms Query 1 iteration 9 took 100.3 ms Query 1 avg time: 100.18 ms ``` Master: ``` Query 1 iteration 0 took 121.1 ms Query 1 iteration 1 took 123.4 ms Query 1 iteration 2 took 121.0 ms Query 1 iteration 3 took 121.0 ms Query 1 iteration 4 took 123.0 ms Query 1 iteration 5 took 121.7 ms Query 1 iteration 6 took 121.7 ms Query 1 iteration 7 took 120.2 ms Query 1 iteration 8 took 119.7 ms Query 1 iteration 9 took 121.4 ms Query 1 avg time: 121.43 ms ``` Closes apache#9305 from Dandandan/to_array_of_size_perf Lead-authored-by: Heres, Daniel <danielheres@gmail.com> Co-authored-by: Jorge C. Leitao <jorgecarleitao@gmail.com> Co-authored-by: Andrew Lamb <andrew@nerdnetworks.org> Co-authored-by: Sutou Kouhei <kou@clear-code.com> Co-authored-by: Neville Dipale <nevilledips@gmail.com> Co-authored-by: Dmitry Patsura <zaets28rus@gmail.com> Co-authored-by: Yibo Cai <yibo.cai@arm.com> Co-authored-by: Daniël Heres <danielheres@gmail.com> Co-authored-by: Kenta Murata <mrkn@mrkn.jp> Co-authored-by: Mahmut Bulut <vertexclique@gmail.com> Co-authored-by: Yordan Pavlov <yordan.pavlov@outlook.com> Co-authored-by: Max Burke <max@urbanlogiq.com> Co-authored-by: Ryan Jennings <ryan@ryanj.net> Co-authored-by: Benjamin Kietzman <bengilgit@gmail.com> Co-authored-by: Jörn Horstmann <joern.horstmann@signavio.com> Co-authored-by: Krisztián Szűcs <szucs.krisztian@gmail.com> Co-authored-by: Joris Van den Bossche <jorisvandenbossche@gmail.com> Co-authored-by: Johannes Müller <JohannesMueller@fico.com> Co-authored-by: mqy <meng.qingyou@gmail.com> Co-authored-by: Maarten A. Breddels <maartenbreddels@gmail.com> Co-authored-by: Antoine Pitrou <antoine@python.org> Co-authored-by: Matt Brubeck <mbrubeck@limpet.net> Co-authored-by: Weston Pace <weston.pace@gmail.com> Signed-off-by: Jorge C. Leitao <jorgecarleitao@gmail.com>

This function

to_array_of_sizeis about 8.3% of total instructions in the db-benchmark (aggregation) queries.This uses the PR #9293

The case of converting an int32 to an array improved by ~5x according to the microbenchmark:

And on TCPH query 1 (SF=1, 16 partitions).

PR:

Master: