CoRefi is an embeddable web component and standalone suite for exhaustive within-document and cross-document coreference annotation. For a demo of the suite, see https://aribornstein.github.io/corefidemo/.

- Introduction

- Features

- Installation

- Usage

- Team

- Support

- Contributing

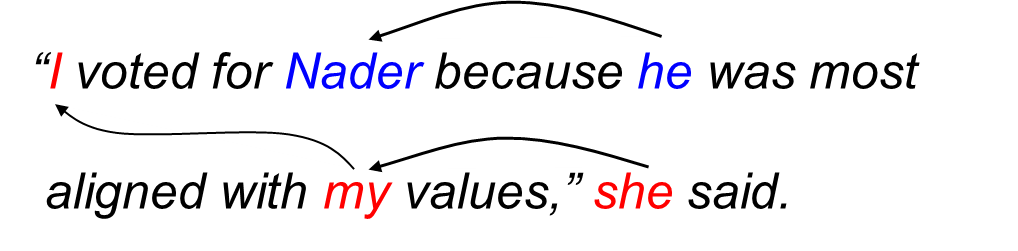

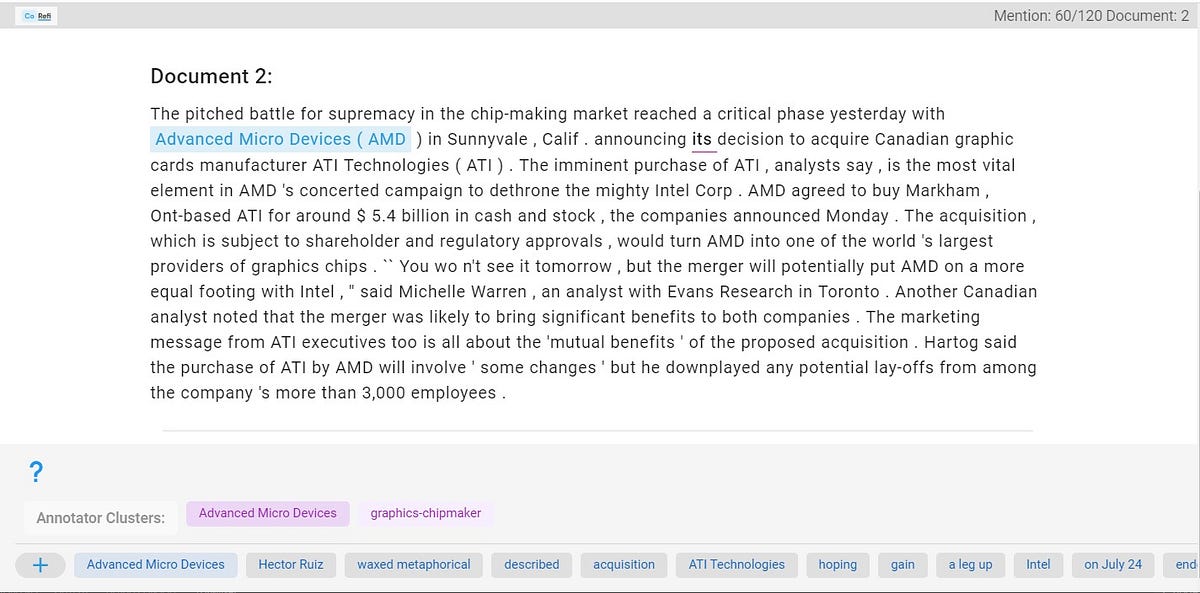

Coreference resolution is the task of clustering words and names that refer to the same concept, entity, or event. Coreference is an important NLP task for downstream applications such as abstractive summarization, reading comprehension, and information extraction. For an illustration, see the coreference example from Stanford NLP.
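As a small illustration of our own (separate from the Stanford NLP example), the following JavaScript sketch groups mentions into coreference clusters. The mention objects (`start`, `end`, `clusterId`) and the `buildClusters` helper are hypothetical and chosen only for illustration; they are not CoRefi's internal data format.

```javascript
// Hypothetical mention representation: an inclusive token span [start, end]
// plus the id of the coreference cluster the mention belongs to.
const tokens = ["Marie", "Curie", "won", "the", "prize", ".", "She", "shared", "it", "with", "her", "husband", "."];

const mentions = [
  { start: 0, end: 1, clusterId: 0 },   // "Marie Curie"
  { start: 3, end: 4, clusterId: 1 },   // "the prize"
  { start: 6, end: 6, clusterId: 0 },   // "She"
  { start: 8, end: 8, clusterId: 1 },   // "it"
  { start: 10, end: 10, clusterId: 0 }, // "her"
  { start: 10, end: 11, clusterId: 2 }, // "her husband"
];

// Group mention strings by cluster id: each cluster stands for one entity or event.
function buildClusters(tokens, mentions) {
  const clusters = new Map();
  for (const m of mentions) {
    const text = tokens.slice(m.start, m.end + 1).join(" ");
    if (!clusters.has(m.clusterId)) clusters.set(m.clusterId, []);
    clusters.get(m.clusterId).push(text);
  }
  return clusters;
}

for (const [id, texts] of buildClusters(tokens, mentions)) {
  console.log(`cluster ${id}: ${texts.join(" | ")}`);
}
// cluster 0: Marie Curie | She | her
// cluster 1: the prize | it
// cluster 2: her husband
```

Note that mentions may nest ("her" inside "her husband") while still belonging to different clusters.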

To ensure quality when crowdsourcing coreference annotation, annotators must first be trained to understand the nuances of coreference and then be given all the information needed to accurately assign every mention in a topic to a coreference cluster. The quality of an annotator's work must then be reviewable by a designated reviewer.

If you use this tool in your research, please cite our EMNLP 2020 CoRefi system demo paper:

```bibtex
@inproceedings{bornstein-etal-2020-corefi,
  title = {CoRefi: A Crowd Sourcing Suite for Coreference Annotation},
  author = {Aaron Bornstein and Arie Cattan and Ido Dagan},
  booktitle = {Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations},
  year = {2020}
}
```

CoRefi supports the full end-to-end process of exhaustive coreference annotation, which consists of three stages: onboarding, annotation, and reviewing.

For a full walkthrough of CoRefi's features, check out our system demonstration video on YouTube.

A trainee is provided with a sequence of mentions. For each mention, the trainee decides whether to update the mention's span and then assigns the mention either to a new cluster or to an existing one. At any point, the trainee may view the mentions in any existing cluster. If the trainee incorrectly changes a mention span or assigns a mention to the wrong cluster, they are notified. Additionally, after certain decisions, the tool explains an important rule of coreference. Once all mentions are clustered, the trainee can submit the job.
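To make the feedback step concrete, here is a minimal sketch of how a trainee's decision could be checked against a gold annotation. It is not CoRefi's implementation: the `gold` table, the `order` array, the decision shape, and `checkDecision` are all hypothetical. It assumes earlier mistakes are corrected before the trainee moves on, so choosing an earlier mention's cluster can be checked against that mention's gold cluster.

```javascript
// Hypothetical gold annotation for a three-mention onboarding job:
// mention id -> gold span (inclusive token indices) and gold cluster.
const gold = {
  m1: { start: 0, end: 1, cluster: "A" },
  m2: { start: 3, end: 4, cluster: "B" },
  m3: { start: 6, end: 6, cluster: "A" },
};
// Order in which the mentions are presented to the trainee.
const order = ["m1", "m2", "m3"];

// A decision gives the trainee's span and a target: "new" for a new cluster,
// or the id of an earlier mention whose cluster the trainee chose.
// Returns feedback messages (an empty list when the decision is correct).
function checkDecision(decision) {
  const g = gold[decision.mentionId];
  const feedback = [];
  if (decision.start !== g.start || decision.end !== g.end) {
    feedback.push("span differs from the gold span");
  }
  const earlier = order.slice(0, order.indexOf(decision.mentionId));
  if (decision.target === "new") {
    // A new cluster is only correct if no earlier mention shares the gold cluster.
    if (earlier.some((id) => gold[id].cluster === g.cluster)) {
      feedback.push("mention belongs to an existing cluster");
    }
  } else if (gold[decision.target].cluster !== g.cluster) {
    feedback.push("mention assigned to the wrong cluster");
  }
  return feedback;
}

console.log(checkDecision({ mentionId: "m3", start: 6, end: 6, target: "m1" }).length); // 0
console.log(checkDecision({ mentionId: "m3", start: 6, end: 6, target: "new" }).join("; ")); // mention belongs to an existing cluster
```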

A sample JSON configuration file for onboarding is included in the repository. For more information on tool configuration, see the configuration section.

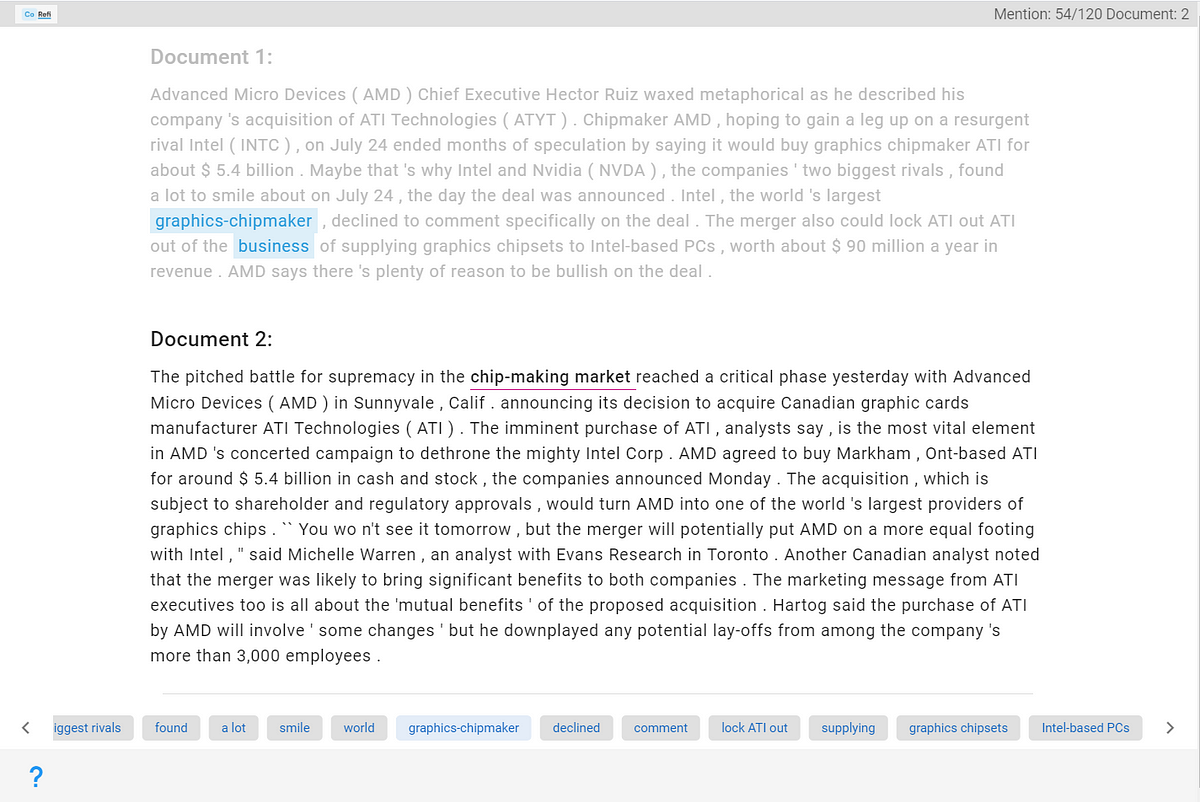

The annotator is provided with a sequence of mentions. For each mention, the annotator decides whether to update the mention's span and then assigns the mention either to a new cluster or to an existing one. At any point, the annotator can reassign a previously assigned mention to another cluster or view the mentions in an existing cluster. Once all mentions are clustered, the annotator can submit the job.
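The annotation step can be modeled as a mapping from each mention to exactly one cluster. The following sketch is a hypothetical in-memory model (`AnnotationState` and its methods are illustrative names, not CoRefi's API) showing assignment to a new cluster, assignment to an existing cluster, and reassignment.

```javascript
// Hypothetical in-memory model of the annotation step: each mention maps to
// exactly one cluster id, and a cluster's members are derived from that map.
class AnnotationState {
  constructor() {
    this.clusterOf = new Map(); // mention id -> cluster id
    this.nextClusterId = 0;
  }

  // Put the mention into a brand-new cluster and return the new cluster id.
  assignToNewCluster(mentionId) {
    const clusterId = this.nextClusterId++;
    this.clusterOf.set(mentionId, clusterId);
    return clusterId;
  }

  // Assign, or reassign, a mention to a cluster that already has members.
  assignToCluster(mentionId, clusterId) {
    if (this.mentionsIn(clusterId).length === 0) {
      throw new Error(`cluster ${clusterId} has no mentions`);
    }
    this.clusterOf.set(mentionId, clusterId);
  }

  // Mentions currently in a cluster, in the order they were first assigned.
  mentionsIn(clusterId) {
    return [...this.clusterOf]
      .filter(([, c]) => c === clusterId)
      .map(([mentionId]) => mentionId);
  }
}

const state = new AnnotationState();
const first = state.assignToNewCluster("m1"); // cluster 0
state.assignToNewCluster("m2");               // cluster 1
state.assignToCluster("m3", first);
state.assignToCluster("m2", first);           // reassign m2 to cluster 0
console.log(state.mentionsIn(first).join(", ")); // m1, m2, m3
```

Storing one cluster id per mention makes reassignment a single update and guarantees that a mention never ends up in two clusters at once.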

A sample JSON configuration file for annotation is included in the repository. For more information on tool configuration, see the configuration section.

The reviewer is provided with a sequential list of mentions. For each mention, the reviewer is shown a highlighted list of the clusters that the annotator believed the mention belongs to. The reviewer then decides whether to update the mention's span and whether to accept or change the annotator's assignment.
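The following sketch illustrates the review step under simplifying assumptions: one reviewer decision per mention, span corrections omitted, and a hypothetical decision format (`action: "agree"` or `action: "update"` with a replacement `clusterId`). It merges the decisions into final assignments and reports the share of annotator assignments the reviewer accepted. None of these names come from CoRefi itself.

```javascript
// Hypothetical inputs: the annotator's cluster per mention, and one reviewer
// decision per mention ("agree", or "update" with a replacement cluster id).
// Span corrections are left out to keep the sketch short.
const annotatorAssignments = { m1: 0, m2: 1, m3: 0, m4: 1 };
const reviewerDecisions = {
  m1: { action: "agree" },
  m2: { action: "agree" },
  m3: { action: "update", clusterId: 1 },
  m4: { action: "agree" },
};

// Apply the reviewer's decisions and report the share of accepted assignments.
function applyReview(assignments, decisions) {
  const final = {};
  let agreed = 0;
  for (const [mentionId, clusterId] of Object.entries(assignments)) {
    const decision = decisions[mentionId];
    if (decision.action === "agree") {
      final[mentionId] = clusterId;
      agreed++;
    } else {
      final[mentionId] = decision.clusterId;
    }
  }
  return { final, agreement: agreed / Object.keys(assignments).length };
}

const { final, agreement } = applyReview(annotatorAssignments, reviewerDecisions);
console.log(final.m3, agreement); // 1 0.75
```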

A sample JSON configuration file for reviewing is included in the repository. For more information on tool configuration, see the configuration section.

Clone this repo to your local machine:

```shell
$ git clone https://github.com/aribornstein/cdc-app.git
```

Then run the following npm commands to install and run the tool locally:

```shell
$ npm install
$ npm run serve
```

To embed CoRefi in your own page, add the following code to your HTML file:

```html
<!-- CoRefi uses Vuetify 2, which requires Vue 2, so pin Vue to major version 2 -->
<script src="https://unpkg.com/vue@2"></script>
<script src="https://github.com/aribornstein/CoRefi/releases/download/1.0.0/co-refi.min.js"></script>
<link href="https://fonts.googleapis.com/css?family=Roboto:100,300,400,500,700,900" rel="stylesheet">
<link href="https://cdn.jsdelivr.net/npm/@mdi/font@5.x/css/materialdesignicons.min.css" rel="stylesheet">
<link href="https://cdn.jsdelivr.net/npm/vuetify@2.x/dist/vuetify.min.css" rel="stylesheet">
<link href="https://fonts.googleapis.com/css?family=Material+Icons" rel="stylesheet">
```

The app can then be embedded as a web component, with the HTML-escaped JSON configuration passed in the `json` attribute:

```html
<co-refi json="{html escaped json config}"></co-refi>
```

Keyboard shortcuts:

- Assign Mention to Current Cluster: SPACE

- Assign Mention to New Cluster: Ctrl + SPACE (Windows) or Alt + SPACE (macOS)

- Select Cluster: Click on a previously assigned mention or use the ← and → arrow keys

- Select Mention to Reassign: Ctrl + Click (Windows) or Alt + Click (macOS) the mention
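The keyboard shortcuts above can be expressed as a small mapping from key events to actions. This is a hypothetical sketch, not CoRefi's source: `shortcutAction`, the `isMac` flag, and the action names are illustrative. It reads the standard `KeyboardEvent` fields `code`, `ctrlKey`, and `altKey`, so plain objects can stand in for real events.

```javascript
// Hypothetical sketch: map a keydown event to one of the keyboard actions above.
// Only the event fields used here (code, ctrlKey, altKey) are required, so plain
// objects can stand in for real KeyboardEvent instances.
function shortcutAction(event, isMac) {
  // Ctrl on Windows, Alt (Option) on macOS, as listed above.
  const modifierHeld = isMac ? event.altKey : event.ctrlKey;
  // event.code names the physical key, so Option+Space on macOS (which can
  // produce a non-breaking space as event.key) is still recognized.
  if (event.code === "Space") {
    return modifierHeld ? "assignToNewCluster" : "assignToCurrentCluster";
  }
  if (event.code === "ArrowLeft") return "selectPreviousCluster";
  if (event.code === "ArrowRight") return "selectNextCluster";
  return null; // not one of the shortcuts above
}

console.log(shortcutAction({ code: "Space", ctrlKey: false, altKey: false }, false)); // assignToCurrentCluster
console.log(shortcutAction({ code: "Space", ctrlKey: true, altKey: false }, false)); // assignToNewCluster
console.log(shortcutAction({ code: "Space", ctrlKey: false, altKey: true }, true)); // assignToNewCluster
```

In a browser, such a function could be called from a `document.addEventListener("keydown", ...)` handler. Matching on `event.code` rather than `event.key` keeps the shortcuts independent of keyboard layout and modifier-generated characters.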

CoRefi can be configured with a simple JSON schema. Example configuration files for onboarding, annotation, and reviewing can be found in the repository at `src\data\mentions.json`.
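Because the configuration is passed inside the `json` attribute of `<co-refi>`, the serialized JSON must be HTML-escaped so that its double quotes do not end the attribute early. A minimal sketch, assuming a plain double-quoted attribute; `escapeHtmlAttribute`, `coRefiElement`, and the placeholder config are illustrative names, not part of CoRefi:

```javascript
// Escape the characters that are special inside a double-quoted HTML attribute.
// "&" is replaced first so that the entities added afterwards are not re-escaped.
function escapeHtmlAttribute(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Serialize a configuration object and embed it in a <co-refi> element.
function coRefiElement(config) {
  return `<co-refi json="${escapeHtmlAttribute(JSON.stringify(config))}"></co-refi>`;
}

// Placeholder config: this key is illustrative, not CoRefi's actual schema.
const config = { example: 'She said "hi" & left <quietly>' };
console.log(coRefiElement(config));
// <co-refi json="{&quot;example&quot;:&quot;She said \&quot;hi\&quot; &amp; left &lt;quietly&gt;&quot;}"></co-refi>
```

The browser decodes the entities when it reads the attribute, so the component receives the original JSON string.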

The Notebooks folder contains Python example code for:

- Generating CoRefi JSON configuration files from raw text
- Converting JSON config files into HTML-escaped embeddable input
- Preparation code for Mechanical Turk
- Converting JSON results to CoNLL
- Converting annotation results to a reviewer configuration file
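The notebooks perform the CoNLL conversion in Python. The following JavaScript sketch only illustrates the idea behind the CoNLL-2012 coreference column; it is not the notebooks' code. It assumes a hypothetical result format in which each mention has an inclusive token span and a cluster id, and it emits only the token and coreference columns, not the full multi-column CoNLL layout. Bracket entries that share a token are kept in input order.

```javascript
// Hypothetical result format: each mention has an inclusive token span
// [start, end] and the id of its coreference cluster.
// Returns one "token<TAB>coref" line per token, using the CoNLL-2012 bracket
// notation: "(id" opens a mention, "id)" closes it, "(id)" is a one-token
// mention, entries on the same token are joined with "|", and "-" means none.
function toConllCorefLines(tokens, mentions) {
  const cells = tokens.map(() => []);
  for (const { start, end, clusterId } of mentions) {
    if (start === end) {
      cells[start].push(`(${clusterId})`);
    } else {
      cells[start].push(`(${clusterId}`);
      cells[end].push(`${clusterId})`);
    }
  }
  return tokens.map((token, i) => `${token}\t${cells[i].length > 0 ? cells[i].join("|") : "-"}`);
}

const tokens = ["Marie", "Curie", "won", ".", "She", "smiled", "."];
const mentions = [
  { start: 0, end: 1, clusterId: 0 }, // "Marie Curie"
  { start: 4, end: 4, clusterId: 0 }, // "She"
];
console.log(toConllCorefLines(tokens, mentions).join("\n"));
```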

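The following example code extracts the annotation results from a CoRefi instance embedded in the page:

```javascript
// Vue instance behind the first <co-refi> element on the page.
const corefi = document.getElementsByTagName("co-refi")[0].vueComponent;
// Collect the document tokens and the mentions viewed during the job.
const results = { tokens: corefi.tokens, mentions: corefi.viewedMentions };
// Serialize the results, e.g. for submission to a crowdsourcing platform.
const resultsJson = JSON.stringify(results);
```

People/Contribution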

- Ari Bornstein and Arie Cattan - Core Team

- Ido Dagan - Advisor

- Uri Fried - Lead Designer

- Ayal Klein & Paul Roit - Crowd Sourcing Review

- Amir Cohen - Architectural Review

- Sharon Oren - Code Review

- Chris Noring & Asaf Amrami

- Ori Shapira, Daniela Stepanov, Ori Ernst, Yehudit Meged, Valentina Pyatkin, Moshe Uzan, Ofer Sabo - Feedback and Review

Reach out to me at one of the following places!

- Website at https://aribornstein.github.io/corefidemo/

- Twitter at BIUNLP

To get started...

- Option 1
  - 🍴 Fork this repo!

- Option 2
  - 👯 Clone this repo to your local machine using `https://github.com/aribornstein/CoRefi.git`

- HACK AWAY! 🔨🔨🔨

- 🔃 Create a new pull request using https://github.com/aribornstein/CoRefi/compare/.