This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

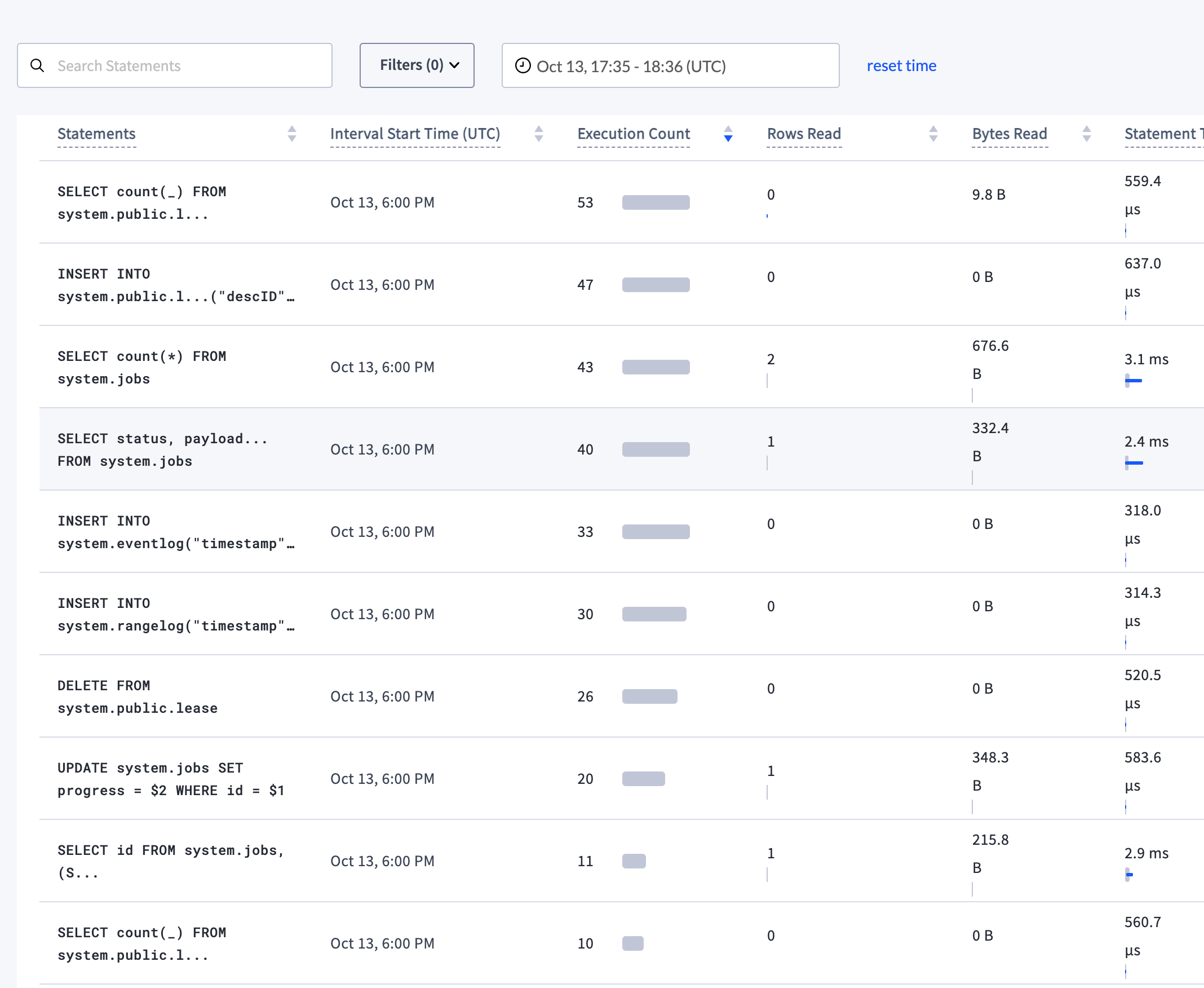

71225: rangefeedbuffer: introduce a rangefeed buffer r=irfansharif a=irfansharif

Buffer provides a thin memory-bounded buffer to sit on top of a rangefeed. It accumulates raw rangefeed events[^1], which can be flushed out in timestamp-sorted order en masse whenever the rangefeed frontier is bumped. If we accumulate more events than the limit allows for, we error out to the caller. We need such a thing in both #69614 and #69661.

[^1]: Rangefeed error events are propagated to the caller; checkpoint events are discarded.

Release note: None

First commit is from #71256.

Co-authored-by: Arul Ajmani <arula@cockroachlabs.com>

71534: ui/sql: show summarized statements in the statements table r=lindseyjin a=lindseyjin

Resolves #27021

Previously, statements on the statements page hid too much information. There were complaints that it was difficult to disambiguate between statements without having to view the full query in the tooltips.

The first commit in this patch implemented back-end changes to add a new metadata field for summarized queries, as well as formatting functions. This second commit implements additional logic to pass that new metadata to the front-end and display it in the Statements Table.

Currently, we only create summaries of SELECT, INSERT/UPSERT, and UPDATE statements in the back-end. For all other statement types, we will continue to use the existing summary system.

Release note (ui change): Show new statement summaries on the Statements page. This applies to SELECT, INSERT/UPSERT, and UPDATE statements, and will enable them to be more detailed and less ambiguous than our previous formats.

71625: clusterversion: add a (disabled) assertion that binary version is latest r=dt a=dt

This is intended to be flipped on and the release version updated when the cluster version mint commit is backported to a release branch. Doing so would then prevent accidentally backporting any future cluster versions without causing this to panic in all tests.

Release note: None

Co-authored-by: irfan sharif <irfanmahmoudsharif@gmail.com>
Co-authored-by: Lindsey Jin <lindsey.jin@cockroachlabs.com>
Co-authored-by: David Taylor <tinystatemachine@gmail.com>

Showing 34 changed files with 776 additions and 118 deletions.


| Original file line number | Diff line number | Diff line change |
|---|---|---|
| @@ -0,0 +1,45 @@ | ||
| load("@io_bazel_rules_go//go:def.bzl", "go_library", "go_test") | ||
| | ||
| go_library( | ||
| name = "buffer", | ||
| srcs = ["buffer.go"], | ||
| importpath = "github.com/cockroachdb/cockroach/pkg/kv/kvclient/rangefeed/buffer", | ||
| visibility = ["//visibility:public"], | ||
| deps = [ | ||
| "//pkg/ccl/changefeedccl/changefeedbase", | ||
| "//pkg/jobs/jobspb", | ||
| "//pkg/roachpb:with-mocks", | ||
| "//pkg/settings", | ||
| "//pkg/util/hlc", | ||
| "//pkg/util/log/logcrash", | ||
| "//pkg/util/mon", | ||
| "//pkg/util/quotapool", | ||
| "//pkg/util/syncutil", | ||
| "//pkg/util/timeutil", | ||
| "@com_github_cockroachdb_errors//:errors", | ||
| ], | ||
| ) | ||
| | ||
| go_library( | ||
| name = "rangefeedbuffer", | ||
| srcs = ["buffer.go"], | ||
| importpath = "github.com/cockroachdb/cockroach/pkg/kv/kvclient/rangefeed/rangefeedbuffer", | ||
| visibility = ["//visibility:public"], | ||
| deps = [ | ||
| "//pkg/util/hlc", | ||
| "//pkg/util/log", | ||
| "//pkg/util/syncutil", | ||
| "@com_github_cockroachdb_errors//:errors", | ||
| ], | ||
| ) | ||
| | ||
| go_test( | ||
| name = "rangefeedbuffer_test", | ||
| srcs = ["buffer_test.go"], | ||
| deps = [ | ||
| ":rangefeedbuffer", | ||
| "//pkg/util/hlc", | ||
| "//pkg/util/leaktest", | ||
| "@com_github_stretchr_testify//require", | ||
| ], | ||
| ) | ||


| Original file line number | Diff line number | Diff line change |
|---|---|---|
| @@ -0,0 +1,102 @@ | ||
| // Copyright 2021 The Cockroach Authors. | ||
| // | ||
| // Use of this software is governed by the Business Source License | ||
| // included in the file licenses/BSL.txt. | ||
| // | ||
| // As of the Change Date specified in that file, in accordance with | ||
| // the Business Source License, use of this software will be governed | ||
| // by the Apache License, Version 2.0, included in the file | ||
| // licenses/APL.txt. | ||
| | ||
| package rangefeedbuffer | ||
| | ||
| import ( | ||
| "context" | ||
| "sort" | ||
| | ||
| "github.com/cockroachdb/cockroach/pkg/util/hlc" | ||
| "github.com/cockroachdb/cockroach/pkg/util/log" | ||
| "github.com/cockroachdb/cockroach/pkg/util/syncutil" | ||
| "github.com/cockroachdb/errors" | ||
| ) | ||
| | ||
| // ErrBufferLimitExceeded is returned by the buffer when attempting to add more | ||
| // events than the limit the buffer is configured with. | ||
| var ErrBufferLimitExceeded = errors.New("buffer limit exceeded") | ||
| | ||
| // Event is the unit of what can be added to the buffer. | ||
| type Event interface { | ||
| Timestamp() hlc.Timestamp | ||
| } | ||
| | ||
| // Buffer provides a thin memory-bounded buffer to sit on top of a rangefeed. It | ||
| // accumulates raw events which can then be flushed out in timestamp sorted | ||
| // order en-masse whenever the rangefeed frontier is bumped. If we accumulate | ||
| // more events than the limit allows for, we error out to the caller. | ||
| type Buffer struct { | ||
| limit int | ||
| | ||
| mu struct { | ||
| syncutil.Mutex | ||
| | ||
| events | ||
| frontier hlc.Timestamp | ||
| } | ||
| } | ||
| | ||
| // New constructs a Buffer with the provided limit. | ||
| func New(limit int) *Buffer { | ||
| return &Buffer{limit: limit} | ||
| } | ||
| | ||
| // Add adds the given entry to the buffer. | ||
| func (b *Buffer) Add(ctx context.Context, ev Event) error { | ||
| b.mu.Lock() | ||
| defer b.mu.Unlock() | ||
| | ||
| if ev.Timestamp().LessEq(b.mu.frontier) { | ||
| // If the entry is at a timestamp less than or equal to our last known | ||
| // frontier, we can discard it. | ||
| return nil | ||
| } | ||
| | ||
| if b.mu.events.Len()+1 > b.limit { | ||
| return ErrBufferLimitExceeded | ||
| } | ||
| | ||
| b.mu.events = append(b.mu.events, ev) | ||
| return nil | ||
| } | ||
| | ||
| // Flush returns the timestamp sorted list of accumulated events with timestamps | ||
| // less than or equal to the provided frontier timestamp. The timestamp is | ||
| // recorded (expected to monotonically increase), and future events with | ||
| // timestamps less than or equal to it are discarded. | ||
| func (b *Buffer) Flush(ctx context.Context, frontier hlc.Timestamp) (events []Event) { | ||
| b.mu.Lock() | ||
| defer b.mu.Unlock() | ||
| | ||
| if frontier.Less(b.mu.frontier) { | ||
| log.Fatalf(ctx, "frontier timestamp regressed: saw %s, previously %s", frontier, b.mu.frontier) | ||
| } | ||
| | ||
| // Accumulate all events with timestamps <= the given timestamp in sorted | ||
| // order. | ||
| sort.Sort(&b.mu.events) | ||
| idx := sort.Search(len(b.mu.events), func(i int) bool { | ||
| return !b.mu.events[i].Timestamp().LessEq(frontier) | ||
| }) | ||
| | ||
| events = b.mu.events[:idx] | ||
| b.mu.events = b.mu.events[idx:] | ||
| b.mu.frontier = frontier | ||
| return events | ||
| } | ||
| | ||
| type events []Event | ||
| | ||
| var _ sort.Interface = (*events)(nil) | ||
| | ||
| func (es *events) Len() int { return len(*es) } | ||
| func (es *events) Less(i, j int) bool { return (*es)[i].Timestamp().Less((*es)[j].Timestamp()) } | ||
| func (es *events) Swap(i, j int) { (*es)[i], (*es)[j] = (*es)[j], (*es)[i] } | ||
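
To make the `Add`/`Flush` semantics in the diff above concrete, here is a small self-contained walk-through. It is a simplified sketch rather than the committed code: timestamps are plain `int64` values instead of `hlc.Timestamp`, there is no mutex, a regressing frontier is not checked, and the names `miniBuffer`, `add`, `flush`, and `errLimitExceeded` are hypothetical.

```go
package main

import (
	"errors"
	"fmt"
	"sort"
)

// errLimitExceeded plays the role of ErrBufferLimitExceeded (hypothetical name).
var errLimitExceeded = errors.New("buffer limit exceeded")

// miniBuffer is a single-goroutine stand-in for Buffer
// (assumption: int64 timestamps, no locking).
type miniBuffer struct {
	limit    int
	frontier int64
	events   []int64
}

// add buffers ts unless it is at or below the last frontier (silently
// dropped) or the buffer is already at its limit (error).
func (b *miniBuffer) add(ts int64) error {
	if ts <= b.frontier {
		return nil
	}
	if len(b.events)+1 > b.limit {
		return errLimitExceeded
	}
	b.events = append(b.events, ts)
	return nil
}

// flush sorts the buffered timestamps, returns those <= frontier, keeps the
// rest buffered, and records the new frontier.
func (b *miniBuffer) flush(frontier int64) []int64 {
	sort.Slice(b.events, func(i, j int) bool { return b.events[i] < b.events[j] })
	// sort.Search finds the first index whose timestamp is strictly above
	// the frontier; everything before it is flushed.
	idx := sort.Search(len(b.events), func(i int) bool {
		return b.events[i] > frontier
	})
	out := b.events[:idx]
	b.events = b.events[idx:]
	b.frontier = frontier
	return out
}

func main() {
	b := &miniBuffer{limit: 3}
	for _, ts := range []int64{30, 10, 20} {
		if err := b.add(ts); err != nil {
			fmt.Println("unexpected error:", err)
		}
	}
	fmt.Println("add(40):", b.add(40))       // buffer already holds 3 events
	fmt.Println("flush(20):", b.flush(20))   // events at or below the frontier
	fmt.Println("add(15):", b.add(15))       // at or below the frontier: dropped
	fmt.Println("still buffered:", b.events) // only the event above the frontier
}
```

As in the committed `Flush`, the returned slice aliases the buffer's backing array, so this sketch assumes the caller treats it as read-only.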
