
[packetbeat] memory usage is growing continuously when capturing redis #12657

Comments

I also ran a test with output to a local file, but the problem still exists.

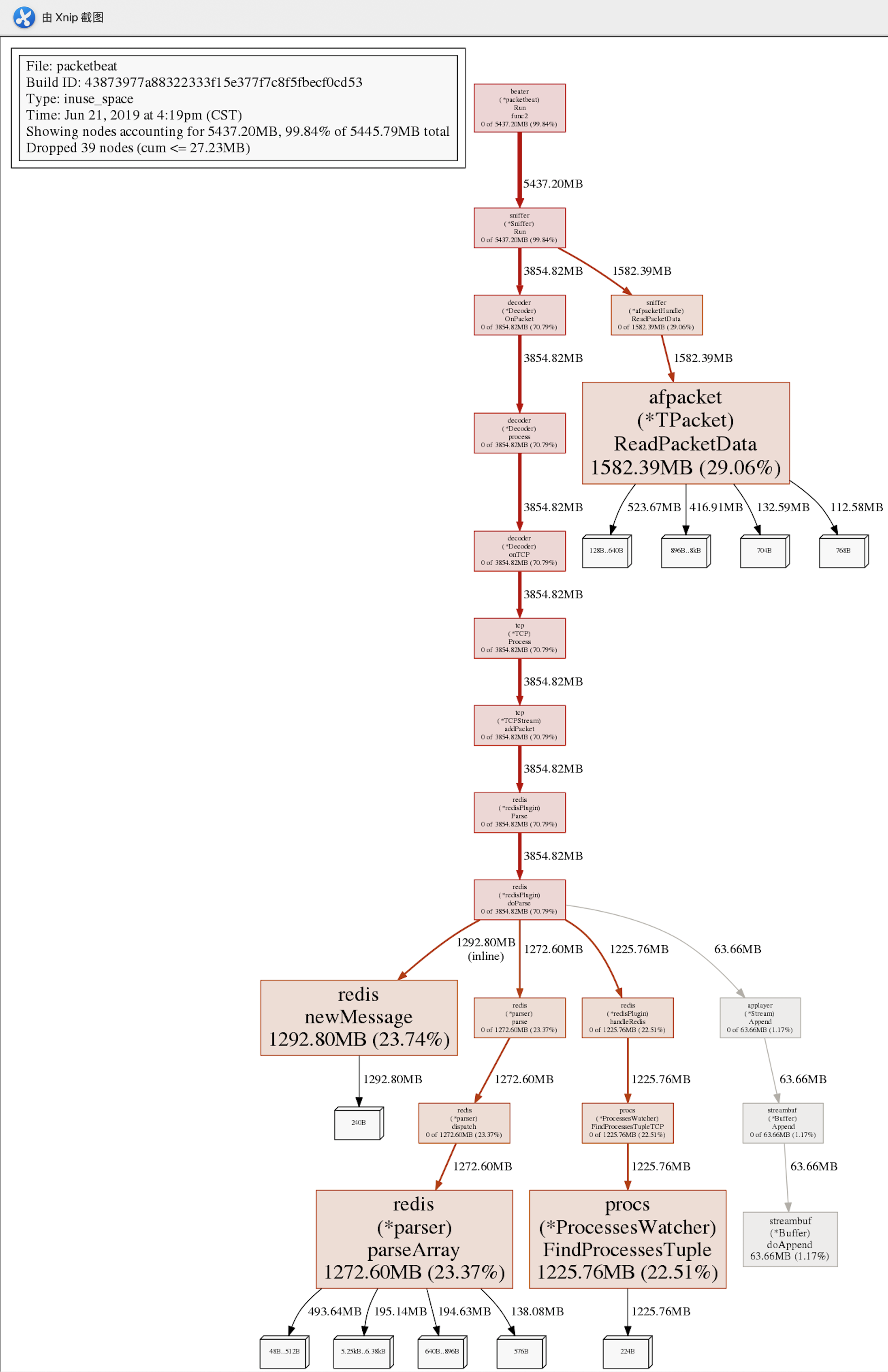

Hi @romber2001. Looking at the code and the provided pprof output, I think the logical explanation is that Packetbeat is capturing a lot of Redis requests but not the responses. This currently causes it to leak memory, as it accumulates the requests indefinitely while waiting for a response to be received (that's something that should be fixed). It might be caused by a lot of packet loss / retransmissions in the network. Can we confirm by having either:

Hi @romber2001, thanks for the files. They confirm my suspicions; I will work on a fix.

@adriansr, thanks for your help. Is this caused by the server hosting only slaves?

@romber2001 as I understand from the pcap, what causes the memory usage in this case is the replication streams. These consist of the master sending requests to the slave, and there is never a response. The Redis processor in Packetbeat is not prepared for this.

@adriansr, is there any workaround to set a hard limit on the memory usage of Packetbeat?

This patch limits the amount of memory that can be used by outstanding requests by adding two new configuration options to the Redis protocol:

- max_queue_size: Limits the total size of the queued requests (in bytes).
- max_queue_length: Limits the number of requests queued.

These limits apply to individual connections. The defaults are to queue up to 1MB or 20,000 requests. This is enough to limit the currently unbounded memory used by replication streams while still allowing for pipelined requests.

Closes elastic#12657
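To make the idea of the two limits concrete, here is a minimal Go sketch of a per-connection queue bounded by both total bytes and message count. It is not the code from the patch: the type and function names are hypothetical, and evicting the oldest entry when a limit is exceeded is an assumption made for illustration.

```go
package main

import "fmt"

// request stands in for a parsed Redis request awaiting its response.
type request struct {
	payload []byte
}

// boundedQueue holds the outstanding requests of ONE connection and enforces
// a byte limit and a message-count limit (hypothetical names, loosely
// mirroring max_queue_size and max_queue_length).
type boundedQueue struct {
	items     []request
	bytes     int
	maxBytes  int
	maxLength int
}

// push appends r, then evicts the oldest entries until both limits hold.
// It returns the number of evicted requests. Eviction order is an assumption.
func (q *boundedQueue) push(r request) int {
	q.items = append(q.items, r)
	q.bytes += len(r.payload)
	dropped := 0
	for len(q.items) > 0 && (len(q.items) > q.maxLength || q.bytes > q.maxBytes) {
		q.bytes -= len(q.items[0].payload)
		q.items[0] = request{} // release the payload for garbage collection
		q.items = q.items[1:]
		dropped++
	}
	return dropped
}

// pop removes the oldest request, as would happen when a response arrives.
func (q *boundedQueue) pop() (request, bool) {
	if len(q.items) == 0 {
		return request{}, false
	}
	r := q.items[0]
	q.bytes -= len(r.payload)
	q.items[0] = request{}
	q.items = q.items[1:]
	return r, true
}

func main() {
	// Defaults quoted in the patch description: 1MB or 20,000 requests.
	q := &boundedQueue{maxBytes: 1 << 20, maxLength: 20000}
	dropped := 0
	// Simulate a replication stream: requests keep arriving, responses never do.
	for i := 0; i < 100000; i++ {
		dropped += q.push(request{payload: make([]byte, 64)})
	}
	fmt.Println(len(q.items), q.bytes, dropped) // prints "16384 1048576 83616"
}
```

With 64-byte requests the byte limit is reached first, so the queue settles at 16384 entries however long the one-sided stream runs, while a normal client that receives responses drains the queue through `pop` and never comes near either limit.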


@romber2001 I've submitted a patch. I don't have a workaround other than suggesting you set a bpf_filter that ignores traffic between servers (replication). Something like this (untested!):
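The snippet from the original comment is not preserved here. As a rough and equally untested illustration of the idea, the Go helper below builds a pcap filter expression that keeps traffic on the Redis port but drops packets whose source and destination are both Redis servers. The function name, the port, and the addresses are hypothetical placeholders, not values from this thread.

```go
package main

import (
	"fmt"
	"strings"
)

// replicationExcludingFilter returns a pcap filter expression that matches
// TCP traffic on the given port, except packets where BOTH endpoints are in
// the servers list (assumed to be server-to-server replication traffic).
func replicationExcludingFilter(port int, servers []string) string {
	if len(servers) == 0 {
		return fmt.Sprintf("tcp port %d", port)
	}
	src := make([]string, len(servers))
	dst := make([]string, len(servers))
	for i, s := range servers {
		src[i] = "src host " + s
		dst[i] = "dst host " + s
	}
	return fmt.Sprintf("tcp port %d and not ((%s) and (%s))",
		port, strings.Join(src, " or "), strings.Join(dst, " or "))
}

func main() {
	// Hypothetical addresses; replace them with the real Redis server IPs.
	fmt.Println(replicationExcludingFilter(6379, []string{"10.0.0.1", "10.0.0.2"}))
}
```

The resulting string is what would go into the bpf_filter setting. Two caveats with this approach: it also hides any ordinary client that happens to run on one of the Redis servers and talks to another, and it does nothing for replication between two instances on the same host if that traffic is captured as well.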


This patch limits the amount of memory that can be used by outstanding requests by adding two new configuration options to the Redis protocol: - max_queue_size: Limits the total size of the queued requests (in bytes). - max_queue_length: Limits the number of requests queued. These limits apply to individual connections. The defaults are to queue up to 1MB or 20.000 requests. This is enough to limit the currently unbounded memory used by replication streams while at the same time allow for pipelining requests. Closes elastic#12657 (cherry picked from commit 1275ee8)


@adriansr, thanks for your effort!!!

@romber2001 nope, this was too late for 7.2.0. Expect it in 7.2.1 and 7.3.0.


@adriansr got it, I'll apply the patch to v7.2.0 and test it.

@romber2001 it's a limit per Redis connection.

@adriansr so if there are 1000 clients connected to the Redis server and queue_max_bytes is 1MB, Packetbeat will use up to 1GB of RAM?

It's an upper limit, @romber2001. This queue will only fill up in the case of replication connections. For regular clients the queue will usually be empty.
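The arithmetic behind that upper limit can be sketched in a few lines of Go. The function name is hypothetical, and the calculation only counts the bytes of queued requests, not Packetbeat's other memory use.

```go
package main

import "fmt"

// worstCaseQueueBytes returns the upper bound on memory held by queued
// requests if every connection fills its queue to the per-connection limit.
func worstCaseQueueBytes(connections, perConnLimitBytes int64) int64 {
	return connections * perConnLimitBytes
}

func main() {
	const mib = 1 << 20
	// 1000 connections, each allowed to queue up to 1MB (figures from the thread).
	total := worstCaseQueueBytes(1000, 1*mib)
	fmt.Printf("%d bytes (about %.2f GiB)\n", total, float64(total)/(1<<30))
	// prints "1048576000 bytes (about 0.98 GiB)"
}
```

That figure is only reached if all 1000 connections behave like replication streams. If, say, only 2 of them do, the bound contributed by full queues is about 2 MiB, since the queues of regular clients stay close to empty.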

@adriansr thanks for your explanation, I finally got the point. Thanks again.


…eplication (#12752) This patch limits the amount of memory that can be used by outstanding requests by adding two new configuration options to the Redis protocol: - max_queue_size: Limits the total size of the queued requests (in bytes). - max_queue_length: Limits the number of requests queued. These limits apply to individual connections. The defaults are to queue up to 1MB or 20.000 requests. This is enough to limit the currently unbounded memory used by replication streams while at the same time allow for pipelining requests. Closes #12657 (cherry picked from commit 1275ee8)

@adriansr hi again. Just some feedback for you and anyone else keeping an eye on this issue: I've run Packetbeat for nearly 24 hours with the new patch and memory usage no longer grows. Problem solved.

Beats version 6.8.2 Limit memory usage of Redis replication sessions. https://github.com/elastic/beats/issues/12657

…ed by replication (elastic#12752) This patch limits the amount of memory that can be used by outstanding requests by adding two new configuration options to the Redis protocol: - max_queue_size: Limits the total size of the queued requests (in bytes). - max_queue_length: Limits the number of requests queued. These limits apply to individual connections. The defaults are to queue up to 1MB or 20.000 requests. This is enough to limit the currently unbounded memory used by replication streams while at the same time allow for pipelining requests. Closes elastic#12657 (cherry picked from commit 4d0ea18)

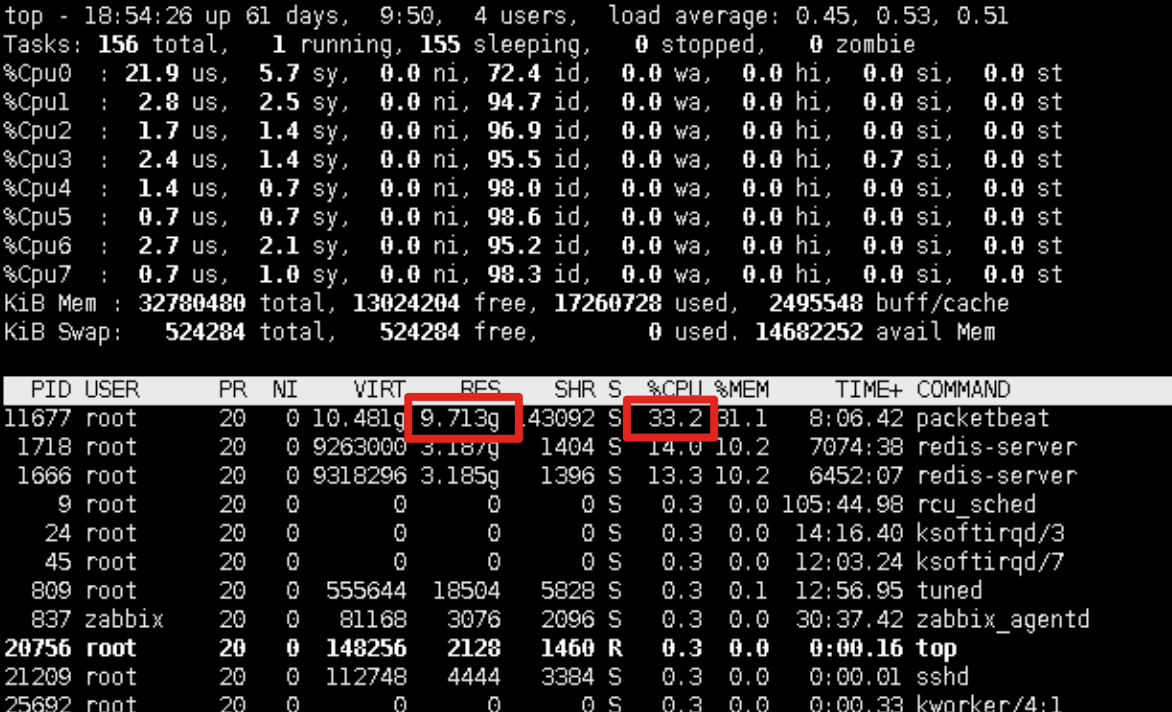

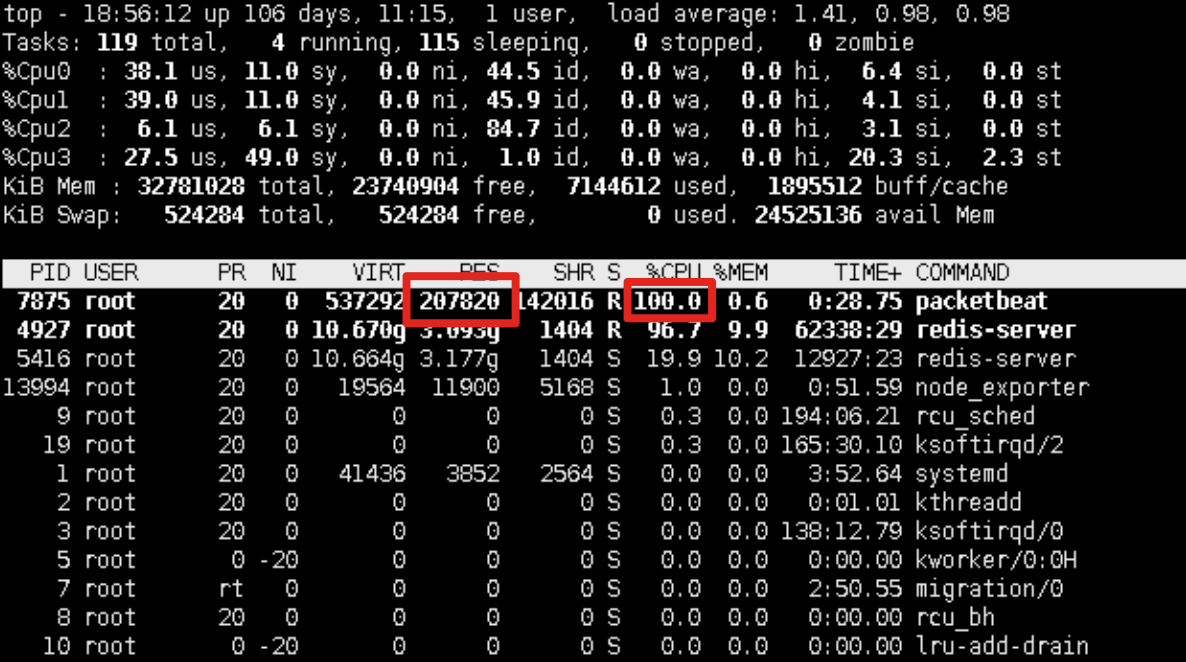

server: virtual machine

cpu: 4 logical CPUs / 8 logical CPUs

ram: 32GB

os: centos 7.2 x86_64

packetbeat: 7.1.1 (I used the binary release, not the rpm package)

redis: 4.0.8

kafka: 2.9.2-0.8.2.2

We have a Redis cluster with 8 dedicated Redis servers, each running 2 Redis instances, for a total of 16 Redis instances.

I deployed 8 Packetbeat instances on those servers to capture Redis traffic and output it to a Kafka cluster.

What is strange is that only one of the Packetbeat processes keeps using more and more memory, although it outputs content to Kafka without problems.

On the other hand, memory usage of the other 7 Packetbeat processes does not grow continuously, and they all share the same config file and binary release.

I uploaded the config file and the pprof PNG file.

Could anyone help me with this problem?

Thanks in advance.

config file:

pprof:
