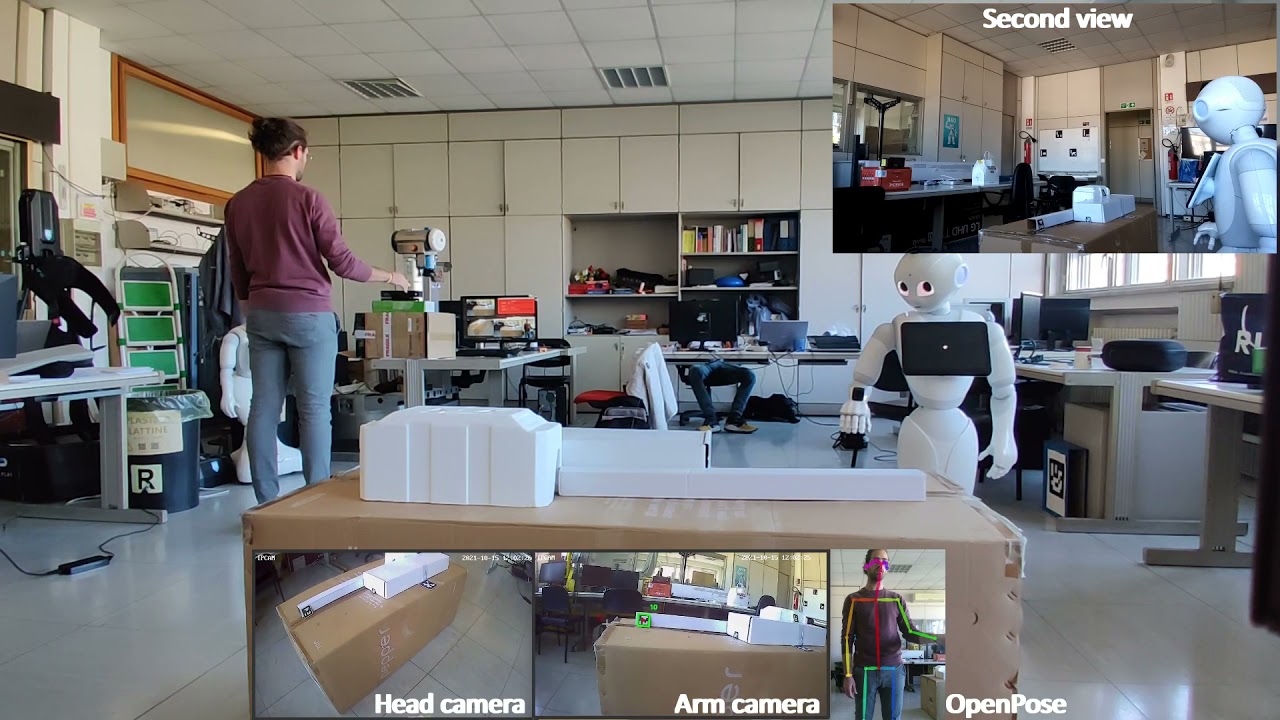

This repository contains the Python code to make the SoftBank Robotics humanoid robot Pepper approach a user and then be teleoperated by an operator. Human pose estimation is performed with OpenPose on the RGB camera stream of a Kinect v2, and the Kinect depth camera adds the third dimension to the estimated skeleton. The joint angles are calculated from the 3D keypoints and used as control inputs for the robot motors. A real-time Butterworth filter smooths the control signals.
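The two processing steps described above can be sketched in plain Python. This is not the repository's implementation: the hypothetical `joint_angle` helper computes the interior angle at a joint from three 3D keypoints (for the elbow: shoulder, elbow, wrist), and the hypothetical `ButterworthLowPass2` class is a second-order low-pass Butterworth filter updated one sample at a time, as a real-time filter must be. The filter order, cutoff and sample rate are assumptions, and mapping the interior angle to Pepper's joint conventions (for example the sign and range of `LElbowRoll`) is not shown.

```python
import math


def joint_angle(a, b, c):
    """Return the interior angle (radians) at joint b formed by 3D points a-b-c.

    For the elbow: a = shoulder, b = elbow, c = wrist.
    """
    u = [a[i] - b[i] for i in range(3)]  # vector from b to a
    v = [c[i] - b[i] for i in range(3)]  # vector from b to c
    dot = sum(u[i] * v[i] for i in range(3))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(x * x for x in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("degenerate segment: coincident keypoints")
    # Clamp to [-1, 1] so floating-point drift cannot push acos out of its domain.
    cos_angle = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.acos(cos_angle)


class ButterworthLowPass2(object):
    """Second-order low-pass Butterworth filter applied sample by sample.

    Coefficients come from the bilinear transform with frequency pre-warping.
    """

    def __init__(self, cutoff_hz, sample_rate_hz):
        k = math.tan(math.pi * cutoff_hz / sample_rate_hz)
        norm = 1.0 / (1.0 + math.sqrt(2.0) * k + k * k)
        self.b0 = k * k * norm
        self.b1 = 2.0 * self.b0
        self.b2 = self.b0
        self.a1 = 2.0 * (k * k - 1.0) * norm
        self.a2 = (1.0 - math.sqrt(2.0) * k + k * k) * norm
        self._state = None  # (x[n-1], x[n-2], y[n-1], y[n-2])

    def update(self, x):
        """Filter one new sample and return the smoothed value."""
        if self._state is None:
            # Start in steady state on the first sample to avoid a jump at startup.
            self._state = (x, x, x, x)
        x1, x2, y1, y2 = self._state
        y = (self.b0 * x + self.b1 * x1 + self.b2 * x2
             - self.a1 * y1 - self.a2 * y2)
        self._state = (x, x1, y, y1)
        return y
```

In a teleoperation loop one filter instance would be kept per joint, e.g. `elbow_filter.update(joint_angle(shoulder, elbow, wrist))` for every new frame, with a cutoff of a few hertz for keypoints arriving at roughly camera frame rate (both illustrative values). Processing one sample at a time keeps the added latency to a few frames, which is what makes the filter usable in real time.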

Here you can find a video showing the framework in action:

- OpenPose prerequisites:
    - Windows 10 (used for this implementation)
    - CMake GUI
    - Visual Studio 2019 Enterprise (version 16.8.6)
    - CUDA 11.1.1
    - cuDNN 8.0.5 (CUDA 11.1 compatible)
    - Python 3.7

- OpenPose installation

- Kinect V2

- Install the PyKinect2 Python modules:
    - `pip install comtypes==1.1.4`
    - `pip install numpy`
    - `pip install pykinect2`

- Install matplotlib:
    - `pip install matplotlib`

- Install pyzmq for inter-process communication:
    - `pip install pyzmq`

It is recommended to replace the `PyKinect2.py` and `PyKinectRuntime.py` files of the pykinect2 library inside your Python installation with the updated ones from the GitHub repository, because the version distributed through pip is not up to date.
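The OpenPose/Kinect side runs on Python 3.7, while the Pepper side needs Python 2.7 32-bit for NAOqi, which is why pyzmq is used to pass data between processes. A minimal sketch of such a link, assuming a JSON message of named 3D keypoints sent over a PUB/SUB socket pair on port 5555; the message format, endpoints and function names are hypothetical, not the repository's protocol. Only standard pyzmq calls (`socket`, `bind`, `connect`, `setsockopt_string`, `send_string`, `recv_string`) are used, and the code avoids Python 3-only syntax so the receiving half can also run on Python 2.7.

```python
import json


def encode_keypoints(keypoints):
    """Serialize {name: (x, y, z)} into a JSON string (hypothetical message format)."""
    return json.dumps({name: list(point) for name, point in keypoints.items()})


def decode_keypoints(message):
    """Inverse of encode_keypoints: JSON string -> {name: (x, y, z)}."""
    return {name: tuple(point) for name, point in json.loads(message).items()}


def publish_forever(get_keypoints, endpoint="tcp://*:5555"):
    """Publish each keypoint dict returned by get_keypoints() on a PUB socket.

    get_keypoints is expected to block until a new frame has been processed.
    """
    import zmq  # imported lazily so the helpers above work without pyzmq

    context = zmq.Context()
    socket = context.socket(zmq.PUB)
    socket.bind(endpoint)
    try:
        while True:
            socket.send_string(encode_keypoints(get_keypoints()))
    finally:
        socket.close()
        context.term()


def receive_forever(handle, endpoint="tcp://localhost:5555"):
    """Subscribe to the publisher and pass every decoded keypoint dict to handle()."""
    import zmq

    context = zmq.Context()
    socket = context.socket(zmq.SUB)
    socket.connect(endpoint)
    socket.setsockopt_string(zmq.SUBSCRIBE, "")  # empty prefix: receive all messages
    try:
        while True:
            handle(decode_keypoints(socket.recv_string()))
    finally:
        socket.close()
        context.term()
```

PUB/SUB silently drops messages when no subscriber is connected, which suits a live pose stream where only the most recent skeleton matters.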

- Pepper teleoperation
    - Install Python 2.7 32-bit (recommended: miniconda2)
    - Install numpy on Python 2.7: `pip install numpy`
    - Install scipy and pyzmq on Python 2.7: `pip install scipy` and `pip install pyzmq`
    - Install matplotlib on Python 2.7: `pip install matplotlib`
    - Install the NAOqi Python SDK: extract it and copy the libraries into the `libs` folder of your Python 2.7 32-bit installation directory (inside the miniconda2 directory if you use it)

- Install

- To start the OpenPose 3D keypoints detection:
    - Connect the Kinect v2 to a USB 3.0 port
    - Run `op_depth_keypoints.py` (in the `openpose_wrap` folder): `cd ~/pepper_openpose_teleoperation/openpose_wrap`, then `python op_depth_keypoints.py`
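Adding the third dimension to a 2D keypoint can be sketched with the standard pinhole camera model. This assumes the keypoint is already expressed in depth-image pixel coordinates (OpenPose runs on the 1920x1080 color image, so a color-to-depth registration step, for instance through the Kinect SDK coordinate mapper, is needed first and is not shown) and that the depth frame is the flat 512x424 array of millimetre values returned by pykinect2's `get_last_depth_frame()`. The intrinsics `FX`, `FY`, `CX`, `CY` are placeholders rather than calibration data, and the helper names are hypothetical.

```python
DEPTH_WIDTH = 512   # Kinect v2 depth frame size in pixels
DEPTH_HEIGHT = 424

# Placeholder pinhole intrinsics of the depth camera (pixels).
# Assumed values: replace them with your sensor's calibration.
FX, FY = 365.0, 365.0
CX, CY = 256.0, 212.0


def depth_at(depth_frame, u, v, width=DEPTH_WIDTH):
    """Depth in millimetres at pixel (u, v) of a flattened, row-major depth frame."""
    return depth_frame[int(v) * width + int(u)]


def back_project(u, v, depth_mm, fx=FX, fy=FY, cx=CX, cy=CY):
    """Back-project a depth-image pixel to camera coordinates in metres.

    Returns None when the sensor reports no depth (value 0) at that pixel.
    """
    if depth_mm == 0:
        return None
    z = depth_mm / 1000.0
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return (x, y, z)
```

Usage: `back_project(u, v, depth_at(frame, u, v))` for each detected keypoint; keypoints that land on pixels without a depth reading come back as `None` and can be skipped or filled from the previous frame.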

- To visualize the live 3D human pose with matplotlib:
    - In another terminal, run `get_and_plot_3Dkeypoints.py` (in the `openpose_wrap` folder): `cd ~/pepper_openpose_teleoperation/openpose_wrap`, then `python get_and_plot_3Dkeypoints.py`
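A minimal sketch of drawing one skeleton frame with matplotlib, restricted to the upper-body keypoints relevant to arm teleoperation. It assumes OpenPose's BODY_25 keypoint indexing and a dict of 3D points per frame; the limb list and function names are illustrative, and the live-updating loop of `get_and_plot_3Dkeypoints.py` is not reproduced. `add_subplot(projection="3d")` needs matplotlib 3.2 or newer.

```python
# Upper-body limbs as pairs of OpenPose BODY_25 keypoint indices:
# 0 Nose, 1 Neck, 2 RShoulder, 3 RElbow, 4 RWrist,
# 5 LShoulder, 6 LElbow, 7 LWrist, 8 MidHip.
UPPER_BODY_PAIRS = [(0, 1), (1, 2), (2, 3), (3, 4), (1, 5), (5, 6), (6, 7), (1, 8)]


def skeleton_segments(keypoints, pairs=UPPER_BODY_PAIRS):
    """Return the 3D segments to draw, skipping limbs with a missing joint.

    keypoints maps a BODY_25 index to an (x, y, z) tuple, or to None if undetected.
    """
    segments = []
    for i, j in pairs:
        a, b = keypoints.get(i), keypoints.get(j)
        if a is not None and b is not None:
            segments.append((a, b))
    return segments


def plot_skeleton(keypoints):
    """Draw one skeleton frame in a 3D matplotlib axes."""
    import matplotlib.pyplot as plt  # lazy import: only needed for drawing

    fig = plt.figure()
    ax = fig.add_subplot(projection="3d")
    for a, b in skeleton_segments(keypoints):
        ax.plot([a[0], b[0]], [a[1], b[1]], [a[2], b[2]], marker="o")
    ax.set_xlabel("x")
    ax.set_ylabel("y")
    ax.set_zlabel("z")
    plt.show()
```

For a live view, the axes would be cleared and redrawn for every new frame, with `plt.pause(0.001)` giving the GUI time to refresh.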

- In another terminal run

- Pepper real-time teleoperation after finding and approaching the user, using GUI and voice controls:
    - Open another terminal using Python 2.7 32-bit (see miniconda2)
    - Run `pepper_gui.py` (in the `pepper_teleoperation` folder)
    - Insert the IP address of your Pepper robot
    - Connect to the robot by pressing the button or saying 'Connect'
    - Use the GUI buttons to approach the user or to teleoperate the robot
    - You can also press the buttons by voice (say what is written on the button)
    - After pressing 'Start talking', the robot repeats everything you say, unless it recognizes one of the following commands:

- 'move forward'

- 'move backwards'

- 'move right'

- 'move left'

- 'rotate left'

- 'rotate right'

- 'stop talking'

- 'start moving'

- 'stop moving'

- 'watch right'

- 'watch left'

- 'watch up'

- 'watch down'

- 'watch ahead'

- 'track arm'

- 'track user'
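The voice-command behaviour above can be pictured as a lookup from recognized phrases to robot actions. The sketch below is hypothetical: the velocity and head-angle values, the function name and the choice of NAOqi calls (`ALMotion.moveToward` for the base, `ALMotion.setAngles` for the head) are assumptions, not taken from `pepper_gui.py`, and commands whose behaviour is not described here ('stop talking', 'start moving', 'stop moving', 'track arm', 'track user') are left out. On the Python 2.7 side, `motion` would be `ALProxy("ALMotion", robot_ip, 9559)` from the `naoqi` module.

```python
# Hypothetical mapping from spoken commands to normalized base velocities
# (x forward, y to the left, theta counterclockwise), as used by ALMotion.moveToward.
BASE_COMMANDS = {
    "move forward": (0.5, 0.0, 0.0),
    "move backwards": (-0.5, 0.0, 0.0),
    "move left": (0.0, 0.5, 0.0),
    "move right": (0.0, -0.5, 0.0),
    "rotate left": (0.0, 0.0, 0.5),
    "rotate right": (0.0, 0.0, -0.5),
}

# Hypothetical head targets in radians: (HeadYaw, HeadPitch).
# Positive yaw turns the head to the left, positive pitch tilts it down.
HEAD_COMMANDS = {
    "watch ahead": (0.0, 0.0),
    "watch left": (0.8, 0.0),
    "watch right": (-0.8, 0.0),
    "watch up": (0.0, -0.4),
    "watch down": (0.0, 0.4),
}


def dispatch(phrase, motion):
    """Run the motion associated with a recognized phrase.

    motion is expected to behave like a NAOqi ALMotion proxy. Returns True if the
    phrase was a command, False if the robot should repeat it instead.
    """
    command = phrase.strip().lower()
    if command in BASE_COMMANDS:
        x, y, theta = BASE_COMMANDS[command]
        motion.moveToward(x, y, theta)
        return True
    if command in HEAD_COMMANDS:
        yaw, pitch = HEAD_COMMANDS[command]
        # Non-blocking call; 0.2 is the fraction of maximum joint speed.
        motion.setAngles(["HeadYaw", "HeadPitch"], [yaw, pitch], 0.2)
        return True
    return False
```

Note that `moveToward` keeps the base moving until a new velocity or `stopMove()` is sent, so a real implementation needs an explicit stop condition.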

- Open another terminal using

- Run `plot_angles.py` (in the `pepper_teleoperation` folder): `cd ~/pepper_openpose_teleoperation/pepper_teleoperation`, then `python plot_angles.py --path <name of the folder where the angles are stored inside the angles_data folder>`

Pepper's cameras have a low resolution, and streaming video while teleoperating its motors is demanding for its hardware. For this reason, we decided to install external battery-powered wireless IP cameras to get a live video stream from Pepper while teleoperating it from another room. Another IP camera will be installed on Pepper's arm to show a more specific part of the scene.

- Download ONVIF Device Manager

- Get the RTSP stream URL of your IP camera (rtsp://...), if the camera is connected to the same Wi-Fi network as your PC

- Download PMPlayer

- Click on 'Open URL'

- Insert video stream URL and play
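As an alternative to a desktop player, the same RTSP stream can be opened from Python with OpenCV (`pip install opencv-python`, which is not part of the original setup). A minimal sketch, assuming the camera accepts credentials embedded in the URL; the host, path and credentials are placeholders, the stream path is camera-specific (use the one reported by ONVIF Device Manager), and both function names are hypothetical.

```python
from urllib.parse import quote


def rtsp_url(host, path, user=None, password=None, port=554):
    """Build an RTSP URL, percent-encoding the credentials (hypothetical helper)."""
    auth = ""
    if user is not None:
        auth = quote(user, safe="")
        if password is not None:
            auth += ":" + quote(password, safe="")
        auth += "@"
    return "rtsp://{}{}:{}/{}".format(auth, host, port, path.lstrip("/"))


def show_stream(url):
    """Display an RTSP stream with OpenCV until 'q' is pressed."""
    import cv2  # lazy import: requires opencv-python

    capture = cv2.VideoCapture(url)
    if not capture.isOpened():
        raise RuntimeError("cannot open stream: " + url)
    try:
        while True:
            ok, frame = capture.read()
            if not ok:
                break  # stream ended or a frame could not be decoded
            cv2.imshow("IP camera", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        capture.release()
        cv2.destroyAllWindows()
```

Percent-encoding matters when a camera password contains characters such as `@` or `:`, which would otherwise be parsed as part of the host; port 554 is the RTSP default.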