This repository contains the training scripts used in Pérez-García et al., *Transfer Learning of Deep Spatiotemporal Networks to Model Arbitrarily Long Videos of Seizures*, 24th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), 2021.

The dataset (extracted features) is publicly available at the UCL Research Data Repository.

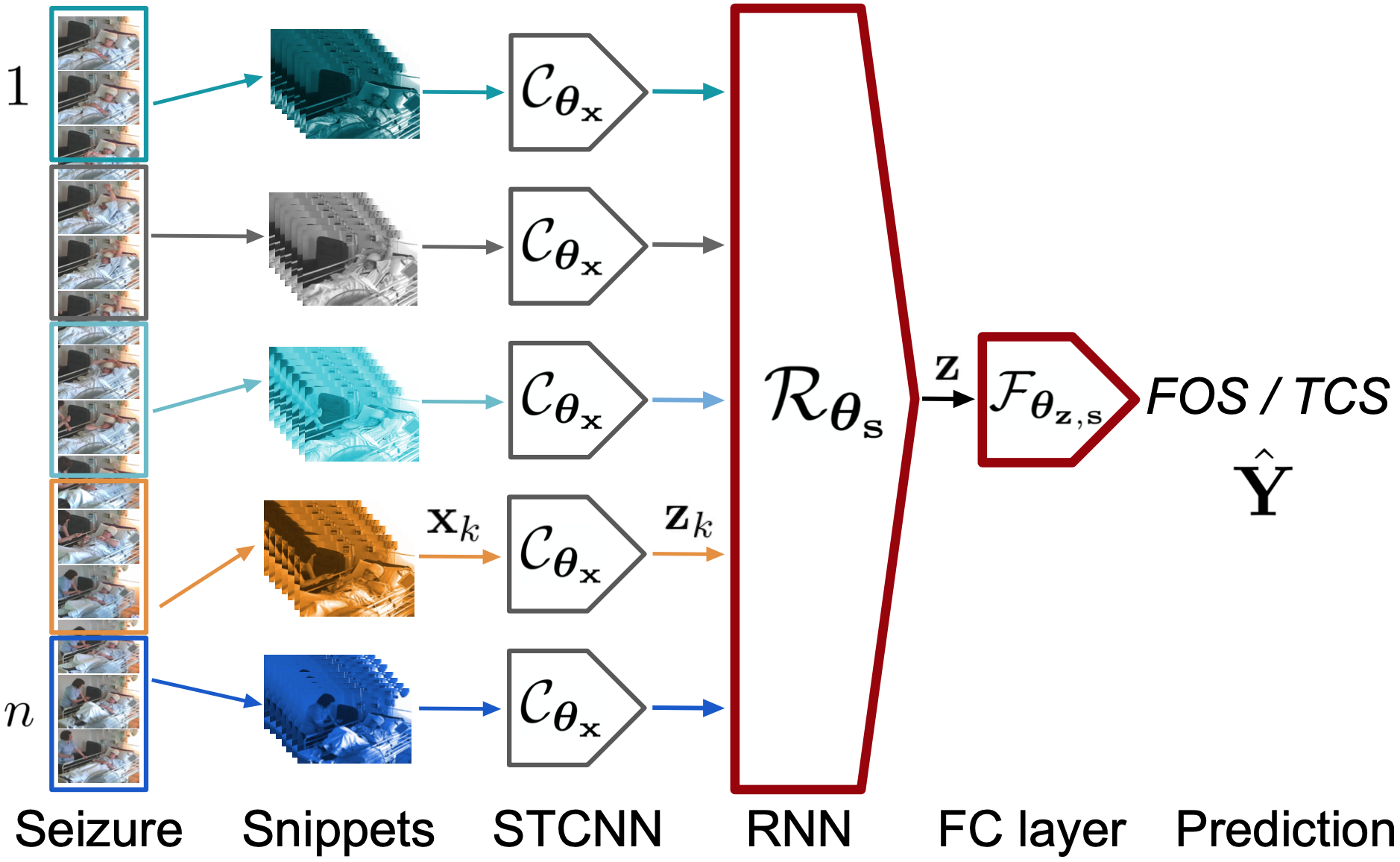

GESTURES stands for generalized epileptic seizure classification from video-telemetry using recurrent convolutional neural networks.

If you use this code or the dataset for your research, please cite the paper and the dataset appropriately.

Using conda is recommended:

```shell
conda create -n miccai-gestures python=3.7 ipython -y && conda activate miccai-gestures
```

Using light-the-torch is recommended to automatically install the best version of PyTorch for your system:

```shell
pip install light-the-torch
ltt install torch==1.7.0 torchvision==0.8.1
```

Then, clone this repository and install the rest of the requirements:

```shell
git clone https://github.com/fepegar/gestures-miccai-2021.git
cd gestures-miccai-2021
pip install -r requirements.txt
```

Finally, download the dataset:

```shell
curl -L -o dataset.zip https://ndownloader.figshare.com/files/28668096
unzip dataset.zip -d dataset
```

To train a model, run, for example:

```shell
GAMMA=4    # gamma parameter of the Beta distribution used for jittering
AGG=blstm  # aggregation mode: "mean", "lstm" or "blstm"
N=16       # number of segments
K=0        # fold for k-fold cross-validation

python train_features_lstm.py \
  --print-config \
  with \
  experiment_name=lstm_feats_jitter_${GAMMA}_agg_${AGG}_segs_${N} \
  jitter_mode=${GAMMA} \
  aggregation=${AGG} \
  num_segments=${N} \
  fold=${K}
```
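To train one model per cross-validation fold, the command above can be wrapped in a loop. The script below is a hypothetical helper, not part of the repository. It assumes 10 folds by default (`NUM_FOLDS` is an assumption; check how many folds the dataset split actually defines) and, by default, only prints the commands (`DRY_RUN=1`). Set `DRY_RUN=0` to actually run them.

```shell
#!/bin/sh
# Hypothetical helper: print (or run) the training command once per fold.
# NUM_FOLDS is an assumed default; verify the number of folds in the dataset split.

GAMMA=4                     # gamma parameter of the Beta distribution used for jittering
AGG=blstm                   # aggregation mode: "mean", "lstm" or "blstm"
N=16                        # number of segments
NUM_FOLDS=${NUM_FOLDS:-10}  # hypothetical number of folds
DRY_RUN=${DRY_RUN:-1}       # 1: only print the commands; 0: run them

# Same naming scheme as the command above (the fold is not part of the name)
experiment_name() {
  echo "lstm_feats_jitter_${1}_agg_${2}_segs_${3}"
}

K=0
while [ "$K" -lt "$NUM_FOLDS" ]; do
  # Store the command in the positional parameters so it can be printed or run
  set -- python train_features_lstm.py --print-config with \
    "experiment_name=$(experiment_name "$GAMMA" "$AGG" "$N")" \
    "jitter_mode=${GAMMA}" \
    "aggregation=${AGG}" \
    "num_segments=${N}" \
    "fold=${K}"
  if [ "$DRY_RUN" = 1 ]; then
    echo "$*"
  else
    "$@" || exit 1  # stop at the first failing fold
  fi
  K=$((K + 1))
done
```

For example, saving it as `run_folds.sh` (a hypothetical file name) and running `NUM_FOLDS=5 DRY_RUN=0 sh run_folds.sh` would train five folds sequentially. The same loop can be nested inside another one over `AGG` values to compare the aggregation modes.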