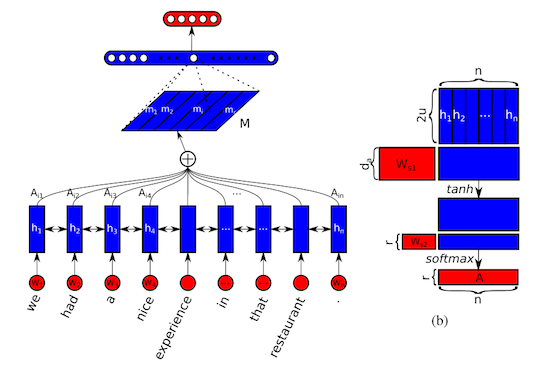

TensorFlow implementation of A Structured Self-Attentive Sentence Embedding

You can read more about the concept in this paper

Frobenius norm with attention
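As a rough illustration of the penalization term described in the paper, the squared Frobenius norm of `A Aᵀ - I` for an attention matrix `A` with one row per attention hop, here is a minimal NumPy sketch. It is not this repository's TensorFlow code; the function name `attention_penalty` and the toy matrices are hypothetical and exist only for this example.

```python
import numpy as np


def attention_penalty(A):
    """Return ||A A^T - I||_F^2 for an attention matrix A of shape (hops, tokens)."""
    A = np.asarray(A, dtype=float)
    hops = A.shape[0]
    # Off-diagonal entries of A A^T measure overlap between hops;
    # diagonal entries are pushed towards 1, which favours focused rows.
    residual = A @ A.T - np.eye(hops)
    return float(np.sum(residual ** 2))


# Toy example (made-up values): two hops over three tokens.
focused = np.array([[1.0, 0.0, 0.0],
                    [0.0, 1.0, 0.0]])    # hops attend to different tokens
redundant = np.array([[1.0, 0.0, 0.0],
                      [1.0, 0.0, 0.0]])  # both hops attend to the same token

print(attention_penalty(focused))    # 0.0
print(attention_penalty(redundant))  # 2.0
```

The penalty is zero when the hops attend to disjoint tokens and grows when they overlap, which is why adding it to the loss encourages the hops to cover different parts of the sentence.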

Download the AG News dataset and place it as shown below

    $ tree ./data
    ./data
    └── ag_news_csv
        ├── classes.txt
        ├── readme.txt
        ├── test.csv
        ├── train.csv
        └── train_mini.csv

Then run training

$ python train.py
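For orientation, the sketch below shows one way the CSV files could be read. It assumes the usual AG News layout of three quoted columns per row (class index from 1 to 4, title, description); check `readme.txt` in the dataset folder to confirm. The helper name `read_ag_news` and the sample row are hypothetical, and this is not necessarily how `train.py` loads the data.

```python
import csv
import io


def read_ag_news(fileobj):
    """Yield (label, text) pairs, shifting class indices 1..4 to labels 0..3."""
    for class_index, title, description in csv.reader(fileobj):
        # Title and description are joined into one text field (an assumption).
        yield int(class_index) - 1, f"{title} {description}"


# Made-up row in the assumed format, used instead of the real file.
sample = io.StringIO('"3","Example title","Example description."\n')
rows = list(read_ag_news(sample))
print(rows)  # [(2, 'Example title Example description.')]
```

To read the real data, pass an open file instead of the sample, for example `open("./data/ag_news_csv/train.csv", newline="", encoding="utf-8")`.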

Accuracy 0.895

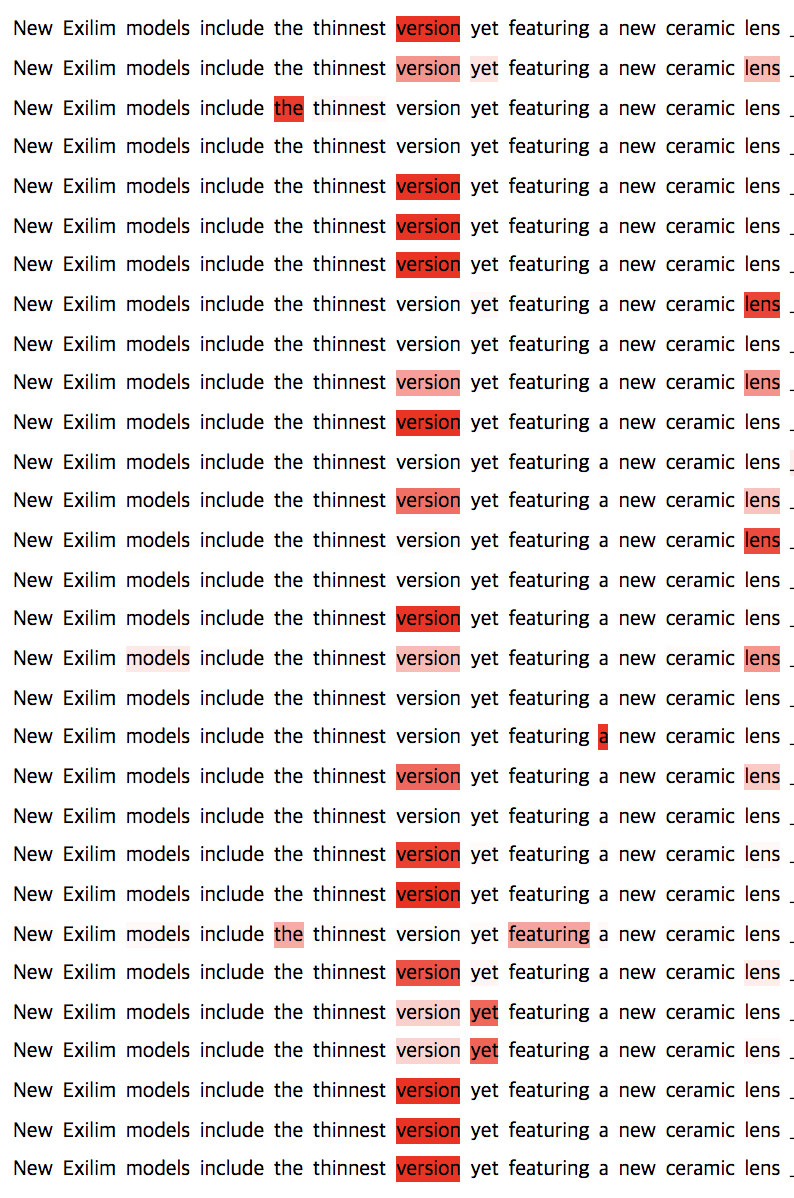

Visualization without penalization

Visualization with penalization

- Support multiple datasets

This implementation does not use pretrained GloVe or word2vec embeddings.