
Design

This document gives a high-level overview of the GlusterD-2.0 design. The design is still being refined, and this document will be updated accordingly.

Gluster has come a long way as a POSIX-compliant distributed file system for small to medium-sized clusters (tens to hundreds of nodes). Gluster.Next is a collection of improvements to push Gluster's capabilities to cloud scale (read: thousands of nodes).

GlusterD-2.0, the next version of native Gluster management software, aims to offer devops-friendly interfaces to build, configure and deploy a 'thousand-node' Gluster cloud.

The current GlusterD has limitations in the following categories:

Nonlinear node scalability: GlusterD replicates its internal configuration data and local state across all nodes. This requires an O(n^2) heartbeat/membership protocol, which doesn't scale to clusters with thousands of nodes.

Code maintainability & feature integration: Adding management support for a new feature takes non-trivial effort. Every new feature has to hook into the GlusterD codebase, so the codebase keeps growing rapidly and becomes difficult to maintain.

Lack of native ReST APIs: GlusterD has no native ReST API support, and existing external implementations are wrappers around the Gluster CLI.
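To make the scaling problem concrete, the sketch below counts heartbeat messages per interval. It assumes every node sends exactly one heartbeat to every other node. The real protocol's message types and intervals are not described here.

```go
package main

import "fmt"

// fullMeshHeartbeats returns how many heartbeat messages are sent in one
// interval if each of n nodes sends one heartbeat to every other node.
func fullMeshHeartbeats(n int) int {
	if n < 2 {
		return 0
	}
	return n * (n - 1)
}

func main() {
	for _, n := range []int{10, 100, 1000} {
		fmt.Printf("%4d nodes: %7d heartbeats per interval\n", n, fullMeshHeartbeats(n))
	}
}
```

Going from 100 to 1000 nodes (10x) raises the count from 9,900 to 999,000 (roughly 100x). That quadratic growth is what a full-mesh membership protocol runs into.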

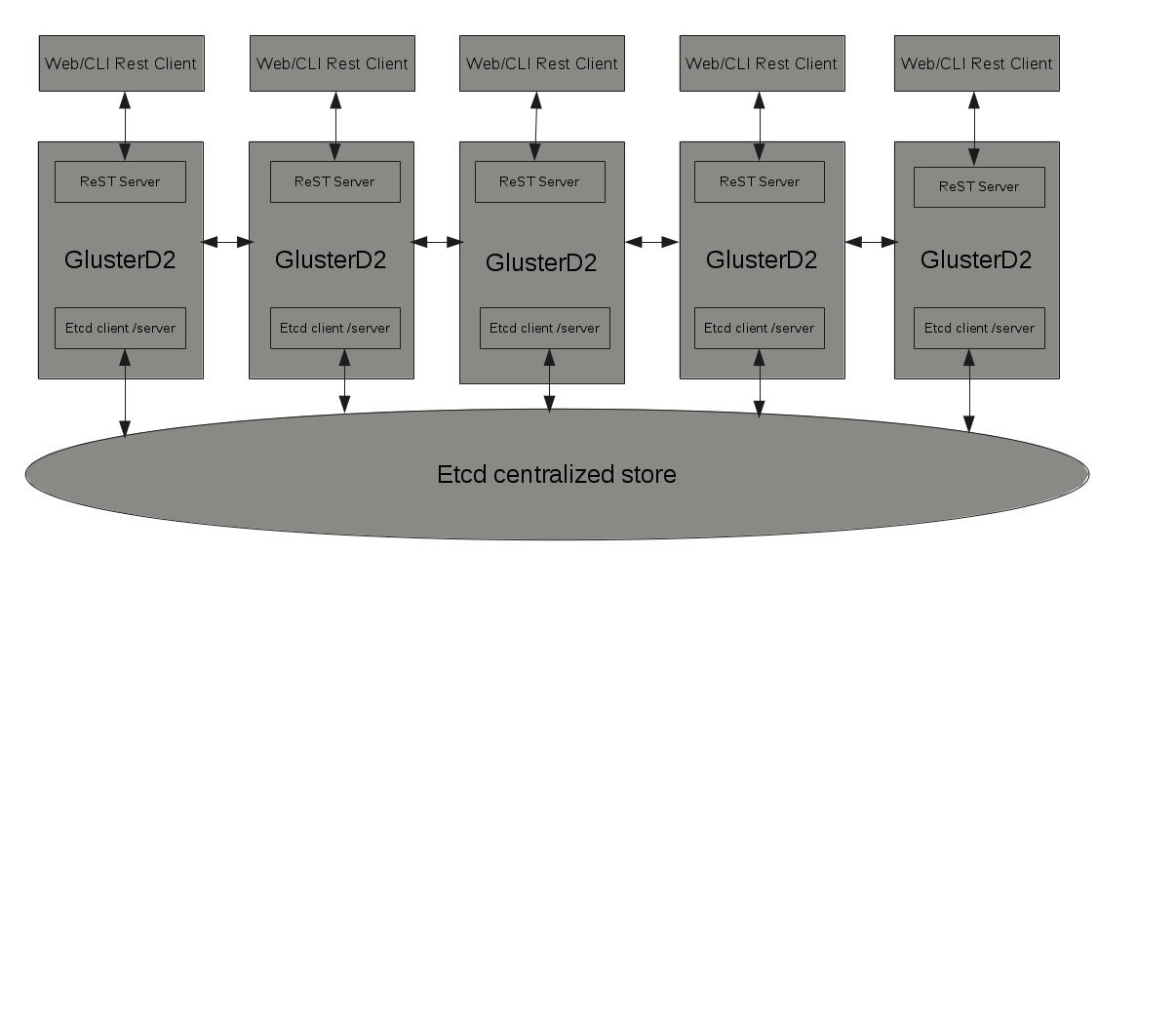

GD2 will maintain the cluster configuration data, which includes peer and volume information, in a central store instead of on each peer. The central store is backed by etcd. etcd servers will run on only a subset of the nodes in the cluster, and only these nodes take part in the Raft consensus. The other nodes of the cluster will be etcd proxies or clients.
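Raft only needs a majority of its voting members to be available. That is why a small subset of etcd servers can serve a large cluster. The arithmetic below is standard Raft majority math. How many etcd servers GD2 actually runs, and how it picks them, is not specified here.

```go
package main

import "fmt"

// quorum returns how many etcd servers must agree for Raft to make
// progress: a strict majority of the voting members.
func quorum(servers int) int {
	return servers/2 + 1
}

// faultTolerance returns how many etcd servers can fail while a quorum
// is still available.
func faultTolerance(servers int) int {
	return servers - quorum(servers)
}

func main() {
	for _, n := range []int{1, 3, 4, 5, 7} {
		fmt.Printf("%d servers: quorum %d, tolerates %d failure(s)\n",
			n, quorum(n), faultTolerance(n))
	}
}
```

Note that 4 servers tolerate no more failures than 3. This is why Raft clusters are usually sized with an odd number of voting members.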

GD2 will also be a native ReST server, exposing cluster management interfaces via an HTTP ReST API. The CLI will be rewritten as a ReST client that uses this API.

The main management interface in GD2 will be an HTTP ReST interface. APIs will be provided for managing peers, volumes and the local GlusterD, for monitoring (events), and for long-running asynchronous operations.

More details on the ReST-API can be found at ReST-API (note that this is still under active development).

The CLI will be a ReST client application that talks to GD2 over its HTTP ReST interface. It will support GlusterFS 3.x semantics, with changes where appropriate to fix some known issues.

The central store is the most important part of GD2. It gives GD2 a centralized location to save cluster data and makes that data accessible from the whole cluster. The central store helps avoid the complex and costly transactions in use now. We chose to use an external, distributed, replicated key-value store as the central store instead of implementing one in GD2, and etcd was our choice.
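The sketch below illustrates keeping cluster data in a key-value store. It uses a minimal `Store` interface, with an in-memory map standing in for etcd so the example runs without a server. The key layout (`peers/<id>`, `volumes/<name>`) and the JSON values are hypothetical, not GD2's actual schema. `List` imitates a prefix read, which etcd supports.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
	"sync"
)

// Store is a minimal key-value interface. In GD2 the backing store is
// etcd; here an in-memory map stands in for it.
type Store interface {
	Put(key, value string)
	Get(key string) (string, bool)
	List(prefix string) []string
}

type memStore struct {
	mu   sync.RWMutex
	data map[string]string
}

func newMemStore() *memStore { return &memStore{data: make(map[string]string)} }

func (s *memStore) Put(key, value string) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.data[key] = value
}

func (s *memStore) Get(key string) (string, bool) {
	s.mu.RLock()
	defer s.mu.RUnlock()
	v, ok := s.data[key]
	return v, ok
}

// List returns the sorted keys that start with prefix, similar in spirit
// to a prefix range read in etcd.
func (s *memStore) List(prefix string) []string {
	s.mu.RLock()
	defer s.mu.RUnlock()
	var keys []string
	for k := range s.data {
		if strings.HasPrefix(k, prefix) {
			keys = append(keys, k)
		}
	}
	sort.Strings(keys)
	return keys
}

func main() {
	var st Store = newMemStore()
	// Hypothetical layout: one key per peer and one key per volume.
	st.Put("peers/node1", `{"address":"10.0.0.1"}`)
	st.Put("volumes/gv0", `{"type":"replicate","replica":3}`)
	st.Put("volumes/gv1", `{"type":"distribute"}`)
	fmt.Println(st.List("volumes/"))
}
```

Because every node reads the same keys from one store, no node has to push its copy of the configuration to every other node.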

The transaction framework ensures that a given set of actions will be performed in order, one after another, on the required peers of the cluster.

The new transaction framework is built around the central store. It has two major differences from the existing framework:

- actions will only be performed where required, instead of being done across the whole cluster

- final committing of the results into the store will only be done by the node where the transaction was initiated

These two changes will help keep the transaction framework simple and fast.

More details can be found at Transaction-framework.

GD2 instances will talk to each other using the gRPC protocol.

GlusterFS client processes and brick processes will communicate with GD2 using SunRPC. This means the parts of Gluster written in C need no code changes, and GD2 can be a drop-in replacement for glusterd1.

To ease integration of GlusterFS features into GD2 and to reduce the maintenance effort, GD2 will provide a pluggable interface. This interface will allow new features to integrate with GD2 without their developers having to modify core GD2. It will mainly target filesystem features that don't require a large amount of change to management code. The interface will aim to provide the ability to:

- insert xlators into a volume graph

- set options on xlators

- define and create custom volume graphs

- define and manage daemons

- create CLI commands

- hook into existing CLI commands

- query cluster and volume information

- associate information with objects (peers, volumes)

The above should satisfy most of the common requirements for GD2 plugins.
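To picture how such a pluggable interface keeps feature code out of the core, the sketch below covers two of the listed abilities: inserting xlators (with options) into a volume graph, and adding CLI commands. The `Plugin` interface, `registry`, and `examplePlugin` are all hypothetical, as are the xlator type `features/example` and its option. The real GD2 plugin API may look quite different.

```go
package main

import "fmt"

// Xlator is a hypothetical description of a translator to insert into a
// volume graph, together with the options to set on it.
type Xlator struct {
	Name    string
	Type    string
	Options map[string]string
}

// Plugin is a hypothetical interface covering two of the listed hooks.
type Plugin interface {
	Name() string
	// Xlators returns translators to insert into the given volume's graph.
	Xlators(volume string) []Xlator
	// Commands returns the CLI commands the plugin adds.
	Commands() []string
}

// registry holds plugins; the core iterates over it without knowing any
// feature-specific details.
type registry struct{ plugins []Plugin }

func (r *registry) Register(p Plugin) { r.plugins = append(r.plugins, p) }

// GraphFor collects every plugin's xlators for a volume.
func (r *registry) GraphFor(volume string) []Xlator {
	var out []Xlator
	for _, p := range r.plugins {
		out = append(out, p.Xlators(volume)...)
	}
	return out
}

// AllCommands collects every plugin's CLI commands.
func (r *registry) AllCommands() []string {
	var out []string
	for _, p := range r.plugins {
		out = append(out, p.Commands()...)
	}
	return out
}

// examplePlugin is a made-up plugin used only for illustration.
type examplePlugin struct{}

var _ Plugin = examplePlugin{}

func (examplePlugin) Name() string { return "example" }

func (examplePlugin) Xlators(volume string) []Xlator {
	return []Xlator{{
		Name:    volume + "-example",
		Type:    "features/example",
		Options: map[string]string{"example-option": "on"},
	}}
}

func (examplePlugin) Commands() []string { return []string{"volume example enable"} }

func main() {
	r := &registry{}
	r.Register(examplePlugin{})
	for _, x := range r.GraphFor("gv0") {
		fmt.Println(x.Name, x.Type, x.Options)
	}
	fmt.Println(r.AllCommands())
}
```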

GD2 will use structured logging to help improve log readability and machine parse-ability. Structured logging uses fixed strings with some attached metadata, generally in the form of key-value pairs, instead of variable log strings. Structured logging also allows us to create log contexts, which can be used to attach specific metadata to all logs in the context. Using log contexts and transaction-ids/request-ids allows us to easily identify and group logs related to a specific transaction or request.

GD2 currently uses logrus, a structured logger for Go.

The operating version (op-version) is used to prevent problems when running hybrid clusters, where different nodes run different versions of Gluster. GD2 will set clear guidelines on how op-versions are supposed to be used and provide suggested patterns for using them effectively.
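One common pattern is sketched below; it is not GD2's confirmed rule set. The cluster runs at the highest op-version that every peer supports, and a feature is enabled only once that version reaches the feature's minimum. The op-version numbers used here are hypothetical.

```go
package main

import "fmt"

// clusterOpVersion returns the highest op-version every peer supports,
// i.e. the minimum of the peers' maximum supported op-versions.
func clusterOpVersion(peerMax []int) int {
	if len(peerMax) == 0 {
		return 0
	}
	v := peerMax[0]
	for _, m := range peerMax[1:] {
		if m < v {
			v = m
		}
	}
	return v
}

// featureEnabled reports whether a feature introduced at minOpVersion may
// be used at the given cluster op-version.
func featureEnabled(clusterVersion, minOpVersion int) bool {
	return clusterVersion >= minOpVersion
}

func main() {
	// Hypothetical hybrid cluster: one node still runs an older release.
	peers := []int{40000, 40000, 31200}
	cv := clusterOpVersion(peers)
	fmt.Println("cluster op-version:", cv)
	fmt.Println("feature needing 40000 enabled:", featureEnabled(cv, 40000))
}
```

Gating on the minimum means a newer node never sends an older node a request it cannot understand. The feature becomes available once the last node is upgraded.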

More focus will be given to improving the documentation for both users and developers. GD2 code will follow Go documentation practices and include proper inline documentation, which can easily be extracted and hosted using the godoc tool.
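As a small illustration of Go documentation practice, each exported identifier gets a comment that starts with its name and sits directly above its declaration. The godoc tool (and `go doc`) extracts those comments. The constant and function below are hypothetical examples, not part of GD2.

```go
package main

import "fmt"

// DefaultReplicaCount is the replica count used when none is given.
// (Hypothetical constant, for illustration only.)
const DefaultReplicaCount = 3

// ReplicaSets returns how many replica sets a volume with the given number
// of bricks and replica count contains. It returns an error if bricks is
// not a positive multiple of replica.
func ReplicaSets(bricks, replica int) (int, error) {
	if replica <= 0 || bricks <= 0 || bricks%replica != 0 {
		return 0, fmt.Errorf("bricks (%d) must be a positive multiple of replica (%d)", bricks, replica)
	}
	return bricks / replica, nil
}

func main() {
	n, err := ReplicaSets(6, DefaultReplicaCount)
	fmt.Println(n, err)
}
```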

As GD2 moves to a new store format and a new transaction mechanism, rolling upgrades from older versions and backward compatibility with 3.x releases will not be possible. Upgrades will involve service disruption.

Support may be provided for the migration of older configuration data to GD2, possibly in the form of helper scripts.

A detailed discussion on this can be found here.