Huicong Zhang, Haozhe Xie, Hongxun Yao

Harbin Institute of Technology, S-Lab, Nanyang Technological University

- [2024/07/04] The training and testing code is released.

- [2024/02/29] The repo is created.

We use the GoPro and DVD datasets in our experiments, which are available below:

You can download the zip files and extract them to the datasets folder.

You can download the pretrained model from here and put the weights in the model_zoos folder.
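Before running anything, it can help to confirm that both folders are in place. The following is a minimal sketch, not part of the repository: it assumes it is run from the repository root and only checks that `datasets` and `model_zoos` exist and are non-empty. The helper name `missing_dirs` is hypothetical, and the sketch makes no assumption about the file names inside either folder.

```python
from pathlib import Path

# Folder names come from the instructions above; nothing is assumed about their contents.
EXPECTED_DIRS = ("datasets", "model_zoos")


def missing_dirs(repo_root="."):
    """Return the expected folders that are absent or empty under repo_root."""
    root = Path(repo_root)
    missing = []
    for name in EXPECTED_DIRS:
        folder = root / name
        # A folder counts as missing if it does not exist or contains no entries.
        if not folder.is_dir() or not any(folder.iterdir()):
            missing.append(name)
    return missing


if __name__ == "__main__":
    problems = missing_dirs()
    if problems:
        print("Missing or empty:", ", ".join(problems))
    else:
        print("datasets and model_zoos are populated.")
```

An empty result from `missing_dirs()` only means the folders contain something; it does not validate the dataset layout or the checkpoint itself.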

git clone https://github.com/huicongzhang/BSSTNet.git

conda create -n BSSTNet python=3.8

conda activate BSSTNet

pip install torch==1.9.1+cu111 torchvision==0.10.1+cu111 torchaudio==0.9.1 -f https://download.pytorch.org/whl/torch_stable.html

pip install mmcv-full==1.7.1 -f https://download.openmmlab.com/mmcv/dist/cu111/torch1.9/index.html

pip install -r requirements.txt

BASICSR_EXT=True python setup.py develop
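After installation, a quick check that the pinned packages are importable can save a failed launch later. The sketch below is an illustration rather than a script shipped with the repository: the helper names `installed_versions` and `report` are hypothetical, and it assumes that `mmcv-full` is imported as `mmcv` and that local build tags such as `+cu111` can be ignored when comparing versions.

```python
import importlib

# Versions pinned by the install commands above (build tags such as "+cu111" are stripped).
PINNED = {"torch": "1.9.1", "torchvision": "0.10.1", "mmcv": "1.7.1"}


def installed_versions(names=PINNED):
    """Map each package name to its base version string, or None if it cannot be imported."""
    found = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            found[name] = None
            continue
        version = getattr(module, "__version__", None)
        found[name] = version.split("+")[0] if version else None
    return found


def report(found, pinned=PINNED):
    """Build one human-readable status line per pinned package."""
    lines = []
    for name, want in pinned.items():
        got = found.get(name)
        status = "ok" if got == want else "mismatch" if got else "not installed"
        lines.append(f"{name}: expected {want}, found {got} ({status})")
    return lines


if __name__ == "__main__":
    for line in report(installed_versions()):
        print(line)
```

Run it inside the activated `BSSTNet` conda environment. A mismatch is not necessarily fatal, but the commands above pin these versions, so they are the safest combination to start from.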

To test BSSTNet, you can simply use the following commands:

GoPro dataset

scripts/dist_test.sh 2 options/test/BSST/gopro_BSST.yml

DVD dataset

scripts/dist_test.sh 2 options/test/BSST/dvd_BSST.yml

To train BSSTNet, you can simply use the following commands:

GoPro dataset

scripts/dist_train.sh 2 options/test/BSST/gopro_BSST.yml

DVD dataset

scripts/dist_train.sh 2 options/test/BSST/dvd_BSST.yml

@inproceedings{zhang2024bsstnet,

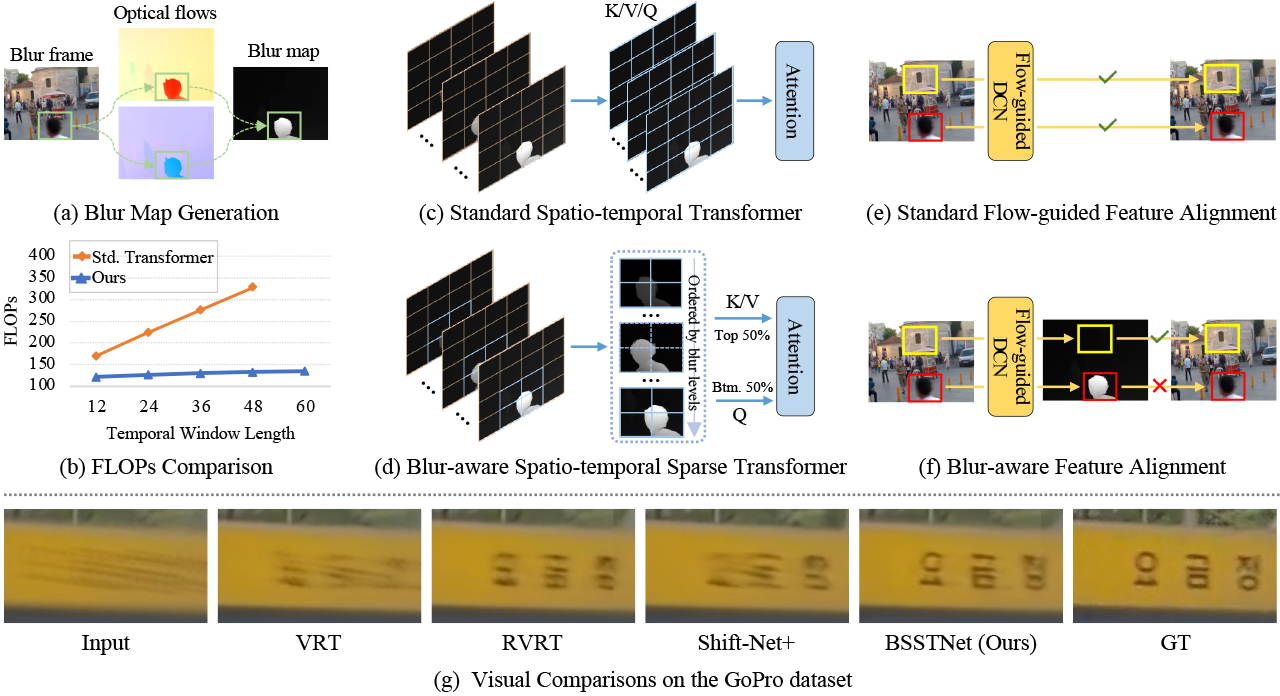

title = {Blur-aware Spatio-temporal Sparse Transformer for Video Deblurring},

author = {Zhang, Huicong and

Xie, Haozhe and

Yao, Hongxun},

booktitle = {CVPR},

year = {2024}

}

This project is open-sourced under the MIT license.

This project is based on BasicSR, ProPainter and Shift-Net.