
RPC performance regression since 22.4.0-RC2 #3959

Comments

See this gist for details on how to connect to the Palm dev network, where this can be reproduced reliably.

@usmansaleem @garyschulte - has there been any progress on this issue?

Yes, @ahamlat has identified that the regression was introduced in #3695, and it appears to be related to Netty deferring to a native transport library. Ameziane is currently out ill, but we should have a mitigation soon.

@mbaxter: I compared performance before and after #3695, and there is a real degradation. I used a benchmark created by @usmansaleem, with the same throughput for 5 minutes in both runs. Here are the results:

Before PR 3695

After PR 3695

Because Besu consumes a lot of CPU during these tests, I did some CPU profiling to find the hot spot in each run:

Before PR 3695, CPU hot spot: RocksDB.get

After PR 3695, CPU hot spot: some calls to native libraries

@diega: Do you think we can improve the performance of PR #3695? For example, I was thinking about testing Java implementations instead of native libraries.

This is a major regression - it is blocking upgrades to RPC nodes on the Palm network. Have you considered reverting the problematic PR while a fix is worked out?

It is a pretty major refactor from a couple of months back, so it might be more difficult to revert than to mitigate or fix. We will prioritize a fix one way or the other.

I took a quick look at the revert conflicts - it doesn't look too bad to me. It really depends on timing: if there's an imminent fix, it probably makes sense to wait for it. But if there are weeks of exploratory work ahead, it's probably better to just revert.

@mbaxter if you have the environment for running the test, would you try reverting just 70a01962? That commit was lost in the squash, but it's the one that enables native transport usage. If the regression is still present after reverting it, then the problem is somewhere else in the refactor.

@diega suggested testing the impact of adding false as the second argument here: https://github.com/hyperledger/besu/blob/main/ethereum/api/src/main/java/org/hyperledger/besu/ethereum/api/jsonrpc/JsonRpcHttpService.java#L333 and here: https://github.com/hyperledger/besu/blob/main/ethereum/api/src/main/java/org/hyperledger/besu/ethereum/api/jsonrpc/JsonRpcHttpService.java#L344. I am paraphrasing his suggestion, but anyone looking at the defect could try the above, @mbaxter.

I ran @usmansaleem's performance tool against the Palm Testnet at version 22.4.0-RC1 (before the regression was released) and compared that to

At 22.4.0-RC1 (before the regression):

And on

@usmansaleem - any suggestions on the Gatling tests?

@mbaxter I used https://github.com/ConsenSys-Palm/palm-node to create the Palm instance and updated the bootnodes etc. as mentioned in this ticket. I set up Besu

I ran the besu-gatling default test suite against a fully synced Palm dev node (HEAD at 18_438_825) with the following outputs:

Output for

Output for

Output for

Based on these numbers, I cannot reproduce the reported performance degradation.

I'm not able to reproduce this anymore. Here are the results:

Benchmark output

Benchmark output

Benchmark output

I had the same results with Java 11.

Description

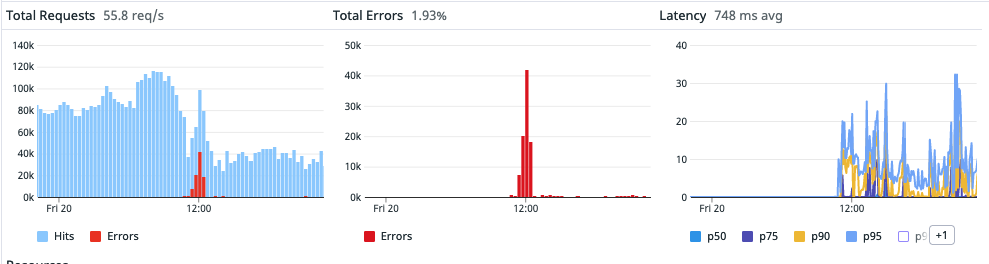

We have had a report of an RPC performance regression in Besu after upgrading from 22.1.x to the latest quarterly release versions:

After some version bisecting it appears that the performance regression first appears in 22.4.0-RC2:

As noted in the chart, versions since 22.4.0-RC2 show significantly higher RPC response latency under light RPC activity (1-2 requests per second).

Acceptance Criteria

Steps to Reproduce (Bug)

To observe the request latency spike on releases since 22.4.0-RC2:

eth_getBlockByNumber Full and eth_getLogs Full

Expected behavior: no regression in RPC performance

Actual behavior: request latency is unacceptable

Frequency: 100% reproducible with the aforementioned traffic
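For anyone trying to reproduce this without the Gatling suite, here is a minimal latency probe sketch in Python. It is not the tool used in this thread. It assumes that "Full" means requesting full transaction objects in eth_getBlockByNumber, uses a placeholder eth_getLogs filter over the latest block, and assumes the node's HTTP JSON-RPC endpoint is at http://localhost:8545; adjust all three to match the real traffic.

```python
import json
import math
import time
import urllib.request


def rpc_payload(method, params, request_id=1):
    """Build a JSON-RPC 2.0 request body as UTF-8 bytes."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
    ).encode("utf-8")


def timed_call(url, payload, timeout=30.0):
    """POST one request and return the elapsed wall-clock time in seconds."""
    req = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}
    )
    start = time.perf_counter()
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        resp.read()  # include the time to receive the full response body
    return time.perf_counter() - start


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def probe(url, rounds=60, interval=0.5):
    """Alternate the two calls, pausing `interval` seconds between requests.

    With interval=0.5 the request rate stays at or below 2 per second.
    """
    payloads = [
        # Assumption: True asks for full transaction objects ("Full").
        rpc_payload("eth_getBlockByNumber", ["latest", True]),
        # Assumption: placeholder filter; the original filter is not given.
        rpc_payload("eth_getLogs", [{"fromBlock": "latest", "toBlock": "latest"}]),
    ]
    latencies = []
    for i in range(rounds):
        latencies.append(timed_call(url, payloads[i % len(payloads)]))
        time.sleep(interval)
    return latencies


# Example (requires a running node; the URL is an assumption):
# samples = probe("http://localhost:8545")
# print(percentile(samples, 50), percentile(samples, 95), max(samples))
```

Running the same probe against a build before and after 22.4.0-RC2 and comparing the p50 and p95 values should show whether the latency gap is present in a given environment.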

Versions (Add all that apply)

cat /etc/*release

uname -a

vmware -v

docker version

Smart contract information (If you're reporting an issue arising from deploying or calling a smart contract, please supply related information)

solc --version

Additional Information (Add any of the following or anything else that may be relevant)

cloud k8s instance pod config:
