Nano Bots: AI-powered bots that can be easily shared as a single file, designed to support multiple providers such as Cohere Command, Google Gemini, Maritaca AI MariTalk, Mistral AI, Ollama, OpenAI ChatGPT, and others, with support for calling tools (functions).

Enhance your productivity and workflow by bringing the power of Artificial Intelligence to your writing app!

- Installation
- Commands
- Cartridges
- Shortcuts
- Privacy and Security: Frequently Asked Questions
  - Will my files/code/content be shared or uploaded to third-party services?
  - What information may be shared with third-party AI providers?
  - Who are these third parties?
  - Is there an option to avoid sharing any information?
  - Can I use this for private or confidential content/code?
  - Do I need to pay to use this?
  - Is this project affiliated with OpenAI?
- Warranty and Disclaimer
- Development


Create a folder obsidian-nano-bots in your .obsidian/plugins/ directory inside your vault:

```sh
mkdir -p .obsidian/plugins/obsidian-nano-bots
```

Download the files manifest.json, main.js, and styles.css from the latest release and place them inside the obsidian-nano-bots folder.
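If the plugin does not show up later under "Community Plugins", a quick check is whether the three files landed in the right folder. The following is a minimal Node.js sketch in TypeScript, assuming the vault path is passed as the first command-line argument; `missingPluginFiles` is a hypothetical helper, not part of the plugin.

```typescript
import { existsSync } from "node:fs";
import { join } from "node:path";

// The three files the installation steps ask you to download.
const REQUIRED_FILES = ["manifest.json", "main.js", "styles.css"] as const;

// Returns the required files that are missing from the plugin folder.
// An empty array means the folder looks complete.
function missingPluginFiles(vaultPath: string): string[] {
  const pluginDir = join(vaultPath, ".obsidian", "plugins", "obsidian-nano-bots");
  return REQUIRED_FILES.filter((file) => !existsSync(join(pluginDir, file)));
}

// Usage: pass your vault path as the first argument (defaults to the current directory).
const missing = missingPluginFiles(process.argv[2] ?? ".");
if (missing.length === 0) {
  console.log("All plugin files are in place.");
} else {
  console.log(`Missing: ${missing.join(", ")}`);
}
```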

Ensure that you have "Community Plugins" enabled in your Settings and restart Obsidian.

After restarting, go to "Settings" -> "Community Plugins," find "Nano Bots," and enable it. Once enabled, you can start using it by opening your command palette and searching for "Nano Bots."

By default, access to the public Nano Bots API is available. However, it only provides a default Cartridge and may sometimes be slow or unavailable due to rate limits. This tends to happen when many users around the world are using the API heavily at the same time.

To obtain the best performance and the opportunity to develop and personalize your own Cartridges, it is recommended that you use your own provider credentials to run your instance of the API locally. This approach will provide a superior and customized experience, in contrast to the convenient yet limited experience provided by the public API.

To connect your plugin to your own local Nano Bots API, start a local instance using nano-bots-api. Please note that the local API may still rely on external providers, which have their own security and privacy policies. However, if you choose to use Ollama with open-source models, you can ensure that everything stays local and completely private.

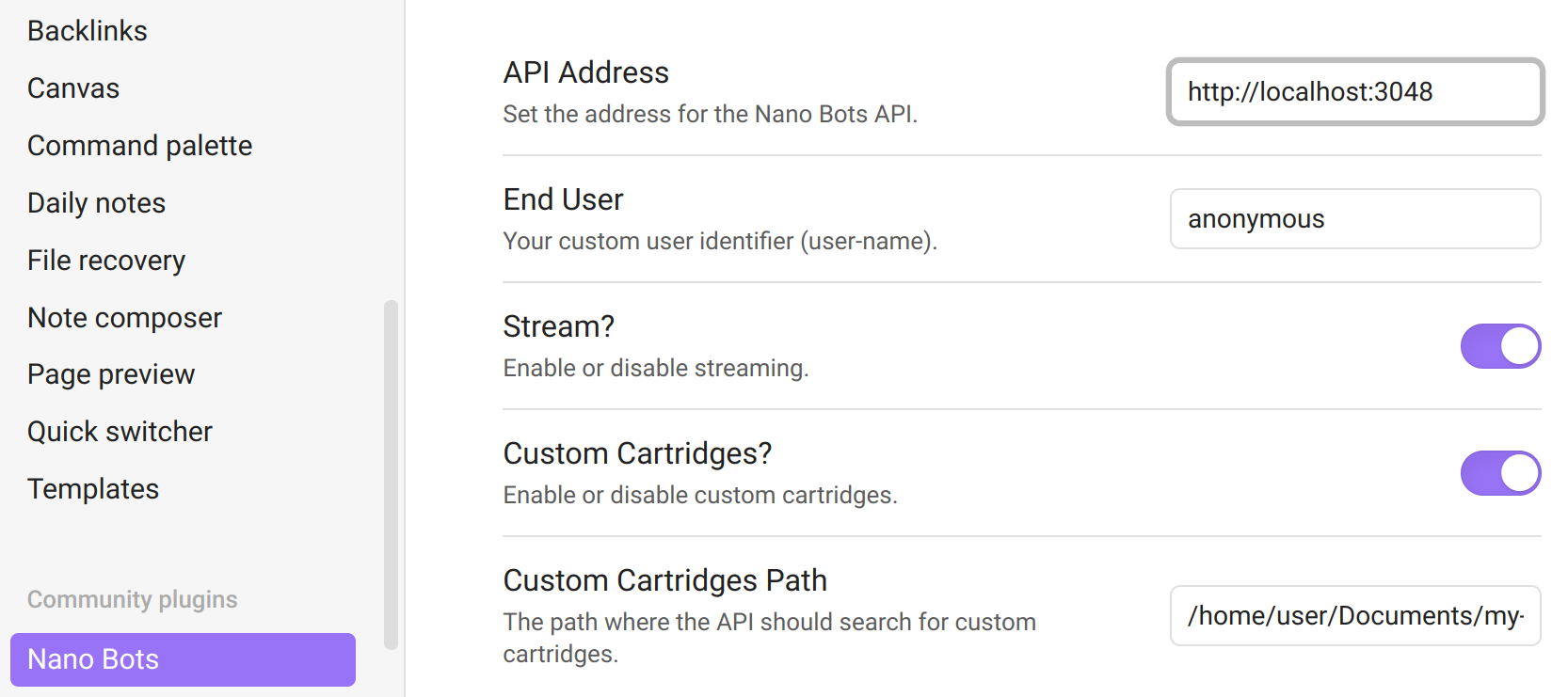

Once you have access to the Nano Bots API, go to "Settings" -> "Community Plugins" -> "Nano Bots" and add the API Address, which is usually http://localhost:3048.
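Before entering the address, it can help to confirm that your local instance is actually running. This is a minimal sketch for Node.js 18 or later (which provides the global `fetch` and `AbortSignal.timeout`); `isApiReachable` is a hypothetical helper, and it deliberately does not assume any particular Nano Bots API endpoint, only that an HTTP server answers at the given address.

```typescript
// Hypothetical helper: reports whether anything answers at the given address.
// It only checks that an HTTP server responds; it does not call any specific
// Nano Bots API endpoint.
async function isApiReachable(
  address: string = "http://localhost:3048",
  timeoutMs: number = 2000,
): Promise<boolean> {
  try {
    // Any HTTP response, even a 404, means a server is listening.
    await fetch(address, { signal: AbortSignal.timeout(timeoutMs) });
    return true;
  } catch {
    // Connection refused, invalid URL, or timeout.
    return false;
  }
}

isApiReachable().then((ok) =>
  console.log(ok ? "Something is listening at the API address" : "Nothing is listening at that address"),
);
```

A successful check only tells you that a server is up; whether it is a correctly configured Nano Bots API is still up to your setup.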

With a local API instance, you can create not only custom YAML cartridges but also Markdown cartridges using your vault. To enable this, go to "Settings" -> "Community Plugins" -> "Nano Bots" and turn on "Custom Cartridges?". By default, "Custom Cartridges Path" searches your vault for a "cartridges" or "Cartridges" folder; you can customize this path if needed. Once configured, any note created in these folders becomes a cartridge.

Examples can be found here: Markdown Cartridges Examples
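To make the default folder rule concrete, here is a small TypeScript sketch of how a vault-relative note path could be matched against the default "cartridges"/"Cartridges" folders. It assumes that any Markdown note under a folder with one of those names counts, including nested subfolders; `isCartridgeNote` is a hypothetical helper, and the plugin's real matching logic may differ.

```typescript
// Folder names searched by default, per the plugin settings described above.
const DEFAULT_CARTRIDGE_FOLDERS = ["cartridges", "Cartridges"];

// Hypothetical helper: decides whether a vault-relative note path
// (Obsidian uses "/" as the separator) would be treated as a Markdown cartridge.
// Assumption: any .md note with a "cartridges"/"Cartridges" ancestor folder counts.
function isCartridgeNote(notePath: string, folders: string[] = DEFAULT_CARTRIDGE_FOLDERS): boolean {
  if (!notePath.endsWith(".md")) return false;
  const parentFolders = notePath.split("/").slice(0, -1);
  return parentFolders.some((segment) => folders.includes(segment));
}

console.log(isCartridgeNote("cartridges/translator.md")); // true
console.log(isCartridgeNote("Work/Cartridges/reviewer.md")); // true
console.log(isCartridgeNote("notes/cartridges-ideas.md")); // false
console.log(isCartridgeNote("cartridges/diagram.png")); // false
```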

After installation, you will have the following commands available in the command palette:

The Evaluate command sends your currently selected text to a Nano Bot without any additional instructions.

Example:

Selected Text: Hi!

Nano Bot: Hello! How can I assist you today?


The Apply command works on a text selection. You select a piece of text and ask the Nano Bot to perform an action.

Example:

Selected Text: How are you doing?

Prompt: translate to french

Nano Bot: Comment allez-vous ?

The Prompt command works like a traditional chat: you type a request and receive an answer from the Nano Bot.

Example:

Prompt: write a hello world in Ruby

Nano Bot: puts "Hello, world!"
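The three commands differ mainly in which text they start from. This TypeScript sketch models that difference with the examples above. The `Command` type and `buildInput` function are hypothetical, and the way Apply joins the instruction with the selection is an assumption for illustration, not the plugin's actual request format.

```typescript
// Hypothetical model of the three commands described above. It only shows
// which pieces of text each command works with; how the plugin actually
// combines them is an assumption, not its real format.
type Command =
  | { kind: "evaluate"; selection: string }
  | { kind: "apply"; selection: string; instruction: string }
  | { kind: "prompt"; prompt: string };

function buildInput(command: Command): string {
  switch (command.kind) {
    case "evaluate":
      // The selection is sent as-is, with no additional instructions.
      return command.selection;
    case "apply":
      // The instruction is paired with the selected text it acts on.
      return `${command.instruction}:\n\n${command.selection}`;
    case "prompt":
      // A chat-style request typed by the user; no selection involved.
      return command.prompt;
  }
}

console.log(buildInput({ kind: "evaluate", selection: "Hi!" }));
console.log(buildInput({ kind: "apply", selection: "How are you doing?", instruction: "translate to french" }));
console.log(buildInput({ kind: "prompt", prompt: "write a hello world in Ruby" }));
```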

To interrupt a streaming response or stop waiting for a complete response, you can use the "Stop" command in the command palette. This is useful if you realize that the bot's answer is not what you were expecting from your request.
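The idea behind "Stop" can be illustrated with the standard `AbortController` API: whoever consumes a streaming response checks a cancellation signal between chunks and stops once it is set. This is a sketch of the general technique, with a simulated stream; how the plugin wires cancellation internally is not described here. With `fetch`, the same signal would be passed as the `signal` option so that the HTTP request itself is cancelled.

```typescript
// Consumes chunks of a streaming response until the signal is aborted.
function collectUntilAborted(chunks: Iterable<string>, signal: AbortSignal): string {
  let text = "";
  for (const chunk of chunks) {
    if (signal.aborted) break; // "Stop" was requested: drop the rest of the stream
    text += chunk;
  }
  return text;
}

// Simulated stream: "Stop" is triggered after the second chunk arrives.
const controller = new AbortController();
function* fakeStream(): Generator<string> {
  yield "Comment ";
  yield "allez";
  controller.abort();
  yield "-vous ?";
}

console.log(collectUntilAborted(fakeStream(), controller.signal)); // Comment allez
```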

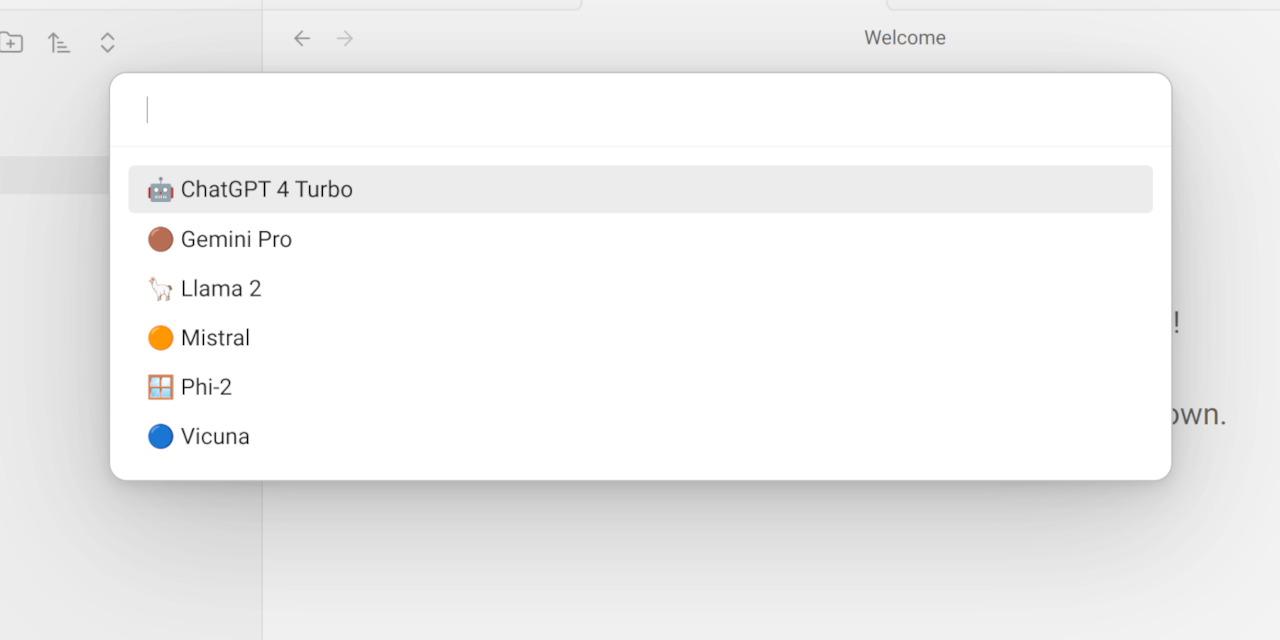

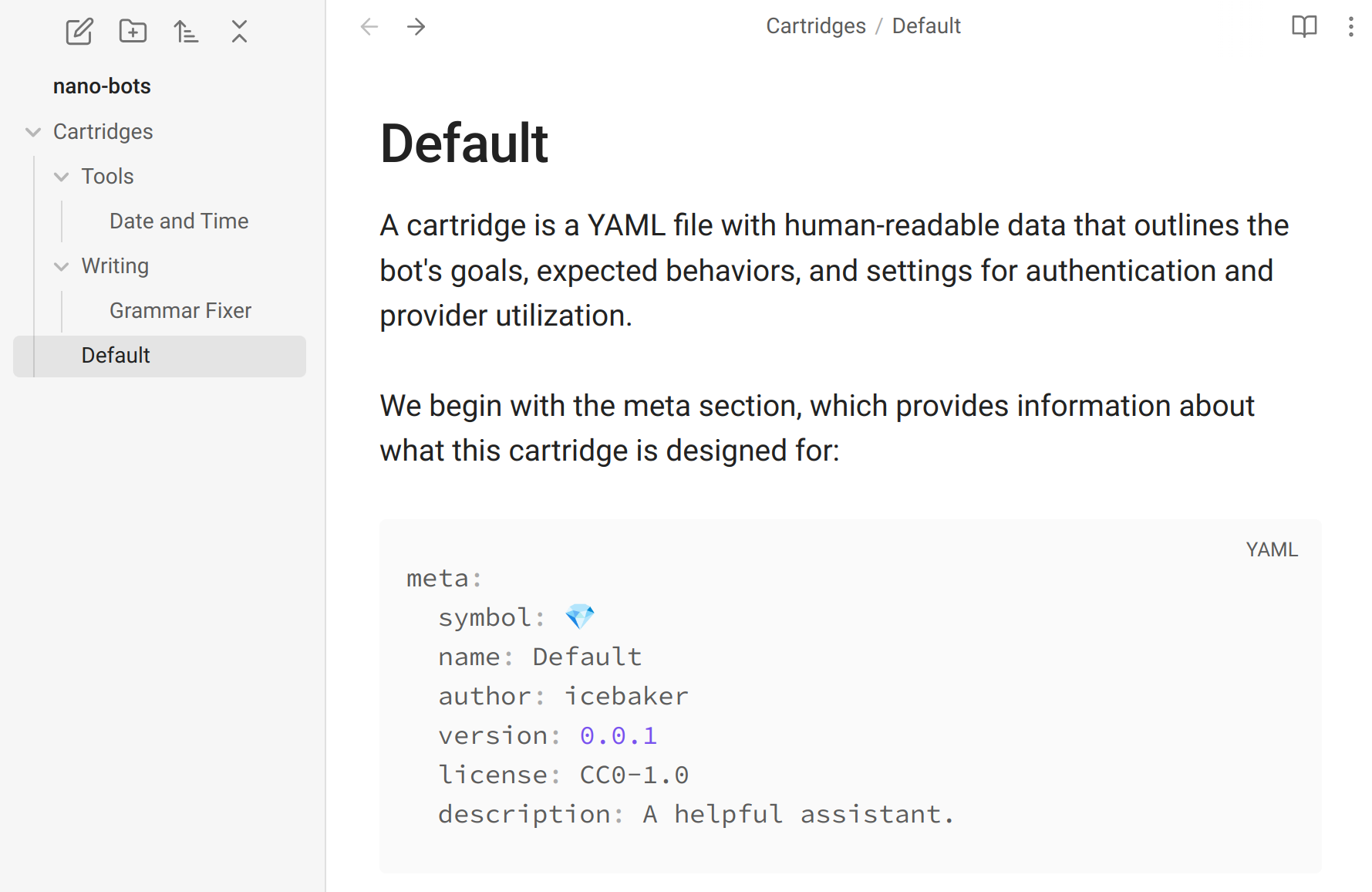

When executing the commands mentioned earlier, a prompt will appear asking you to select a Cartridge. The default Cartridge is the standard chat interaction. However, you can create your own Cartridges which will automatically appear in the command palette.

For further details on Cartridges, please refer to the Nano Bots specification.

You can override the default cartridge by creating your own with the name default.yml:

```yaml
---
meta:
  symbol: 🤖
  name: Default
  author: Your Name
  version: 1.0.0
  license: CC0-1.0
  description: A helpful assistant.

provider:
  id: openai
  credentials:
    address: ENV/OPENAI_API_ADDRESS
    access-token: ENV/OPENAI_API_KEY
  settings:
    user: ENV/NANO_BOTS_END_USER
    model: gpt-3.5-turbo
```

There are no default shortcuts, but you can add your own by going to "Settings" -> "Hotkeys" and searching for "Nano Bots".

These are recommended shortcuts that you may choose to add:

ctrl+b->Nano Bots: Evaluate

Note that you need to disable the default "Toggle bold" hotkey to use this.

Another option is to use Chord Hotkeys, which you can do with plugins like Sequence Hotkeys:

ctrl+b ctrl+b -> Nano Bots: Evaluate

ctrl+b ctrl+l -> Nano Bots: Apply

ctrl+b ctrl+p -> Nano Bots: Prompt

ctrl+b ctrl+k -> Nano Bots: Stop

Note that you would also need to disable the default "Toggle Bold" hotkey to use this.

Will my files/code/content be shared or uploaded to third-party services?

Absolutely not, unless you intentionally take action to do so. The files you're working on or have open in your writing app will never be uploaded or shared without your explicit action.

What information may be shared with third-party AI providers?

Only the small fragments of text/code that you intentionally choose to share. For example, the text you select while using the Evaluate command is shared with the Nano Bots Public API, which in turn shares it with the OpenAI API strictly to generate a response. If you choose to use your own local API, what is shared depends on your choice of providers and configuration.

Who are these third parties?

The data you deliberately choose to share is transmitted securely (HTTPS) to the Nano Bots Public API. This public API is open source and available for auditing here. It uses the OpenAI API for data processing, so any data you choose to share is also sent to the OpenAI API. According to OpenAI's policies, this data is not used for model training and is not retained beyond a 30-day period.

Is there an option to avoid sharing any information?

Sharing fragments of data is necessary to generate outputs. However, you can use your own local instance of the Nano Bots API. With this setup, all interactions happen locally on your machine, and the only data shared goes to your personal OpenAI API account. Alternatively, you can skip OpenAI altogether and connect the local Nano Bots API to your own local LLM, such as Ollama, for a completely local and private interaction.

Can I use this for private or confidential content/code?

For private or confidential content/code, we recommend that you or your organization conduct a thorough security and privacy assessment. Based on this, you may decide that the Nano Bots Public API and OpenAI's privacy policies are sufficient, or you may choose to use your own private setup for the API and LLM provider.

Do I need to pay to use this?

No. If you're using the default Nano Bots Public API, there's no cost involved, but you might encounter occasional rate limiting or stability issues. If you decide to use your own API and LLM provider, any associated costs will depend on your chosen provider. For instance, using the Nano Bots API locally with OpenAI will require a paid OpenAI Platform Account.

Is this project affiliated with OpenAI?

No, this is an open-source project with no formal affiliations with OpenAI or any of the other supported providers. It's designed for compatibility with various LLM providers, with OpenAI being the default one. As OpenAI is a private company, we can't provide any assurances about their services, and we have no affiliations whatsoever. Use at your own risk.

This project follows the MIT license. In plain language, it means:

The software is provided as is. This means there's no guarantee or warranty for it, including how well it works (whether it works as you expect), whether it's fit for your purpose, and whether it infringes on anyone else's rights (non-infringement). The people who made or own this software can't be held responsible if something goes wrong because of the software, whether you're using it, changing it, or doing anything else with it.

In other words, there's no promise or responsibility from us about what happens when you use it. So, it's important that you use it at your own risk and decide how much you trust it. You are the one in charge and responsible for how you use it and the possible consequences of its usage.

```sh
npm install    # install dependencies
npm run dev    # start a development build
npm run build  # create a production build
```

For more details, refer to the Obsidian documentation.

Releasing a new version:

- Update manifest.json with the new version number, such as 1.0.1, and the minimum Obsidian version required for the latest release.
- Update versions.json with "new-plugin-version": "minimum-obsidian-version" so that older versions of Obsidian can download an older, compatible version of the plugin.
- Create a new GitHub release using the new version number as the "Tag version". Use the exact version number and don't include a "v" prefix. See here for an example: https://github.com/obsidianmd/obsidian-sample-plugin/releases
- Upload the files manifest.json, main.js, and styles.css as binary attachments. Note: manifest.json must be in two places: the root path of the repository and the release.
- Publish the release.
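The first two release steps, plus the "no v prefix" rule for tags, can be automated. This is a minimal TypeScript sketch, assuming manifest.json uses the standard Obsidian `version` and `minAppVersion` fields and that versions.json is a flat map from plugin version to minimum Obsidian version. `bumpVersion` is a hypothetical helper, the version numbers in the usage example are placeholders, and reading/writing the two JSON files is left out so the logic stays easy to test.

```typescript
// Assumed shape of manifest.json: other fields are preserved untouched.
interface Manifest {
  version: string;
  minAppVersion: string;
  [key: string]: unknown;
}

// versions.json maps plugin versions to the minimum Obsidian version.
type Versions = Record<string, string>;

// Tags must be the bare version number, e.g. "1.0.1" (no "v" prefix).
const VERSION_PATTERN = /^\d+\.\d+\.\d+$/;

function bumpVersion(
  manifest: Manifest,
  versions: Versions,
  newVersion: string,
  minAppVersion: string = manifest.minAppVersion,
): { manifest: Manifest; versions: Versions } {
  if (!VERSION_PATTERN.test(newVersion)) {
    throw new Error(`Invalid version "${newVersion}": use x.y.z without a "v" prefix`);
  }
  return {
    manifest: { ...manifest, version: newVersion, minAppVersion },
    versions: { ...versions, [newVersion]: minAppVersion },
  };
}

// Placeholder values for illustration only.
const result = bumpVersion({ version: "1.0.0", minAppVersion: "0.15.0" }, { "1.0.0": "0.15.0" }, "1.0.1");
console.log(JSON.stringify(result.versions)); // {"1.0.0":"0.15.0","1.0.1":"0.15.0"}
```

Returning new objects instead of mutating the inputs keeps the sketch side-effect free; a release script would write `result.manifest` and `result.versions` back with `JSON.stringify`.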