Click here for the Huggingface link with a video of the implementation

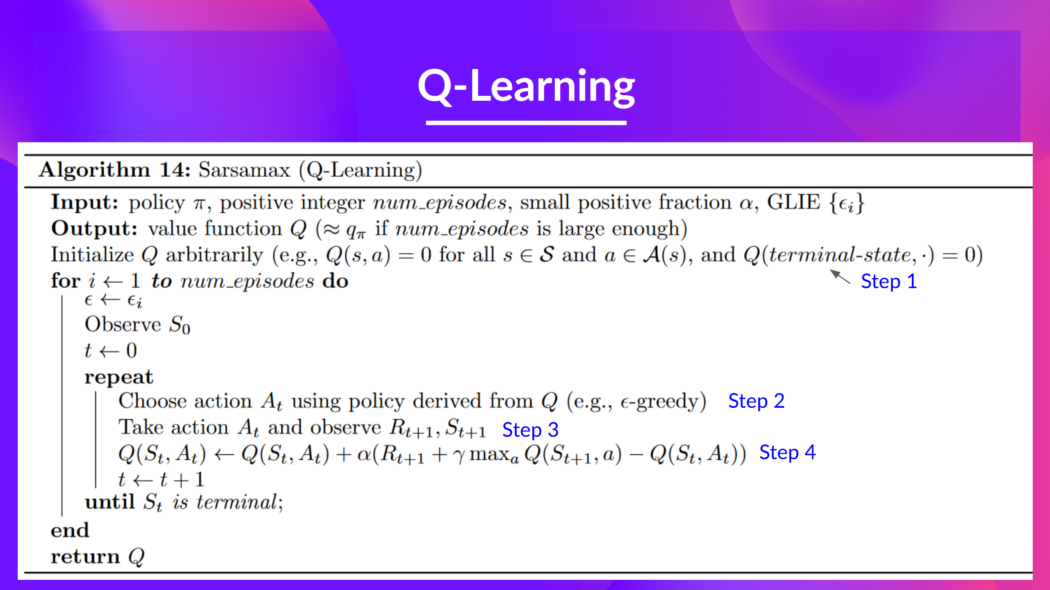

Q = "the Quality" of that action at that state. Q-learning is a model-free, value based, off-policy Reinforcement Learning algorithm which uses the Temporal Difference Control approach of updating the action-value function at each step instead of at the end of the episode, to learn the value of an action in a particular state.

- Definition Keypoints:

- Model-Free

- Off-Policy

- Uses TD Approach

- Value Based Method

- Control Problem

These algorithms seek to learn the consequences of their actions through experience. Such an algorithm will carry out an action multiple times, learn from its actions and will adjust the policy (the strategy behind its actions) for optimal rewards, based on the outcomes.

Eg, Self-Driving Cars.

In such an algorithm, an agent tries to understand its environment and creates a model for it based on its interactions with this environment. In such a system, preferences take priority over the consequences of the actions i.e. the greedy agent will always try to perform an action that will get the maximum reward irrespective of what that action may cause.

Eg, Playing Chess

Such an algorithm uses a diffrent policy for Acting and Updating.

Eg, In Q-Learning Algorithm:

Acting Policy: Epsilon Greedy Policy

Updating Policy: Greedy Policy for selecting the best next-state action value to update the Q-value.

Such an algorithm uses the same policy for Acting and Updating.

-Trains Q-Function (an action-value function) which internally is a Q-table that contains all the state-action pair values.

-Given a state and action, the Q-Function will search in its Q-table, the corresponding value.

-When the training is done, an optimal Q-function is obtained, which means an optimal Q-Table is obtained.

-Since there is an optimal Q-function, there is an optimal policy because for each state the best action to take is now known.