-

Notifications

You must be signed in to change notification settings - Fork 1.2k

Adding 50Mb+ Files to a browser js-ipfs crashes Chrome #952

Comments

|

@ya7ya Thanks for the report! I believe this issue to be related to the use of WebRTC and not necessarily with large files. |

|

@ya7ya wanna try disabling WebRTC and adding a large file? |

|

@diasdavid hey, I'll try that now 👍 |

|

Hey @diasdavid , I tried to disable WebRTC and it still crashed, However I had an idea and it kinda worked. instead of adding the file buffer using readFileContents(file).then((buf) => {

// create Readable Stream from buffer. requires 'stream-buffers'

let myReadableStreamBuffer = new streamBuffers.ReadableStreamBuffer({

chunkSize: 2048 // in bytes.

})

window.ipfs.files.createAddStream((err, stream) => {

if (err) throw err

stream.on('data', (file) => {

console.log('FILE : ', file)

})

myReadableStreamBuffer.on('data', (chunk) => {

myReadableStreamBuffer.resume()

})

// add ReadableStream to content

stream.write({

path: file.name,

content: myReadableStreamBuffer

})

// add file buffer to the stream

myReadableStreamBuffer.put(Buffer.from(buf))

myReadableStreamBuffer.stop()

myReadableStreamBuffer.on('end', () => {

console.log('stream ended.')

stream.end()

})

myReadableStreamBuffer.resume()

})

})the browser didn't crash, but chrome got super resource hungry and ate up alotta ram. What I did is instead of adding the content as 1 big buffer, i chunked it into a stream to throttle it. it took alot longer but it didn't crash. |

|

That tells me that we need stress tests sooner than ever. Thank you @ya7ya! |

|

Having this same issue. I watched resources go to nearly 12GB of swap and 6GB of ram in about 20 minutes before killing the Chrome Helper process. Running js-ipfs in browser. How could a 80mb file possibly cause all of this without some sort of severe memory leak occurring? |

|

I'm curious to see if libp2p/js-libp2p-secio#92 and browserify/browserify-aes#48 will actually mitigate this problem. |

|

If you're interested to see if the two issues @diasdavid linked above would help with this one (952) and you're using webpack, you could try adding |

|

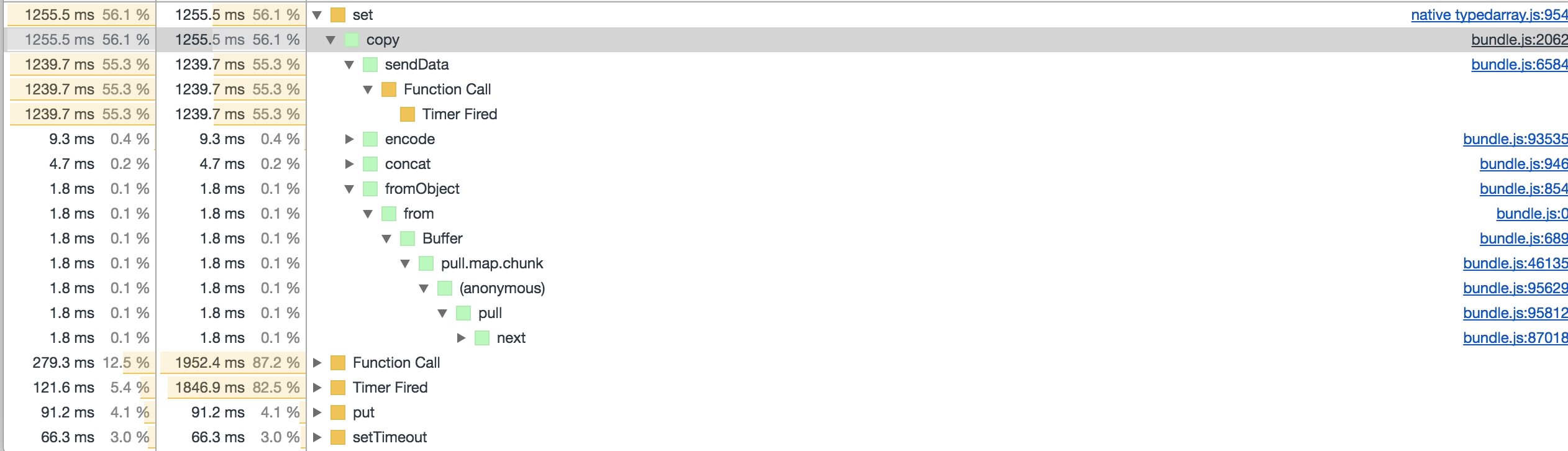

Did some tests with that, and it worked nicely with a 70mb file for me. Doing some more perf analysis it seems we still have a few places where we do a lot of Buffer copies which get expensive and we should look into optimising those away: Where the first |

|

Turned out all the stream issues from above where coming from the example and using the node stream api. Switched things to pull-streams and now we are fast and no crashing even with 300Mb: #988 |

|

@dignifiedquire Thanks 👍 , This is a much more elegant solution. That's what I had in mind in the first place but my pull-streams game is still weak 😄. |

|

@dignifiedquire I have a noob question Regarding this file uploader, How do you measure progress in a pull-stream, Like How much of the data was pulled to pull(

pull.values(files),

pull.through((file) => console.log('Adding %s', file)),

pull.asyncMap((file, cb) => pull(

pull.values([{

path: file.name,

content: pullFilereader(file)

}]),

node.files.createAddPullStream(),

pull.collect((err, res) => {

if (err) {

return cb(err)

}

const file = res[0]

console.log('Adding %s finished', file.path)

cb(null, file)

}))),

pull.collect((err, files) => {

if (err) {

throw err

}

if (files && files.length) {

console.log('All Done')

}

})

) |

content: pull(

pullFilereader(file),

pull.through((chunk) => updateProgress(chunk.length))

) |

|

@dignifiedquire Thanks alot dude 👍 , It works. I appreciate the help. |

|

Perhaps dignifiedquire/pull-block#2 would solve the crashes with the original |

|

I ran the old example and indeed it should be much better with pull-block 1.4.0 landing there. |

|

Lot's of improvements added indeed! Let's redirect our attention to PR #1086 (comment), I successfully managed to upload more than 750Mb and fetch it through the gateway. |

Windows cannot have secrets (temporarily). License: MIT Signed-off-by: Alan Shaw <alan.shaw@protocol.ai>

files.addType: Bug

Severity: Critical

Description: When trying out the

exchange-files-in-browserexample, uploading a file larger than 50MB crashes the browser, The Ram gradually starts filling up till it crashes.Steps to reproduce the error:

exchange-files-in-browserexample directory.npm installnpm startEdit: Version fix.

The text was updated successfully, but these errors were encountered: