
Cannot use WaveGAN with Glow-TTS and NVIDIA's Tacotron2 #169

Comments

|

Let me confirm some points:

|

|

Thank you for your reply.

Thanks! |

|

I checked the following code.

Why don't you try the following procedure? txt -> [Taco2] -> Mel -> [de-compression] -> [log10] -> [cmvn] -> [PWG] |
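The de-compression and log10 steps of that procedure can be sketched in plain Python. This is only a sketch: it assumes the Tacotron2-style compression is a natural log of the mel magnitude with multiplier C = 1 (check `audio_processing.py` in your own checkout), the function names are hypothetical, and the input values are made up. Under that assumption the two steps collapse to a division by ln(10).

```python
import math


def decompress(value, C=1.0):
    # Inverse of natural-log compression log(x * C); C=1.0 is an assumed default.
    return math.exp(value) / C


def to_log10(value, C=1.0):
    # De-compress to a linear magnitude, then take log10 for the vocoder side.
    return math.log10(decompress(value, C))


frame = [-4.0, -1.5, 0.0, 0.7]                 # made-up natural-log mel values
converted = [to_log10(v) for v in frame]
shortcut = [v / math.log(10) for v in frame]   # same result when C == 1
```

The mean-variance normalization (cmvn) with the vocoder's own statistics still has to be applied after this conversion.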

|

I'm having the same problem, but I don't understand the Here's what I've got:

from audio_processing import dynamic_range_decompression

# generate the MEL using Glow-TTS

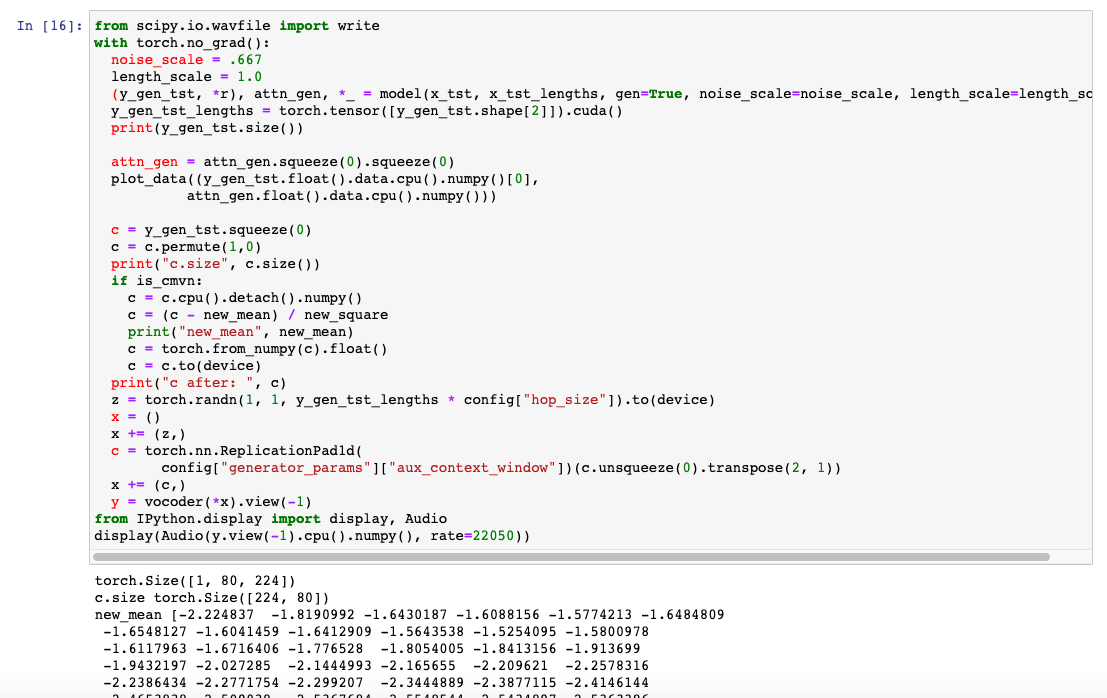

with torch.no_grad():

    noise_scale = .667

    length_scale = 1.0

    (c, *r), attn_gen, *_ = model(x_tst, x_tst_lengths, gen=True, noise_scale=noise_scale, length_scale=length_scale)

# Decompress and log10 the output

decompressed = dynamic_range_decompression(c)

decompressed_log10 = np.log10(decompressed.cpu()).cuda()

# Run the PWG vocoder and play the output

with torch.no_grad():

    xx = (decompressed_log10,)

    y = pqmf.synthesis(vocoder(*xx)).view(-1)

from IPython.display import display, Audio

display(Audio(y.view(-1).cpu().numpy(), rate=config["sampling_rate"]))

|

|

# load PWG statistics

mu = read_hdf5("/path/to/stats.h5", "mean")

var = read_hdf5("/path/to/stats.h5", "scale")

sigma = np.sqrt(var)

# mean-var normalization

decompressed_log10_norm = (decompressed_log10 - mu) / sigma

# then input to vocoder

... |

|

@kan-bayashi You are amazing! For anyone else running into this, you have to change the tensor shapes for

from audio_processing import dynamic_range_decompression

from parallel_wavegan.utils import read_hdf5

# config

stats_path = '/path/to/stats.h5'

# generate the MEL using Glow-TTS

with torch.no_grad():

    noise_scale = .667

    length_scale = 1.0

    (c, *r), attn_gen, *_ = model(x_tst, x_tst_lengths, gen=True, noise_scale=noise_scale, length_scale=length_scale)

# Decompress and log10 the output

decompressed = dynamic_range_decompression(c)

decompressed_log10 = np.log10(decompressed.cpu()).cuda()

# mean-var normalization

mu = read_hdf5(stats_path, "mean")

var = read_hdf5(stats_path, "scale")

sigma = np.sqrt(var)

decompressed_log10_norm = (decompressed_log10 - torch.from_numpy(mu).view(1, -1, 1).cuda()) / torch.from_numpy(sigma).view(1, -1, 1).cuda()

# Run the PWG vocoder and play the output

with torch.no_grad():

    xx = (decompressed_log10_norm,)

    y = pqmf.synthesis(vocoder(*xx)).view(-1)

from IPython.display import display, Audio

display(Audio(y.view(-1).cpu().numpy(), rate=config["sampling_rate"]))

|

|

@seantempesta Great! |

|

Now we can combine with NVIDIA's tacotron2-based models. |

|

Hi @seantempesta. I copied your code and got the following error: Could you give me some advice?(e.g. In |

|

@Charlottecuc There are three vocoder models in this repository: PWG, MelGAN, and multi-band MelGAN.

Only the multi-band MelGAN needs a PQMF filter as post-processing to convert the 4-channel signal into a 1-channel signal. @seantempesta used multi-band MelGAN, so the input is only |
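A minimal sketch of that distinction, using stand-in callables rather than real models. The helper `synthesize` and the `FakePQMF` class are hypothetical; the only thing taken from the thread is that the multi-band output goes through `pqmf.synthesis(...)` while the other vocoders are called directly.

```python
def synthesize(vocoder, mel, pqmf=None):
    """Run a vocoder; apply PQMF synthesis only when a PQMF object is given.

    Hypothetical helper: `vocoder` is any callable, and `pqmf` is any object
    with a `synthesis` method (as used for multi-band MelGAN in this thread).
    """
    out = vocoder(mel)
    if pqmf is not None:
        out = pqmf.synthesis(out)  # merge sub-band channels into one waveform
    return out


class FakePQMF:
    # Stand-in that sums 4 sub-band channels sample by sample. A real PQMF
    # applies synthesis filters; this only mimics the 4 ch -> 1 ch change.
    def synthesis(self, bands):
        return [sum(samples) for samples in zip(*bands)]


# Full-band vocoder (PWG or MelGAN): no PQMF step.
full_band = synthesize(lambda mel: [0.1, 0.2], mel=None)

# Multi-band vocoder: four sub-band channels merged by the PQMF stand-in.
multi_band = synthesize(
    lambda mel: [[1, 2], [1, 2], [1, 2], [1, 2]], mel=None, pqmf=FakePQMF()
)
```

With PWG or MelGAN, the `pqmf.synthesis` call from the snippets above would simply be dropped.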

|

@kan-bayashi Great!!!!!Thank you for your advice. |

|

I've never met such a problem. |

|

Solved. Thank you :) |

|

@kan-bayashi the synthesized voice has a lot of noise. Could you please take a look? |

|

@ly1984 Please check the hyperparameters of mel-spectrogram extraction. Maybe you use different fmax and fmin. |

|

I found that WaveGAN uses fmin: 80 and fmax: 7600, while Glow-TTS uses "mel_fmin": 0.0 and "mel_fmax": 8000.0. |

|

Yes. You need to retrain PWG or Glow-TTS to match the configuration. |
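A quick way to spot this kind of mismatch before synthesizing is to compare the two configurations key by key. The sketch below is hypothetical: the helper name and the key pairing are assumptions about how the two configs are laid out, and only the fmin/fmax values come from this thread.

```python
def find_mismatches(vocoder_cfg, tts_cfg, key_pairs):
    """Return (vocoder_key, tts_key, vocoder_value, tts_value) for differing settings."""
    mismatches = []
    for voc_key, tts_key in key_pairs:
        voc_val, tts_val = vocoder_cfg.get(voc_key), tts_cfg.get(tts_key)
        # A missing key counts as a mismatch; otherwise compare numerically.
        if voc_val is None or tts_val is None or float(voc_val) != float(tts_val):
            mismatches.append((voc_key, tts_key, voc_val, tts_val))
    return mismatches


# Only the fmin/fmax values come from this thread; hop values are illustrative.
vocoder_cfg = {"fmin": 80, "fmax": 7600, "hop_size": 256}
tts_cfg = {"mel_fmin": 0.0, "mel_fmax": 8000.0, "hop_length": 256}
key_pairs = [("fmin", "mel_fmin"), ("fmax", "mel_fmax"), ("hop_size", "hop_length")]

mismatches = find_mismatches(vocoder_cfg, tts_cfg, key_pairs)
for item in mismatches:
    print(item)
```

Any pair reported here means the mel-spectrograms are computed differently on the two sides, so one of the models has to be retrained with matching settings.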

|

@kan-bayashi I am trying to use your models with mel spectrogram output from Nvidia's models, and although the above suggested methods get some results, the results are rather lackluster. Here's a colab notebook of an experiment

I observe the following from the experiment:

Can you please comment on, and help me identify any problems with

|

|

@rijulg Did you check this comment? And of course, for the best quality, you need to match the feature extraction settings (e.g. FFT, shift). |

|

@kan-bayashi yes, I am indeed doing the log base conversion; I guess I (mistakenly) considered the log conversion part of your mean-var normalization process so did not mention it separately. |

|

In your code, the range of mel-basis is different. |

|

Ah, alright. Just to confirm there is no way of scaling right? Leaving the only option of retraining the models? |

|

Unfortunately, you need to retrain :( |

|

@seantempesta I tried your fix for GlowTTS inference with multiband melgan using the Mozilla-TTS multiband melgan. It did take away the noisy background, but it left me with garbled words. |

|

I ended up trying another method provided in the repo https://github.com/rishikksh20/melgan. I got the same garbled voice results. |

|

Since the sound itself is not affected by wav normalization (audio /= (1 << (16 - 1))), is there a way to use a PWGAN trained without wav norm to synthesize the output of a tacotron2 model trained with wav norm? Additionally, does anyone know if wav norm is needed for tacotron2 to converge? I tried training without wav norm to match an internal PWGAN trained without wav norm, but tacotron2 ran 30k steps without attention alignment. |

|

@lucashueda I did not understand ‘wav norm’ you mentioned. Did you use wav with the scale from -66536 to 66536? |

By "wav norm" I mean "audio /= (1 << (16 - 1))", which scales a 16-bit PCM file to between -1 and +1. But I realize that different wav readers read these files differently. I was confused by the "bin/preprocess.py" file: if I pass the input_dir argument, it just calls "load_wav" to read a wav file, but if you pass a kaldi-style file, it performs the "wav norm". As far as I saw, though, the soundfile package already performs the normalization. |

|

Both cases will normalize the audio from -1 to 1. |
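As a small illustration of the "wav norm" expression quoted above, here is a plain-Python sketch with made-up sample values. The helper name is hypothetical; readers that already return floating-point audio should not be scaled a second time.

```python
def int16_to_float(samples):
    # Divide by 2**15 so 16-bit PCM values land in [-1.0, 1.0).
    return [s / (1 << (16 - 1)) for s in samples]


pcm = [-32768, -16384, 0, 16384, 32767]   # made-up 16-bit PCM samples
normalized = int16_to_float(pcm)
```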

Hi @kan-bayashi , why do you need to do np.sqrt(var) ? In the compute_statistics.py, you have saved scale_ instead of var_. |

|

Oh, |
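For context on that question: if the statistics were saved from scikit-learn's `StandardScaler` (an assumption here; check how your own stats.h5 was produced), then `scale_` is already the standard deviation, i.e. the square root of `var_`, and a second square root leaves the features at the wrong scale. A plain-Python sketch with made-up numbers for a single mel bin:

```python
import math
import statistics

values = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # made-up values for one mel bin

mean = statistics.mean(values)
var = statistics.pvariance(values)   # plays the role of var_
scale = math.sqrt(var)               # plays the role of scale_

# Dividing by scale gives unit standard deviation.
correct = [(v - mean) / scale for v in values]

# Taking sqrt of an already-square-rooted value does not.
double_sqrt = [(v - mean) / math.sqrt(scale) for v in values]

print(statistics.pstdev(correct), statistics.pstdev(double_sqrt))
```

Under that assumption, the normalization in the snippets above would use the "scale" entry directly as sigma.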

Hi. I trained the tacotron2 model (https://github.com/NVIDIA/tacotron2) and Glow-TTS model (https://github.com/jaywalnut310/glow-tts) by using the LJ speech dataset and can successfully synthesize voice by using WaveGlow as vocoder.

However, when I turned to Parallel WaveGAN, the synthesized waveform is quite strange:

(At training time, the hop_size, sample_rate and window_size were set to the same values for the Tacotron, WaveGlow and WaveGAN models.)

I successfully synthesized speech using WaveGAN with espnet's FastSpeech, but I failed to use WaveGAN to synthesize intelligible voice with any model derived from NVIDIA's Tacotron2 implementation (e.g. Glow-TTS). Could you please give me any advice?

(Because in NVIDIA's Tacotron2 there is no cmvn applied to the input mel-spectrogram features, I didn't calculate the cmvn of the training waves and didn't invert it back at inference time.)

Thank you very much!
