-

Notifications

You must be signed in to change notification settings - Fork 27

V8 5.9 & Node.js 8.0 #146

Comments

|

/cc @nodejs/ctc @nodejs/v8 @nodejs/lts |

|

@rvagg we tested that same build on some private application code. Load testing that turned out to give 5-15% more throughput overall, even with all those regressions in the microbenchmarks. Even if streams microbenchmarks are lower, I see better throughput on some more realistic streams tests. I would note that our microbenchmarks are more written for crankshaft.js than our codebase. I think if we support v8 5.9, we might have to backport some of the performance regressions for which later v8 releases has fixes. As an example v8/v8@6d38f89 (which is be in 6.0) solves a very bad regression in the Given that v8 5.9 has been released, can we just have a build done with that, so we can check again? |

|

Thanks for the data point @mcollina, this is interesting. https://nodejs.org/download/nightly/ has builds for |

|

We had looked into performance and saw only improvements from 5.8 to 5.9 /cc @psmarshall |

|

As per the discussion in the thread for the CTC meeting I agree that we should have something which shows an assessment of the data (micro benchmarks, nightly runs, other data) to support whether it should be pulled in or not. I think earlier suggestion from @MylesBorins (possibly in the LTS WG) was that we might want to skip 5.9 and wait for 6.0 before updating Node version 8. The rational being that 6.0 will have lots of fixes (performance and otherwise) and we don't want to go LTS with V8 5.9, it should either be V5.8 or 6.0. |

|

If someone wants to spend some time on this, maybe a good way to run the benchmarks would be to compare 8.1.0 with the commits in the 8.2.0 proposal, that would remove all the extra noise. Also I believe that most of the benchmarks above are run on Windows. I don't know if it would make much difference but Linux is arguably more important as a deployment platform for Node (yes we have a very high number of Windows users but it's just not used for prod deployments as much). |

|

@rvagg That’s one of the reasons I’d like to see an 8.2.0 RC with V8 5.9 – it would make it a bit easier for people to check how their own applications perform. (And to answer your question in the release proposal thread: Yes, of course we can back out those changes if we agree that that’s the best course of action.) |

|

I looked into the benchmarks mentioned above, I'll write an overview here and get the data into a better format for more details soon. A lot of the regressions we saw happened to rely on some optimization that crankshaft had, and turbofan didn't. Because these are microbenchmarks, we saw that missing one optimization can tank a whole benchmark. In larger benchmarks, we might be a little bit slower here, and a little bit faster there, but we mostly see overall performance gains in the range of 5-15% like @mcollina mentioned. The microbenchmarks do give us useful information - they point out that one particular thing didn't work as fast as it did in crankshaft for some reason. e.g. In the url benchmarks we saw -40% to -70% regressions. This was due to missing inlining which is now fixed (see v8/v8@23ee743). These types of fixes also gave noticeable performance boosts on other, non-node benchmarks, so that's an indication that they are useful, and not just overfitting to the benchmark. http (one of the worst regressions) is basically entirely down to handling of String charCodeAt, which crankshaft inlined aggressively (tracking bug). Same with path. More data to come tomorrow. |

@rvagg When we had the last CTC/V8 meeting (or at least the last one I was able to go to), it was the V8 team that both proposed/organized the meeting and brought up the issue of CrankshiftScript in our code. (I think it was even one of them--Benedikt, maybe?--that coined the term.) They seemed very interested/invested in helping us figure out how to get from our current codebase to something that would play as nice or better with Turbofan. I could be wrong, but I think we're getting a lot of help from them already, even if it may not be as visible as we might expect. And I think requests for more help in specific areas would not fall on deaf ears. Apologies in advance if it sounds like I'm reading something into your comment that isn't there. I've personally been extremely pleased and grateful about the V8 team's assistance with Node.js for a long time now and so I'm taking the opportunity to express that. And none of this takes away from the main issue of course, which is that the task is enormous and fraught with "moving lever A fixes spring B but causes button C to stop working" type perils. We should plan and discuss publicly, like you're doing here. |

Sorry for not being absolutely clear—I agree that the fruit that the Node/V8 relationship is bearing is impressive and mutually valuable and I'm not trying to knock that, just that it seems to me from looking from the outside that the way we're going to have to tackle the problem is by changing things on our end to better adapt to V8 rather than expecting V8 to adapt to our overoptimised codebase. I also think that's perfectly reasonable fwiw because it's not as if our codebase is representative in any way of typical Node.js, nor is it idiomatic ES3/5, it really is CrankshaftScript and that's a dead reality now. So we're in for pain either way and it's exactly the whack-a-mole problem you mentioned that I'm most afraid of because it's bitten us so hard multiple times in the past and we now need to do it on a scale that we've never attempted before. I hope I'm not being interpreted as being willfully obstructionist here, I'm really only concerned about the issues I laid out in the OP. If the answer is simply that our benchmarks, or the particular runs of the benchmarks taken from the old 5.9 candidate, don't match a reality that we think matters then we significantly simplify this issue. 8.2.0-rc.1 coming soon, see nodejs/node#13744 (comment) |

|

@not-an-aardvark nodejs/node#13744 (comment):

We really are overdue for a proper representative benchmark suite. |

|

Per @rvagg's broadcast on twitter, here's a set of benchmarks run on 7.10.0 and 8.2.0-rc.1 (all values are ops/sec): I can explain what the benchmarks are if anyone's interested, but it sounds like the goal is just to see how some real world applications do. Some of the most interesting cases are the four that start with "QS" and the five that start with "Clamp" -- those are all different implementations of the same functions that we tested during development before picking the fastest to actually ship. For QS the fastest implementation is different between v8 versions. Clamp is within margin of error. These are all math-heavy functions and I think they would all run in d8. That is, these shouldn't be reflective of node API performance. I haven't looked at the generated assembly yet, and I have limited time to do so in the next few weeks if it would be helpful. BTW, from the buffer-swap.js benchmarks in the OP, only the ones with len=128 runs in JS; the others are CPP. (And only the ones in JS have a perf degradation.) I think you're also missing the |

|

I'd love to see the CrankshaftScript replaced with more idiomatic JS (or C++) to enable better performance possibilities from alternative JS runtimes like ChakraCore (used in node-chakracore) and SpiderMonkey (spidernode). RIght now, I don't think the Nodejs ecosystem isn't as strong as it could be due to this coupling between Node.js and v8 that effectively limits real-world deployable innovations (memory use, requests per second per watt, etc) versus browser-side JS. To echo folks above, I'd really like to see some hot-path aspects of this corrected for the next LTS. @rvagg for my purposes, AcmeAir, SSR benchmarks, and Octane are a reasonable iron triangle for community-run CI. Between the raw benchmark times+memory use, and monitoring/pinning JIT stats (bailouts, re-JITs, etc), I think many of the app-level regression cases for my context would be caught. |

|

(FWIW, besides |

|

The old data seems really outdated. I've just run the nodejs/node#11851 (comment) The pros and cons seem drastically different now (8.1.2 vs 8.2.0-rc-1): Label (click me): improvement confidence p.value

arrays\\var-int.js n=25 type="Array" -1.38 % 0.1449170222

arrays\\var-int.js n=25 type="Buffer" -3.60 % *** 0.0002448808

arrays\\var-int.js n=25 type="Float32Array" 0.51 % 0.6640802009

arrays\\var-int.js n=25 type="Float64Array" 0.33 % 0.7028409025

arrays\\var-int.js n=25 type="Int16Array" -1.35 % 0.1832062399

arrays\\var-int.js n=25 type="Int32Array" -2.18 % 0.0772578995

arrays\\var-int.js n=25 type="Int8Array" -2.88 % *** 0.0006455217

arrays\\var-int.js n=25 type="Uint16Array" -3.62 % *** 0.0003638146

arrays\\var-int.js n=25 type="Uint32Array" -1.99 % 0.1343990743

arrays\\var-int.js n=25 type="Uint8Array" -3.08 % ** 0.0074982413

improvement confidence p.value

arrays\\zero-float.js n=25 type="Array" 18.03 % *** 7.646284e-37

arrays\\zero-float.js n=25 type="Buffer" 21.01 % *** 7.406994e-40

arrays\\zero-float.js n=25 type="Float32Array" 20.62 % *** 1.541712e-31

arrays\\zero-float.js n=25 type="Float64Array" -16.98 % *** 7.101956e-24

arrays\\zero-float.js n=25 type="Int16Array" -3.52 % *** 9.136824e-05

arrays\\zero-float.js n=25 type="Int32Array" -0.24 % 7.432021e-01

arrays\\zero-float.js n=25 type="Int8Array" 19.16 % *** 3.006861e-29

arrays\\zero-float.js n=25 type="Uint16Array" -3.33 % *** 1.387510e-06

arrays\\zero-float.js n=25 type="Uint32Array" -1.14 % 1.474507e-01

arrays\\zero-float.js n=25 type="Uint8Array" 20.62 % *** 1.324800e-28

improvement confidence p.value

arrays\\zero-int.js n=25 type="Array" 16.56 % *** 1.737679e-21

arrays\\zero-int.js n=25 type="Buffer" 20.61 % *** 7.972851e-34

arrays\\zero-int.js n=25 type="Float32Array" 20.84 % *** 7.841709e-36

arrays\\zero-int.js n=25 type="Float64Array" -16.23 % *** 8.517111e-22

arrays\\zero-int.js n=25 type="Int16Array" -2.22 % 5.165204e-02

arrays\\zero-int.js n=25 type="Int32Array" 0.96 % 1.183342e-01

arrays\\zero-int.js n=25 type="Int8Array" 19.63 % *** 8.789434e-33

arrays\\zero-int.js n=25 type="Uint16Array" -2.96 % ** 5.486944e-03

arrays\\zero-int.js n=25 type="Uint32Array" 0.42 % 4.711222e-01

arrays\\zero-int.js n=25 type="Uint8Array" 21.33 % *** 8.041571e-33

|

|

@bmeurer, @nodejs/v8 Can you explain the https://github.com/nodejs/node/blob/master/benchmark/arrays/zero-float.js |

|

The Opbeat test suite is seeing significant performance degradations on Node 8. I've been trying to debug it for the last two weeks, and have found that one of the major victims seem to be Bluebird. I'd love to provide some benchmarks here if anyone are interested? (and in that case, what type of benchmarks would you prefer?) |

|

@watson That’s definitely something to look into, but to clarify, you are not talking about the 8.2.0 RC, but all versions of Node 8? |

|

@watson Node 8 currently has V8 5.8. It would be interesting to compare that with 8.2.0-rc-1. |

|

@targos Travis uses nvm to manage Node versions and nvm doesn't support RCs yet. But you can download the binary from the site during the build. EDIT: Simultaneous comment. :) |

|

That's wrong, nvm supports rc. $ nvm install rc

Downloading and installing node v8.2.0-rc.1...

Downloading https://nodejs.org/download/rc//v8.2.0-rc.1/node-v8.2.0-rc.1-darwin-x64.tar.xz...

######################################################################## 100.0%

Computing checksum with shasum -a 256

Checksums matched!

Now using node v8.2.0-rc.1 (npm v5.0.3)

rc -> v8.2.0-rc.1

Clearing mirror cache...

Done!/cc @ljharb maybe close out nvm-sh/nvm#779 |

|

@SimenB Where did you get that? I have the latest stable |

|

|

Huh, lemme check how that works for me... EDIT: Ah, from zsh-nvm. https://github.com/lukechilds/zsh-nvm. @lukechilds upstream it? ❤️ |

|

@SimenB Currently very busy working on something else, but it's on my list. |

|

|

|

@watson, when you say provide some benchmarks, I'd be interested to know what you had in mind. Are they micro-benchmarks are more system level. The Benchmarking WG is still looking for more system level benchmarks to add to the nightly runs. The goal is to cover the main Node.js uses cases and there are still many gaps. |

|

@mhdawson you didn't ask me, but for my purposes across several companies that deployed nodejs to production in both embedded (ARM SoC) and microservices (deployed to AWS and Azure) environments over the last ~5 years:

|

|

Can we get someone to help drive this through to resolution? I can't do it unfortunately but it needs someone, or some people, who are interested enough in seeing an answer come out of this to make sure that the CTC has either enough data, or solid enough recommendations from trusted parties, in order to make a decision. The alternative is to make a implicit decision for the negative by letting this thread go stale while we wait for Node 9, and even though I'd personally be comfortable with that I don't think it's responsible decision making on our part! |

|

I propose that we don't update V8 to 5.9 on |

|

I wouldn’t say that we are in that much of a hurry anyway, at the very least we should wait until after the July security release, and until nodejs/node#13804 is resolved. |

|

Can we make V8 6.0 have the same ABI of 5.8? |

|

@mcollina 5.8 was patched on v8.x to have the same ABI as 6.0. |

|

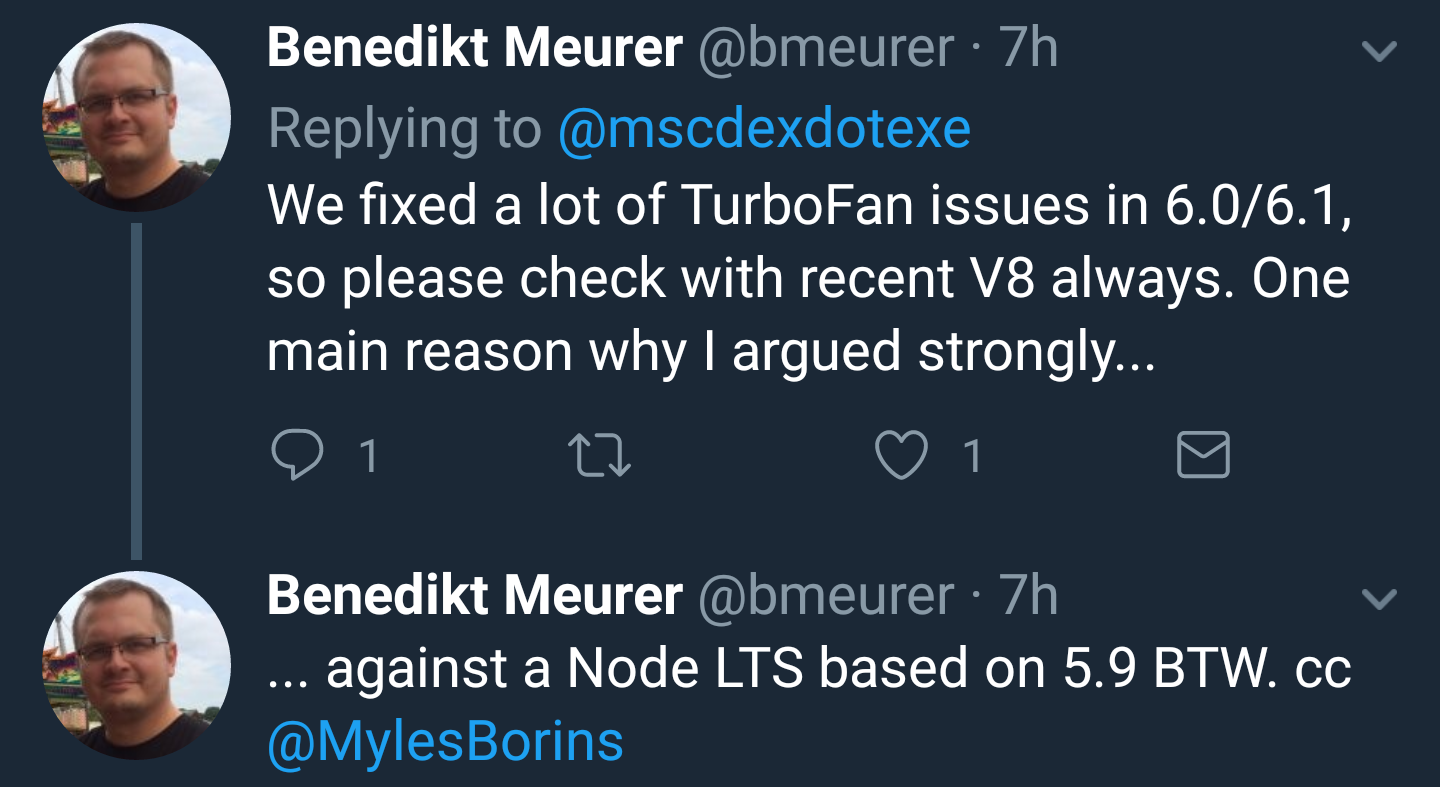

I am +1 on updating early and then cherry-picking fixes from 6.1. I would like to make mid-August as our deadline for a release with turbofan. That will give us a couple of months of testing before 8 become LTS. Any later than that, and we might have to stick with V8 5.8. I'm also ok in releasing with V8 5.9, and then migrate to 6.0 at the end of July. A lot of issues will not come out unless we do a full release. The sooner we start the better. I agree with @bmeurer that it is probably better to have V8 6.0 (plus cherry-picks) when node 8 goes LTS. |

|

I'm good with skipping 5.9 on 8.x and jumping to 6.0 no later than mid august. |

|

I generally support this idea. But it would be nice to get early accessible builds of Node 8 with 6.0 then. Can you maybe do like one or two RCs with 6.0 (which is pretty much frozen at this point), so people can test their apps and we can figure out which cherry-picks we need from 6.1 and what we need to to fix on 6.1 still? |

|

Also it'd be awesome to get an agreement on some kind of standard benchmark suite to reduce uncertainty somewhat in these situations. Like "don't significantly regress on AcmeAir, JetStream, ARES-6, TypeScript compiling itself and Webpack bundling some big app"? |

|

I opened nodejs/node#14004 to track the update to V8 6.0. |

|

Nice, that was quick :-) |

|

I want to chime in that focusing the decision primarily on performance at this point seems to be missing the point A large portion of the decision was made due to the backporting and security concerns of sticking with the old pipeline for LTS. It is important that we do not lose sight of those challenges while making the decision. While performance may not be at 100% of the old pipeline, this can improve over the 30 months of LTS. We will be unable to improve the security story or keep up with backports if we do not upgrade edit: removed disingenuous as it was harsh |

|

cc @natorion |

|

I agree that performance does not necessarily have to be at 100% but I think @rvagg (sorry if I'm mis-interpreting) is saying that we need data to be able to make an informed decision which I agree with. It is important to have enough data so that we can make an informed decision and be able to set expectations. If we think the right decision is to take a 20% performance hit (just an example not saying this is the case) and then improve over the LTS cycle that may be ok provided we communicate that ahead of time. On the other hand if don't have any idea what that impact is and end users discover a large performance regression in the LTS line that may impact confidence in the project. |

|

@mhdawson Just so we’re on the same page, what I understand Myles as saying, and what I understood to be the outcome #99 and all prior discussion that we had before this issue was opened, is that there are other very important factors that play into this decision that aren’t even related to performance, such as the impact it has on even just our ability to support Node 8 for the next 2 years. That being said: We have zero indication that real-world applications perform worse with TF+I, and everybody who I have seen give numbers so far has seen something between a 5 % and a 20 % performance increase, which matches the numbers reported by the V8 team (independently of Node) iirc. Yes, it’s unfortunate that we couldn’t get more feedback so far, and we should keep trying to get more, but if 5 out of 6 applications perform 15 % better, one performs 15 % worse (and to reiterate, that is hypothetical), we have a better support story for the future and the opportunity to backport further performance improvements from future V8 versions, then for me that’s enough to make this decision in favour of TF+I in Node 8 (again). |

|

I do not think we will ever had any objective data on this matter. This is unfortunate, but it is the nature of software: applications are mostly closed source. I think each one of us should make their tests on the closed source code they have access on and make an opinion about it. I am finding very hard to work with Node 8.1, as it is very inconsistent. Some things are crankshaft, some are turbofan, and there are several things that are already been fixed on 8.2-rc.1 (also because crankshaft has been disabled). The sooner we ship the better, but we should have consensus with this change. Nevertheless, I think we will have to float a significant amount of patches on top of V8 5.9 or 6.0. |

|

@addaleax I'm not disagreeing with any of the points above and I'm not trying to say that performance is the only element in the decision. I agree that some results being better, some worse, is just fine. On the other hand I do think it is something we need to acknowledge and do our best to address. What I think is important (others might disagree) is that we:

I think what @rvagg asked for in #146 (comment) is that somebody step up to co-ordinate/psh the work required to generate the data required and to document the case we are going to make that we have done the required due diligence. A first step might be to write up what we can say today based on the investigation/testing we have done so far and see how comfortable we would be as a group with that as our explanation for what we did to mitigate the risk. We might decide its good enough or that there are some additional things we should try to do between now and the LTS release. |

|

To add in a data point on the perf, I wrote a more real world, slimmed down and basic version of something we are running in production. (https://github.com/evanlucas/node-benchmarks/tree/master/api-gateway) It's pretty basic, but a lot more realistic than a I think the sooner we get 5.9+ into node 8.x, the better. Then we can actually identify real world performance problems (if they exist) and fix them before it goes LTS |

@mhdawson from my perspective #99 was a breakdown of exactly these concerns. We created a decision matrix, ran early benchmarks, and voted as a committee. While it was discussed that we would be willing to reverse the decision if there were major problems, I was not under the impression that the default would be for us to re-litigate the decision prior to landing. If anything the onus would be on individuals to bring forth problematic benchmarks, no vice versa. As far as communicating with the community, the original decision was followed up by a blog post which received very positive feedback. We should likely prepare another if / when the new pipeline lands to make clear expectations... but at this point I genuinely believe our engaged community has an idea of what to expect. from @rvagg's comment

This is somewhat frustrating for me. We had driven through a resolution and made a decision. We have been moving forward and working closely with the V8 team to get things working. At a certain point we are where we are, we can find problems, work towards fixing + improving them. Personally I have invested over 50 hours in various meetings, putting together documents, and driving consensus on this issue. I know there are various members of the Core Team + the V8 team who have put in similar hours, and potentially more. I would like to humbly request that individuals who would like to see us not ship with V8 6.0 take the time to put together the documentation that is being requested. If individuals do not have the time to do so we should move forward with our original decision, put together a blog post explaining the state of the decision, and spend our time improving the platform. |

|

Ref: for me nodejs/node#14131 is a good example of the low correlation between our micro-benchmarks and real-world impact. Something that should be kept in mind. The micro-benchmark suite has local value (evaluating a small change PR) but IMHO should not be the deciding factor in such big changes as V8 version changes. [free association, no disrespect regarding ant metaphor] "Is a frame of reference valid for a specific discussion": https://youtu.be/D3GVVkPb3OI?t=3m31s |

|

Just catching up on the conversation and no where near through all of the comments... however, I will say that I agree with @MylesBorins that I had assumed the decision had already been made. Yes, we needed to make sure there were no significant regressions but the intention has been to move forward with 6.0 in 8.x -- that's why we delayed the 8.0.0 release and landed all those ABI compatibility patches, and is the expectation that we set with users. At this point, I think the burden is on those who may not want 6.0 to land in 8.x to justify why we shouldn't rather than the other way around. |

|

I would like to propose that at this time we should close this issue and open a new issue to focus on the communications that will be required when we finally land TF+I. I believe we are less than two weeks away from a stable branch cut on 6.0. I've synced with the V8 team on the current problems present in nodejs/node#14004 and they will work with us on getting things fixed |

|

Sorry to hear there's a difference in expectations at the heart of this discussion. This, from @MylesBorins' blog post is the sentiment that I've been working on (emphasis mine):

Reviewing #99 I can see there are both the "give us the option" sentiment and also the "we will upgrade" sentiment represented in there. I suspect that some of us assumed that the 5.8 delay in 8.0.0 was to give us the option to go 5.9+ during 8.x should it make sense (requiring an additional decision point, which is what I've been trying to push for here), but others were assuming that we were voting for the clear path to upgrading to 5.9+ during 8.x and that 5.8 + the delay for 8.0.0 were just steps in that process. I'm not going to belabor this point as it seems that most people engaging here are just frustrated that we're even having the discussion and want to get on with TF+I, but if someone else wants to make this a voting point then please do so. However I think this whole issue points toward the need for greater clarity when doing this kind of non-trivial planning and ensuring that everyone involved is clear on what we're agreeing on and that our language is consistent. |

We have a really good record with our two LTS lines so far, I'm really concerned we're just rolling forward into a mini disaster for 8.0 LTS without weighing up the concerns.

@vsemozhetbyt and @targos so far appear to be the only ones who have investigated this, posted in nodejs/node#11851 which used a 5.9 candidate. Copied here for easier digestion:

arrays

arrays ("fixed" version by @targos)

assert

buffers

buffer-swap.js with --set n=1e6

child_process

domain

es

new es/foreach-bench.js

events

http

The most long

httpbenchmarks was a little shortened:check_is_http_token.jswas launched withset n=5e7instead of5e8andsimple.jswas launched with--runs 15instead of30.module

net

os

process

querystring

streams

streams (windows)

string_decoder

(

string-decoder.jswas launched withn=25e4instead of25e5)timers

timers (windows)

tls

url

util

vm

vm (windows)

I draw your attention particularly to buffers, events, http (!!), querystring, streams, string_decoder, url, util where we have significant losses. These all go to the core competences of Node and you can imagine the articles and blog posts about how far 8.0 LTS regressed.

We do have a couple of wins, es and timers in particular. The gains in es point the way toward what TurboFanScript might look like, or perhaps these are just doing a bit of catch-up on existing ES3/5 perf.

There is https://benchmarking.nodejs.org/, but we have a long way to go before it helps answer these kinds of questions. Currently they are mostly microbenchmarks too, plus AcmeAir (which is a questionable representation of real-world Node IMO) and then there's an Octane benchmark down the bottom where master has a non-trivial dip over 8.x. @nodejs/benchmarking may be able to speak with more authority to the representative nature of all those graphs though.

So, the basic question is what to do with 5.9+? We could go ahead and put it in 8.x. We could wait till 6.0 or 6.1 to do it. We could defer the upgrade till 9.x. But how do we go about answering this question given the complex dependencies (mainly re LTS)?

My personal inclination is to defer till 9.x so we have 2 full cycles to pick up the pieces before an LTS (similar strategy that Ubuntu has pulled off with its major reworks and component replacements—conservative in the LTS branches to lessen potential breakage and make support easier, then liberal in the in-between).

Can others please present alternative proposals with justifications and/or framing?

The text was updated successfully, but these errors were encountered: