
Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

* Add role: cluster_upgrade
* Add fragments


Showing 9 changed files with 457 additions and 0 deletions.


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,3 @@ | ||

| --- | ||

| major_changes: | ||

| - cluster_upgrade - Migrate role (https://github.com/oVirt/ovirt-ansible-collection/pull/94). |


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,52 @@ | ||

| oVirt Cluster Upgrade | ||

| ========= | ||

|

|

||

| The `cluster_upgrade` role iterates through all the hosts in a cluster and upgrades them. | ||

|

|

||

| Role Variables | ||

| -------------- | ||

|

|

||

| | Name | Default value | Description | | ||

| |-------------------------|-----------------------|-----------------------------------------------------| | ||

| | cluster_name | Default | Name of the cluster to be upgraded. | | ||

| | stopped_vms | UNDEF | List of virtual machines to stop before upgrading. | | ||

| | stop_non_migratable_vms <br/> <i>alias: stop_pinned_to_host_vms</i> | false | Specify whether to stop virtual machines pinned to the host being upgraded. If true, the pinned non-migratable virtual machines will be stopped and host will be upgraded, otherwise the host will be skipped. | | ||

| | upgrade_timeout | 3600 | Timeout in seconds to wait for host to be upgraded. | | ||

| | host_statuses | [UP] | List of host statuses. If a host is in any of the specified statuses then it will be upgraded. | | ||

| | host_names | [\*] | List of host names to be upgraded. | | ||

| | check_upgrade | false | If true, run check_for_upgrade action on all hosts before executing upgrade on them. If false, run upgrade only for hosts with available upgrades and ignore all other hosts. | | ||

| | reboot_after_upgrade | true | If true, reboot hosts after a successful upgrade. | | ||

| | use_maintenance_policy | true | If true, the cluster policy will be switched to cluster_maintenance during the upgrade; otherwise the policy will be left unchanged. | | ||

| | healing_in_progress_checks | 6 | Maximum number of attempts to check if gluster healing is still in progress. | | ||

| | healing_in_progress_check_delay | 300 | The delay in seconds between each attempt to check if gluster healing is still in progress. | | ||

| | wait_to_finish_healing | 5 | Delay in minutes to wait to finish gluster healing process after successful host upgrade. | | ||

|

|

||

| Example Playbook | ||

| ---------------- | ||

|

|

||

| ```yaml | ||

| --- | ||

| - name: oVirt infra | ||

| hosts: localhost | ||

| connection: local | ||

| gather_facts: false | ||

|

|

||

| vars: | ||

| engine_fqdn: ovirt-engine.example.com | ||

| engine_user: admin@internal | ||

| engine_password: 123456 | ||

| engine_cafile: /etc/pki/ovirt-engine/ca.pem | ||

|

|

||

| cluster_name: production | ||

| stopped_vms: | ||

| - openshift-master-0 | ||

| - openshift-node-0 | ||

| - openshift-node-image | ||

|

|

||

| roles: | ||

| - cluster_upgrade | ||

| collections: | ||

| - ovirt.ovirt | ||

| ``` | ||

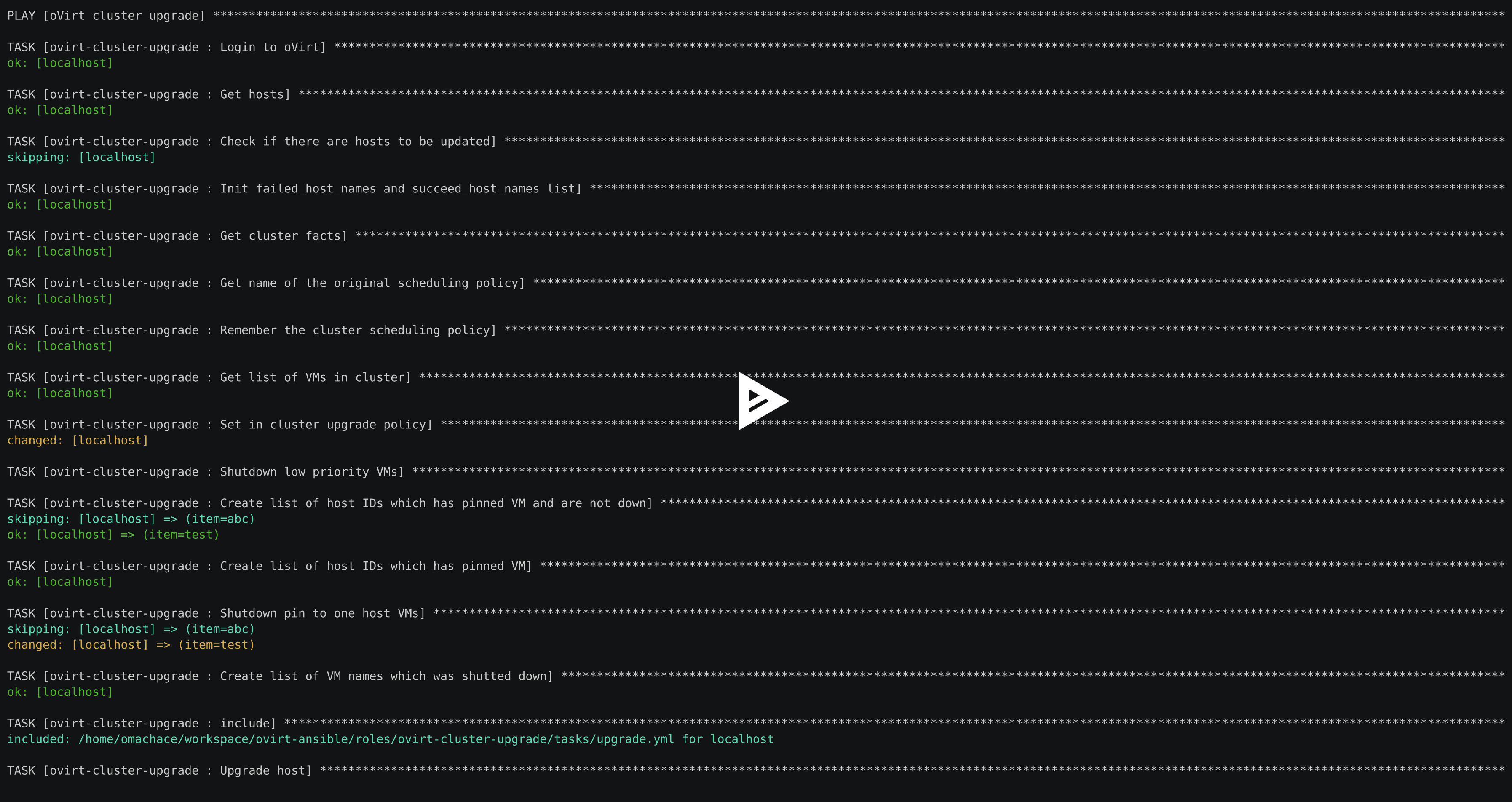

| [](https://asciinema.org/a/122760) |


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,16 @@ | ||

| --- | ||

| # stop_pinned_to_host_vms is alias for stop_non_migratable_vms | ||

| stop_non_migratable_vms: "{{ stop_pinned_to_host_vms | default(false) }}" | ||

| upgrade_timeout: 3600 | ||

| cluster_name: Default | ||

| check_upgrade: false | ||

| reboot_after_upgrade: true | ||

| use_maintenance_policy: true | ||

| host_statuses: | ||

| - up | ||

| host_names: | ||

| - '*' | ||

| pinned_vms_names: [] | ||

| healing_in_progress_checks: 6 | ||

| healing_in_progress_check_delay: 300 | ||

| wait_to_finish_healing: 5 |
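The alias on `stop_non_migratable_vms` can be checked in isolation. The following is a minimal sketch, assuming a throwaway playbook that copies the default expression into `vars` instead of loading the role; the play and task names are illustrative and not part of this commit.

```yaml
---
- name: Check the stop_pinned_to_host_vms alias (illustrative only)
  hosts: localhost
  connection: local
  gather_facts: false

  vars:
    # Caller sets only the alias, as an older playbook might.
    stop_pinned_to_host_vms: true
    # Same expression as in the role defaults above.
    stop_non_migratable_vms: "{{ stop_pinned_to_host_vms | default(false) }}"

  tasks:
    - name: Show the value the role would see
      debug:
        var: stop_non_migratable_vms
```

Removing `stop_pinned_to_host_vms` from `vars` should make the expression fall back to `false`, which matches the default documented in the README table.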


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,26 @@ | ||

| --- | ||

| - name: oVirt cluster upgrade | ||

| hosts: localhost | ||

| connection: local | ||

| gather_facts: false | ||

|

|

||

| vars_files: | ||

| # Contains encrypted `engine_password` variable using ansible-vault | ||

| - passwords.yml | ||

|

|

||

| vars: | ||

| engine_fqdn: ovirt.example.com | ||

| engine_user: admin@internal | ||

|

|

||

| cluster_name: mycluster | ||

| stop_non_migratable_vms: true | ||

| host_statuses: | ||

| - up | ||

| host_names: | ||

| - myhost1 | ||

| - myhost2 | ||

|

|

||

| roles: | ||

| - cluster_upgrade | ||

| collections: | ||

| - ovirt.ovirt |
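The login task added in this commit falls back to the `OVIRT_URL`, `OVIRT_USERNAME`, `OVIRT_PASSWORD`, `OVIRT_CAFILE` and `OVIRT_TOKEN` environment variables when the matching `engine_*` variables are not provided. A sketch of a playbook that relies on that fallback, assuming the variables are exported in the shell beforehand; the URL, user and cluster name are placeholders:

```yaml
---
# Assumed to be exported before running ansible-playbook (placeholder values):
#   export OVIRT_URL=https://ovirt.example.com/ovirt-engine/api
#   export OVIRT_USERNAME=admin@internal
#   export OVIRT_PASSWORD=...
#   export OVIRT_CAFILE=/etc/pki/ovirt-engine/ca.pem
- name: oVirt cluster upgrade with credentials from the environment
  hosts: localhost
  connection: local
  gather_facts: false

  vars:
    # No engine_* variables here; the role's login task reads OVIRT_* instead.
    cluster_name: mycluster
    check_upgrade: true

  roles:
    - cluster_upgrade
  collections:
    - ovirt.ovirt
```

This keeps the password out of the playbook and out of `passwords.yml`, at the cost of depending on the caller's environment.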


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,12 @@ | ||

| --- | ||

| # As an example, this file is kept in plaintext. If you want to | ||

| # encrypt this file, run the following command: | ||

| # | ||

| # $ ansible-vault encrypt passwords.yml | ||

| # | ||

| # It will ask you for a password, which you must then pass to | ||

| # ansible interactively when executing the playbook. | ||

| # | ||

| # $ ansible-playbook myplaybook.yml --ask-vault-pass | ||

| # | ||

| engine_password: 123456 |


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,24 @@ | ||

| - name: Get name of the original scheduling policy | ||

| ovirt_scheduling_policy_info: | ||

| auth: "{{ ovirt_auth }}" | ||

| id: "{{ cluster_info.ovirt_clusters[0].scheduling_policy.id }}" | ||

| check_mode: "no" | ||

| register: sp_info | ||

|

|

||

| - name: Remember the cluster scheduling policy | ||

| set_fact: | ||

| cluster_scheduling_policy: "{{ sp_info.ovirt_scheduling_policies[0].name }}" | ||

|

|

||

| - name: Remember the cluster scheduling policy properties | ||

| set_fact: | ||

| cluster_scheduling_policy_properties: "{{ cluster_info.ovirt_clusters[0].custom_scheduling_policy_properties }}" | ||

|

|

||

| - name: Set in cluster upgrade policy | ||

| ovirt_cluster: | ||

| auth: "{{ ovirt_auth }}" | ||

| name: "{{ cluster_name }}" | ||

| scheduling_policy: cluster_maintenance | ||

| register: cluster_policy | ||

| when: | ||

| - (api_info.ovirt_api.product_info.version.major >= 4 and api_info.ovirt_api.product_info.version.minor >= 2) or | ||

| (api_info.ovirt_api.product_info.version.major == 4 and api_info.ovirt_api.product_info.version.minor == 1 and api_info.ovirt_api.product_info.version.revision >= 4) |
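The field-by-field version condition is easy to get wrong. One possible alternative is Ansible's built-in `version` test (available under that name since Ansible 2.5). The sketch below assumes a hypothetical `engine_version` dictionary standing in for `api_info.ovirt_api.product_info.version`, and only covers the "4.2 or newer" half of the condition:

```yaml
---
- name: Compare the engine version with the version test (illustrative only)
  hosts: localhost
  connection: local
  gather_facts: false

  vars:
    # Hypothetical stand-in for api_info.ovirt_api.product_info.version
    engine_version:
      major: 4
      minor: 3

  tasks:
    - name: Report whether the cluster_maintenance policy can be used
      debug:
        msg: "Engine is 4.2 or newer"
      # Join major and minor into a string such as "4.3" and compare it as a version.
      when: (engine_version.major ~ '.' ~ engine_version.minor) is version('4.2', '>=')
```

Comparing a single version string avoids mixing up `major` and `minor`, and it also handles a future major version without extra clauses.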


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,208 @@ | ||

| --- | ||

| ## https://github.com/ansible/ansible/issues/22397 | ||

| ## Ansible 2.3 generates a WARNING when using {{ }} in defaults variables of role | ||

| ## this works around it until Ansible resolves the issue: | ||

| - name: Initialize variables | ||

| set_fact: | ||

| stop_non_migratable_vms: "{{ stop_non_migratable_vms }}" | ||

| provided_token: "{{ engine_token | default(lookup('env','OVIRT_TOKEN')) | default('') }}" | ||

|

|

||

| - block: | ||

| - name: Login to oVirt | ||

| ovirt_auth: | ||

| url: "{{ engine_url | default(lookup('env','OVIRT_URL')) | default(omit) }}" | ||

| username: "{{ engine_user | default(lookup('env','OVIRT_USERNAME')) | default(omit) }}" | ||

| hostname: "{{ engine_fqdn | default(lookup('env','OVIRT_HOSTNAME')) | default(omit) }}" | ||

| password: "{{ engine_password | default(lookup('env','OVIRT_PASSWORD')) | default(omit) }}" | ||

| ca_file: "{{ engine_cafile | default(lookup('env','OVIRT_CAFILE')) | default(omit) }}" | ||

| token: "{{ engine_token | default(lookup('env','OVIRT_TOKEN')) | default(omit) }}" | ||

| insecure: "{{ engine_insecure | default(true) }}" | ||

| when: ovirt_auth is undefined or not ovirt_auth | ||

| register: login_result | ||

| tags: | ||

| - always | ||

|

|

||

| - name: Get API info | ||

| ovirt_api_info: | ||

| auth: "{{ ovirt_auth }}" | ||

| register: api_info | ||

| check_mode: "no" | ||

|

|

||

| - name: Get cluster info | ||

| ovirt_cluster_info: | ||

| auth: "{{ ovirt_auth }}" | ||

| pattern: "name={{ cluster_name }}" | ||

| fetch_nested: True | ||

| nested_attributes: name | ||

| check_mode: "no" | ||

| register: cluster_info | ||

|

|

||

| - name: Set cluster upgrade status in progress | ||

| no_log: true | ||

| uri: | ||

| url: "{{ ovirt_auth.url }}/clusters/{{ cluster_info.ovirt_clusters[0].id }}/upgrade" | ||

| method: POST | ||

| body_format: json | ||

| validate_certs: false | ||

| headers: | ||

| Authorization: "Bearer {{ ovirt_auth.token }}" | ||

| body: | ||

| upgrade_action: start | ||

| register: upgrade_set | ||

| when: api_info.ovirt_api.product_info.version.major >= 4 and api_info.ovirt_api.product_info.version.minor >= 3 | ||

|

|

||

| - name: Log event cluster upgrade has started | ||

| ovirt_event: | ||

| auth: "{{ ovirt_auth }}" | ||

| state: present | ||

| description: "Cluster upgrade started for {{ cluster_name }}." | ||

| origin: "cluster_upgrade" | ||

| custom_id: "{{ 2147483647 | random | int }}" | ||

| severity: normal | ||

| cluster: "{{ cluster_info.ovirt_clusters[0].id }}" | ||

|

|

||

| - name: Get hosts | ||

| ovirt_host_info: | ||

| auth: "{{ ovirt_auth }}" | ||

| pattern: "cluster={{ cluster_name | mandatory }} {{ check_upgrade | ternary('', 'update_available=true') }} {{ host_names | map('regex_replace', '^(.*)$', 'name=\\1') | list | join(' or ') }} {{ host_statuses | map('regex_replace', '^(.*)$', 'status=\\1') | list | join(' or ') }}" | ||

| check_mode: "no" | ||

| register: host_info | ||

|

|

||

| - block: | ||

| - name: Print - no hosts to be updated | ||

| debug: | ||

| msg: "No hosts to be updated" | ||

|

|

||

| - name: Log event - no hosts to be updated | ||

| ovirt_event: | ||

| auth: "{{ ovirt_auth }}" | ||

| state: present | ||

| description: "There are no hosts to be updated for cluster {{ cluster_name }}." | ||

| origin: "cluster_upgrade" | ||

| custom_id: "{{ 2147483647 | random | int }}" | ||

| severity: normal | ||

| cluster: "{{ cluster_info.ovirt_clusters[0].id }}" | ||

| when: host_info.ovirt_hosts | length == 0 | ||

|

|

||

| - block: | ||

| - name: Log event about hosts that are marked to be updated | ||

| ovirt_event: | ||

| auth: "{{ ovirt_auth }}" | ||

| state: present | ||

| description: "Hosts {{ host_info.ovirt_hosts | map(attribute='name') | join(',') }} are marked to be updated in cluster {{ cluster_name }}." | ||

| origin: "cluster_upgrade" | ||

| custom_id: "{{ 2147483647 | random | int }}" | ||

| severity: normal | ||

| cluster: "{{ cluster_info.ovirt_clusters[0].id }}" | ||

|

|

||

| - include_tasks: cluster_policy.yml | ||

| when: use_maintenance_policy | ||

|

|

||

| - name: Get list of VMs in cluster | ||

| ovirt_vm_info: | ||

| auth: "{{ ovirt_auth }}" | ||

| pattern: "cluster={{ cluster_name }}" | ||

| check_mode: "no" | ||

| register: vms_in_cluster | ||

|

|

||

| - include_tasks: pinned_vms.yml | ||

|

|

||

| - name: Start ovirt job session | ||

| ovirt_job: | ||

| auth: "{{ ovirt_auth }}" | ||

| description: "Upgrading hosts" | ||

|

|

||

| # Update only those hosts that aren't in the list of hosts where VMs are pinned | ||

| # or if stop_non_migratable_vms is enabled, which means we stop pinned VMs | ||

| - include_tasks: upgrade.yml | ||

| with_items: | ||

| - "{{ host_info.ovirt_hosts }}" | ||

| when: "item.id not in host_ids or stop_non_migratable_vms" | ||

|

|

||

| - name: Finish ovirt job session | ||

| ovirt_job: | ||

| auth: "{{ ovirt_auth }}" | ||

| description: "Upgrading hosts" | ||

| state: finished | ||

|

|

||

| - name: Log event about cluster upgrade finished successfully | ||

| ovirt_event: | ||

| auth: "{{ ovirt_auth }}" | ||

| state: present | ||

| description: "Upgrade of cluster {{ cluster_name }} finished successfully." | ||

| origin: "cluster_upgrade" | ||

| severity: normal | ||

| custom_id: "{{ 2147483647 | random | int }}" | ||

| cluster: "{{ cluster_info.ovirt_clusters[0].id }}" | ||

|

|

||

| when: host_info.ovirt_hosts | length > 0 | ||

| rescue: | ||

| - name: Log event about cluster upgrade failed | ||

| ovirt_event: | ||

| auth: "{{ ovirt_auth }}" | ||

| state: present | ||

| description: "Upgrade of cluster {{ cluster_name }} failed." | ||

| origin: "cluster_upgrade" | ||

| custom_id: "{{ 2147483647 | random | int }}" | ||

| severity: error | ||

| cluster: "{{ cluster_info.ovirt_clusters[0].id }}" | ||

|

|

||

| - name: Update job failed | ||

| ovirt_job: | ||

| auth: "{{ ovirt_auth }}" | ||

| description: "Upgrading hosts" | ||

| state: failed | ||

|

|

||

| always: | ||

| - name: Set original cluster policy | ||

| ovirt_cluster: | ||

| auth: "{{ ovirt_auth }}" | ||

| name: "{{ cluster_name }}" | ||

| scheduling_policy: "{{ cluster_scheduling_policy }}" | ||

| scheduling_policy_properties: "{{ cluster_scheduling_policy_properties }}" | ||

| when: use_maintenance_policy and cluster_policy.changed | default(false) | ||

|

|

||

| - name: Start again stopped VMs | ||

| ovirt_vm: | ||

| auth: "{{ ovirt_auth }}" | ||

| name: "{{ item }}" | ||

| state: running | ||

| ignore_errors: "yes" | ||

| with_items: | ||

| - "{{ stopped_vms | default([]) }}" | ||

|

|

||

| - name: Start again pin to host VMs | ||

| ovirt_vm: | ||

| auth: "{{ ovirt_auth }}" | ||

| name: "{{ item }}" | ||

| state: running | ||

| ignore_errors: "yes" | ||

| with_items: | ||

| - "{{ pinned_vms_names | default([]) }}" | ||

| when: "stop_non_migratable_vms" | ||

|

|

||

| always: | ||

| - name: Set cluster upgrade status to finished | ||

| no_log: true | ||

| uri: | ||

| url: "{{ ovirt_auth.url }}/clusters/{{ cluster_info.ovirt_clusters[0].id }}/upgrade" | ||

| validate_certs: false | ||

| method: POST | ||

| body_format: json | ||

| headers: | ||

| Authorization: "Bearer {{ ovirt_auth.token }}" | ||

| body: | ||

| upgrade_action: finish | ||

| when: | ||

| - upgrade_set is defined and not upgrade_set.failed | default(false) | ||

| - api_info.ovirt_api.product_info.version.major >= 4 and api_info.ovirt_api.product_info.version.minor >= 3 | ||

|

|

||

| - name: Logout from oVirt | ||

| ovirt_auth: | ||

| state: absent | ||

| ovirt_auth: "{{ ovirt_auth }}" | ||

| when: | ||

| - login_result.skipped is defined and not login_result.skipped | ||

| - provided_token != ovirt_auth.token | ||

| tags: | ||

| - always |
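The search pattern in the "Get hosts" task is assembled from four variables, which makes it hard to read in one line. A minimal sketch that prints the resulting string without contacting an engine, assuming sample values for `cluster_name`, `host_names` and `host_statuses`; the expression itself is copied from the task above:

```yaml
---
- name: Preview the host search pattern (illustrative only)
  hosts: localhost
  connection: local
  gather_facts: false

  vars:
    # Sample values; in the role these come from defaults or the caller.
    cluster_name: mycluster
    check_upgrade: false
    host_names:
      - myhost1
      - myhost2
    host_statuses:
      - up

  tasks:
    - name: Show the pattern that would be passed to ovirt_host_info
      debug:
        msg: "cluster={{ cluster_name | mandatory }} {{ check_upgrade | ternary('', 'update_available=true') }} {{ host_names | map('regex_replace', '^(.*)$', 'name=\\1') | list | join(' or ') }} {{ host_statuses | map('regex_replace', '^(.*)$', 'status=\\1') | list | join(' or ') }}"
```

With `check_upgrade: false` the `update_available=true` term is included, so only hosts that already report an available upgrade are selected; setting it to `true` drops that term.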


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,16 @@ | ||

| --- | ||

| - name: Create list of IDs of hosts that have a running non-migratable VM and are not down | ||

| set_fact: | ||

| host_ids_items: "{{ item.host.id }}" | ||

| with_items: | ||

| - "{{ vms_in_cluster.ovirt_vms | default([]) }}" | ||

| when: | ||

| - "item['placement_policy']['affinity'] != 'migratable'" | ||

| - "item.host is defined" | ||

| loop_control: | ||

| label: "{{ item.name }}" | ||

| register: host_ids_result | ||

|

|

||

| - name: Create list of IDs of hosts that have a pinned VM | ||

| set_fact: | ||

| host_ids: "{{ host_ids_result.results | rejectattr('ansible_facts', 'undefined') | map(attribute='ansible_facts.host_ids_items') | list }}" |
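The two tasks above use a common Ansible idiom: register the results of a looped `set_fact`, then drop the skipped iterations (which carry no `ansible_facts`) and collect the rest. A self-contained sketch of the same idiom, assuming a hypothetical `sample_vms` list shaped like `vms_in_cluster.ovirt_vms`:

```yaml
---
- name: Collect hosts that run non-migratable VMs (illustrative only)
  hosts: localhost
  connection: local
  gather_facts: false

  vars:
    # Hypothetical sample data; real entries come from ovirt_vm_info.
    sample_vms:
      - name: vm-a
        placement_policy: {affinity: migratable}
        host: {id: host-1}
      - name: vm-b
        placement_policy: {affinity: pinned}
        host: {id: host-2}
      - name: vm-c  # not running, so it has no host
        placement_policy: {affinity: user_migratable}

  tasks:
    - name: Record the host ID for each running non-migratable VM
      set_fact:
        host_ids_items: "{{ item.host.id }}"
      loop: "{{ sample_vms }}"
      when:
        - item.placement_policy.affinity != 'migratable'
        - item.host is defined
      loop_control:
        label: "{{ item.name }}"
      register: host_ids_result

    - name: Keep only iterations that actually set the fact
      set_fact:
        host_ids: "{{ host_ids_result.results | rejectattr('ansible_facts', 'undefined') | map(attribute='ansible_facts.host_ids_items') | list }}"

    - name: Show the collected host IDs
      debug:
        var: host_ids
```

In this sample only `vm-b` satisfies both conditions, so the final list is expected to contain just its host; `vm-a` is migratable and `vm-c` has no host.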
