
Hyperparameter Optimization

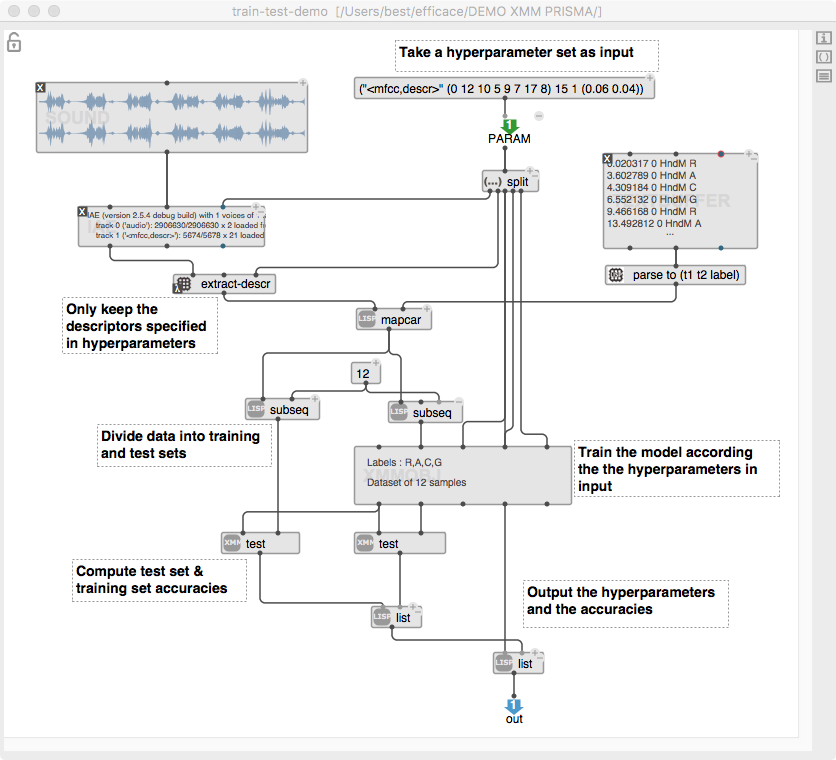

To improve a model's accuracy, its hyperparameters (descriptors, hidden states, gaussians, regularization) need to be tuned. However, the number of possible combinations is huge, and training and testing models takes time. To help automate this process, a genetic algorithm function has been implemented. It performs a directed search towards improving the accuracy of the model. To use this tool, you first need to create a patch that takes a hyperparameter set as input, trains and tests the model accordingly, and outputs the resulting accuracies.

The hyperparameter set must be a list of 5 elements:

- The Pipo module used for IAE extraction, if any

- The set of descriptors to keep (the function posn-match can be used to select several lists out of a matrix).

- The number of hidden states

- The number of gaussians

- The regularization values

For example, the hyperparameter set ("descr" (0 1 4 7) 15 2 (0.5 0.06)) specifies:

- The "descr" pipo module is used for the IAE extraction (outputs a matrix of 9 common descriptors)

- The descriptors 0, 1, 4, and 7 will be kept

- The model is trained with 15 hidden states, 2 gaussians per state, and 0.5 / 0.06 for relative / absolute regularization.
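The five-element layout above can be illustrated outside OpenMusic with a minimal Python sketch. The names `HyperparameterSet` and `parse_hyperparameter_set` are hypothetical and are not part of OM-XMM; the sketch only mirrors the order of the fields described above.

```python
from typing import NamedTuple, Sequence, Tuple


class HyperparameterSet(NamedTuple):
    """Hypothetical Python mirror of the 5-element list described above."""
    pipo_module: str                      # e.g. "descr"
    descriptors: Tuple[int, ...]          # indices of the descriptors to keep
    hidden_states: int
    gaussians: int                        # gaussians per state
    regularization: Tuple[float, float]   # (relative, absolute)


def parse_hyperparameter_set(raw: Sequence) -> HyperparameterSet:
    """Check the shape of a raw 5-element list and name its fields."""
    if len(raw) != 5:
        raise ValueError("a hyperparameter set must have exactly 5 elements")
    module, descriptors, states, gaussians, regularization = raw
    if len(regularization) != 2:
        raise ValueError("regularization must be (relative, absolute)")
    return HyperparameterSet(
        module, tuple(descriptors), states, gaussians, tuple(regularization)
    )


# Python equivalent of ("descr" (0 1 4 7) 15 2 (0.5 0.06))
example = parse_hyperparameter_set(["descr", [0, 1, 4, 7], 15, 2, [0.5, 0.06]])
```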

The output must be a list of two elements:

- The hyperparameter set (same as the input)

- A list of two accuracies, usually the testing set accuracy followed by the training set accuracy. The genetic algorithm varies the hyperparameter sets to increase the first accuracy in the list.
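As a rough illustration of this input/output contract, here is a minimal Python sketch. The real evaluation is an OpenMusic patch; `evaluate` and `fake_train_and_test` are hypothetical names, and the accuracies returned by the stub are placeholders, not measured results.

```python
def evaluate(hyperparameter_set, train_and_test):
    """Return [hyperparameter_set, [test_accuracy, train_accuracy]].

    `train_and_test` stands in for the part of the patch that trains and
    tests the model; it is assumed to return the two accuracies.
    """
    test_accuracy, train_accuracy = train_and_test(hyperparameter_set)
    # The testing accuracy comes first: it is the value to be increased.
    return [hyperparameter_set, [test_accuracy, train_accuracy]]


def fake_train_and_test(hyperparameter_set):
    """Stub used only to show the data flow; the values are arbitrary."""
    return 0.8, 0.9


params = ["descr", [0, 1, 4, 7], 15, 2, [0.5, 0.06]]
result = evaluate(params, fake_train_and_test)
```

The first element of `result` is the unchanged input set, and the second is the pair of accuracies.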

Example of patch to build to use the genetic algorithm

Once your patch follows these input and output formats, include it in a new patch as a lambda function. You can then create a gene-algo box, which takes the following inputs:

- FUN: your lambda patch

- FIRSTPARAMS: a list of hyperparameter sets to start with

- DESCNUM: the total number of descriptors (for the "descr" pipo module, for example, it is 9)

- Numchildren (optional): the number of hyperparameter sets created per "parent" at each iteration (default is 4)

Example of genetic algorithm patch

You can then evaluate the gene-algo box and see how the algorithm evolves in the console.
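To give an idea of what such a search can look like, here is a minimal Python sketch of a generic genetic search using the same four inputs. It is not the gene-algo implementation: the mutation steps, the selection rule (keep the best sets, parents included), the `iterations` and `seed` parameters, and the toy scoring function are all assumptions made for illustration.

```python
import random


def mutate(params, descnum, rng):
    """Return a slightly modified copy of a hyperparameter set (assumed scheme)."""
    module, descriptors, states, gaussians, (relative, absolute) = params
    kept = set(descriptors)
    toggled = rng.randrange(descnum)
    kept ^= {toggled}                 # add or remove one descriptor index
    if not kept:                      # always keep at least one descriptor
        kept = {toggled}
    states = max(1, states + rng.choice([-1, 0, 1]))
    gaussians = max(1, gaussians + rng.choice([-1, 0, 1]))
    relative *= rng.choice([0.5, 1.0, 2.0])
    absolute *= rng.choice([0.5, 1.0, 2.0])
    return [module, sorted(kept), states, gaussians, [relative, absolute]]


def genetic_search(fun, firstparams, descnum, numchildren=4, iterations=10, seed=0):
    """Keep the best sets, mutate them, and repeat; return the best result."""
    rng = random.Random(seed)
    population = [fun(params) for params in firstparams]
    for _ in range(iterations):
        candidates = list(population)  # parents stay in the running
        for parent_params, _accuracies in population:
            for _child in range(numchildren):
                candidates.append(fun(mutate(parent_params, descnum, rng)))
        # Rank by the first accuracy in the list, highest first.
        candidates.sort(key=lambda result: result[1][0], reverse=True)
        population = candidates[:len(firstparams)]
    return population[0]


def toy_fun(params):
    """Toy stand-in for the lambda patch: the score peaks at 10 states, 3 gaussians."""
    _module, _descriptors, states, gaussians, _regularization = params
    score = 1.0 / (1.0 + abs(states - 10) + abs(gaussians - 3))
    return [params, [score, score]]


start = ["descr", [0, 1, 4, 7], 15, 2, [0.5, 0.06]]
best = genetic_search(toy_fun, [start], descnum=9)
```

Because the parents compete with their children in this sketch, the best first accuracy never decreases from one iteration to the next.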

To better understand the use of the genetic algorithm, please see the gene-algo.opat patch.

If you have any questions or remarks about the OM-XMM library, don't hesitate to send an email to best@ircam.fr.