PyTorch implementation of MasaCtrl: Tuning-free Mutual Self-Attention Control for Consistent Image Synthesis and Editing

Mingdeng Cao, Xintao Wang, Zhongang Qi, Ying Shan, Xiaohu Qie, Yinqiang Zheng

MasaCtrl performs a variety of consistent, non-rigid image synthesis and editing tasks without fine-tuning or optimization.

- [2023/4/25] Code released.

- [2023/4/17] Paper is available here.

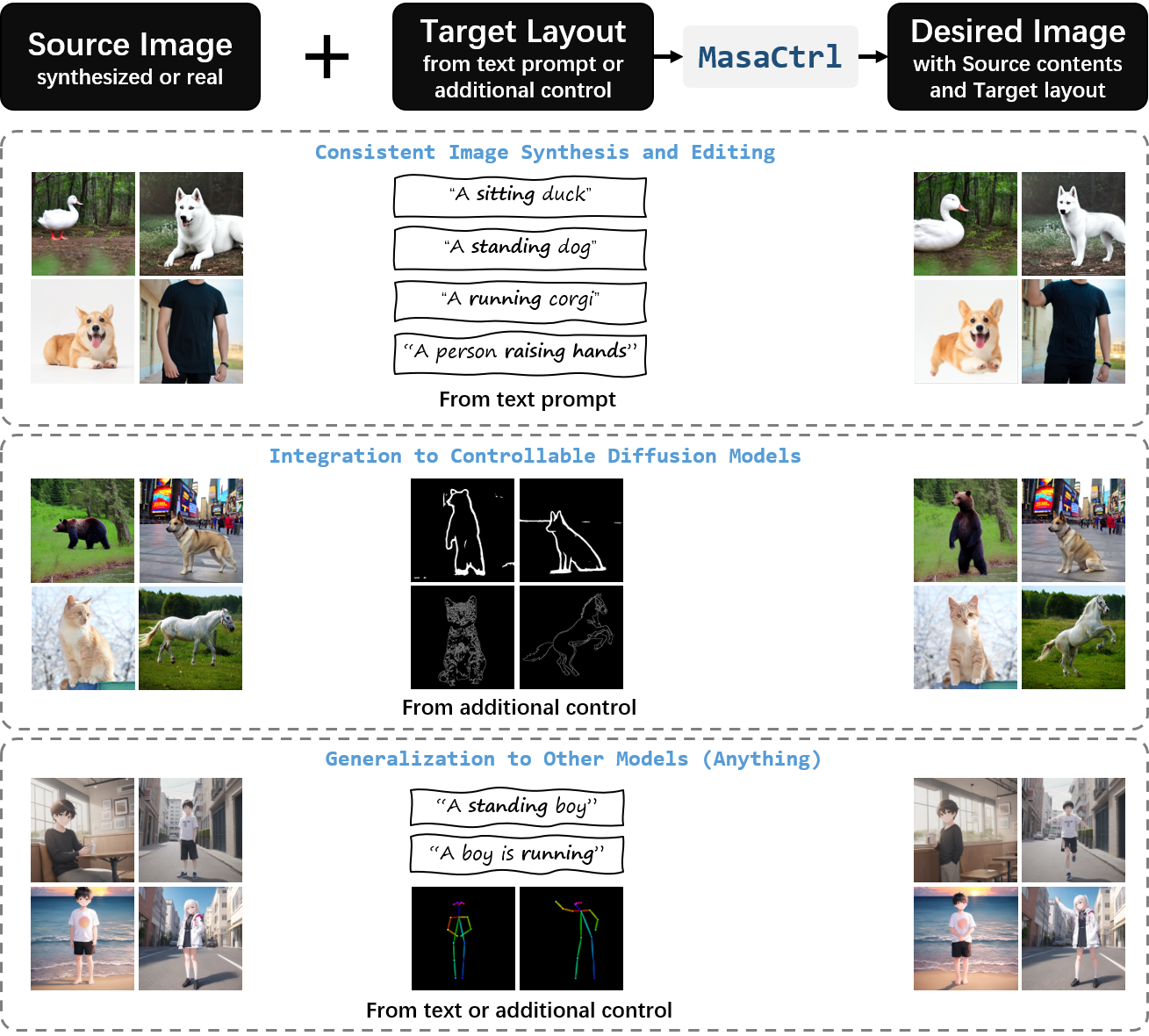

We propose MasaCtrl, a tuning-free method for consistent, non-rigid image synthesis and editing. The key idea is to use Mutual Self-Attention Control to combine the contents of the source image with the layout synthesized from the text prompt and additional controls, producing the desired synthesized or edited image.
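As a rough illustration of this idea (a minimal sketch based on the paper's description, not the code in this repository): in mutual self-attention, the queries come from the target image's features while the keys and values are taken from the source image's features, so the target layout pulls in source content. The function names, tensor names, and toy shapes below are hypothetical.

```python
import torch


def attention(q, k, v):
    """Scaled dot-product attention over the last two dimensions."""
    scale = q.shape[-1] ** -0.5
    weights = torch.softmax(q @ k.transpose(-2, -1) * scale, dim=-1)
    return weights @ v


def mutual_self_attention(q_target, k_source, v_source):
    """Target queries attend to source keys/values instead of the target's own."""
    return attention(q_target, k_source, v_source)


# Toy features (assumed shapes): 6 target tokens, 4 source tokens, 8 channels.
torch.manual_seed(0)
q_tgt = torch.randn(6, 8)
k_src, v_src = torch.randn(4, 8), torch.randn(4, 8)
out = mutual_self_attention(q_tgt, k_src, v_src)
print(out.shape)  # one output row per target token
```

Each output row is a weighted mix of source value rows, which is why the edited image keeps the source contents while the queries carry the new layout.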

MasaCtrl can perform prompt-based image synthesis and editing that changes the layout while maintaining the contents of the source image.

The target layout is synthesized directly from the target prompt.

Directly modifying the text prompt often fails to produce the target layout of the desired image, so we further integrate our method into existing controllable diffusion pipelines (such as T2I-Adapter and ControlNet) to obtain stable synthesis and editing results.

The target layout is controlled by additional guidance.

Our method also generalizes well to other Stable-Diffusion-based models.

We implement our method on the diffusers code base, with a code structure similar to Prompt-to-Prompt. The code runs on Python 3.8.5 with PyTorch 1.11. A Conda environment is highly recommended.

pip install -r requirements.txt
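After installing, a quick check like the following can confirm which interpreter and package versions are active. This is only a sketch: the helper name is hypothetical, and the package list (torch and diffusers) is an assumption taken from the libraries named above, not from the contents of requirements.txt.

```python
import sys
from importlib import metadata


def environment_report(packages=("torch", "diffusers")):
    """Return the Python version and each package's installed version (None if missing)."""
    report = {"python": ".".join(str(part) for part in sys.version_info[:3])}
    for name in packages:
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = None
    return report


for name, version in environment_report().items():
    print(f"{name}: {version or 'not installed'}")
```

Compare the printed versions against the tested setup (Python 3.8.5, PyTorch 1.11) if something fails to import or run.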

Stable Diffusion: We mainly conduct experiments on Stable Diffusion v1-4, but our method can generalize to other versions (such as v1-5).

You can download these checkpoints from their official repository and from Hugging Face.

Personalized Models: You can download personalized models from CIVITAI or train your own customized models.

To run synthesis with MasaCtrl, a single GPU with at least 16 GB of VRAM is required.
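To see how much memory your GPU reports before running, a small check along these lines may help. It is a sketch, not part of this repository; the helper name `gpu_memory_gb` is hypothetical. Note that the total reported by the driver is often slightly below the card's nominal size, so treat the 16 GB figure as approximate.

```python
import torch


def gpu_memory_gb(device_index=0):
    """Return total memory of a CUDA device in GB (10**9 bytes), or None without CUDA."""
    if not torch.cuda.is_available():
        return None
    props = torch.cuda.get_device_properties(device_index)
    return props.total_memory / 1e9


total = gpu_memory_gb()
if total is None:
    print("No CUDA device detected.")
else:
    print(f"GPU 0 reports {total:.1f} GB of memory (at least 16 GB is recommended).")
```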

The notebook playground.ipynb provides the synthesis samples.

To be released soon.

We thank the authors of the awesome research works Prompt-to-Prompt and T2I-Adapter.

@misc{cao2023masactrl,
  title={MasaCtrl: Tuning-Free Mutual Self-Attention Control for Consistent Image Synthesis and Editing},
  author={Mingdeng Cao and Xintao Wang and Zhongang Qi and Ying Shan and Xiaohu Qie and Yinqiang Zheng},
  year={2023},
  eprint={2304.08465},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

If you have any comments or questions, please open a new issue or feel free to contact Mingdeng Cao and Xintao Wang.