Exporting Segment Anything models to different formats.

The Segment Anything repository does not provide a way to export the image encoder to ONNX format. There are some pull requests for this feature, but they have not been accepted by the SAM authors. Therefore, I created this tool as an easy way to export Segment Anything models to different output formats.

Supported models:

- SAM ViT-B

- SAM ViT-L

- SAM ViT-H

- MobileSAM*

From PyPI:

```bash
pip install samexporter
```

From source:

```bash
git clone https://github.com/vietanhdev/samexporter
cd samexporter
pip install -e .
```

- Download all models from the Segment Anything repository (*.pth).

- Download MobileSAM from https://github.com/ChaoningZhang/MobileSAM.

Place the checkpoints in an original_models folder:

```
original_models
   + sam_vit_b_01ec64.pth
   + sam_vit_h_4b8939.pth
   + sam_vit_l_0b3195.pth
   + mobile_sam.pt
   ...
```
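Before exporting, it can help to confirm that the checkpoints are where the export commands expect them. The sketch below only checks for the file names listed in the layout above; `find_missing_checkpoints` is a hypothetical helper for illustration and is not part of samexporter.

```python
from pathlib import Path

# File names taken from the original_models layout shown above.
EXPECTED_CHECKPOINTS = (
    "sam_vit_b_01ec64.pth",
    "sam_vit_h_4b8939.pth",
    "sam_vit_l_0b3195.pth",
    "mobile_sam.pt",
)


def find_missing_checkpoints(model_dir="original_models", expected=EXPECTED_CHECKPOINTS):
    """Return the names in `expected` that are not regular files inside `model_dir`.

    Hypothetical helper for illustration only; it is not part of samexporter.
    """
    root = Path(model_dir)
    return [name for name in expected if not (root / name).is_file()]
```

For example, `find_missing_checkpoints()` returns an empty list once all four files are in place, and otherwise lists the ones still to download.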

- Convert the SAM-H encoder to ONNX format:

```bash
python -m samexporter.export_encoder --checkpoint original_models/sam_vit_h_4b8939.pth \
    --output output_models/sam_vit_h_4b8939.encoder.onnx \
    --model-type vit_h \
    --quantize-out output_models/sam_vit_h_4b8939.encoder.quant.onnx \
    --use-preprocess
```

- Convert the SAM-H decoder to ONNX format:

```bash
python -m samexporter.export_decoder --checkpoint original_models/sam_vit_h_4b8939.pth \
    --output output_models/sam_vit_h_4b8939.decoder.onnx \
    --model-type vit_h \
    --quantize-out output_models/sam_vit_h_4b8939.decoder.quant.onnx \
    --return-single-mask
```

Remove --return-single-mask if you want the decoder to return multiple masks.
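The encoder and decoder commands above differ only in the checkpoint path, the output names, and `--model-type`, so they are easy to script. The sketch below reuses only the flags shown in those commands. The `vit_b` and `vit_l` model-type values are an assumption (they mirror the `vit_h` value used above and the SAM model names), and `build_export_commands` and `run_all_exports` are hypothetical helpers, not part of samexporter.

```python
import subprocess
import sys
from pathlib import Path

# Checkpoint file per --model-type value. "vit_h" is taken from the commands above;
# "vit_b" and "vit_l" are ASSUMED values that mirror the SAM model names.
CHECKPOINTS = {
    "vit_b": "sam_vit_b_01ec64.pth",
    "vit_l": "sam_vit_l_0b3195.pth",
    "vit_h": "sam_vit_h_4b8939.pth",
}


def build_export_commands(model_type, src_dir="original_models",
                          out_dir="output_models", single_mask=True):
    """Return [encoder_cmd, decoder_cmd] as argument lists (hypothetical helper)."""
    checkpoint = Path(src_dir) / CHECKPOINTS[model_type]
    stem = Path(out_dir) / checkpoint.stem  # e.g. output_models/sam_vit_h_4b8939
    common = ["--checkpoint", str(checkpoint), "--model-type", model_type]
    encoder = [sys.executable, "-m", "samexporter.export_encoder", *common,
               "--output", f"{stem}.encoder.onnx",
               "--quantize-out", f"{stem}.encoder.quant.onnx",
               "--use-preprocess"]
    decoder = [sys.executable, "-m", "samexporter.export_decoder", *common,
               "--output", f"{stem}.decoder.onnx",
               "--quantize-out", f"{stem}.decoder.quant.onnx"]
    if single_mask:
        # Leave this flag out to have the decoder return multiple masks.
        decoder.append("--return-single-mask")
    return [encoder, decoder]


def run_all_exports(single_mask=True):
    """Export the encoder and decoder for every model type in CHECKPOINTS."""
    Path("output_models").mkdir(exist_ok=True)
    for model_type in CHECKPOINTS:
        for cmd in build_export_commands(model_type, single_mask=single_mask):
            subprocess.run(cmd, check=True)  # raise on the first failed export
```

Calling `run_all_exports()` runs the encoder and decoder export for each of the three checkpoints and stops at the first command that fails. Using `sys.executable` keeps the exports on the same Python environment the script runs in, which matches what `python -m` does in the commands above.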

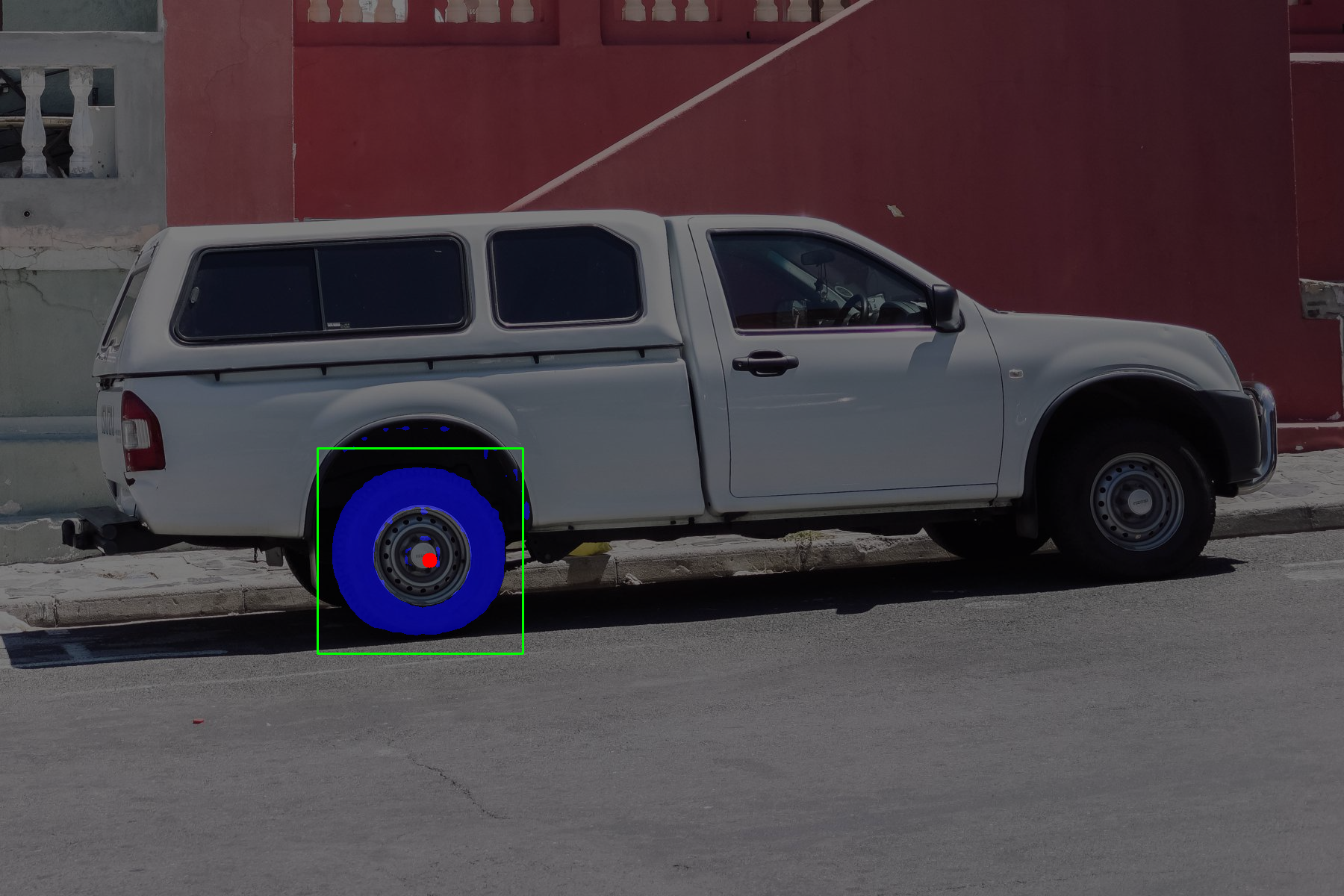

- Run inference with the exported ONNX models:

```bash
python -m samexporter.inference \
    --encoder_model output_models/sam_vit_h_4b8939.encoder.onnx \
    --decoder_model output_models/sam_vit_h_4b8939.decoder.onnx \
    --image images/truck.jpg \
    --prompt images/truck_prompt.json \
    --output output_images/truck.png \
    --show

python -m samexporter.inference \
    --encoder_model output_models/sam_vit_h_4b8939.encoder.onnx \
    --decoder_model output_models/sam_vit_h_4b8939.decoder.onnx \
    --image images/plants.png \
    --prompt images/plants_prompt1.json \
    --output output_images/plants_01.png \
    --show

python -m samexporter.inference \
    --encoder_model output_models/sam_vit_h_4b8939.encoder.onnx \
    --decoder_model output_models/sam_vit_h_4b8939.decoder.onnx \
    --image images/plants.png \
    --prompt images/plants_prompt2.json \
    --output output_images/plants_02.png \
    --show
```

Short options:

- Convert all of Meta's models to ONNX format:

```bash
bash convert_all_meta_sam.sh
```

- Convert MobileSAM to ONNX format:

```bash
bash convert_mobile_sam.sh
```

Tips:

- Use "quantized" models for faster inference and a smaller model size. However, their accuracy may be lower than that of the original models.

- Among Meta's models, SAM-B is the most lightweight, but it has the lowest accuracy. SAM-H is the most accurate, but it has the largest model size. SAM-L sits between them and is a good trade-off between accuracy and model size.
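To see how much disk space quantization saves on your own exports, a small standard-library sketch can pair each `.quant.onnx` file with its unquantized counterpart, using the naming from the export commands (for example `sam_vit_h_4b8939.encoder.onnx` and `sam_vit_h_4b8939.encoder.quant.onnx`), and report the size ratio. `quantization_size_report` is a hypothetical helper, and it says nothing about accuracy, which still has to be checked on your own images.

```python
from pathlib import Path


def quantization_size_report(model_dir="output_models"):
    """Map each quantized file name to (original_bytes, quantized_bytes, ratio).

    Hypothetical helper: pairs NAME.quant.onnx with NAME.onnx in the same folder
    and skips quantized files whose original is missing.
    """
    report = {}
    for quant in sorted(Path(model_dir).glob("*.quant.onnx")):
        original = quant.with_name(quant.name.replace(".quant.onnx", ".onnx"))
        if not original.is_file():
            continue
        orig_size = original.stat().st_size
        quant_size = quant.stat().st_size
        ratio = quant_size / orig_size if orig_size else float("nan")
        report[quant.name] = (orig_size, quant_size, ratio)
    return report
```

After running the export commands, `quantization_size_report()` returns one entry per quantized encoder or decoder found in `output_models`; a ratio below 1.0 means the quantized file is smaller.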

This package was originally developed for the auto-labeling feature in the AnyLabeling project. However, you can use it for other purposes as well.

This project is licensed under the MIT License - see the LICENSE file for details.