-

Notifications

You must be signed in to change notification settings - Fork 2

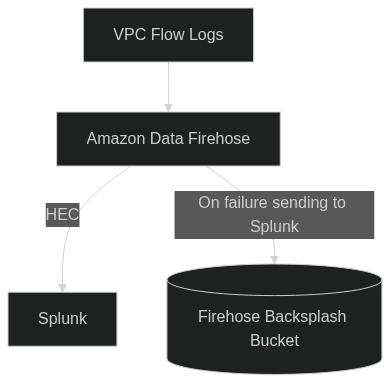

VPC Flow Logs Firehose Method

VPC Flow Logs can be sent directly to Amazon Data Firehose, and from there to Splunk, bypassing CloudWatch Logs and S3. The main advantage is cost savings; the disadvantage is that the only copy of the VPC Flow Logs will exist in Splunk. If the Firehose cannot reach Splunk, events are sent to an S3 backsplash bucket for later retrieval.

Mermaid code for a visual overview:

graph TB;
    vpcflowlogs[VPC Flow Logs]
    kdf[Amazon Data Firehose]
    splunk[Splunk]
    s3Backsplash[(Firehose Backsplash Bucket)]
    vpcflowlogs-->kdf
    kdf-->|HEC|splunk
    kdf-->|On failure sending to Splunk|s3Backsplash

A Splunk Dashboard Studio dashboard for checking the health of the events coming in through this method is available at https://github.com/splunk/splunk-aws-gdi-toolkit/blob/main/VPCFlowLogs-Firehose-Resources/splunkDashboard.json.

The deployment instructions for this ingestion method depend on the type of data (essentially, the sourcetype) you want to send to Splunk.

These instructions are for configuring AWS VPC Flow Logs to be sent to Splunk. VPC Flow Logs are a logging source designed to capture network traffic metadata from AWS VPCs, subnets, and/or elastic network interfaces (ENIs). They can be thought of as the cloud equivalent of a combination of NetFlow and basic firewall logs on on-premises networks. They are useful from a security perspective to monitor for unwanted or unauthorized network access, from a compliance perspective to record network traffic, and from an operational perspective to troubleshoot networking issues.
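To make the record format concrete, the following minimal sketch labels the fields of a single flow log record. It assumes the default (version 2) flow log format, whose 14 space-separated fields are documented by AWS; the function name summarize_flow_record is hypothetical and not part of the toolkit, and custom log formats would need a different field mapping. Inside Splunk, the aws:cloudwatchlogs:vpcflow sourcetype from the Splunk Add-on for AWS is intended to handle this parsing for you.

```shell
# Hypothetical helper (not part of the toolkit): label the fields of one
# VPC Flow Log record. Assumes the default version 2 format, whose 14
# space-separated fields are, in order:
#   version account-id interface-id srcaddr dstaddr srcport dstport
#   protocol packets bytes start end action log-status
summarize_flow_record() {
  printf '%s\n' "$1" | awk '
    NF != 14 {
      # Custom formats or truncated lines do not match the default layout.
      print "expected 14 fields, got " NF | "cat 1>&2"
      exit 1
    }
    {
      # protocol is an IANA number (6 = TCP); action is ACCEPT or REJECT.
      printf "eni=%s src=%s:%s dst=%s:%s proto=%s bytes=%s action=%s status=%s\n",
        $3, $4, $6, $5, $7, $8, $10, $13, $14
    }'
}

# Example:
# summarize_flow_record "2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK"
```

For the example record, this prints the interface, source and destination address/port pairs, protocol, byte count, action, and log status on one line, which is roughly what the add-on's field extractions expose as separate fields.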

1. Install the Splunk Add-on for Amazon Web Services (AWS).
   - If you use Splunk Cloud, install the add-on on the ad-hoc search head or ad-hoc search head cluster.
   - If you do not use Splunk Cloud, install the add-on on the HEC endpoint (probably either the indexer(s) or a heavy forwarder), your indexer(s), and your search head(s).

2. Configure any firewall rules in front of Splunk to receive data from Amazon Data Firehose.
   - Refer to the AWS documentation for the required IP ranges. Make sure to add the IP ranges for the region you'll be deploying the CloudFormation template to.
   - If you use Splunk Cloud, add the relevant IP range(s) to the HEC feature in the IP allowlist.
   - If you do not use Splunk Cloud, consult your Splunk Architect, Splunk Admin, and/or network team to determine which firewall rules to change and where.

3. Create a HEC token in Splunk to ingest the events, with these specific settings:
   - Enable indexer acknowledgment.
   - Set the source name override to something descriptive, such as aws_vpc_flow_logs.
   - Set the sourcetype to aws:cloudwatchlogs:vpcflow.
   - Select the index you want the data to be sent to.
   - Amazon Data Firehose checks the format of the token, so we recommend letting Splunk generate it rather than setting it manually through inputs.conf.
   - If you use Splunk Cloud, follow these instructions.
   - If you do not use Splunk Cloud, create the HEC token on the Splunk instance that will receive this data (probably either the indexer(s) or a heavy forwarder). Instructions for this can be found here.

4. Deploy the VPCFlowLogs-Firehose-Resources/kdfToSplunk.yml CloudFormation template in each region of each account that VPC Flow Logs need to be collected from. This creates the resources needed to receive the VPC Flow Logs and send them to Splunk over HEC. For VPC Flow Logs specifically, set these parameters:
   - splunkHECEndpoint: https://{{url}}:{{port}}
     - For Splunk Cloud, this will be https://http-inputs-firehose-{{stackName}}.splunkcloud.com:443, where {{stackName}} is the name of your Splunk Cloud stack.
     - For non-Splunk Cloud deployments, consult your Splunk Architect or Splunk Admin.
   - splunkHECToken: The value of the HEC token from step 3.
   - stage: If this is going into production, set this to something like prod.

5. Configure VPC Flow Logs to be sent to the Amazon Data Firehose deployed in step 4. Example code is available in VPCFlowLogs-Firehose-Resources/vpcFlowLogsToKDF.yml. With this CloudFormation template, set the following parameters:
   - kdfArn: The ARN of the Amazon Data Firehose configured in step 4.
   - vpcFlowLogResourceId: The ID of the subnet, network interface, or VPC for which you want to create a flow log. This is taken straight from the parameter named resourceId in the AWS documentation.
   - vpcFlowLogResourceType: The type of resource for which to create the flow log. Select "NetworkInterface" if you specified an ENI for vpcFlowLogResourceId, "Subnet" if you specified a subnet ID, or "VPC" if you specified a VPC ID.
   - stage: If this is going into production, set this to something like prod.

6. Verify that the data is being ingested. The easiest way to do this is to wait a few minutes, then run a search like index={{splunkIndex}} sourcetype=aws:cloudwatchlogs:vpcflow | head 100, where {{splunkIndex}} is the destination index selected in step 3.
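The parameter values in steps 3 to 5 follow simple patterns, so a few shell helpers can catch mistakes before a stack is deployed. This is a minimal sketch, not part of the toolkit: every function name is hypothetical, the token check assumes the GUID-style (8-4-4-4-12 hexadecimal) format Splunk uses for generated HEC tokens, and firehose_arn assumes the AWS CLI is configured for the account and region where kdfToSplunk.yml was deployed and that you already know the delivery stream's name (for example, from the Firehose console).

```shell
# Hypothetical helpers for the CloudFormation parameters; not part of the toolkit.

# splunkHECEndpoint for Splunk Cloud, following the documented pattern
# https://http-inputs-firehose-{{stackName}}.splunkcloud.com:443
splunk_cloud_firehose_endpoint() {
  printf 'https://http-inputs-firehose-%s.splunkcloud.com:443\n' "$1"
}

# Succeeds if the token has the GUID shape of a Splunk-generated
# HEC token (8-4-4-4-12 hexadecimal characters); fails otherwise.
is_guid_hec_token() {
  printf '%s\n' "$1" |
    grep -Eq '^[0-9A-Fa-f]{8}(-[0-9A-Fa-f]{4}){3}-[0-9A-Fa-f]{12}$'
}

# vpcFlowLogResourceType derived from the prefix of vpcFlowLogResourceId.
flow_log_resource_type() {
  case "$1" in
    eni-*)    echo NetworkInterface ;;
    subnet-*) echo Subnet ;;
    vpc-*)    echo VPC ;;
    *)        echo "unrecognized resource ID: $1" >&2; return 1 ;;
  esac
}

# kdfArn looked up from the delivery stream name (assumed to be known).
firehose_arn() {
  aws firehose describe-delivery-stream \
    --delivery-stream-name "$1" \
    --query 'DeliveryStreamDescription.DeliveryStreamARN' \
    --output text
}

# Example:
# is_guid_hec_token 01234567-89ab-cdef-0123-456789abcdef && echo "token format OK"
# flow_log_resource_type vpc-0123456789abcdef0   # prints VPC
```

Checking the resource type from the ID prefix keeps vpcFlowLogResourceId and vpcFlowLogResourceType from drifting apart, which is an easy mistake when both are typed by hand.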
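If you prefer to run the verification search from a terminal, Splunk's REST API can stream search results back through the search/jobs/export endpoint. This is a hedged sketch: verify_vpcflow_ingest is a hypothetical name, it assumes the Splunk management port (8089 by default) is reachable from where you run it, which on Splunk Cloud usually requires separate REST API access, and it assumes credentials are supplied in the SPLUNK_USER and SPLUNK_PASSWORD environment variables.

```shell
# Hypothetical helper: run the verification search over the Splunk REST API.
# $1: management URL, e.g. https://splunk.example.com:8089 (placeholder)
# $2: destination index selected for the HEC token
# Credentials come from SPLUNK_USER and SPLUNK_PASSWORD (assumed variables).
verify_vpcflow_ingest() {
  curl --silent --show-error \
    -u "${SPLUNK_USER}:${SPLUNK_PASSWORD}" \
    "$1/services/search/jobs/export" \
    --data-urlencode "search=search index=$2 sourcetype=aws:cloudwatchlogs:vpcflow | head 100" \
    -d output_mode=json
}

# Example (add -k to curl only if the management port uses a self-signed certificate):
# SPLUNK_USER=admin SPLUNK_PASSWORD='<password>' verify_vpcflow_ingest https://splunk.example.com:8089 main
```

An empty response after a few minutes points back to the troubleshooting checks below rather than to the search itself.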

Example deployment in us-west-2:

- Deploy kdfToSplunk.yml:

aws cloudformation create-stack --region us-west-2 --stack-name splunk-vpcflowlog-kdf-to-splunk --capabilities CAPABILITY_NAMED_IAM --template-body file://kdfToSplunk.yml --parameters ParameterKey=cloudWatchAlertEmail,ParameterValue=jsmith@contoso.com ParameterKey=contact,ParameterValue=jsmith@contoso.com ParameterKey=splunkHECEndpoint,ParameterValue=https://http-inputs-firehose-contoso.splunkcloud.com:443 ParameterKey=splunkHECToken,ParameterValue=01234567-89ab-cdef-0123-456789abcdef ParameterKey=stage,ParameterValue=prod

- Deploy vpcFlowLogsToKDF.yml:

aws cloudformation create-stack --region us-west-2 --stack-name splunk-vpcflow-to-kdf --template-body file://vpcFlowLogsToKDF.yml --parameters ParameterKey=contact,ParameterValue=jsmith@contoso.com ParameterKey=kdfArn,ParameterValue=arn:aws:firehose:us-west-2:0123456789012:deliverystream/0123456789012-us-west-2-vpcflow2-firehose ParameterKey=vpcFlowLogResourceId,ParameterValue=vpc-0123456789abcdef0 ParameterKey=vpcFlowLogResourceType,ParameterValue=VPC ParameterKey=stage,ParameterValue=prod

To uninstall this ingestion method:

- For each VPC Flow Log configuration, remove the flow log or point it to a destination other than Amazon Data Firehose.

- Empty the bucket named {{accountId}}-{{region}}-vpcflowlog-firehose-backsplash-bucket of all files.

- Delete the CloudFormation stack that deployed the resources in kdfToSplunk.yml.

- Delete the HEC token that was deployed to Splunk to ingest the data.

- Remove any firewall exceptions that were created for Amazon Data Firehose to send to Splunk over HEC.
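The AWS side of the uninstall steps can be scripted with the AWS CLI. This is a minimal sketch under several assumptions: the flow log was created with vpcFlowLogsToKDF.yml (so deleting that stack removes it), the stack names default to those used in the example deployment, and the backsplash bucket follows the {{accountId}}-{{region}}-vpcflowlog-firehose-backsplash-bucket naming pattern. If the bucket has versioning enabled, aws s3 rm does not remove older object versions and the stack deletion may still fail. Deleting the HEC token and the firewall exceptions remains manual.

```shell
# Hypothetical teardown script mirroring the uninstall steps; not part of the toolkit.
# $1: region; $2/$3: optional stack names (defaults match the example deployment).
teardown_vpcflow_firehose() {
  local region="$1"
  local flow_stack="${2:-splunk-vpcflow-to-kdf}"
  local kdf_stack="${3:-splunk-vpcflowlog-kdf-to-splunk}"
  local account_id bucket

  # 1. Remove the flow log by deleting the stack that created it.
  aws cloudformation delete-stack --region "$region" --stack-name "$flow_stack" || return 1
  aws cloudformation wait stack-delete-complete --region "$region" --stack-name "$flow_stack" || return 1

  # 2. Empty the backsplash bucket; CloudFormation cannot delete a non-empty bucket.
  account_id=$(aws sts get-caller-identity --query Account --output text) || return 1
  bucket="${account_id}-${region}-vpcflowlog-firehose-backsplash-bucket"
  aws s3 rm "s3://${bucket}" --recursive --region "$region" || return 1

  # 3. Delete the stack that deployed kdfToSplunk.yml.
  aws cloudformation delete-stack --region "$region" --stack-name "$kdf_stack" || return 1
  aws cloudformation wait stack-delete-complete --region "$region" --stack-name "$kdf_stack"
}

# Example: teardown_vpcflow_firehose us-west-2
```

Removing the flow log first stops new records from reaching the Firehose, so nothing new lands in the backsplash bucket while it is being emptied.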

- What if I want to send data to Edge Processor? The recommended way to get the data to Edge Processor is to set up the architecture defined in the Edge Processor SVA (Splunk Validated Architecture), with Firehose acting as the HEC source. You will need to configure a signed certificate on the load balancer, because Amazon Data Firehose requires its destination to have a signed certificate and an appropriate DNS entry. The DNS Round Robin architecture can also be used.

- What if I want to send data to Ingest Processor? First send the data to the Splunk Cloud environment as usual. Then, when creating the pipeline, specify a partition that matches the data being sent from the Splunk AWS GDI Toolkit. For more information, refer to the Ingest Processor documentation.

- The CloudFormation template fails to deploy.
  - Verify that the role you are using to deploy the CloudFormation template has permission to create the resources in the template.
  - Verify that the parameters are set correctly on the stack.
  - In the CloudFormation console, check the events on the failed stack for hints about where it failed.
  - See also the official Troubleshooting CloudFormation documentation.
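To read a failed stack's events from the command line instead of the console, describe-stack-events can be filtered down to the failed resources. A sketch, with stack_failure_reasons as a hypothetical name; the JMESPath query keeps every event whose status contains FAILED (for example CREATE_FAILED) and shows the reason CloudFormation recorded.

```shell
# Hypothetical helper: show which resources failed in a stack, and why.
# $1: stack name; the AWS CLI's configured region is used.
stack_failure_reasons() {
  aws cloudformation describe-stack-events \
    --stack-name "$1" \
    --query "StackEvents[?contains(ResourceStatus, 'FAILED')].[Timestamp,LogicalResourceId,ResourceStatus,ResourceStatusReason]" \
    --output table
}

# Example: stack_failure_reasons splunk-vpcflowlog-kdf-to-splunk
```

The earliest failed event is usually the root cause; later failures are often cancellations triggered by the rollback.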

- Events aren't getting to Splunk. What should I check? Check the following, in this order:
  - That the CloudFormation templates deployed without error.
  - That the parameters (especially splunkHECEndpoint and splunkHECToken) are correct.
  - That the VPC Flow Log is active and its destination is the Amazon Data Firehose deployed by kdfToSplunk.yml.
  - That there are no ingestion-related errors in Splunk.
  - That the required firewall ports are open from Amazon Data Firehose to Splunk.
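To confirm that the VPC Flow Log itself is active and pointed at the Firehose, describe-flow-logs reports the status and destination of each flow log attached to a resource. A sketch, with flow_log_status as a hypothetical name; a healthy configuration for this method should show FlowLogStatus ACTIVE, DeliverLogsStatus SUCCESS, and LogDestinationType kinesis-data-firehose.

```shell
# Hypothetical helper: show the status and destination of the flow logs
# attached to a VPC, subnet, or ENI.
# $1: resource ID (the vpcFlowLogResourceId value)
flow_log_status() {
  aws ec2 describe-flow-logs \
    --filter "Name=resource-id,Values=$1" \
    --query 'FlowLogs[].[FlowLogId,FlowLogStatus,DeliverLogsStatus,LogDestinationType,LogDestination]' \
    --output table
}

# Example: flow_log_status vpc-0123456789abcdef0
```

The LogDestination column should contain the ARN passed as kdfArn; an empty table means no flow log exists for that resource in the current region.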

- Not all of the events are getting to Splunk, or Amazon Data Firehose is being throttled.
  - Amazon Data Firehose enforces a number of quotas on each Firehose stream. To check whether you're being throttled, open the Monitoring tab for the Firehose in the AWS console and check whether the "Throttled records (Count)" value is greater than zero. If the Firehose is being throttled, use the Kinesis Firehose Service quota increase form to request a quota increase.
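The same check can be run from the AWS CLI against CloudWatch. This sketch assumes the console's "Throttled records (Count)" graph corresponds to the ThrottledRecords metric in the AWS/Firehose namespace; the function name is hypothetical, and the start and end times are passed in as ISO 8601 UTC timestamps.

```shell
# Hypothetical helper: list 5-minute periods in which Firehose throttled records.
# $1: delivery stream name; $2, $3: start and end time (ISO 8601, UTC).
# Datapoints with a Sum of zero are filtered out by the query.
firehose_throttled_records() {
  aws cloudwatch get-metric-statistics \
    --namespace AWS/Firehose \
    --metric-name ThrottledRecords \
    --dimensions "Name=DeliveryStreamName,Value=$1" \
    --start-time "$2" --end-time "$3" \
    --period 300 --statistics Sum \
    --query 'Datapoints[?Sum > `0`].[Timestamp,Sum]' \
    --output table
}

# Example for the last hour (GNU date syntax):
# firehose_throttled_records my-stream "$(date -u -d '1 hour ago' +%Y-%m-%dT%H:%M:%SZ)" "$(date -u +%Y-%m-%dT%H:%M:%SZ)"
```

Any rows returned indicate periods where records were throttled, which is the signal to request a quota increase.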