chore(deps): update container image docker.io/localai/localai to v2.17.0 by renovate #23480

This PR contains the following updates:

- v2.16.0-aio-cpu -> v2.17.0-aio-cpu
- v2.16.0-cublas-cuda11-ffmpeg-core -> v2.17.0-cublas-cuda11-ffmpeg-core
- v2.16.0-cublas-cuda11-core -> v2.17.0-cublas-cuda11-core
- v2.16.0-cublas-cuda12-ffmpeg-core -> v2.17.0-cublas-cuda12-ffmpeg-core
- v2.16.0-cublas-cuda12-core -> v2.17.0-cublas-cuda12-core
- v2.16.0-ffmpeg-core -> v2.17.0-ffmpeg-core
- v2.16.0 -> v2.17.0

Warning

Some dependencies could not be looked up. Check the Dependency Dashboard for more information.

Release Notes

mudler/LocalAI (docker.io/localai/localai)

v2.17.0

Ahoj! This new release of LocalAI comes with tons of updates and enhancements behind the scenes!

🌟 Highlights (TL;DR)

🤖 Automatic model identification for llama.cpp-based models

Just drop your GGUF files into the model folders, and let LocalAI handle the configurations. YAML files are now reserved for those who love to tinker with advanced setups.
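To check which files in a models folder are actually GGUF files before relying on automatic identification, a small script can inspect the file headers. The sketch below is only an illustration and does not reproduce LocalAI's own detection logic: it relies on the fact that GGUF files begin with the four bytes `GGUF` followed by a 32-bit version number (read here as little-endian, which is an assumption that holds for the common case). The function names and the directory you pass in are hypothetical.

```python
from pathlib import Path
import struct

GGUF_MAGIC = b"GGUF"  # GGUF files start with these four bytes


def read_gguf_version(path):
    """Return the GGUF format version, or None if the file is not GGUF."""
    with Path(path).open("rb") as fh:
        header = fh.read(8)
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # Assumption: the version field is little-endian (the common case).
    (version,) = struct.unpack("<I", header[4:8])
    return version


def find_gguf_models(models_dir):
    """Map each GGUF file directly inside models_dir to its format version."""
    found = {}
    for path in sorted(Path(models_dir).iterdir()):
        if path.is_file():
            version = read_gguf_version(path)
            if version is not None:
                found[path.name] = version
    return found
```

Calling `find_gguf_models("models")` on a hypothetical `models` directory returns a dictionary of file names and versions; files that are truncated or use a different format are skipped.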

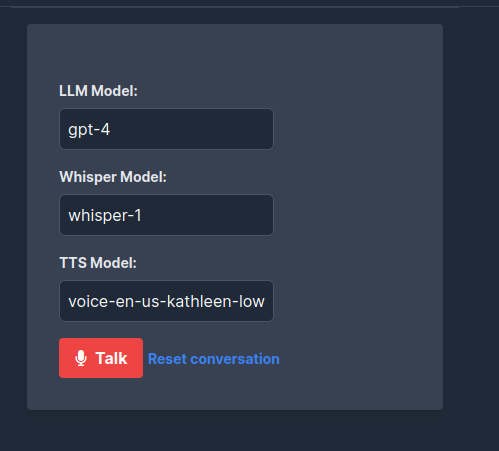

🔊 Talk to your LLM!

Introduced a new page that allows direct interaction with the LLM using audio transcription and TTS capabilities. This feature is a lot of fun: you can now talk with any LLM in just a couple of clicks.
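Conceptually, one turn of such a voice conversation chains three steps: transcribe the audio, send the text to the LLM, and synthesize the reply. The sketch below illustrates that flow only; it is not how the LocalAI page is implemented. The three callables (`transcribe`, `chat`, `speak`) are hypothetical stand-ins for whatever transcription, chat, and TTS backends are in use, and the history uses the role/content message shape common to OpenAI-style chat APIs.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def talk_turn(
    audio: bytes,
    history: List[Message],
    transcribe: Callable[[bytes], str],
    chat: Callable[[List[Message]], str],
    speak: Callable[[str], bytes],
) -> bytes:
    """Run one voice turn: speech -> text -> LLM reply -> speech."""
    user_text = transcribe(audio)                      # audio transcription
    history.append({"role": "user", "content": user_text})
    reply_text = chat(history)                         # LLM sees the whole conversation
    history.append({"role": "assistant", "content": reply_text})
    return speak(reply_text)                           # text-to-speech
```

Passing the backends in as functions keeps the flow independent of any particular model or endpoint, so each step can be swapped or stubbed out.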

🍏 Apple single-binary

Experience enhanced support for the Apple ecosystem with a comprehensive single-binary that packs all necessary libraries, ensuring LocalAI runs smoothly on macOS and ARM64 architectures.

ARM64

Expanded our support for ARM64 with new Docker images and single binary options, ensuring better compatibility and performance on ARM-based systems.

Note: currently only the core images are supported on ARM, for instance:

- localai/localai:master-ffmpeg-core
- localai/localai:latest-ffmpeg-core
- localai/localai:v2.17.0-ffmpeg-core

🐞 Bug Fixes and small enhancements

We’ve ironed out several issues, including image endpoint response types and other minor problems, boosting the stability and reliability of our applications. It is now also possible to enable CSRF when starting LocalAI, thanks to @dave-gray101.

🌐 Models and Galleries

Enhanced the model gallery with new additions like Mirai Nova, Mahou, and several updates to existing models ensuring better performance and accuracy.

You can now also browse new models at https://models.localai.io without running LocalAI!

Installation and Setup

A new install.sh script is now available for quick and hassle-free installations, streamlining the setup process for new users.

Installation can be configured with environment variables, for example:

List of the Environment Variables:

We are looking into improving the installer, and as this is a first iteration any feedback is welcome! Open up an issue if something doesn't work for you!

Enhancements to mixed grammar support

Mixed grammar support continues receiving improvements behind the scenes.

🐍 Transformers backend enhancements

trust_remote_code: true flag

Shout-out to @fakezeta for these enhancements!

Install models with the CLI

Now the CLI can install models directly from the gallery. For instance:

This command ensures the model is installed in the model folder at startup.
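The changelog for this release also lists a `local-ai models install` subcommand for installing from galleries. As a rough sketch of how that subcommand could be driven from a script, the snippet below only builds the command line; the model name `example-model` is a placeholder, not a real gallery entry, and the helper function is hypothetical.

```python
import shlex


def models_install_command(model_name, binary="local-ai"):
    """Build the argument list for `local-ai models install <model_name>`."""
    if not model_name.strip():
        raise ValueError("model_name must not be empty")
    return [binary, "models", "install", model_name]


# "example-model" is a placeholder; substitute a name from the gallery.
cmd = models_install_command("example-model")
print(shlex.join(cmd))  # local-ai models install example-model

# To actually run it (requires the local-ai binary on PATH):
#   import subprocess
#   subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string avoids shell quoting problems if a model name contains unusual characters.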

🐧 Linux single binary now supports ROCm, NVIDIA, and Intel

Single binaries for Linux now contain Intel, AMD GPU, and NVIDIA support. Note that you need to install the dependencies separately in the system to leverage these features. In upcoming releases, this requirement will be handled by the installer script.

📣 Let's Make Some Noise!

A gigantic THANK YOU to everyone who’s contributed—your feedback, bug squashing, and feature suggestions are what make LocalAI shine. To all our heroes out there supporting other users and sharing their expertise, you’re the real MVPs!

Remember, LocalAI thrives on community support—not big corporate bucks. If you love what we're building, show some love! A shoutout on social (@LocalAI_OSS and @mudler_it on twitter/X), joining our sponsors, or simply starring us on GitHub makes all the difference.

Also, if you haven't yet joined our Discord, come on over! Here's the link: https://discord.gg/uJAeKSAGDy

Thanks a ton, and enjoy this release!

What's Changed

Bug fixes 🐛

- pkg/downloader should respect basePath for file:// urls by @dave-gray101 in https://github.com/mudler/LocalAI/pull/2481

Exciting New Features 🎉

- response_type in the OpenAI API request by @prajwalnayak7 in https://github.com/mudler/LocalAI/pull/2347
- response_regex to be a list by @mudler in https://github.com/mudler/LocalAI/pull/2447
- OpaqueErrors to hide error information by @dave-gray101 in https://github.com/mudler/LocalAI/pull/2486
- local-ai models install to install from galleries by @mudler in https://github.com/mudler/LocalAI/pull/2555

🧠 Models

📖 Documentation and examples

👒 Dependencies

Other Changes

- -j4 for build-linux: by @dave-gray101 in https://github.com/mudler/LocalAI/pull/2514

New Contributors

Full Changelog: mudler/LocalAI@v2.16.0...v2.17.0

Configuration

📅 Schedule: Branch creation - At any time (no schedule defined), Automerge - At any time (no schedule defined).

🚦 Automerge: Enabled.

♻ Rebasing: Whenever PR becomes conflicted, or you tick the rebase/retry checkbox.

🔕 Ignore: Close this PR and you won't be reminded about these updates again.

This PR has been generated by Renovate Bot.