Kubeless is a Kubernetes-native serverless framework that lets you deploy small bits of code without having to worry about the underlying infrastructure plumbing. It leverages Kubernetes resources to provide auto-scaling, API routing, monitoring, troubleshooting and more.

Kubeless stands out because we use a ThirdPartyResource (now called Custom Resource Definition) to create functions as custom Kubernetes resources. We then run an in-cluster controller that watches these custom resources and launches runtimes on demand. The controller dynamically injects the function's code into the runtimes and makes them available over HTTP or via a PubSub mechanism.
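The watch-and-inject flow described above can be pictured with a small in-memory toy. This is only a sketch of the idea, not Kubeless's controller code: `ToyController`, the event names and the shape of the `resource` dictionary are all hypothetical, and a plain Python callable stands in for a launched runtime.

```python
class ToyController:
    """In-memory stand-in for the in-cluster controller (illustrative only)."""

    def __init__(self):
        # Maps a function name to the callable "runtime" launched for it.
        self.runtimes = {}

    def handle_event(self, event_type, resource):
        """React to one watch event for a (hypothetical) function resource."""
        name = resource["name"]
        if event_type in ("ADDED", "MODIFIED"):
            scope = {}
            # "Inject" the function source into a fresh scope.
            exec(resource["code"], scope)
            # A handler such as "test.foobar" names module.function.
            function_name = resource["handler"].split(".")[-1]
            self.runtimes[name] = scope[function_name]
        elif event_type == "DELETED":
            self.runtimes.pop(name, None)

    def call(self, name, payload):
        """Invoke the runtime registered for a function."""
        return self.runtimes[name](payload)


controller = ToyController()
controller.handle_event("ADDED", {
    "name": "get-python",
    "handler": "test.foobar",
    "code": "def foobar(payload):\n    return payload\n",
})
print(controller.call("get-python", {"echo": "echo echo"}))
```

In the real system the events come from the Kubernetes API and the runtimes are pods, so treat this purely as a mental model.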

As the event system, we currently use Kafka and bundle a Kafka setup in the Kubeless namespace for development. Help supporting additional event frameworks like nats.io would be more than welcome.

Kubeless is purely open source and not affiliated with any commercial organization. Chime in at any time, we would love the help and feedback!

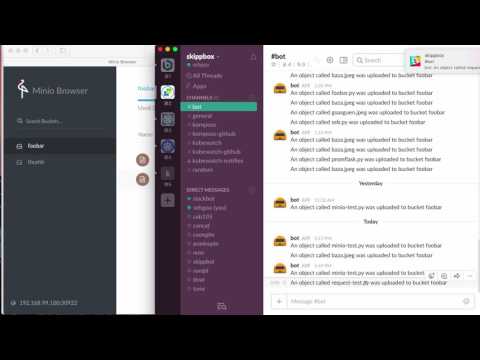

Click on the picture below to see a screencast demonstrating event based function triggers with kubeless.

- A UI is available. It can run locally or in-cluster.

- A serverless framework plugin is available.

Download the kubeless CLI from the release page. Then use one of the YAML manifests found in the release package to deploy Kubeless. It will create a kubeless namespace and a function ThirdPartyResource. You will see a kubeless controller running, along with Kafka and ZooKeeper StatefulSets.

Several Kubeless manifests are shipped for different Kubernetes environments (non-RBAC, RBAC and OpenShift). Pick the one that matches your cluster:

- kubeless-$RELEASE.yaml is used for non-RBAC Kubernetes clusters.

- kubeless-rbac-$RELEASE.yaml is used for RBAC-enabled Kubernetes clusters.

- kubeless-openshift-$RELEASE.yaml is used to deploy Kubeless to OpenShift (1.5+).

For example, the following shows how to deploy Kubeless to a non-RBAC Kubernetes cluster.

$ export RELEASE=v0.1.0

$ kubectl create ns kubeless

$ kubectl create -f https://github.com/kubeless/kubeless/releases/download/$RELEASE/kubeless-$RELEASE.yaml

$ kubectl get pods -n kubeless

NAME READY STATUS RESTARTS AGE

kafka-0 1/1 Running 0 1m

kubeless-controller-3331951411-d60km 1/1 Running 0 1m

zoo-0 1/1 Running 0 1m

$ kubectl get deployment -n kubeless

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

kubeless-controller 1 1 1 1 1m

$ kubectl get statefulset -n kubeless

NAME DESIRED CURRENT AGE

kafka 1 1 1m

zoo 1 1 1m

$ kubectl get thirdpartyresource

NAME DESCRIPTION VERSION(S)

function.k8s.io Kubeless: Serverless framework for Kubernetes v1

$ kubectl get functions

You are now ready to create functions.
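The manifest naming scheme above can be captured in a small helper, shown here as a sketch. The environment labels (`non-rbac`, `rbac`, `openshift`) and the function name are hypothetical, and the code assumes the RBAC and OpenShift manifests are published under the same release download path as the non-RBAC one used in the example.

```python
BASE_URL = "https://github.com/kubeless/kubeless/releases/download"

# File name templates, keyed by hypothetical environment labels.
MANIFEST_NAMES = {
    "non-rbac": "kubeless-{release}.yaml",
    "rbac": "kubeless-rbac-{release}.yaml",
    "openshift": "kubeless-openshift-{release}.yaml",
}


def manifest_url(release, environment="non-rbac"):
    """Return the manifest URL for a release and cluster environment."""
    if environment not in MANIFEST_NAMES:
        raise ValueError("unknown environment: {}".format(environment))
    file_name = MANIFEST_NAMES[environment].format(release=release)
    # Assumption: every manifest lives under the same download path.
    return "{}/{}/{}".format(BASE_URL, release, file_name)


print(manifest_url("v0.1.0"))
```

The resulting URL can be passed to `kubectl create -f` in place of the hand-written one.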

You can use the CLI to create a function. Functions have two possible types:

- http trigger (function will expose an HTTP endpoint)

- pubsub trigger (function will consume event on a specific topic)

Here is a toy:

def foobar(context):
    print context.json
    return context.json

You create it with:

$ kubeless function deploy get-python --runtime python2.7 \
    --handler test.foobar \
    --from-file test.py \
    --trigger-http

You will see the function custom resource created:

$ kubectl get functions

NAME KIND

get-python Function.v1.k8s.io

$ kubeless function ls

NAME NAMESPACE HANDLER RUNTIME TYPE TOPIC

get-python default test.foobar python2.7 HTTP

You can then call the function with:

$ kubeless function call get-python --data '{"echo": "echo echo"}'

Connecting to function...

Forwarding from 127.0.0.1:30000 -> 8080

Forwarding from [::1]:30000 -> 8080

Handling connection for 30000

{"echo": "echo echo"}

Or you can curl directly with kubectl proxy, for example:

$ kubectl proxy -p 8080 &

$ curl --data '{"Another": "Echo"}' localhost:8080/api/v1/proxy/namespaces/default/services/get-python/ --header "Content-Type:application/json"

{"Another": "Echo"}

Kubeless also supports ingress, which means you can expose the function at a custom URL. Please refer to this doc for more details.
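The curl call through kubectl proxy can also be made from Python. The sketch below uses only the standard library and the proxy URL layout from the curl example. The helper names are hypothetical, and `call_function` only works while `kubectl proxy -p 8080` is running.

```python
import json
import urllib.request


def build_proxy_request(function_name, payload, namespace="default",
                        proxy="http://localhost:8080"):
    """Build a POST request for a function service behind kubectl proxy."""
    url = "{}/api/v1/proxy/namespaces/{}/services/{}/".format(
        proxy, namespace, function_name)
    body = json.dumps(payload).encode("utf-8")
    # Supplying a body makes urllib send the request as a POST.
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"})


def call_function(function_name, payload, **kwargs):
    """Send the request and decode the JSON reply (needs a running proxy)."""
    request = build_proxy_request(function_name, payload, **kwargs)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_proxy_request("get-python", {"Another": "Echo"})
print(request.get_method(), request.full_url)
```

Splitting request construction from sending keeps the URL logic easy to check without a cluster.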

For PubSub functions, messages need to be JSON. A function can be as simple as:

def foobar(context):
    print context.json
    return context.json

You create it the same way as an HTTP function, except that you specify a --trigger-topic.

$ kubeless function deploy test --runtime python2.7 \
    --handler test.foobar \
    --from-file test.py \
    --trigger-topic test-topic

You can delete and list functions:

$ kubeless function ls

NAME NAMESPACE HANDLER RUNTIME TYPE TOPIC

test default test.foobar python2.7 PubSub test-topic

$ kubeless function delete test

$ kubeless function ls

NAME NAMESPACE HANDLER RUNTIME TYPE TOPIC

You can create, list and delete PubSub topics:

$ kubeless topic create another-topic

Created topic "another-topic".

$ kubeless topic delete another-topic

$ kubeless topic ls

See the examples directory for a list of various examples (Minio, Slack, Twitter, etc.).

Also check out the functions repository.
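Before deploying a handler, it can be handy to exercise it locally. The sketch below follows the same shape as the toy `foobar` function, reading the decoded JSON message from `context.json`. The `count_words` handler and the `fake_context` helper are hypothetical, and the code is written for Python 3 as a local experiment rather than for the python2.7 runtime.

```python
import json
from types import SimpleNamespace


def count_words(context):
    """Handler shaped like foobar: reads the message from context.json."""
    message = context.json
    text = message.get("text", "")
    return {"words": len(text.split())}


def fake_context(raw_message):
    """Hypothetical local stand-in for the object the runtime passes in."""
    return SimpleNamespace(json=json.loads(raw_message))


result = count_words(fake_context('{"text": "hello from test-topic"}'))
print(result)
```

Only the `json` attribute is modelled here, since that is the only part of the context object the examples above rely on.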

- You need Go v1.7+

- To cross compile the kubeless command you will need gox set up in your environment

- We use make to build the project.

- Ensure you have a GOPATH set up

Fetch the project:

$ go get -d github.com/kubeless/kubeless

$ cd $GOPATH/src/github.com/kubeless/kubeless/

Build kubeless and kubeless-controller for your local system:

$ make binary

You can build kubeless (cli) for multiple platforms with:

$ make binary-cross

To build a deployable kubeless-controller docker image:

$ make controller-image

$ docker tag kubeless-controller [your_image_name]

There are other solutions, like fission and funktion. There is also an incubating project at the ASF: OpenWhisk. We believe, however, that Kubeless is the most Kubernetes-native of all.

Kubeless uses k8s primitives; there is no additional API server or API router/gateway. Kubernetes users will quickly understand how it works and be able to leverage their existing logging and monitoring setup as well as their troubleshooting skills.

We would love to get your help, so feel free to lend a hand. We are currently looking to implement the following high-level features:

- Add other runtimes. Currently Python, NodeJS and Ruby are supported. We also provide a way to use a custom runtime. Please check this doc for more details.

- Investigate other messaging buses (e.g. nats.io)

- Instrument the runtimes via Prometheus to be able to create pod autoscalers automatically (e.g. using custom metrics, not just CPU)

- Optimize function startup time

- Add distributed tracing (maybe using istio)

- Break out the triggers and runtimes