Documentation 2.0.0

- Getting Started

- How To Use

- How It Works

AET (Automated Exploratory Testing) is an online testing tool that aids front-end, client-side layout regression testing of websites and portfolios. It allows a team to ensure that a change in one part of the software did not introduce defects in other parts of the application. AET is a flexible application that can be adapted and tailored to the requirements of a given project.

The aim of AET is to assure better software quality. Several factors contribute to this goal.

AET makes it quick and easy to create and maintain tests and to analyze their results, which encourages covering a large part of the software with tests. Test automation also has a strong influence on test coverage, because AET can cover a much bigger part of the software than manual testing. Automation helps to eliminate human errors and saves time. Finally, AET supports the continuous delivery of software.

This is a quick guide showing how to set up the AET environment and run an example test.

Before you start, make sure that you have enough memory on your machine (8 GB is the minimum, 16 GB is recommended).

You need to download and install the following software:

- VirtualBox 5.0

- Vagrant 1.8.1

- ChefDK 0.11.0

- Maven (at least version 3.0.4)

Open command prompt as an administrator and execute the following commands:

vagrant plugin install vagrant-omnibus

vagrant plugin install vagrant-berkshelf

vagrant plugin install vagrant-hostmanager

Navigate to the vagrant module directory. Run berks install and then vagrant up to start the virtual machine. This process may take a few minutes.

Create a file named suite.xml with the following content:

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="company" project="project">

<test name="first-test" useProxy="rest">

<collect>

<open/>

<resolution width="800" height="600" />

<sleep duration="1500"/>

<screen/>

<source/>

<status-codes/>

<js-errors/>

</collect>

<compare xmlns="http://www.cognifide.com/aet/compare/">

<screen comparator="layout"/>

<source comparator="w3c-html5"/>

<status-codes filterRange="400,600"/>

<js-errors>

<js-errors-filter source="http://w.iplsc.com/external/jquery/jquery-1.8.3.js" line="2" />

</js-errors>

</compare>

<urls>

<url href="https://en.wikipedia.org/wiki/Main_Page"/>

</urls>

</test>

</suite>

Then create another file named pom.xml with the following content:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>test-group</groupId>

<artifactId>test-project</artifactId>

<version>1.0.0</version>

<packaging>pom</packaging>

<name>Test project</name>

<url>http://www.example.com</url>

<properties>

<aet.version>1.4.3</aet.version>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

</properties>

<build>

<plugins>

<plugin>

<groupId>com.cognifide.aet</groupId>

<artifactId>aet-maven-plugin</artifactId>

<version>${aet.version}</version>

</plugin>

</plugins>

</build>

</project>

It does not need to be in the same directory as the suite.xml file.

Once you have created both the suite.xml and pom.xml files, open a command prompt in the directory that contains the pom.xml file and execute the following command:

mvn aet:run -DtestSuite=full/path/to/suite.xml

Remember to provide the path to your suite.xml file.

Once the test run finishes, a target directory should be created inside the directory containing the pom.xml file. Inside the target directory you should find the redirect.html file. Open this file and the test report will show up in your web browser.

Congratulations! You have successfully created and run your first AET test.

You need JDK 8 and Maven 3.3.1 or newer to build the AET application. To build and upload the application, use the following command from the application root:

mvn clean install -P upload

In order to be able to deploy bundles to the Karaf instance, define the Vagrant VM location in your settings.xml file ($USER_HOME/.m2):

<server>

<id>aet-vagrant-instance</id>

<username>developer</username>

<password>developer</password>

<configuration>

<sshExecutable>plink</sshExecutable>

<scpExecutable>pscp</scpExecutable>

</configuration>

</server>

Active MQ

a JMS (Java Message Service) Server which is a basic communication channel between AET System components.

AET

an acronym for Automated Exploratory Testing, an online testing tool developed by Cognifide.

AET Core

a set of system modules that are crucial to whole system work. The AET system will not work properly without all core modules configured and running properly.

AET Jobs

implementations of jobs that can perform a particular task (e.g. collect screenshots, compare sources, validate a page against W3C HTML5).

AET Maven Plugin

a default client application for the AET system that is used to trigger the execution of the Test Suite.

Amazon Web Services

cloud computing services where the AET environment is set up.

Apache Karaf

see Karaf.

Artifact

usually used in the context of a small piece of data, the result of some operation (e.g. a collected screenshot or a list of W3C HTML5 validation errors).

AWS

see Amazon Web Services.

Baseline

the act of taking a snapshot of the URL/page and saving it to a file for future comparison in a number of ways to find differences.

Browsermob

a proxy server used by AET to collect some kinds of data from tested pages.

Cleaner

a module responsible for removing old and unused artefacts from the database.

Collector

a module responsible for gathering data necessary for its further processing (e.g. validation, comparison).

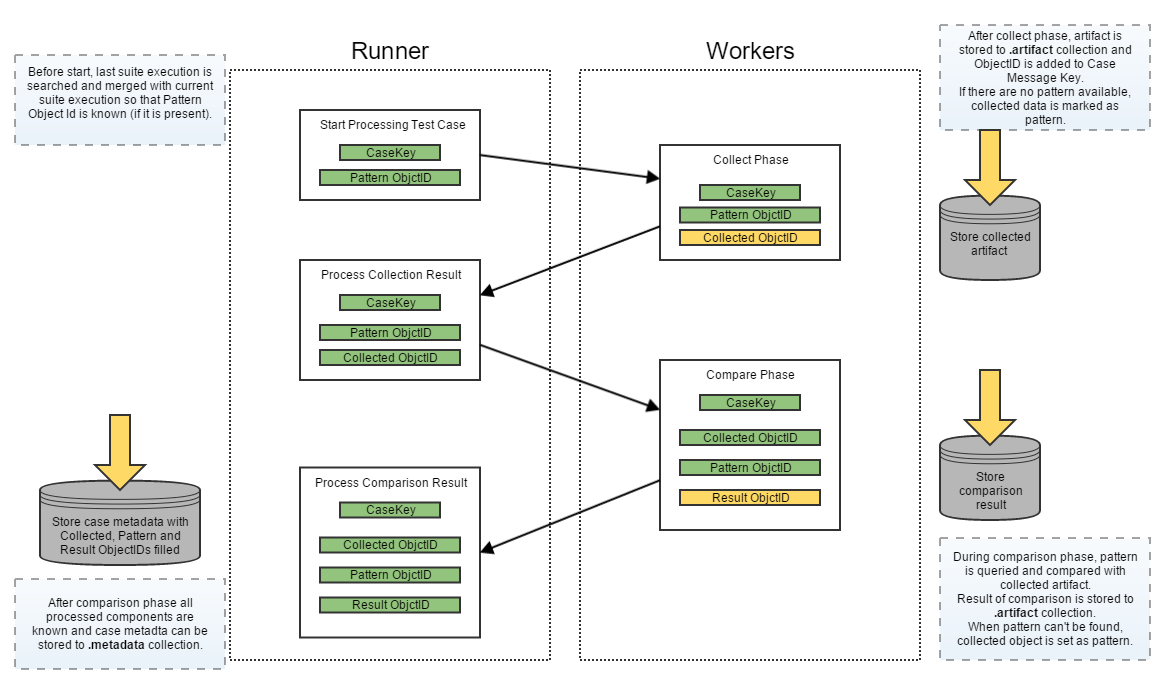

Collection

the first phase of the AET service during which all specified data is collected (e.g. screenshots, page source, js errors). Once they are collected successfully, all collection results are saved in the database.

Comparator

a module responsible for comparing data currently collected to its existing pattern or validating it against a set of defined rules.

Comparison

the second phase of the AET service, which operates on the data collected during the first phase. In some cases the collected data is compared to patterns, in others special validation is performed (e.g. W3C HTML5). The second phase starts before the collection finishes, at the moment when the required artefacts are collected and become ready to be compared (e.g. to compare two screenshots the system does not have to wait until the source of a page is collected).

Cookie Collector

a collector responsible for collecting cookies.

Cookie Comparator

a comparator responsible for processing collected cookies.

Cookie Modifier

a modifier that allows to modify cookies for a given page, i.e. to add or remove cookies.

Data Filter

a module responsible for filtering the collected data before performing comparison e.g. filtering uninteresting js errors before the js errors check takes place.

Data Storage

a database abstraction layer which contains versioned data (data grid).

Extract Element Modifier

a modifier that allows to extract an element from the html source (collected by the Screen Collector) by providing the id attribute or the class attribute.

Feature

a part of the AET system which covers full testing case e.g. layout - this feature consists of the Screen Collector, the screen comparator and the layout reporter module.

Firefox

a browser the AET tool makes use of, currently the version that is used is 30 en-US.

Header Modifier

a modifier responsible for adding additional headers to a page.

Hide Modifiers

a modifier responsible for hiding an element on a page that is unnecessary for a given test.

Html-report

a basic report in a form of a HTML file.

Java

a programming language that is used to develop the AET tool.

Java Development Kit

see JDK.

Java Management Extensions

see JMX.

Java Message Service

see JMS.

JavaScript

see JS.

JDK

the Java Development Kit is a program development environment for developing Java applications.

Jenkins

a continuous Integration (CI) server which is used as the user interface wrapper for the AET Maven Plugin.

Jetty

a simple Http Server, used as a container for web applications.

JMS

an acronym for the Java Message Service, simple message standard that allows application components to communicate with one another.

JMX

Java Management Extensions (JMX) is a technology that is used to manage and monitor advanced interfaces of Java applications. In the AET tool it is used to manage ActiveMQ.

JS

a dynamic programming language.

JS Error

a JavaScript error that occurs in a script during its execution.

JS Errors Collector

a collector responsible for collecting JavaScript errors occurring on a given page.

JS Errors Comparator

a comparator responsible for processing the collected JavaScript error resource.

JS Errors Filter

a filter that filters the results returned by the JS Errors Collector. It removes matched JavaScript errors from reports.

JUnit

a simple framework allowing to develop repeatable tests. It is an instance of the xUnit architecture for unit testing frameworks. More information about it can be found at: http://junit.org/.

Karaf

in fact Apache Karaf is an OSGi container that provides a basic configuration for existing OSGi implementations (e.g. Apache Felix).

Layout Comparator

a comparator responsible for comparing a collected screenshot of page to its pattern.

Login Modifier

a modifier that allows to log in into the application and access secured sites.

Maven

a software project management and comprehension tool. It is used as a base for the AET Maven Plugin.

Modifier

a module responsible for converting the target before the data collection process is performed e.g. modifying a requested header, adding a new cookie, hiding a visible element.

MongoDB

an open-source cross-platform document-oriented database that the AET tool makes use of for data storage and management. MongoDB is developed by MongoDB Inc.

Open

A module that is a special operand for the Collect Phase.

OSGi

a modular system and services platform for Java. It is used as an application environment for AET Java components.

Pattern

a sample model of data. Collection results are compared to their patterns to discover potential differences.

pom.xml

a Maven tool configuration file that contains information about the project and configuration details used by Maven to build the project.

Rebasing

an operation changing the existing pattern to the current result.

Regression testing

a type of software testing that seeks to uncover new software bugs, or regressions, in existing functional and non-functional areas of a system. It is especially useful after changes such as enhancements, patches or configuration changes have been made.

Remove Lines Data Modifier

a modifier that allows to remove lines from the source (data or pattern) that a given page is compared to.

Remove Nodes Data Modifier

a modifier that allows to delete some node(s) from a html tree. Node(s) are defined by the xpath selector.

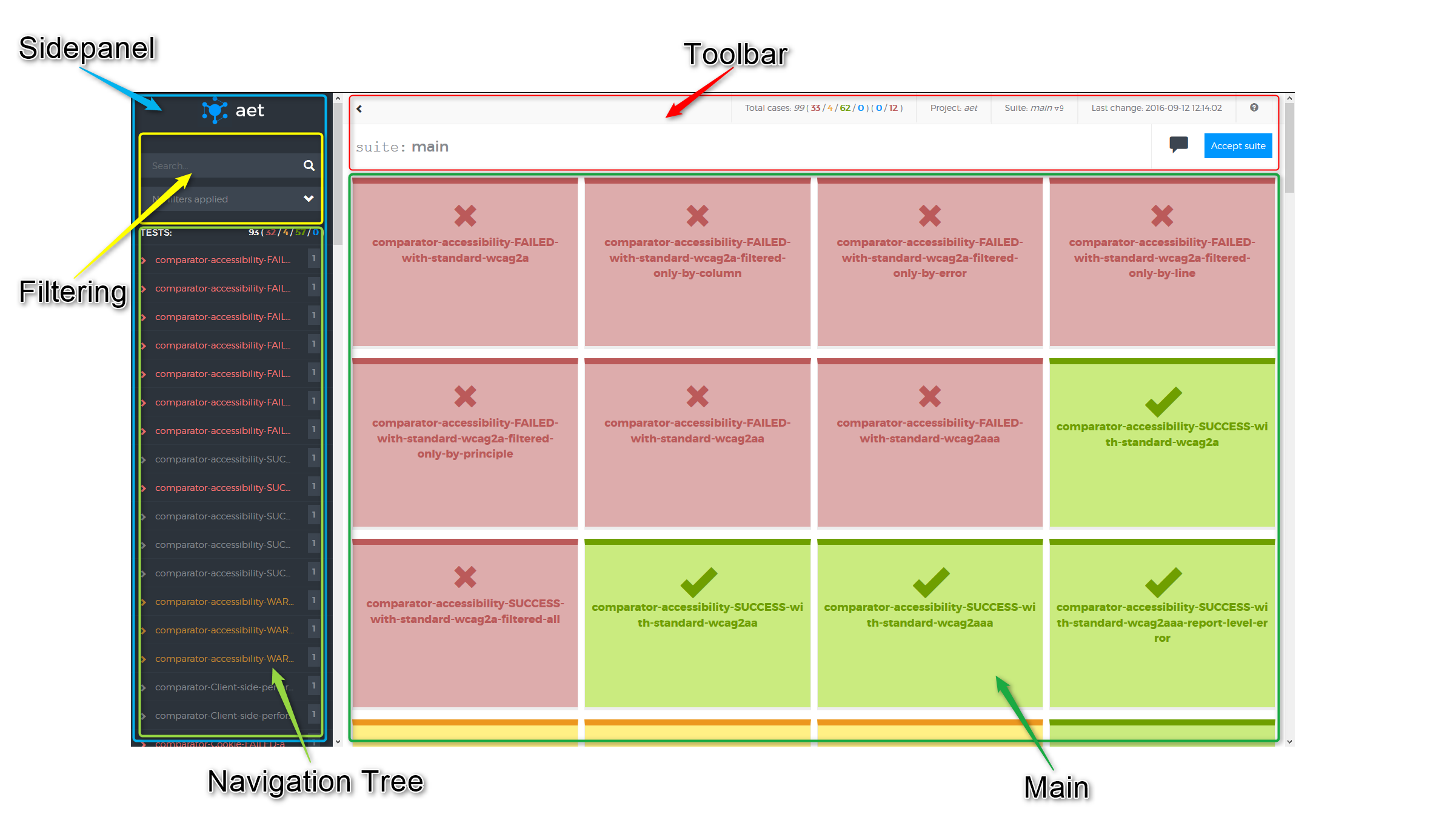

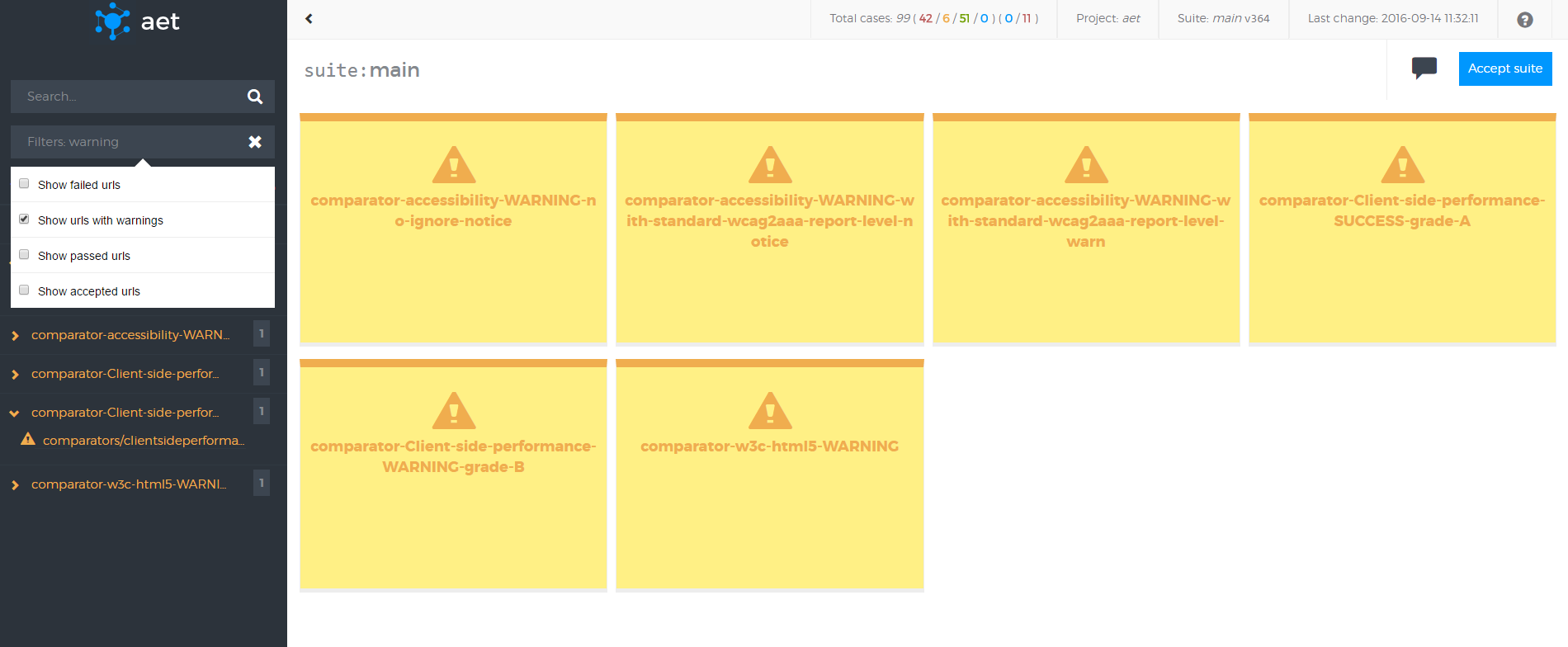

Report (Web application)

a web application for viewing and browsing AET test results (only the Chrome browser is supported for now).

Representational State Transfer API

see Rest API.

Resolution Modifier

a modifier responsible for changing the size of the browser screen.

Resource type

a unique name for the resource produced by the collector and consumed by the comparator.

Rest API

a Representational State Transfer API for the data stored in the AET Database. It enables the user to browse the data and artifacts stored after a run of the Test Suite was completed.

Runner

a unit responsible for the communication with the client and dispatching processing among workers.

SCM repository

a data structure storing metadata for a set of files that is managed by a source control management (SCM) system responsible for managing changes in files. The most popular examples of SCM systems are Git (http://git-scm.com/) and SVN (https://subversion.apache.org/).

Screen Collector

a collector responsible for collecting a screenshot of the page under a given URL.

Selenium

a portable software testing framework for web applications.

Selenium Driver

a test tool that allows to perform specific actions in a browser environment (e.g. take a screenshot of a page).

Sleep Modifier

a modifier responsible for ceasing the execution of a given test temporarily. It causes a current thread to sleep.

Source Collector

a collector responsible for collecting the source of a page under a given URL. Unlike other collectors the Source Collector does not use Web Driver. It connects directly to a web server.

Source Comparator

a comparator responsible for comparing a collected page source with its pattern.

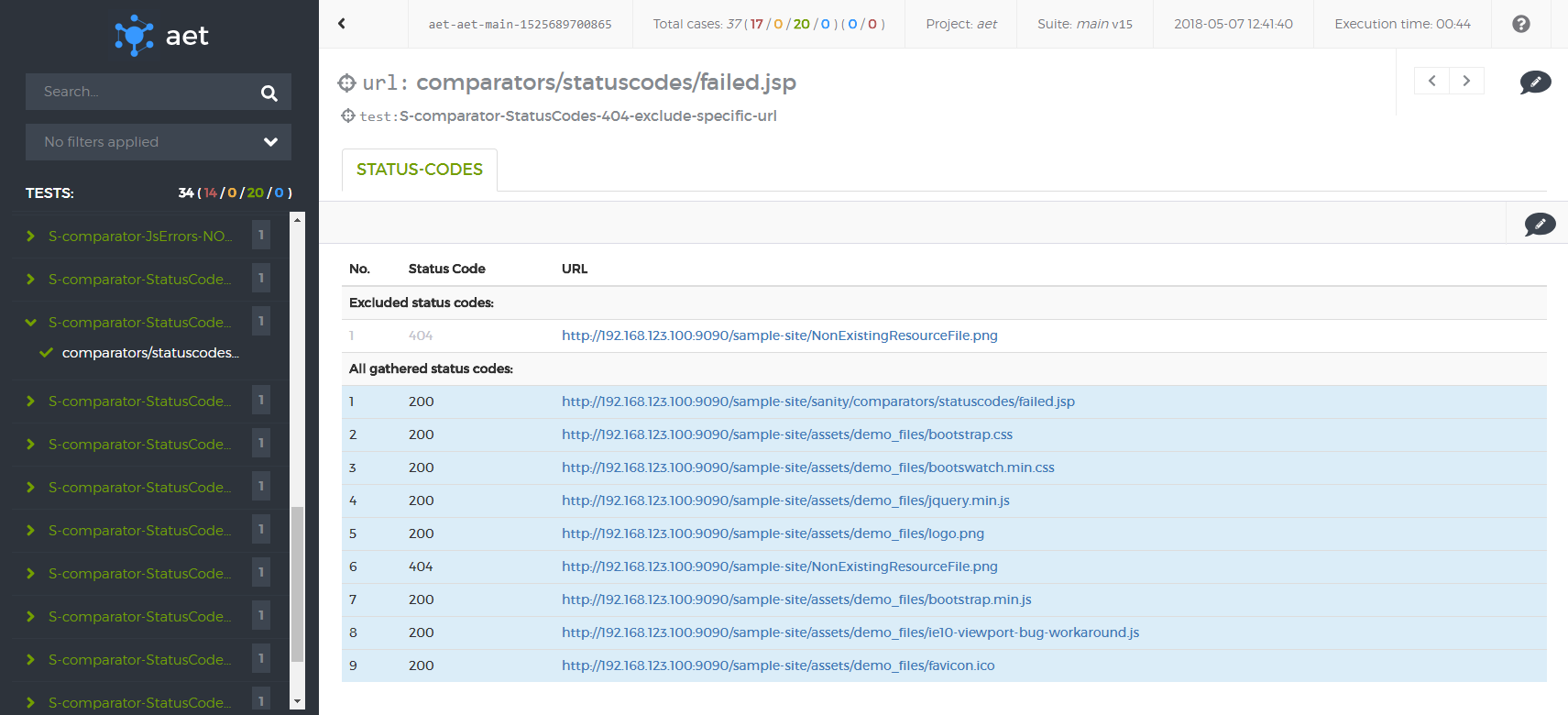

Status Code

a response code for the resource request. For a detailed list of codes please refer to the Hypertext Transfer Protocol documentation at: http://www.w3.org/Protocols/rfc2616/rfc2616-sec10.html.

Status Codes Collector

a collector responsible for collecting status codes for links to resources on a page under a given URL.

Status Codes Comparator

a comparator responsible for processing collected Status Codes.

Step

a single operation performed on a URL, defined in the <collect> phase of a suite.

Test

a definition of logical set of Test Cases performed on a set of URLs.

Test Suite

a set of Tests (at least one) finished with the Report.

Test Case

a single URL Test against a feature, e.g. a W3C HTML page test, a screenshot for the resolution 800x600 test.

Thresholds

a feature allowing to declare a Jenkins build as ‘success’, ‘unstable’ or ‘failed’ depending on the number of Tests that failed or were skipped.

Wait For Page Loaded Modifier

a modifier that waits until a page is loaded or a fixed amount of time is up.

Web Console

the OSGi console installed on Apache Karaf. By default it is accessible via a browser: http://localhost:8181/system/console/configMgr. The default user/password are as follows: karaf/karaf.

Worker

a single processing unit that can perform a defined amount of tasks (e.g. collect a screenshot, compare a source).

W3C HTML5 Comparator

a comparator responsible for validating a collected page source against W3C HTML5 standards.

xunit-report

a Report that visualizes risks on the Jenkins job board and that contains information about the number of performed tests and the number of failures (potential threats).

There are two ways to set up the AET environment: basic and advanced.

Basic setup uses Vagrant to create a single virtual machine running Linux (currently CentOS 6.7). This virtual machine contains all AET services as well as all required software. In this configuration, tests use the Linux version of the Firefox web browser. Please note that web pages render differently in the Linux and Windows versions of Firefox; if you want to test with the Windows version, you must use the advanced setup.

See Basic Setup for more details.

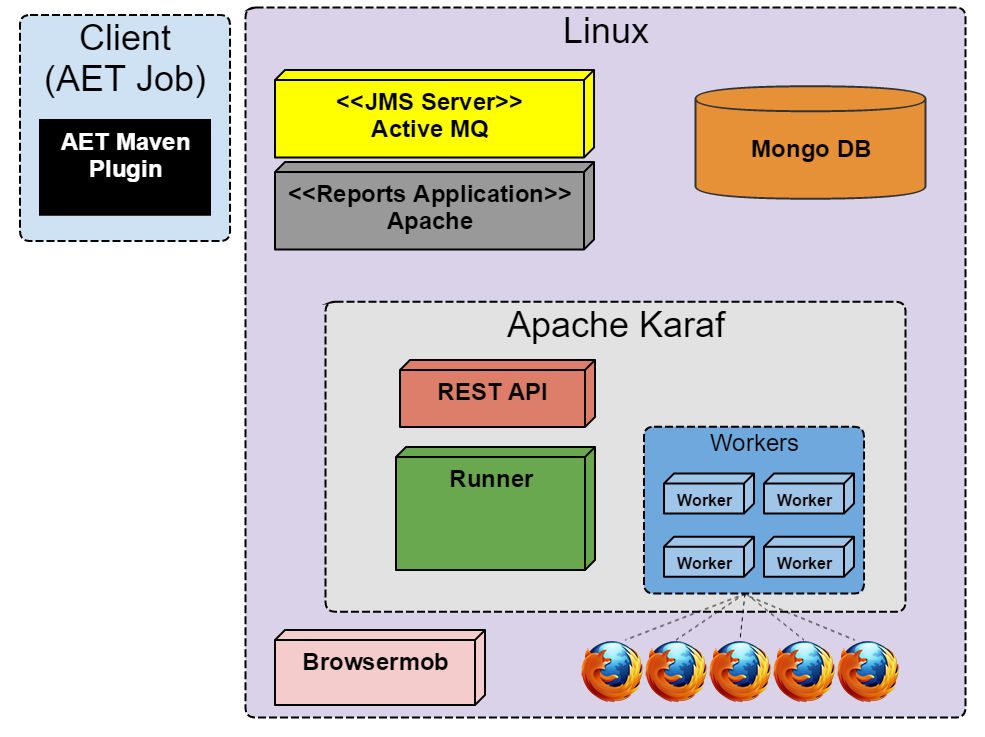

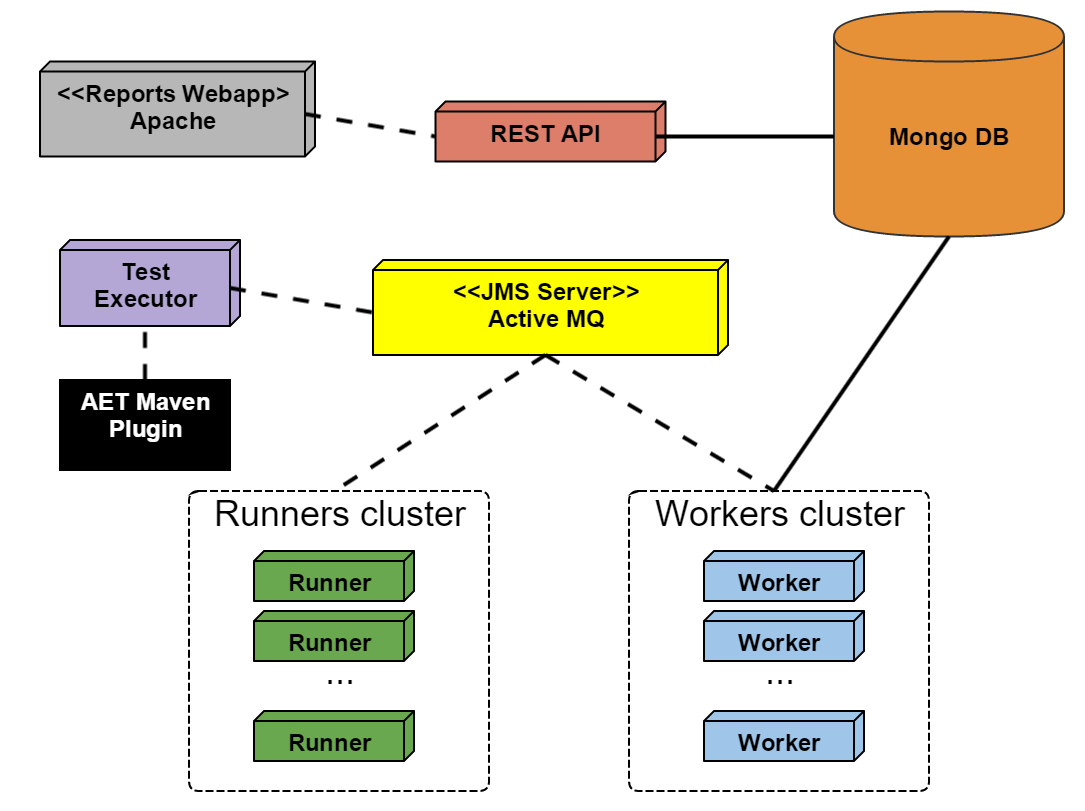

Diagram below shows basic AET setup.

Advanced setup, on the other hand, consists of two machines, one with Linux and one with Windows, that complement each other. The Linux machine hosts services such as MongoDB and ActiveMQ, whereas the Windows machine hosts Karaf, Browsermob proxy and Firefox. In this configuration, tests use the Windows version of the Firefox web browser.

See Linux and Windows Setup for more details.

Diagram below shows advanced AET setup.

This setup uses the vagrant module, a pseudo-cookbook responsible for local environment provisioning using Vagrant (powered by Chef and Berkshelf under the hood).

Currently a virtual machine with the following services is created:

- Karaf

- Apache

- Tomcat

- ActiveMQ

- MongoDB

- Browsermob

- Firefox

- X environment

All services are running using default ports. For communication please use IP address:

192.168.123.100

By default Vagrant virtual machine needs 3 GB of RAM and 2 vCPUs, so please make sure that you have enough memory on your machine (8 GB is minimum, 16 GB recommended though).

- Download and install VirtualBox 5.0

- Download and install Vagrant 1.8.1

- Download and install ChefDK 0.11.0

As an administrator execute the following commands:

vagrant plugin install vagrant-omnibus

vagrant plugin install vagrant-berkshelf

vagrant plugin install vagrant-hostmanager

If you'd like to keep all Vagrant-related data and virtual machine disks in non-standard directories, please:

- set the VAGRANT_HOME variable to the new location (by default it is set to $HOME/.vagrant.d),
- update the VirtualBox settings (File -> Preferences -> General) to move all disks to another directory.

Once you set all described things up just execute:

berks update && vagrant destroy -f && vagrant up

All commands have to be executed when you're inside a directory that contains Vagrantfile.

Next please execute:

- berks install - downloads Chef dependencies from external sources. It acts as mvn clean install, but for Chef cookbooks.
- vagrant up - creates a new virtual machine (the .box file will be downloaded during the first run), runs Chef inside it, and sets up domains and port forwarding.

Whenever a new version is released, please execute the following:

- git pull - to get the latest version of Vagrantfile.
- berks update - to update Chef dependencies.
- vagrant provision - to re-run Chef on the virtual machine.

To get into the virtual machine via SSH please execute vagrant ssh from the same directory that contains Vagrantfile. After that please type sudo -i and press ENTER to switch to root.

If you prefer to use PuTTY, mRemote or any other connection manager, please log in as user vagrant with password vagrant on localhost port 2222. Keep in mind that the port may be different if you have more than one Vagrant machine running at the same time. You can check current assignment by executing vagrant ssh-config command from directory that contains your Vagrantfile.

- vagrant reload - restarts the Vagrant machine and re-applies settings defined in Vagrantfile. It's useful whenever you've changed port forwarding or synced folder configuration.
- vagrant destroy -f - deletes the entire virtual machine.
- vagrant reload --provision - restarts the virtual machine and re-runs Chef afterwards.
- vagrant suspend - suspends the currently running virtual machine.
- vagrant resume - resumes a suspended virtual machine.
- vagrant status - shows the status of the virtual machine described in Vagrantfile.
- vagrant halt - halts (turns off) the virtual machine.

Local port is a port exposed on your machine. You can access services via localhost:<PORT>.

VM port refers to port assigned inside Vagrant's virtual machine.

Port forwarding rules can be easily changed in Vagrantfile.

| Local port | VM port | Description |

|---|---|---|

| 8181 | 8181 | Karaf |

- When you get the following error while deploying the application to the local Vagrant machine:

What went wrong: Execution failed for task ':deployDevClearCache'. > java.net.ConnectException: Connection timed out: connect

run the ifup eth1 command on the Vagrant machine using SSH.

This section describes the advanced setup of AET using Linux and Windows. The main advantage of this approach is the ability to run tests on Firefox on Windows, which is more reliable than Firefox on Linux.

Please note that the full list of required tools and their versions can be found in the System Components section.

- Turn off the firewall. This is done differently on various Linux distributions; for example, on CentOS selinux and iptables should be disabled.
- Install MongoDB in version 2.6.4-1
- Install JDK from Oracle (1.7)
- Install ActiveMQ in version 5.9.0
  - Enable JMX for ActiveMQ with connector under port 11199
  - Switch Persistence for ActiveMQ
  - Enable cleaning unused topic for ActiveMQ
- Install Apache Server
  - Configure site for the following path: /opt/aet/apache/aet_reports/current

- Install Java 7 JDK and update JAVA_HOME environment variable.

- Change default console resolution - install VNC server (e.g. http://www.tightvnc.com/), connect by VNC client to console and change resolution (min. 1024x768).

- Turn off Windows Firewall (both, private and public network location settings).

- Install Karaf in version 2.3.9

- Update Apache Felix Framework to version 4.2.1

- Install Karaf as a Windows service

- Check if it's working under http://localhost:8181/system/console/ and credentials karaf/karaf.

- Install Browsermob in version 2.0.0

- Install Browsermob as a Windows service

- Check if it's working under http://localhost:9272/proxy.

- Install Firefox 38.6.0 ESR

- Turn off automatic updates

- Check the following connections between Windows and Linux:
  - MongoDB: telnet ${LINUX_MACHINE_PRIVATE_IP} 27017
  - ActiveMQ: telnet ${LINUX_MACHINE_PRIVATE_IP} 61616
  - ActiveMQ's JMX: jconsole.exe ${LINUX_MACHINE_PRIVATE_IP}:11199
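The connection checks above can also be scripted. The following is a minimal sketch, not part of AET, that tests plain TCP reachability for the ports named in the checklist. The function names and the `REQUIRED_PORTS` mapping are hypothetical, and a successful TCP connection to port 11199 only shows that the port is open, not that JMX is usable from jconsole.

```python
import socket

# Ports taken from the checklist above; the names are illustrative labels.
REQUIRED_PORTS = {"MongoDB": 27017, "ActiveMQ": 61616, "ActiveMQ JMX": 11199}


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unresolvable hosts.
        return False


def check_linux_services(host: str) -> dict[str, bool]:
    """Check every required port on the Linux machine and report the result."""
    return {name: is_port_open(host, port) for name, port in REQUIRED_PORTS.items()}
```

Calling `check_linux_services` with the private IP address of the Linux machine returns a mapping from service name to reachability, which is a quick way to spot a firewall that is still active.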

The table below describes where to deploy each artifact.

| Artifact | Environment | Default folder |

|---|---|---|

| bundles.zip | Windows - Karaf | /deploy |

| features.zip | Windows - Karaf | /deploy |

| configs.zip | Windows - Karaf | /etc |

| report.zip | Linux - Apache | /opt/aet/apache/aet_reports/current |

This section describes how to configure the AET OSGi services so that they could connect to the appropriate system components.

The services are configured through the Karaf Web Console which is hosted on Windows machine. Assuming that this machine's IP address is 192.168.0.2, the Karaf console is available under following address: http://192.168.0.2:8181/system/console/configMgr.

The example configuration assumes the following:

- The IP address of the Linux machine is 192.168.0.1
- The IP address of the Windows machine is 192.168.0.2
- The Apache HTTP server serves the Reports application under the domain http://aet-report

The diagram below shows which AET OSGi service should connect to which system component on the appropriate machine. On the diagram the arrows point from the AET services to the system components. The notes on the arrows contain the properties of each service which should be set and example values according to assumptions stated above.

Two more services require configuration but are not shown on the diagram above: AET Collector Message Listener and AET Comparator Message Listener. At least one of each must be configured. The properties of each of these services and their required values are listed below.

| Property name | Value |
|---|---|
| Collector name | Has to be unique within Collector Message Listeners. |
| Consumer queue name | Fixed value AET.collectorJobs |
| Producer queue name | Fixed value AET.collectorResults |
| Embedded Proxy Server Port | Has to be unique within Collector Message Listeners. |

| Property name | Value |
|---|---|
| Comparator name | Has to be unique within Comparator Message Listeners. |
| Consumer queue name | Fixed value AET.comparatorJobs |
| Producer queue name | Fixed value AET.comparatorResults |
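The uniqueness requirements in the tables above are easy to violate when several listeners are configured by hand. A minimal sketch of a check is shown below. It assumes the listener settings have been copied into plain dictionaries; the keys `name` and `proxy_port` are hypothetical and do not correspond to actual OSGi property identifiers.

```python
from collections import Counter


def find_duplicates(listeners: list[dict], key: str) -> list:
    """Return the values of `key` that occur in more than one listener."""
    counts = Counter(listener[key] for listener in listeners)
    return sorted(value for value, occurrences in counts.items() if occurrences > 1)


# Hypothetical configuration of two Collector Message Listeners.
collectors = [
    {"name": "collector-1", "proxy_port": 8281},
    {"name": "collector-2", "proxy_port": 8281},  # port clash with collector-1
]

duplicate_names = find_duplicates(collectors, "name")
duplicate_ports = find_duplicates(collectors, "proxy_port")
```

An empty list means the property is unique across the listeners, as the tables require.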

In general the test suite is an XML document that defines tests performed over collection of web pages. This chapter covers test suite API, with description of each element.

<?xml version="1.0" encoding="UTF-8" ?>

<!-- Each test suite consists of one suite -->

<suite name="test-suite" company="cognifide" project="project">

<!-- The First test of Test Suite -->

<!-- The flow is [collect] [compare] [urls] -->

<test name="first-test" useProxy="rest">

<!-- Description of the collect phase -->

<collect>

<open/>

<resolution width="800" height="600" />

<!-- sleep 1500 ms before next steps - used on every url defined in urls -->

<sleep duration="1500"/>

<screen/>

<source/>

<status-codes/>

<js-errors/>

</collect>

<!-- Description of compare phase, says what collected data should be compared to the patterns, can also define the exact comparator. If none chosen, the default one is taken. -->

<compare xmlns="http://www.cognifide.com/aet/compare/">

<screen comparator="layout"/>

<source comparator="w3c-html5"/>

<status-codes filterRange="400,600"/>

<js-errors>

<js-errors-filter source="http://w.iplsc.com/external/jquery/jquery-1.8.3.js" line="2" />

</js-errors>

</compare>

<!-- List of urls which will be taken into tests -->

<urls>

<url href="http://www.cognifide.com"/>

</urls>

</test>

</suite>

The root element of the test suite definition is the suite element.

| ! Important |

|---|

When defining a suite, choose the three mandatory parameters carefully: name, company, project. The AET System uses them to identify the suite. Any later change to one of these values makes the system treat the suite as a completely new one, so all patterns are gathered from scratch. |

Root element for xml definition, each test suite definition consists of exactly one suite tag.

| Attribute name | Description | Mandatory |
|---|---|---|
| name | Name of the test suite. Should consist only of lowercase letters, digits and/or characters: -, _. | yes |
| company | Name of the company. Should consist only of lowercase letters, digits and/or characters: -. | yes |
| project | Name of the project. Should consist only of lowercase letters, digits and/or characters: -. | yes |
| domain | General domain name consistent for all considered urls. Every url link is built as a concatenation of the domain name and its href attribute. If the domain property is not set, then the href value in the url definition should contain a full valid url. See more in the Urls section. | no |
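The naming rules in the table above can be checked before a suite is submitted. The sketch below is a hypothetical helper, not part of AET, that reads the suite root element with Python's standard library and reports attributes that are missing or contain characters outside the documented sets. How AET itself validates these values is not covered here.

```python
import re
import xml.etree.ElementTree as ET

# Character sets as documented in the attribute table.
SUITE_NAME = re.compile(r"[a-z0-9_-]+")
COMPANY_OR_PROJECT = re.compile(r"[a-z0-9-]+")


def suite_attribute_problems(suite_xml: str) -> list[str]:
    """Return a list of human-readable problems with the suite root attributes."""
    root = ET.fromstring(suite_xml)
    rules = {
        "name": SUITE_NAME,
        "company": COMPANY_OR_PROJECT,
        "project": COMPANY_OR_PROJECT,
    }
    problems = []
    for attribute, pattern in rules.items():
        value = root.get(attribute)
        if value is None:
            problems.append(f"missing mandatory attribute '{attribute}'")
        elif not pattern.fullmatch(value):
            problems.append(f"attribute '{attribute}' has invalid value '{value}'")
    return problems
```

An empty result means the three mandatory attributes are present and follow the documented character rules.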

suite element contains one or more test elements.

This tag is definition of the single test in test suite. Test suite can contain many tests.

| Attribute name | Description | Mandatory |
|---|---|---|
| name | Name of the test. Should consist only of letters, digits and/or characters: -, _. This value is also presented on the report (more details in the Suite Report section). | |
| useProxy | Defines which (if any) Proxy should be used during the collection phase. If not provided, empty or set to "false", a proxy won't be used. If set to "true", the default Proxy Manager will be used. Otherwise the Proxy Manager with the provided name will be used (see Proxy). A proxy is needed by the Status Codes Collector and the Header Modifier. | no |
| zIndex | Specifies the order of tests on the HTML Report. A test with a greater zIndex is always placed before a test with a lower value. Default value is 0. This attribute accepts integers in range <-2147483648; 2147483647>. | no |

Each test element contains:

- one collect and one compare element - test execution phases,

- one urls element - list of urls to process.

Proxy is provided by two separate implementations: embedded and rest.

The embedded proxy does not need a standalone Browsermob Server, but it does not support SSL. The embedded proxy is used by default when useProxy is set to "true" (which is equivalent to setting useProxy="embedded").
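The useProxy rules described so far can be summarised as a small decision function. This is an illustrative sketch only; `resolve_proxy` is a hypothetical name, and the exact matching AET performs (for example case sensitivity) is not specified in this document.

```python
from typing import Optional


def resolve_proxy(use_proxy: Optional[str]) -> Optional[str]:
    """Map a useProxy attribute value to the proxy manager name, or None for no proxy."""
    # Not provided, empty, or "false": no proxy is used.
    if use_proxy is None or use_proxy == "" or use_proxy == "false":
        return None
    # "true" selects the default proxy manager, which is the embedded one.
    if use_proxy == "true":
        return "embedded"
    # Any other value is treated as the name of the proxy manager to use.
    return use_proxy
```

For the suite shown in the quick guide, `resolve_proxy("rest")` yields the rest proxy, which requires a standalone Browsermob Server.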

Example usage

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="header-modify-test" useProxy="embedded">

...

</test>

...

</suite>

The rest proxy requires a standalone Browsermob Server.

Example usage

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="header-modify-test" useProxy="rest">

...

</test>

...

</suite>

This tag contains the list of collectors and modifiers which will be run. It specifies which page data should be collected and allows for some data modification before the collection step. All collect steps are processed in the defined order.

Each collector provides some specific result of gathering current data (i.e. png, html files) and a common metadata file - result.json.

Following elements are available in collect element:

This tag contains a list of comparators. Each comparator takes a collected resource of a defined type and processes it. It produces result files illustrating the differences found (e.g. png or html files) and a common metadata file, result.json.

Each resource type has a default comparator. A different comparator can be used for a given type by providing the comparator attribute with the comparator name, e.g.:

<source comparator="my_source_comparator"/>

runs my_source_comparator against each source collected during the collection phase. Each comparator can contain a list of Data Filters, which are applied before the comparison.

Data filters are used to modify the gathered data before it is passed to the comparator. For example, you may remove some nodes from the html tree. Data filters are defined in the test suite xml as subnodes of the comparator node.

Each Data Filter has predefined type of data on which it operates.

See Urls.

Currently, running an AET suite requires using aet-maven-plugin which is an AET client application.

- Maven installed (recommended version - 3.0.4),
- Proper version of the AET Maven plugin installed,
- Well-formed and valid xml test suite file available (described in detail in the Defining Suite chapter),
- pom.xml file with defined aet-maven-plugin configuration (described below).

This file (pom.xml) is a Maven configuration file that contains information about the project and configuration details used by Maven to build the project.

Running an AET suite requires creating and configuring such a file. The file presented below can be used as a template for setting up AET suite runs:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>{PROJECT-GROUP}</groupId>

<artifactId>{PROJECT-NAME}</artifactId>

<version>1.0.0</version>

<packaging>pom</packaging>

<name>Tests</name>

<url>http://www.example.com</url>

<properties>

<aet.version>{PLUGIN-VERSION}</aet.version>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

</properties>

<build>

<plugins>

<plugin>

<groupId>com.cognifide.aet</groupId>

<artifactId>aet-maven-plugin</artifactId>

<version>${aet.version}</version>

</plugin>

</plugins>

</build>

</project>

The user should configure three variables before proceeding to the next steps:

- {PROJECT-GROUP}, which is the group the project belongs to. It should follow the package name rules, i.e. it is a reversed domain name controlled by the project owner and consists of lowercase letters and dots,
  - example: com.example.test
- {PROJECT-NAME}, which is the build identifier for the Maven tool. It should consist only of lowercase letters and - characters,
  - example: aet-sanity-test
- {PLUGIN-VERSION}, which should be set to the aet-maven-plugin version currently used,
  - example: 1.0.0

Having the version as the maven property (${aet.version}) enables defining this parameter from the command line later, e.g. -Daet.version=1.1.0.

Running the AET suite with AET Maven plugin from the command line can be done by invoking a maven command in the directory where the pom.xml file has been defined:

mvn aet:run -DtestSuite=FULL_PATH_TO_TEST_SUITE

The testSuite parameter is the path to the xml suite configuration file.
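When suites are launched from a script rather than typed by hand, the command line can be assembled programmatically. The sketch below is a hypothetical helper that builds the argument list using only the goal and properties mentioned in this document (aet:run, testSuite and aet.version); it does not run Maven itself.

```python
import os
from typing import Optional


def aet_run_command(suite_path: str, aet_version: Optional[str] = None) -> list[str]:
    """Build the argument list for running an AET suite with the Maven plugin."""
    # The guide asks for the full path to the suite file, so make it absolute.
    command = ["mvn", "aet:run", f"-DtestSuite={os.path.abspath(suite_path)}"]
    if aet_version is not None:
        # Optional override of the aet.version property defined in pom.xml.
        command.append(f"-Daet.version={aet_version}")
    return command


command = aet_run_command("suite.xml", aet_version="1.1.0")
```

The resulting list can be passed to `subprocess.run(command, cwd=pom_directory, check=True)`, where `pom_directory` stands for the directory containing pom.xml.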

During test suite processing there will be information on its progress displayed in the console. It reflects how many artifacts were currently collected, compared and reported. When processing is finished the information about the processing status - BUILD SUCCESS or BUILD FAILURE - is displayed in the console.

When the test run completes, the resulting report files can be found in the maven run target folder.

Check Client Application for more details about aet-maven-plugin.

Generally it is a good idea to create a separate SCM repository (e.g. GIT or SVN) for AET suites. This will enable running AET suites using Jenkins easily.

AET test progress is reported in real time and can be viewed on the console. This progress information is accessible in two ways: from the command line and through a Jenkins job. To see the progress in Jenkins:

- log on Jenkins

- choose proper build execution from Build history panel and

- click Console Output.

For every test suite started the execution information is provided in the progress log:

[INFO] ********************************************************************************

[INFO] ********************** Job Setup finished at 10:14:43.249.**********************

[INFO] *** Suite is now processed by the system, progress will be available below. ****

[INFO] ********************************************************************************

During test processing detailed information about actual progress is displayed as in the following example:

...

[INFO] [06:34:20.680]: COLLECTED: [success: 0, total: 72] ::: COMPARED: [success: 0, total: 0]

[INFO] [06:34:31.686]: COLLECTED: [success: 1, total: 72] ::: COMPARED: [success: 1, total: 1]

[INFO] [06:34:35.689]: COLLECTED: [success: 2, total: 72] ::: COMPARED: [success: 1, total: 2]

[INFO] [06:34:36.691]: COLLECTED: [success: 2, total: 72] ::: COMPARED: [success: 2, total: 2]

[INFO] [06:34:43.695]: COLLECTED: [success: 3, total: 72] ::: COMPARED: [success: 2, total: 3]

[INFO] [06:34:44.698]: COLLECTED: [success: 3, total: 72] ::: COMPARED: [success: 3, total: 3]

...

where:

collected - shows results of collectors' work - how many artifacts have been successfully collected and what is the total number of all artifacts to be collected,

compared - shows results of comparators' work - how many artifacts have been successfully compared and what is the total number of all artifacts to be compared. The total number of artifacts to be compared depends on collectors' work progress: it increases as the number of successfully collected artifacts increases.

If there are problems during processing, warning information with some description of processing step and its parameters is displayed:

[WARN] CollectionStep: source named source with parameters: {} thrown exception. TestName: comparator-Source-Long-Response-FAILED UrlName: comparators/source/failed_long_response.jsp Url: http://192.168.123.100:9090/sample-site/sanity/comparators/source/failed_long_response.jsp

In this example source collector failed to collect necessary artifacts. This information is subsequently reflected in the progress log:

...

[INFO] [06:36:44.832]: COLLECTED: [success: 46, failed: 1, total: 72] ::: COMPARED: [success: 46, total: 46]

[INFO] [06:36:50.837]: COLLECTED: [success: 47, failed: 1, total: 72] ::: COMPARED: [success: 47, total: 47]

[INFO] [06:36:52.840]: COLLECTED: [success: 48, failed: 1, total: 72] ::: COMPARED: [success: 47, total: 48]

...

In the example above one artifact has failed during collection phase.
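The progress lines shown above follow a regular shape, so they can be read by a script, for instance to plot progress or to detect failures early. The following is a minimal sketch, not part of AET: the function name `parse_progress` is hypothetical, and it assumes that the `failed` counter is simply omitted while it is zero and that the COMPARED group may carry it in the same way.

```python
import re

# Matches one counter group such as "[success: 46, failed: 1, total: 72]".
# The "failed" counter is optional because the log only shows it after a failure.
_COUNTERS = r"\[success: (\d+)(?:, failed: (\d+))?, total: (\d+)\]"
_PROGRESS = re.compile(r"COLLECTED: " + _COUNTERS + r" ::: COMPARED: " + _COUNTERS)


def parse_progress(line):
    """Return the counters of one progress line, or None for other log lines."""
    match = _PROGRESS.search(line)
    if match is None:
        return None
    # A missing "failed" group is treated as zero failures (assumption).
    values = [int(group) if group is not None else 0 for group in match.groups()]
    keys = ("success", "failed", "total")
    return {
        "collected": dict(zip(keys, values[:3])),
        "compared": dict(zip(keys, values[3:])),
    }


line = ("[INFO] [06:36:52.840]: COLLECTED: [success: 48, failed: 1, total: 72] "
        "::: COMPARED: [success: 47, total: 48]")
progress = parse_progress(line)
```

Lines that do not contain progress counters, such as the final BUILD SUCCESS line, yield `None` and can be skipped by the caller.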

When the AET test processing completes, information about the received reports and the processing status (BUILD SUCCESS or BUILD FAILURE) is shown on the console, as in the example below:

[INFO] Received report message: ReportMessage{company=aet-demo-sanity, project=demo-sanity-test, testSuiteName=main, status=OK, environment=win7-ff16, domain=http://192.168.123.100:9090/sample-site/sanity/, correlationId=aet-demo-sanity-demo-sanity-test-main-1426570459612}

[INFO] [06:38:03.549]: COLLECTED: [success: 71, failed: 1, total: 72] ::: COMPARED: [success: 71, total: 71]

[INFO] Received report message: ReportMessage{company=aet-demo-sanity, project=demo-sanity-test, testSuiteName=main, status=OK, environment=win7-ff16, domain=http://192.168.123.100:9090/sample-site/sanity/, correlationId=aet-demo-sanity-demo-sanity-test-main-1426570459612}

[INFO] Total: 2 of 2 reports received.

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 3:45.645s

[INFO] Finished at: Tue Mar 17 06:38:03 CET 2015

[INFO] Final Memory: 14M/246M

[INFO] ------------------------------------------------------------------------

BUILD SUCCESS - this status means that test processing finished successfully and the reports were generated in the target folder.

BUILD FAILURE - this status means that there was a technical problem during processing, for example the database is not responding, and it is not possible to receive reports.

The Jenkins console output presents the same information as described above, but if the test suite is defined to generate an xunit-report, additional information such as JUnit processing is logged on the console:

[xUnit] [INFO] - Starting to record.

[xUnit] [INFO] - Processing JUnit

[xUnit] [INFO] - [JUnit] - 1 test report file(s) were found with the pattern 'test-suite/target/xunit-report.xml' relative to '/var/lib/jenkins/jobs/aet-sanity-test-integration/workspace' for the testing framework 'JUnit'.

[xUnit] [INFO] - Converting '/var/lib/jenkins/jobs/aet-sanity-test-integration/workspace/test-suite/target/xunit-report.xml' .

[xUnit] [INFO] - Check 'Failed Tests' threshold.

[xUnit] [INFO] - The new number of tests for this category exceeds the specified 'new unstable' threshold value.

[xUnit] [INFO] - Setting the build status to UNSTABLE

[xUnit] [INFO] - Stopping recording.

Build step 'Publish xUnit test result report' changed build result to UNSTABLE

Finished: UNSTABLE

The meaning of a 'Successful' and a 'Failed' build is quite different here, because the final build status depends mainly on test results and the thresholds configuration. The build can end with the BUILD SUCCESS status (which means that all workers - collectors, comparators, reporters - finished their work and proper reports were generated), but the final Jenkins build status can be, for example, UNSTABLE because there were some new test failures.

A Jenkins build is considered UNSTABLE (yellow) or FAILURE (red) if the number of new failed tests (tests that failed now, but did not fail in the previous run) or the total number of failed tests exceeds the specified thresholds. For example: when the "yellow total" threshold is set to 0 and one or more test cases failed, the build is marked as UNSTABLE.
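The threshold rule described above can be sketched as a small function. This is only an illustration of the rule as stated in this section, not the code of the Jenkins xUnit plugin; the function name and the threshold keys (`yellow_total`, `yellow_new`, `red_total`, `red_new`) are hypothetical, and it assumes that an unset threshold is never exceeded.

```python
def jenkins_status(total_failed, new_failed, thresholds):
    """Map failed-test counts to a build status.

    thresholds is a dict with the optional keys "yellow_total", "yellow_new",
    "red_total" and "red_new" (hypothetical names). A missing key means that
    the threshold is not configured.
    """
    def exceeded(key, count):
        limit = thresholds.get(key)
        return limit is not None and count > limit

    # Red thresholds take precedence over yellow ones.
    if exceeded("red_total", total_failed) or exceeded("red_new", new_failed):
        return "FAILURE"
    if exceeded("yellow_total", total_failed) or exceeded("yellow_new", new_failed):
        return "UNSTABLE"
    return "SUCCESS"


# The example from the text: "yellow total" set to 0 and one failed test case.
status = jenkins_status(total_failed=1, new_failed=0, thresholds={"yellow_total": 0})
```

With these inputs the sketch returns UNSTABLE, which matches the example in the paragraph above.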

AET tests can use a number of useful features which check different elements of the page. You can find more information about them in subpages of this page.

Below you can find a few common cases which demonstrate how to use those features.

This is the case where you want to test layout of some page by making a screenshot of page in specified resolution. Your page has some dynamic content e.g. carousel or advertisement and you don't want it to influence the results of your test. The following code snippet shows the test suite for such case:

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="company" project="project">

<test name="first-test" useProxy="rest">

<collect>

<open/>

<hide xpath="//div[@id='mw-panel']" />

<resolution width="800" height="600" />

<sleep duration="1500"/>

<screen/>

</collect>

<compare>

<screen comparator="layout"/>

</compare>

<urls>

<url href="https://en.wikipedia.org/wiki/Main_Page"/>

</urls>

</test>

</suite>

This test checks the layout of Wikipedia's main page. After the page is opened, the Hide Modifier hides the navigation bar on the left side of the page. Then the Resolution Modifier sets the screenshot resolution to 800x600 and the Screen Collector takes a screenshot. Finally, the Layout Comparator compares the page layout with the previous test run.

This is the case when you want to check the status codes of requests generated by page and you are only interested in specific range of codes, e.g. client errors (codes 400-499). The following code snippet shows the test suite for such case:

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="company" project="project">

<test name="first-test" useProxy="rest">

<collect>

<open/>

<status-codes />

</collect>

<compare>

<status-codes filterRange="400,499" />

</compare>

<urls>

<url href="https://en.wikipedia.org/wiki/Main_Pagee"/>

</urls>

</test>

</suite>

This test uses the Status Codes Collector to gather status codes for the given url (which in this case points to a non-existent resource). Then the Status Codes Comparator is used to display status codes that fall within the range from 400 to 499.

This is the case when you want to dismiss a dialog asking for a consent for storing cookies which has to be displayed if the page needs to be compliant with EU legislation. Some pages use cookies to determine if the dialog should be displayed. The following code snippet shows the test suite for such case:

<suite name="test-suite" company="company" project="project">

<test name="first-test" useProxy="rest">

<collect>

<modify-cookie action="add" cookie-name="eu_cn" cookie-value="1" />

<open/>

<sleep duration="1500"/>

<screen/>

</collect>

<compare>

<screen comparator="layout"/>

</compare>

<urls>

<url href="http://example.com/"/>

</urls>

</test>

</suite>

This test uses the Cookie Modifier to add a cookie named eu_cn with value 1, which in this example indicates that the user has already given consent to store cookies. After that the screenshot is collected and the layout of the page is compared with the previous test.

This is the case when you want to check if there are any JavaScript errors on the page. You know that, for example, some third party library used has an error but you don't want it to affect your test. The following code snippet shows the test suite for such case:

<suite name="test-suite" company="company" project="project">

<test name="first-test" useProxy="rest">

<collect>

<open/>

<js-errors/>

</collect>

<compare>

<js-errors>

<js-errors-filter error="Uncaught ReferenceError: variable is not defined"/>

</js-errors>

</compare>

<urls>

<url href="http://example.com/"/>

</urls>

</test>

</suite>

This test uses the JS Errors Collector to collect JavaScript errors. Then the JS Errors Comparator is used to display the issues. Within the comparator, a JS Errors Data Filter that ignores the specified error is defined.

This is the case, when you want to check source of the page but you want to exclude some code from comparison. It could be, for instance, an embedded analytics script. The following code snippet shows the test suite for such case:

<suite name="test-suite" company="company" project="project">

<test name="first-test" useProxy="rest">

<collect>

<source />

</collect>

<compare>

<source comparator="source" compareType="allFormatted">

<remove-nodes xpath="//script" />

</source>

</compare>

<urls>

<url href="https://en.wikipedia.org/wiki/Main_Page"/>

</urls>

</test>

</suite>

This test uses the Source Collector to gather source code. Then the Source Comparator compares the source of the page with the previous test. The applied Remove Nodes Data Filter removes all <script> nodes from the source before the comparison takes place.

The Open module is a special operand for the collect phase. It is responsible for opening the web page under a given url and preparing the browser environment to perform a chain of collections and modifications.

The second use of this module is to let the user easily perform actions before the page is opened, such as modifying headers, cookies etc.

| ! Open module |

|---|

| Each collect phase must contain open module. |

| ! Note |

|---|

| In some cases it is recommended to use **[[Sleep Modifier |

Module name: open

No parameters

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="open-test">

<collect>

...

<!-- example action before page is opened -->

<open/>

<!-- collect page data -->

...

</collect>

<compare>

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

A collector is a module whose main task is to collect data from tested pages.

Each collector presented in the section below consists of two elements:

- module name (produced resource type),

- parameters.

The module name is a unique identifier of system functionality. Each collector has its own unique name, and this name must also be unique among all modules in the collect phase. It is always the name of the tag that defines the collector.

The AET System does not know what work a collector will perform when it reads the suite definition. The only thing it knows is the module name. The system recognizes which collector should be called by matching the definition from the collect phase with the names registered in the system. When no collector with the defined name is found in the system, a system exception occurs and the test is not performed. This solution enables adding new features to the system without system downtime (just by installing a new feature bundle).

Each collector produces a resource of a defined type. This type can later be recognized by comparators and data filters. Two collectors can't produce data with the same resource type. The produced resource type is always equal to the collector module name.

Parameters are a set of key-value pairs through which the user can pass configuration and information to the collector. Parameters for collectors are usually optional and typically extend the collector's functionality. However, there is one special property: name. A collector with a name set can be treated in a special way by comparators (some comparators may look only for collection results from specifically named collectors), for example:

...

<collect>

<open/>

<sleep duration="1000"/>

<screen width="1280" height="1024" name="desktop"/>

<screen width="768" height="1024" name="tablet"/>

<screen width="320" height="480" name="mobile"/>

</collect>

<compare>

<screen collectorName="mobile"/>

</compare>

...

During the collect phase, three screenshots with different resolutions will be taken and saved to the database. However, only one of them (mobile) will be compared with the pattern during the comparison phase and presented in the report (under the "Layout For Mobile" section).

Following picture presents elements described earlier:

where:

- Module name (produced resource type),

- Parameters,

- Special collector property: name,

- Special comparator property: collectorName.

| ! Beta Version |

|---|

| This AET Plugin is currently in BETA version. |

Accessibility Collector is responsible for collecting validation result containing violations of a defined coding standard found on a page. It uses HTML_CodeSniffer tool to find violations.

Module name: accessibility

| Parameter | Value | Description | Mandatory |

|---|---|---|---|

| standard | WCAG2A, WCAG2AA (default), WCAG2AAA | Parameter specifies a standard against which the page is validated. More information on standards: WCAG2 | no |

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="source-test">

<collect>

...

<accessibility standard="WCAG2AAA" />

...

</collect>

<compare>

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

| ! Beta Version |

|---|

| This AET Plugin is currently in BETA version. |

Client Side Performance Collector is responsible for collecting performance analysis result. It uses YSlow tool to perform analysis.

Module name: client-side-performance

| ! Important information |

|---|

| In order to use this collector proxy must be used. |

No parameters.

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project" environment="win7-ff16">

<test name="source-test" useProxy="rest">

<collect>

...

<client-side-performance />

...

</collect>

<compare>

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

Cookie Collector is responsible for collecting cookies.

Module name: cookie

No parameters.

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="cookie-test">

<collect>

...

<cookie/>

...

</collect>

<compare>

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

JS Errors Collector is responsible for collecting JavaScript errors occurring on a given page.

Module name: js-errors

No parameters.

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="js-errors-test">

<collect>

...

<js-errors/>

...

</collect>

<compare>

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

| ! Compare collected screenshot |

|---|

| Please remember that defining a collector and not using it during the comparison phase is a configuration error. From now on, suites that define screen collection and do not use it during the comparison phase will be rejected during the suite validation phase. |

| ! Notice |

|---|

| Since AET 1.4.0 version AET Screen Collector will have no parameters. Please use [[Resolution Modifier |

Screen Collector is responsible for collecting screenshot of the page under given URL.

Module name: screen

Note that you cannot maximize the window and specify the dimension at the same time. If no parameters provided, default browser size is set before taking screenshot.

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="screen-test">

<collect>

...

<screen name="desktop" />

...

</collect>

<compare>

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

Instead of

<screen width="1280" height="1024" name="desktop" />

please use:

<resolution width="1280" height="1024"/>

<sleep duration="1000" />

<screen name="desktop" />

| ! Note |

|---|

| Before taking screenshot [[Hide modifier |

Source Collector is responsible for collecting the source of the page under a given URL. Unlike other collectors, the source collector doesn't use the web driver; it connects directly to the web server.

Module name: source

| ! Note |

|---|

| System is waiting up to 20 seconds before request is timed out. This parameter is not configurable. |

No parameters.

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="source-test">

<collect>

...

<source />

...

</collect>

<compare>

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

Status Codes Collector is responsible for collecting status codes of links to resources on the page under a given URL.

Module name: status-codes

| ! Important information |

|---|

| In order to use this collector *[[proxy |

No parameters.

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="status-codes-test">

<collect>

...

<status-codes />

...

</collect>

<compare>

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

A modifier is a module which performs a particular modification on data before collection happens.

Each modifier consists of two elements:

- module name,

- parameters.

The module name is a unique identifier for each modifier (and each module in the collect phase).

Parameters are a set of key-value pairs through which the user can pass configuration and information to the modifier. Parameters for modifiers can be divided into two groups:

- mandatory - parameters without which modification will not be possible,

- optional - passing this parameter is not obligatory, usually they trigger some functionality extensions.

Click Modifier performs a click action on an element on the page. When the element is not found (e.g. because of an improper xpath value), a warning will be logged but the test will proceed to the next steps.

Module name: click

| ! Important information |

|---|

| In order to use this modifier it must be declared after open module in test suite XML definition. Remember that element that will be clicked must be visible in the moment of performing click action. |

| Parameter | Default value | Description | Mandatory |

|---|---|---|---|

| xpath | | xpath of element to click | yes |

| timeout | | Timeout for element to appear, in milliseconds. Max value of this parameter is 15000 milliseconds (15 seconds). | yes |

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="click-test">

<collect>

<open />

...

<click xpath="//*[@id='header_0_container1_0_pRow']/div[1]/div/div/a/img" timeout="3000" />

<sleep duration="2000" />

...

<resolution width="1280" height="800" />

<screen name="desktop" />

...

</collect>

<compare>

...

<screen comparator="layout" />

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

Cookie Modifier allows modifying cookies for a given page, i.e. adding or removing some cookies.

Module name: modify-cookie

| ! Important information |

|---|

| In order to use this modifier it must be declared before open module in test suite XML definition. When declared after open module (but before Cookie Collector) it can be used as filter for Cookie Collector. |

| Parameter | Value | Description | Mandatory |

|---|---|---|---|

| action | add, remove | Specifies what action should be taken with given cookie | yes |

| cookie-name | | Cookie name | yes |

| cookie-value | | Cookie value | Yes, if add action is chosen |

| cookie-domain | | Cookie domain attribute value | No, used only if add action is chosen |

| cookie-path | | Cookie path attribute value | No, used only if add action is chosen |

| ! Note |

|---|

| If cookie-domain is provided, WebDriver will reject the cookie unless the Domain attribute specifies a scope for the cookie that would include the origin server. For example, the user agent will accept a cookie with a Domain attribute of example.com or of foo.example.com from foo.example.com, but the user agent will not accept a cookie with a Domain attribute of bar.example.com or of baz.foo.example.com. For more information read here. |

| ! Note |

|---|

| If cookie-path is provided, WebDriver will reject the cookie unless the path portion of the url matches (or is a subdirectory of) the cookie's Path attribute, where the %x2F (/) character is interpreted as a directory separator. |
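The two matching rules from the notes above can be sketched as follows. This is a simplified illustration of domain and path matching, not WebDriver's actual implementation; the function names are hypothetical and the sketch ignores special cases such as IP addresses and public suffixes.

```python
def domain_matches(host, cookie_domain):
    """True when cookie_domain covers host (the host itself or a parent domain)."""
    host = host.lower()
    cookie_domain = cookie_domain.lower().lstrip(".")
    return host == cookie_domain or host.endswith("." + cookie_domain)


def path_matches(request_path, cookie_path):
    """True when request_path equals cookie_path or lies below it."""
    if request_path == cookie_path:
        return True
    if not request_path.startswith(cookie_path):
        return False
    # "/" is the directory separator: "/docs" covers "/docs/a" but not "/docsX".
    return cookie_path.endswith("/") or request_path[len(cookie_path)] == "/"


# The cases from the note, for a page served from foo.example.com.
accepted = [d for d in ("example.com", "foo.example.com",
                        "bar.example.com", "baz.foo.example.com")
            if domain_matches("foo.example.com", d)]
```

Checking a planned cookie-domain and cookie-path value this way before adding it to a suite can explain why a cookie silently fails to appear in the browser.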

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="cookie-modify-test">

<collect>

...

<modify-cookie action="add" cookie-name="sample-cookie" cookie-value="sample-cookie-value"/>

<modify-cookie action="remove" cookie-name="another-cookie"/>

...

<open />

...

</collect>

<compare>

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

Header Modifier is responsible for injecting additional headers into the page request before the page is opened for testing.

Module name: header

| ! Important information |

|---|

| In order to use this modifier it must be declared before open module in test suite XML definition and *[[proxy |

| Parameter | Value | Description | Mandatory |

|---|---|---|---|

| key | x | Key for header | yes |

| value | y | Value for header | yes |

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="header-modify-test">

<collect>

...

<header key="Authorization" value="Basic emVuT2FyZXVuOnozbkdAckQZbiE=" />

...

<open />

...

</collect>

<compare>

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

Hide Modifier is responsible for hiding page elements that are unnecessary for the test. It affects Screen Collector results. Hiding is done by setting the css visibility property to hidden. It works with webDriver only. You can hide many elements by defining many <hide> nodes. If an xpath covers more than one element, all elements that match the defined xpath will be hidden.

Module name: hide

| ! Important information |

|---|

| In order to use this modifier it must be declared after open module in test suite XML definition. |

| Parameter | Value | Description | Mandatory |

|---|---|---|---|

| xpath | xpath_to_element | Xpath to element(s) to hide | yes |

| leaveBlankSpace | boolean | Defines whether the element(s) should be invisible (same effect as visibility: hidden) or not displayed (same effect as display: none). When set to true, blank and transparent space is left in place of the hidden element; otherwise the element is completely removed from the view. When not defined, the hide modifier behaves as if leaveBlankSpace were set to true. | no |

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="hide-test">

<collect>

<open />

...

<hide xpath="//*[@id='logo']" />

<hide xpath="//*[@id='primaryNavMenu']/li[2]/a/div" />

...

<resolution width="1280" height="800" />

<screen name="desktop" />

...

</collect>

<compare>

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

| ! Note |

|---|

| This module is no longer supported and may not work correctly. It may be removed in future version. |

Login Modifier allows logging in to pages whose access is secured with a login form. If an input element is not available (not loaded yet), the Login Modifier will wait up to 10s for the login input, then for the password input and finally for the submit button to appear. If any element is not ready in time, a TimeoutException will be thrown.

Module name: login

| ! Important information |

|---|

| In order to use this modifier it must be declared before open module in test suite XML definition. |

| Parameter | Description | Mandatory | Default value |

|---|---|---|---|

| login | User's login | no | admin |

| password | Password | no | admin |

| login-page | Url to login page | no | http://localhost:4502/libs/granite/core/content/login.html |

| login-input-selector | Xpath expression for login input | no | //input[@name='j_username'] |

| password-input-selector | Xpath expression for password input | no | //input[@name='j_password'] |

| submit-button-selector | Xpath expression for submit button | no | //*[@type='submit'] |

| login-token-key | Name of the cookie received after a successful login | no | login-token |

| timeout | Number of milliseconds (between 0 and 10000) that the modifier will wait for the login page response after submitting credentials. | no | 0 |

| force-login | Enforces login even when login cookie is present. | no | false |

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="login-test">

<collect>

<login login="user"

password="password"

login-page="http://192.168.180.19:5503/libs/cq/core/content/login.html"

login-input-selector="//input[@name='j_username']"

password-input-selector="//input[@name='j_password']"

submit-button-selector="//*[@type='submit']" />

<open />

...

</collect>

<compare>

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

Resolution Modifier is responsible for changing the browser screen size. It affects Screen Collector results.

| ! Note |

|---|

| Please note that the final resolution of screenshots may be different when a scrollbar is displayed. The default width of Firefox's scrollbar is 33px (so when you want to grab a viewport of width 1024, set the width parameter to 1057). |

Module name: resolution

| Parameter | Value | Description | Mandatory |

|---|---|---|---|

| maximize | false (default) | This property is deprecated and will be removed in future release. | |

| width | int (1 to 100000) | Window width | no |

| height | int (1 to 100000) | Window height | no |

| ! Important information |

|---|

| You cannot maximize the window and specify the dimension at the same time. If you specify height param you have to also specify width param and vice versa. |

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="resolution-modify-test">

<collect>

...

<resolution width="200" height="300"/>

<screen />

...

</collect>

<compare>

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

Sleep Modifier is responsible for temporarily pausing execution by putting the current thread to sleep. It is useful when page resources take a long time to load: it delays the subsequent collectors for some time.

Module name: sleep

| Parameter | Value | Description | Mandatory |

|---|---|---|---|

| duration | int (1 to 30000) | Sleep time, in milliseconds | yes |

| ! Important information |

|---|

| One sleep duration cannot be longer than 30000 milliseconds (30 seconds). Two consecutive sleep modifiers are not allowed. Total sleep duration (sum of all sleeps) in test collection phase cannot be longer than 120000 milliseconds (2 minutes). |
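The three limits above can be checked before a suite is submitted. The following is a minimal sketch of such a check, not AET's own suite validation; the function name and the representation of the collect phase as `(module_name, params)` tuples are assumptions made for the example.

```python
MAX_SINGLE_SLEEP_MS = 30000
MAX_TOTAL_SLEEP_MS = 120000


def sleep_rule_violations(steps):
    """Check a collect phase given as (module_name, params) tuples in suite order."""
    violations = []
    total = 0
    previous = None
    for name, params in steps:
        if name == "sleep":
            duration = int(params["duration"])
            total += duration
            if not 1 <= duration <= MAX_SINGLE_SLEEP_MS:
                violations.append("sleep duration %d is outside 1..30000 ms" % duration)
            if previous == "sleep":
                violations.append("two consecutive sleep modifiers")
        previous = name
    if total > MAX_TOTAL_SLEEP_MS:
        violations.append("total sleep %d ms exceeds 120000 ms" % total)
    return violations


# A collect phase similar to the examples in this document: no violations expected.
valid_phase = [("open", {}), ("sleep", {"duration": "3000"}), ("screen", {})]
problems = sleep_rule_violations(valid_phase)
```

An empty list means that the collect phase respects all three limits; each entry otherwise names one broken rule.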

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="sleep-test">

<collect>

...

<open />

...

<sleep duration="3000" />

...

</collect>

<compare>

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

Wait For Page Loaded Modifier waits until the page is loaded (all DOM elements are loaded; this does not wait for elements loaded dynamically, e.g. by JavaScript) or until a fixed amount of time is up. It works by counting the elements on the current page [by findElements(By.xpath("//*"))] in a loop. If the number of elements has increased since the last check, the loop continues (or stops when the timeout is reached). If the number of elements has not changed, the page is assumed to be loaded and the waiting ends.

Module name: wait-for-page-loaded

No parameters.

| ! Important information |

|---|

| Timeout for waiting is 10000 milliseconds. Page is checked every 1000 milliseconds. |
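The polling idea described above can be sketched as follows. This is an illustration of the described loop under stated assumptions, not the modifier's source code: `count_elements` is a hypothetical caller-supplied function standing in for the `findElements(By.xpath("//*"))` count, and the `sleep` parameter exists only so the loop can be exercised without real delays.

```python
import time


def wait_for_page_loaded(count_elements, timeout_ms=10000, interval_ms=1000,
                         sleep=time.sleep):
    """Poll until the element count stops growing or the timeout is reached.

    Returns True when the count stabilised and False when the timeout
    was reached first.
    """
    previous = count_elements()
    waited_ms = 0
    while waited_ms < timeout_ms:
        sleep(interval_ms / 1000.0)
        waited_ms += interval_ms
        current = count_elements()
        if current <= previous:
            return True  # no new elements since the last check
        previous = current
    return False


# Simulated page: the element count grows once and then stays the same.
counts = iter([10, 20, 20])
loaded = wait_for_page_loaded(lambda: next(counts), sleep=lambda seconds: None)
```

Because the check relies only on the element count, content that replaces existing elements without adding new ones is not detected, which is consistent with the remark that dynamically loaded elements are not waited for.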

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="wait-for-page-loaded-test">

<collect>

...

<open />

...

<wait-for-page-loaded />

...

</collect>

<compare>

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

A comparator is a module whose main task is to consume data and compare it with a pattern or against a defined set of rules.

Each comparator presented in the section below consists of three elements:

- consumed resource type,

- module name (comparator),

- parameters.

The consumed resource type is the name of the resource type consumed by the defined comparator. It is always the name of the tag that defines the comparator.

This name tells the system which resource type should be consumed by the defined comparator. When no comparator in the system can consume the defined resource type, a system exception occurs and the test is not performed. This solution enables adding new features to the system without system downtime (just by installing a new feature bundle).

Each comparator can consume only one type of resource.

The module name (the comparator property) is a special parameter: a unique name for the comparator type, treated as an interpretation of the given resource type. The system recognizes which comparator implementation should be called by this name. When no comparator property is defined, the system assumes the default comparator for the resource type:

- cookie -> CookieComparator,

- js-errors -> JSErrorsComparator,

- screen -> LayoutComparator,

- source -> SourceComparator,

- status-codes -> StatusCodesComparator.

An example of usage can be found in source comparison, for which two comparators exist: the W3C HTML5 Comparator and the Source Comparator. The example below shows sample usage:

...

<collect>

<open/>

<source/>

</collect>

<compare>

<source comparator="source"/>

<source comparator="w3c-html5"/>

</compare>

...

When a test defined as above is executed, only one collection of page source is performed, but the result of this collection is used twice during the comparison phase: first by the Source Comparator and then by the W3C HTML5 Comparator.

Parameters are a set of key-value pairs through which the user can pass configuration and information to the comparator. Parameters for comparators can be divided into two groups:

- mandatory - parameters without which the comparison will not be possible,

- optional - passing these parameters is not obligatory; usually they extend the comparator's functionality.

There is a special comparator property, collectorName, which is connected with the collector's name property. By combining collectorName with the collector's name property, the user can control which comparator instance compares the results collected by a particular collector. See the examples below:

...

<collect>

<open/>

<sleep duration="1000"/>

<resolution width="1280" height="1024" name="desktop"/>

<screen name="desktop"/>

<resolution width="768" height="1024" name="tablet"/>

<screen name="tablet"/>

<resolution width="320" height="480" name="mobile"/>

<screen name="mobile"/>

</collect>

<compare>

<screen collectorName="mobile"/>

<screen collectorName="tablet"/>

</compare>

...

The configuration above will trigger three screen collections (desktop, tablet and mobile) and two comparisons (mobile and tablet). The screenshot taken for desktop will not be compared.

...

<collect>

<open/>

<sleep duration="1000"/>

<resolution width="1280" height="1024" name="desktop"/>

<screen name="desktop"/>

<resolution width="768" height="1024" name="tablet"/>

<screen name="tablet"/>

<resolution width="320" height="480" name="mobile"/>

<screen name="mobile"/>

</collect>

<compare>

<screen/>

</compare>

...

The configuration above will trigger three screen collections (desktop, tablet and mobile) and three comparisons (desktop, tablet and mobile).

...

<collect>

<open/>

<sleep duration="1000"/>

<resolution width="1280" height="1024" name="desktop"/>

<screen name="desktop"/>

<resolution width="768" height="1024" name="tablet"/>

<screen name="tablet"/>

<resolution width="320" height="480" name="mobile"/>

<screen name="mobile"/>

</collect>

<compare>

<screen/>

<screen collectorName="tablet"/>

</compare>

...

The configuration above will trigger three screen collections (desktop, tablet and mobile) and four comparisons (desktop, tablet, mobile and one additional for tablet).

The following picture presents the definitions described earlier:

where:

- Consumed resource type,

- Special property: collectorName,

- Special property: comparator,

- Module name (comparator).

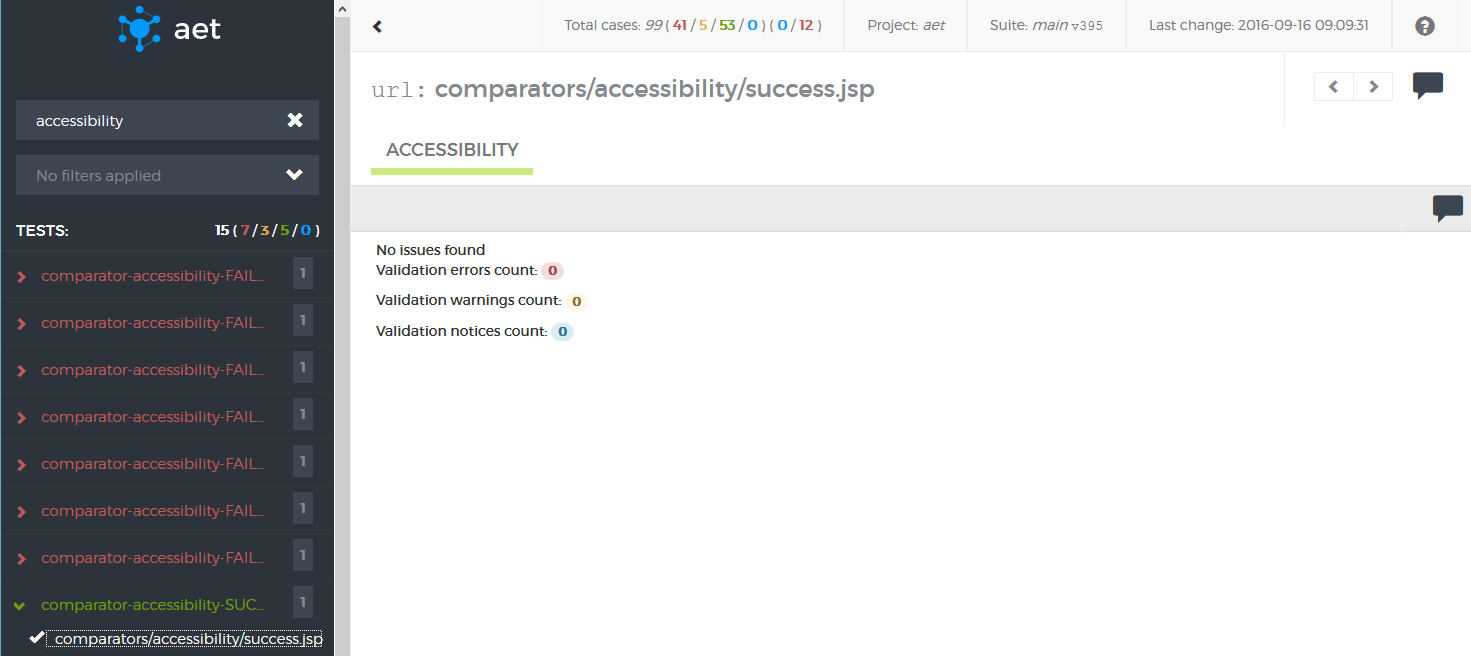

| ! Beta Version |

|---|

| This AET Plugin is currently in BETA version. |

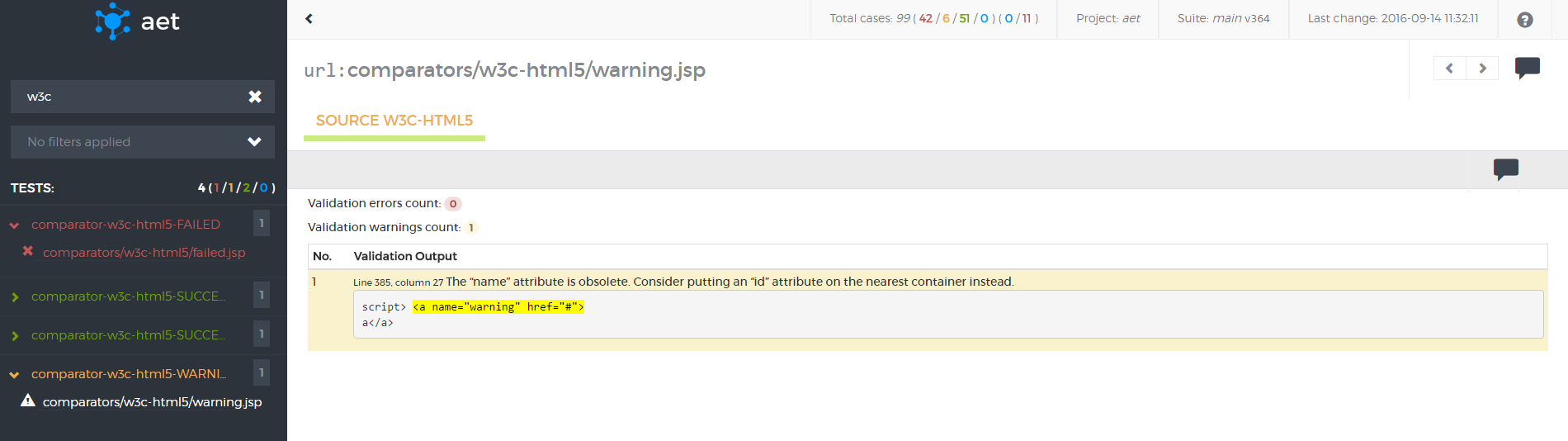

Accessibility Comparator is responsible for processing the collected accessibility validation result. It uses the HTML_CodeSniffer library.

Module name: accessibility

Resource name: accessibility

| Parameter | Value | Description | Mandatory |
|---|---|---|---|
| report-level | ERROR (default) | Only violations of type ERROR are displayed on report. | no |
| | WARN | Violations of type WARN and ERROR are displayed on report. | |
| | NOTICE | All violations are displayed on report. | |
| ignore-notice | boolean (default: true) | If ignore-notice=true, test status does not depend on the number of notices. If ignore-notice=false, notices are treated as warnings in calculating test status. Enforces report-level = NOTICE. | no |
| showExcluded | boolean (default: true) | Flag that says if excluded issues (see Accessibility Data Filter) should be displayed in report. By default set to true. | no |
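
As a rough illustration of how the optional parameters from the table can be combined, the compare fragment below makes notices count towards the test status and hides excluded issues. It is only a sketch of the compare phase; the parameter names and values come from the table above, and the collect phase is assumed to contain an accessibility collector.

```xml
<compare>
  <!-- ignore-notice="false": notices are treated as warnings when the test status
       is calculated; per the table this also enforces report-level = NOTICE -->
  <!-- showExcluded="false": issues excluded by the Accessibility Data Filter
       are not displayed in the report -->
  <accessibility ignore-notice="false" showExcluded="false"/>
</compare>
```

A complete suite using this comparator follows.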

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="accessibility-test">

<collect>

...

<accessibility />

...

</collect>

<compare>

...

<accessibility report-level="WARN" />

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

| ! Beta Version |

|---|

| This AET Plugin is currently in BETA version. |

Client Side Performance Comparator is responsible for processing the collected client side performance analysis result. It uses the YSlow tool to perform the comparison phase on the collected results.

Module name: client-side-performance

Resource name: client-side-performance

No parameters

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="client-side-performance-test">

<collect>

...

<client-side-performance />

...

</collect>

<compare>

...

<client-side-performance />

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

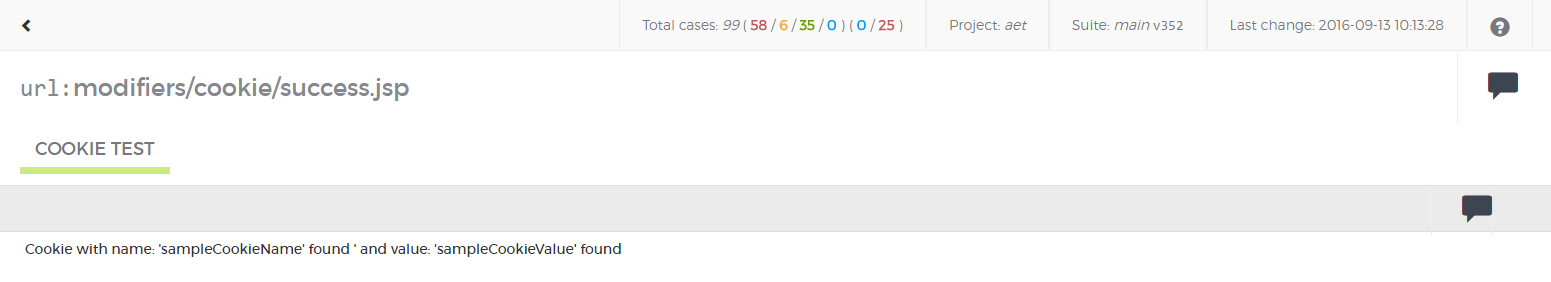

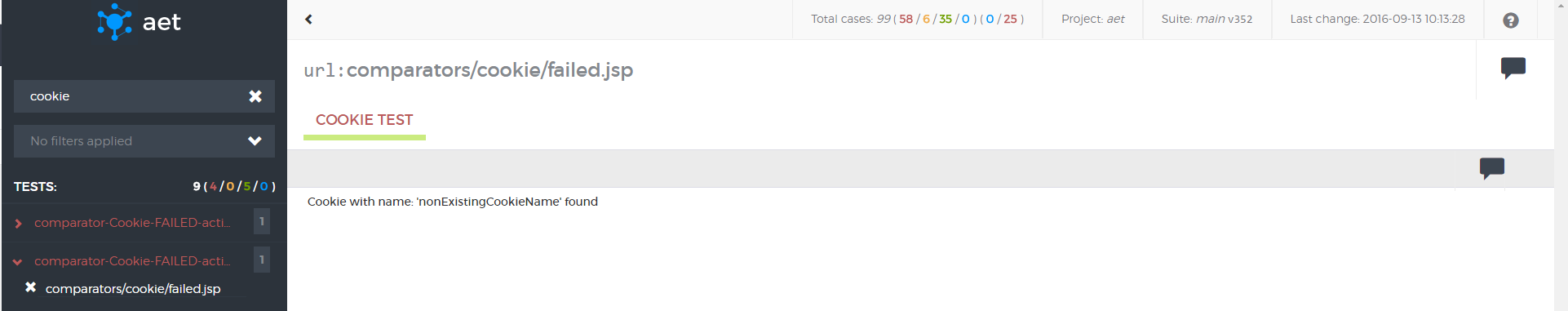

</suite>Cookie Comparator is responsible for processing of collected cookies. This can be simply listing of collected cookies, verifying if cookie exists or comparing collected cookie with pattern.

Cookie feature allows to collect patterns and can be rebased from report only in compare action mode*.*

Module name: cookie

Resource name: cookie

| Parameter | Value | Description | Mandatory |
|---|---|---|---|
| action | list | Displays the list of cookies. | no. If the action parameter is not provided, the default list action is performed. |
| | test | Tests if a cookie with the given name and value exists. | |
| | compare | Compares the current data with the pattern (compares only cookie names, values are ignored). | |
| cookie-name | | Name of the cookie to test, applicable only for test action. | yes, if action set to test |
| cookie-value | | Value of the cookie to test, applicable only for test action. | no |
| showMatched | boolean (default: true) | Works only in compare mode. Flag that says if matched cookies should be displayed in report. By default set to true. | no |
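
The fragment below is a hedged sketch of the test and compare actions described in the table. The cookie name and value (example-cookie, example-value) are placeholders invented for illustration, and running two cookie comparators against one collected resource is assumed to work the same way as the two source comparators shown earlier.

```xml
<compare>
  <!-- test action: checks that a cookie with the given name and value exists;
       the name and value here are hypothetical placeholders -->
  <cookie action="test" cookie-name="example-cookie" cookie-value="example-value"/>

  <!-- compare action: compares collected cookie names with the pattern and,
       with showMatched="false", leaves matched cookies out of the report -->
  <cookie action="compare" showMatched="false"/>
</compare>
```

The complete suite below uses the default list action instead.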

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="cookie-test">

<collect>

...

<cookie />

...

</collect>

<compare>

...

<cookie />

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

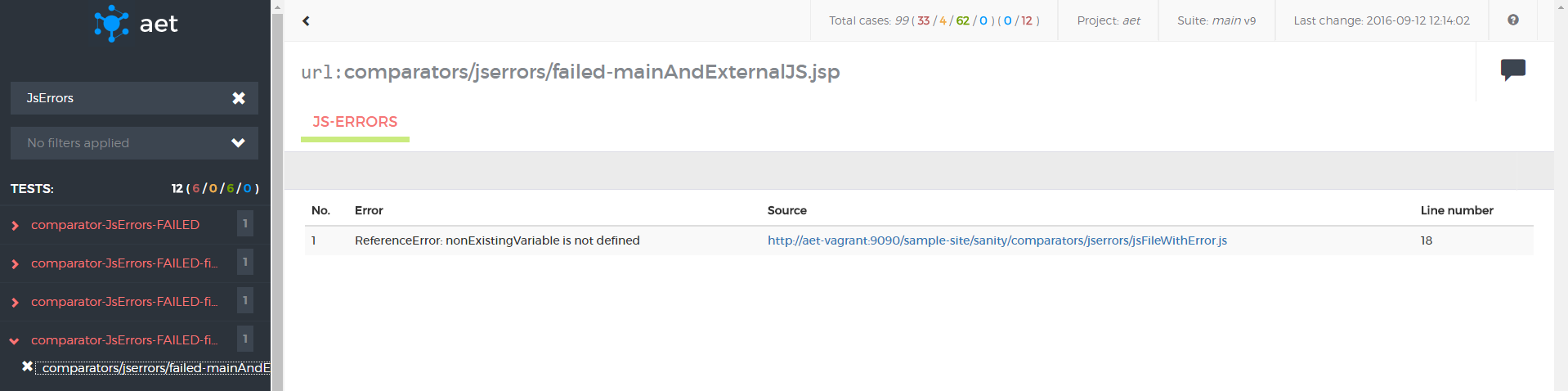

</suite>JS Errors Comparator is responsible for processing of collected javascript errors resource. In this case it is simply displaying list of javascript errors.

JS Errors feature do not allow to collect patterns, so it does not compare results with any patterns - rebase action is also not avaliable.

Module name: js-errors

Resource name: js-errors

No parameters

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="js-errors-test">

<collect>

...

<js-errors />

...

</collect>

<compare>

...

<js-errors />

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

| ! Important information |

|---|

| [[JS Errors Data Filter |

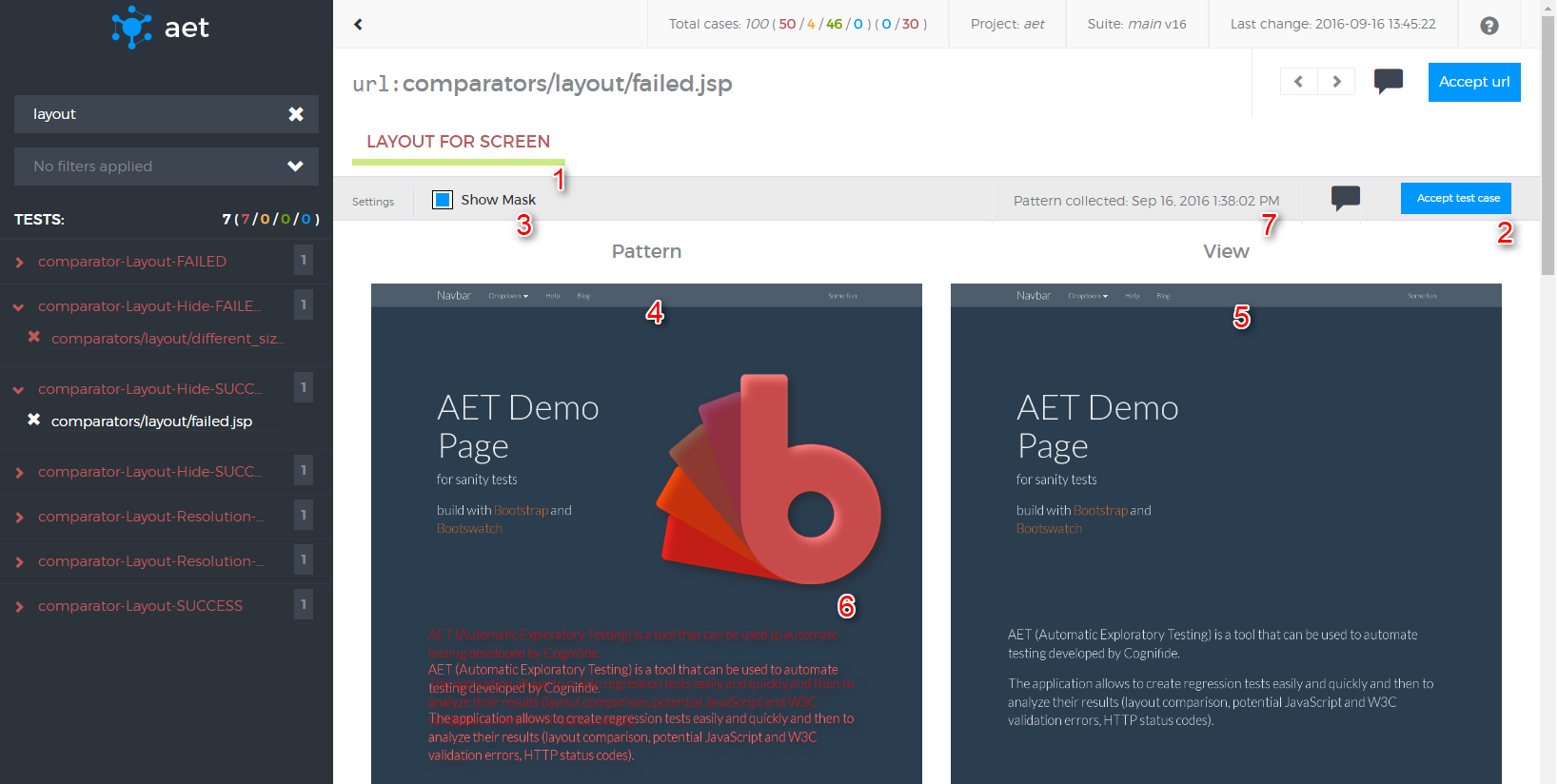

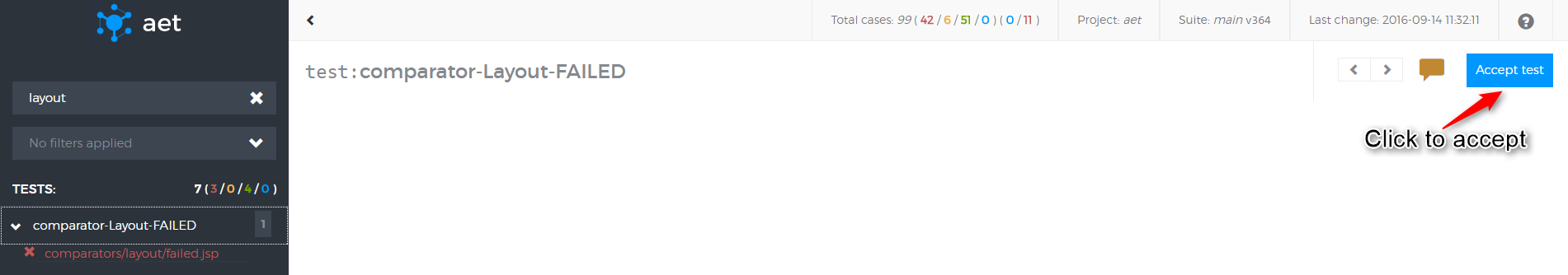

Layout Comparator is responsible for comparing the collected screenshot of a page with the pattern. It is the default comparator for the screen resource.

Can be rebased from report.

Module name: layout

Resource name: screen

No parameters

<?xml version="1.0" encoding="UTF-8" ?>

<suite name="test-suite" company="cognifide" project="project">

<test name="layout-compare-test">

<collect>

...

<screen />

...

</collect>

<compare>

...

<screen comparator="layout" />

...

</compare>

<urls>

...

</urls>

</test>

...

<reports>

...

</reports>

</suite>

Since AET 1.3, fast comparison of screenshots is available. The MD5 hash of the taken screenshot is matched against the current pattern. If the hashes are the same, the screenshot is treated as one without differences and no further comparison is performed.

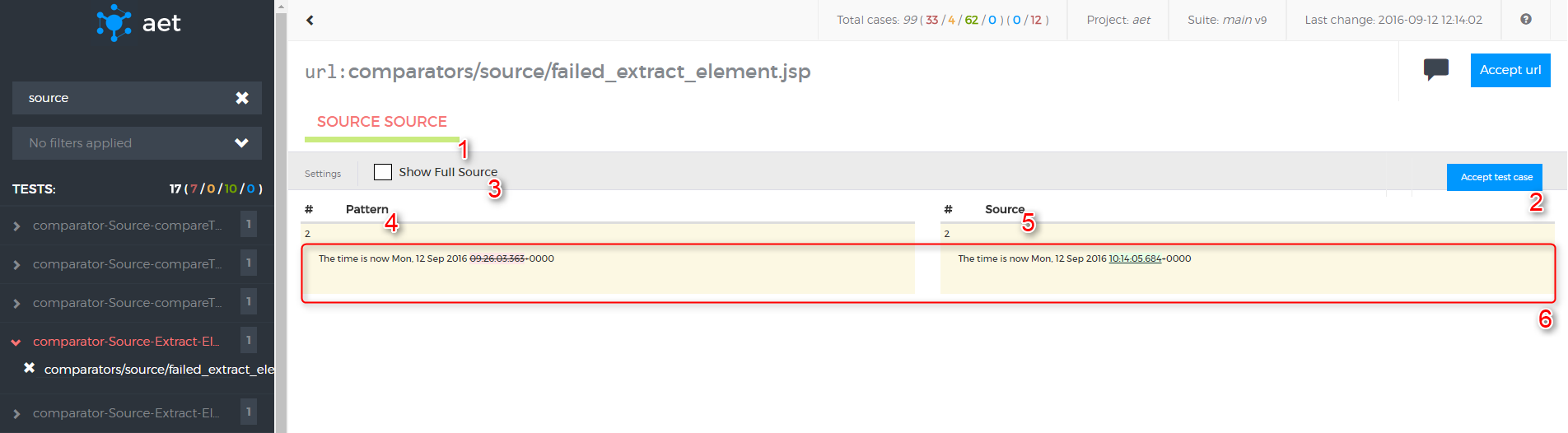

Source Comparator is responsible for comparing the collected page source with the pattern.

Can be rebased from report.

Module name: source

Resource name: source

| Parameter | Value | Description | Mandatory |
|---|---|---|---|
| compareType | content | Compare only text inside HTML nodes. Ignore formatting, tag names and attributes. | no. If compareType is not provided, the default all value is taken. |
| | markup | Compare only HTML markup and attributes. Ignore text inside HTML tags, formatting and white-spaces. Remove empty lines. | |
| | allFormatted | Compare full source with formatting and white-spaces ignored. Remove empty lines. | |
| | all | Compare all source (default). | |
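
To make the compareType values more concrete, here is a small sketch of a compare phase that checks only the markup. The attribute name and value are taken from the table above; the collect phase is assumed to contain a source collector.

```xml
<compare>
  <!-- compareType="markup": compares only HTML markup and attributes, ignoring
       text inside tags, formatting and white-spaces -->
  <source comparator="source" compareType="markup"/>
</compare>
```

Omitting compareType falls back to the all value, which compares the whole source.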

<?xml version="1.0" encoding="UTF-8" ?>