
Add support for virtual machines #142

Comments

|

Whilst you could do this by creating a non-racked device type for virtual devices, the issue is that they cannot be displayed on the location or rack pages. Some additional support for displaying this type of virtual device would be very handy. |

|

+1. We have a lot of VMs and I am creating them as devices in a single rack. I would like to be able to create VMs in a cluster or something similar.

|

|

+1 Virtual devices would be much appreciated. With lots of VMs and/or containers, it would be practical to have a "virtual device" with one or more interfaces, in turn having IPs tied to them. The "virtual device" would then be tied to a physical device (e.g. the server hosting those VMs and/or containers). This way, if you move a VM from one physical device to another, all you'd need to do is change the physical/virtual connection, and the IPAM would be updated (i.e. you only have to change one thing, and the IPAM documentation reflects that). |
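The proposal above can be illustrated with a minimal in-memory sketch. `PhysicalDevice`, `VirtualDevice`, `migrate()` and `ips_on_host()` are hypothetical names chosen for illustration, not NetBox models or APIs. The point being shown is that IPs hang off the virtual device, so a migration touches only one reference.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PhysicalDevice:
    """A physical server that can host virtual devices (hypothetical model)."""
    name: str


@dataclass
class VirtualDevice:
    """A VM or container; interfaces and IPs belong to it, not to the host."""
    name: str
    host: PhysicalDevice
    # Maps interface name -> list of IP addresses in CIDR notation.
    interfaces: dict[str, list[str]] = field(default_factory=dict)

    def migrate(self, new_host: PhysicalDevice) -> None:
        # Moving the VM is a single change; its IP assignments stay attached to it.
        self.host = new_host


def ips_on_host(host: PhysicalDevice, vms: list[VirtualDevice]) -> list[str]:
    """Collect every IP of the virtual devices currently placed on `host`."""
    return [
        ip
        for vm in vms
        if vm.host is host
        for ips in vm.interfaces.values()
        for ip in ips
    ]


if __name__ == "__main__":
    hv1, hv2 = PhysicalDevice("hv1"), PhysicalDevice("hv2")
    web = VirtualDevice("web01", hv1, {"eth0": ["192.0.2.10/24"]})
    web.migrate(hv2)
    print(ips_on_host(hv1, [web]), ips_on_host(hv2, [web]))
```

Identity (`is`) rather than equality is used to match hosts, since two distinct servers could share a name in this toy model.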

|

I think this is a great idea that many commercial DCIM products also provide modeling for. Given the state of the underlay, the documentation/features that have not been focused on much (RPC), and the foundation that needs to be set up to support VMs, this feels like it's some time off in the future, like version 4.0 or later? VMs are something that can be moved between hypervisors as needed or on demand. Furthermore, under current DevOps mantras a VM is here today, gone tomorrow, for a number of reasons. If that is the intent of the project, I vote to add it to the roadmap now, but far off in the future. Given how OpenStack and VMware handle bare metal / VM inventory very differently, there are a host of other items we will have to sort out to develop a model like the one planned for the network in netbox and auditing via RPC. |

|

I would suggest that this could simply be achieved by creating a new device type that is associated with the location rather than the rack or container device. This way all VMs can be documented and the IPAM would be accurate. Currently IPAM is missing all Virtual machines which account for approx 90% of all IP allocations for my company. Being able to record these IPs against a virtual device type, regardless of its underlying hypervisor type, would be a great help. |

|

Obviously, this is all opinion here, but looking at the IPAM alternatives and the roadmap for netbox, I would suggest we do not rush to add virtual devices to the current system until we better map this out. phpIPAM (a very popular IPAM) handles IPs as objects linked to names/descriptions. Yes, the IPs/interfaces of a VM or server in that case are not related to one another by a common object ID, but they can be correlated when searching and using a common naming standard. It also gets tedious creating all of these VM objects/IT assets manually. Overlays, virtual hosts, and user/server endpoints need some thoughtful consideration over the next few months. It just feels like other parts of the model need to mature before this is tackled. For example, by first instituting DNS lookups, the tedious work of creating better information about IPs can be helped along by leveraging existing records (if you set those up, obviously). |

|

The "device must have a rack location" requirement immediately struck me as... limiting. As an ISP, we have devices in places where they are mounted to the wall or in a cable doghouse, etc. I was happy to find the workaround of adding a non-rack device. At that point I decided I could treat "Rack" as a code word for IDF, and it fits in 99% of the cases. The only place it falls apart for me is the aforementioned virtual machine case, where we might spread out hypervisors in a cluster to different places in a datacenter (for power, fiber, environmental redundancy) and then we would need to say this virtual machine is in ... whichever rack. I suppose there are even times when someone might spread out hypervisors to different datacenters, which means you might need to assign a device to a region rather than a site. The reality is that devices are already tied to a site (which could be considered a location). It would be nice if Rack were considered an optional value so we could say no rack. Alternatively, if we could generalize locations but make them nested, you could have "3rd floor IDF" or "Datacenter 2nd Floor" as a location, then as a sublocation you could say "Cage Z5", then under that you put "Rack 3". I guess it all comes down to how people want to shoehorn their data into the places that are provided. You could name your site "Telx Datacenter - 3rd Floor" then set up racks under it as "Cage Z5 - Rack 2", with another site being "Telx Datacenter - 2nd Floor". I don't like this workaround, but I don't know what a good solution would be. |

|

Noting here that both #180 (comment) & #198 mention that a device & rack will always be considered physical. With that in mind, does anyone have ideas for how to handle static IP assignments of VMs in netbox beyond just assigning a description to an IP address? |

|

@Gelob, you can add a 'Virtual' form factor interface to the physical host, although the limitation here is that the VM is always expected to be on that same physical host. |

|

As an environment with mixed cloud and datacenter infrastructure, I'd love to see the ability to define certain devices as VM hosts or guests and link them together for ease of navigation and the ability to quickly see the hosts that hold each guest. Also, I'm not sure if this should be a separate request or not, but I would love to see the ability to supply an AWS credential and poll/query all of the AWS infrastructure for always up-to-date IPAM, device management/documentation, etc. |

|

Could you extend this by creating a non-racked device that has container

Chris Jenner |

Yes, I guess that should work as well. |

|

+1 |

|

This would also be immensely useful for virtualized storage systems like NetApp, for example. My suggestion would be something like this: allow creating something like a "Cluster", which is just a container for multiple devices. Then you can create "virtual" devices (devices of a special type or with a "virtual" checkbox ticked) that are assigned not to a rack but to a "Cluster". The "Cluster" could have other properties as well; for example, it could be (logically) linked to another Cluster as a "failover partner". If these virtual devices are considered devices as well, this could even be done recursively, for example to add KVM machines or Docker containers that are themselves running in a virtual Linux VM. |
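A rough sketch of that suggestion, assuming hypothetical `Cluster` and `VirtualDevice` classes (illustrative only, not NetBox's models): a cluster is just a named container with an optional failover partner, and a virtual device sits in a cluster or is nested inside another virtual device, so placement can be resolved recursively.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Cluster:
    """A logical container for member devices (hypothetical model)."""
    name: str
    members: list[str] = field(default_factory=list)  # names of member hosts
    # Excluded from repr/eq so that two mutually linked partners don't recurse forever.
    failover_partner: Optional[Cluster] = field(default=None, repr=False, compare=False)


@dataclass
class VirtualDevice:
    """A virtual device placed in a cluster, or nested inside another virtual device."""
    name: str
    cluster: Optional[Cluster] = None
    parent: Optional[VirtualDevice] = field(default=None, repr=False, compare=False)
    children: list[VirtualDevice] = field(default_factory=list)

    def add_child(self, child: VirtualDevice) -> VirtualDevice:
        # Recursive nesting, e.g. Docker containers running inside a Linux VM.
        child.parent = self
        self.children.append(child)
        return child

    def placement(self) -> str:
        """Walk up the parent chain to the cluster the workload ultimately runs in."""
        node = self
        path = [node.name]
        while node.parent is not None:
            node = node.parent
            path.append(node.name)
        root = node.cluster.name if node.cluster else "unassigned"
        return " / ".join([root] + list(reversed(path)))
```

For example, a container added with `linux_vm.add_child(VirtualDevice("docker-1"))`, where `linux_vm` sits in a cluster named `netapp-a`, reports its placement as `netapp-a / linux-vm / docker-1`.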

|

This is the only thing stopping us from completing our migration from Racktables. This is one thing I liked about how Racktables worked. You could flag a server object as a hypervisor. Then you could nest VMs under servers with that flag. You could also create Virtual Cluster objects, assign servers to the cluster and then nest the VMs under the cluster. |

|

I was also looking at this as a DCIM solution. The majority of our servers are virtual machines, both in Amazon and in an internal cloud that we don't control. I would like to use netbox, but the lack of support for virtual machines is frustrating. I see the solutions listed here for adding them as "device bays" for the servers they are hosted on, but as this stuff is all in "clouds", there are no physical servers we could track to do so... |

|

@Gorian The way I have been doing it with my VMs for the moment is to use the site "Virginia AWS" and the device type "AWS VM". I also add them to a rack group so they are easier to find, but I do not rack them. |

|

I'd like to reiterate that the DCIM component of NetBox relates strictly to physical infrastructure only. It has absolutely no support for virtual machines, and any attempt to shoehorn the management of VMs into it will almost certainly end in sadness and drinking. The only overlap with VMs present in the current feature set is on the IPAM side; obviously, people want the ability to track VM IP assignments as well. It wouldn't make sense to try and extend the current DCIM component to accommodate virtual machines: VMs don't have rack positions, interface form factors, console, power, etc. The ideal approach would almost certainly be to create a new subapplication alongside the DCIM, IPAM, and other components to track virtual machines. But before we can do this, we need to decide what the data model will look like. Defining a model to represent a VM should be fairly straightforward, as is associating it with a physical hypervisor device. We can also create a model to represent virtual interfaces which can only be associated with virtual machines. The gap left to close, then, is the assignment of IP addresses to these virtual interfaces. Currently, the |

|

@jeremystretch What do you think about the fact that virtual machines can migrate between hosts (live or not)? Perhaps the data model could record which physical servers can host a given VM, with optional information about its current placement. That placement might or might not be accurate at any given moment, but the additional information could point to the other machines where the VM might be. |
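One way to express that idea, sketched under the assumption of a hypothetical `VirtualMachine` record (not an actual NetBox model): the VM stores the set of hosts it may run on plus a best-effort current host, and anything searching for it checks the last known placement first, then the other eligible hosts.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class VirtualMachine:
    """Hypothetical VM record: eligible hosts plus a best-effort current placement."""
    name: str
    eligible_hosts: set[str] = field(default_factory=set)
    current_host: Optional[str] = None  # may be stale after a live migration

    def record_placement(self, host: str) -> None:
        # Only accept placements on hosts the VM is allowed to run on.
        if host not in self.eligible_hosts:
            raise ValueError(f"{host!r} is not an eligible host for {self.name!r}")
        self.current_host = host

    def possible_locations(self) -> list[str]:
        """Hosts to check, starting with the last known placement."""
        others = sorted(self.eligible_hosts - {self.current_host})
        return ([self.current_host] if self.current_host else []) + others
```

This keeps the model out of hypervisor orchestration: nothing here moves a VM, it only records where it is allowed to be and where it was last seen.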

|

@drybjed I want to avoid getting into hypervisor orchestration. I think it would make sense to support some concept of a hypervisor cluster, but that's probably as far as we'll go. I don't have much experience with traditional enterprise VM management though, so I'm open to suggestions. |

|

I guess I don't understand why you need to tie the VMs to a host. Why can't you just support the concept of free-floating VMs in some cloud? At work we have tons of internal clouds, and people use VPSes or EC2 and may know nothing about the physical hardware, or be unable to track the host it's running on. Just let us say "this is a VM, this is its IP", etc. and call it a day. It's still super useful for cases where we have 200+ virtual machines and no physical hardware to track; we still want something better than Excel sheets to track and manage the servers we do have. |

|

I think we have two suitable options.

Invert IPAddress assignment

Currently, the [...] I'm not a huge fan of this approach, as it requires some fairly disruptive data migrations, and imposes additional database hits when saving objects (each new [...]

Convert

|

@Gorian I'd guess that most people who track IP assignments to VMs also want to track where those VMs are physically located. Assignment of a VM to a physical hypervisor can probably be optional, though it would be difficult to organize them otherwise. |
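Combining the data model outlined earlier in the thread (virtual interfaces that can only belong to VMs, with IP addresses assigned to those interfaces) with an optional hypervisor gives something like the sketch below. All class and function names are hypothetical illustrations, not NetBox's actual models; only Python's standard `ipaddress` module is used. A VM with `hypervisor=None` covers the free-floating cloud case.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from ipaddress import IPv4Interface, IPv6Interface, ip_interface
from typing import Optional, Union

Addr = Union[IPv4Interface, IPv6Interface]


@dataclass
class VirtualMachine:
    """Hypothetical VM model; the hypervisor is optional for cloud-hosted VMs."""
    name: str
    hypervisor: Optional[str] = None
    interfaces: list[VirtualInterface] = field(default_factory=list)


@dataclass
class VirtualInterface:
    """An interface that can only be attached to a VirtualMachine."""
    name: str
    vm: VirtualMachine = field(repr=False, compare=False)  # back-reference, not compared
    addresses: list[Addr] = field(default_factory=list)


def add_interface(vm: VirtualMachine, name: str, *addrs: str) -> VirtualInterface:
    """Attach a new interface with the given CIDR addresses to `vm`."""
    iface = VirtualInterface(name, vm, [ip_interface(a) for a in addrs])
    vm.interfaces.append(iface)
    return iface


def find_vm_by_ip(vms: list[VirtualMachine], address: str) -> Optional[VirtualMachine]:
    """Return the VM owning `address` (prefix length ignored), or None."""
    target = ip_interface(address).ip
    for vm in vms:
        for iface in vm.interfaces:
            if any(a.ip == target for a in iface.addresses):
                return vm
    return None
```

Keeping the hypervisor as a plain optional attribute means organizing VMs by host remains possible without forcing it on VMs whose hardware is unknown.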

|

For anyone still following along, I've gotten a mostly-complete implementation of this in the virtualization branch, though it's still very much a work in progress. I'm hoping to have the first v2.2 beta out sometime in early September. |

|

That is great news. When the beta drops, I'll be ready to test. I've been waiting for this since the very beginning and it's the only thing holding us back from fully implementing Netbox! 👍🏻 |

|

@Chris-ZA If you feel like it, you can spin up an instance of the |

|

@jeremystretch I see a problem when trying to add devices (the virtualization hosts) to a cluster: I need to select a region, but I have no regions defined (it's all on-site so far). I have a few sites that are not assigned to any region, but they cannot be selected in the drop-down list. I'd hate to have to create a "default" region and assign all my sites (and racks) to it just to be able to select the correct devices here. |

|

@jeremystretch Also, the "Show IPs" button on a Virtual Machine is broken. It redirects to an empty page. No error messages, just an empty page. |

|

@jeremystretch Also, when assigning IP addresses to VMs, the "create and add another" button redirects to a "generic" IP creation form that is no longer connected to the VM. I.e. it doesn't have the "Interface Assignment" form where you can select which interface to bind the IP to. And since there seems to be no (?) easy (?) way to assign an IP address to a VM later (you cannot change the "parent", at least I have not found out how), this is probably not how it should work. Note that this is also how it works on "regular" devices, so it's probably intended. It still feels a bit strange though... |

|

@jeremystretch Can we also get the possibility of adding secrets to a VM, like it works with devices? Would be helpful for the login credentials... |

|

@darkstar Thanks, I'll look into those bugs. But in the future, please try to avoid commenting many times in succession as it generates a lot of noise for people who have subscribed to an issue. You can edit your previous comments on GitHub to add more content.

I'm on the fence about this, and leaning toward "no." I want to maintain NetBox's focus on infrastructure and I feel like extending secrets to VMs crosses too far into systems administration territory. |

Okay, I can see how this is an edge case. I'm fine with that. But maybe at least the cluster can have a secret, since it kind of belongs to the "infrastructure" side of things. Meanwhile, the other two problems I reported were fixed in your last two commits and work fine. Thanks for that quick (and apparently easy ;-) fix. Really looking forward to seeing these features in netbox, as it's currently the only remaining issue that keeps me from ditching our Excel list and using netbox exclusively :) |

|

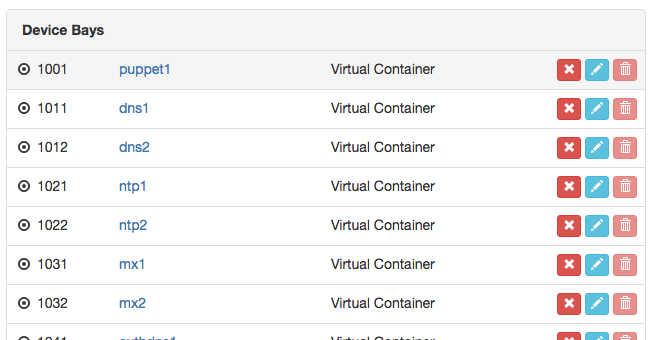

I haven't followed this discussion for a while, nor had the time to test the branch, but I have a quick question; will it be possible to move/migrate/change existing devices into these "VM" devices after upgrading? (All our VMs are implemented as devices attached to "Device Bays"). |

|

Probably not. At least I see no possible way right now. You might have success with exporting and re-importing them, perhaps after slightly changing the CSV file. |

|

From my comment almost a year ago:

That said, it should be feasible to migrate devices in bulk via the command shell. Essentially, you'll need to:

(We're reusing the same interface object for VMs so IP assignments will all stay intact through the migration.) Something like this should work, though obviously it needs to be fleshed out and tested: |

|

Thank you for this. I'd like to see VirtualMachine have "role" (which I'd be happy to share tags with DeviceRole); and less importantly "status" - e.g. to plan a VM and reserve an IP for it, or track VMs which are intentionally shut down.

Well, I'd argue that is what secrets are doing for Devices today. If you're using secrets to store the root password or the SNMP community (for a server anyway), then you're using this to administer the OS which is running on the device, as opposed to the device itself. If secrets are only being used for IPMI logins, then I'll accept that's infrastructure not system administration :-) And as for network devices, remember that you can get virtual routers which run as VMs. Infrastructure is both hard and soft these days... |

|

FYI I've opened the |

|

Great work!! Will it support custom fields like normal devices? I think this is a must to be able to add custom data. How could a VM cluster work in terms of IP addresses without creating duplicates? Is it even currently possible? Is it possible to assign an IP address to the cluster of hosts as well? |

|

@RyanBreaker This should be addressed in 136d16b |

|

Since the first v2.2 beta has been released, I'm going to close out this issue. 🎉 For any further bugs or related feature requests, please open a separate issue using the normal template. I will label issues with the |

Any plans to support virtual devices? e.g. a VM or virtualized network appliance which may have multiple interfaces and be parented to a single 'Device' or a 'Platform' of devices?
