Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

Minor optimizations to the codegen of TaskFnInputFunction (#8304)

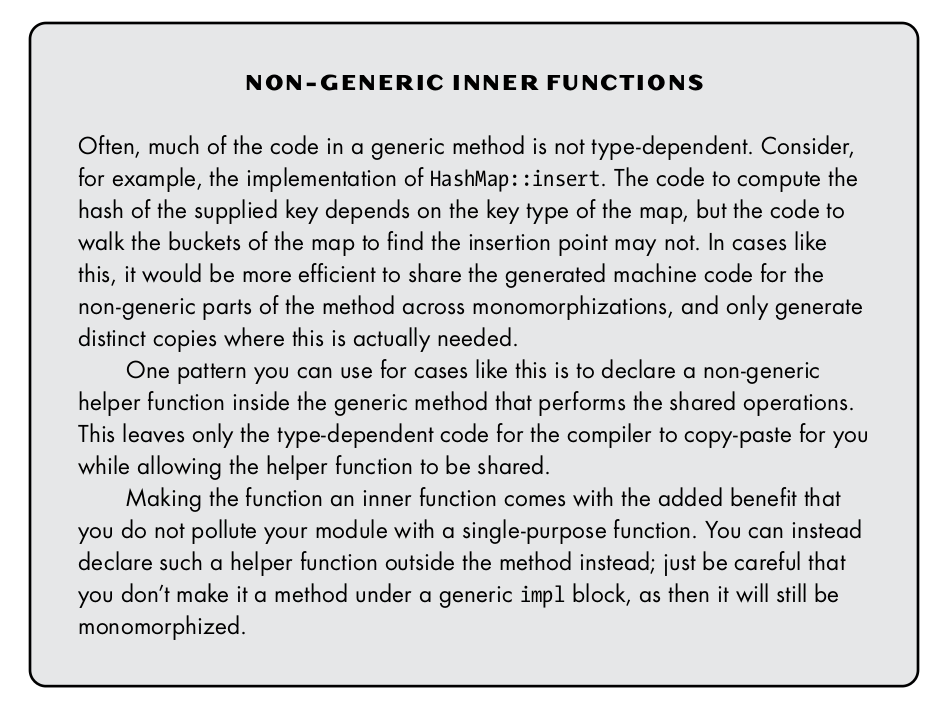

## What? I noticed a number of small optimizations that could be applied to these hot and heavily-monomorphized functions: - Using `with_context()` instead of `.context()`, to avoid evaluating the error message in the common case that it's unused. I also tried `concat!()` since this can be a static string, but the resulting binary is slightly larger, and we don't need to optimize for the unlikely error case. - Extracted the parts of the monomorphized functions that didn't require the type parameters into separate non-generic functions. While the goal here is mostly to reduce binary size and compilation time, this optimization on it's own seems to help with the runtime benchmarks too (though I didn't test it rigorously in isolation). Here's a section from [Rust for Rustaceans](https://rust-for-rustaceans.com/) explaining this "non-generic function" trick:  ## Binary Size? Slightly negative, at least for stripped debug builds: ``` time pnpm pack-next ``` ``` -rw-r--r-- 1 bgw bgw 167895040 Jun 4 17:20 next-swc.after.tar -rw-r--r-- 1 bgw bgw 168622080 Jun 4 15:37 next-swc.before.tar ``` ## Runtime Performance? Using https://github.com/bgw/benchmark-scripts/ ### Microbenchmark (`turbo_tasks_memory_stress/fibonacci/200`) ``` $ TURBOPACK_BENCH_STRESS=yes cargo bench -p turbo-tasks-memory -- fibonacci/200 Compiling turbo-tasks v0.1.0 (/home/bgw/turbo/crates/turbo-tasks) Compiling turbo-tasks-memory v0.1.0 (/home/bgw/turbo/crates/turbo-tasks-memory) Compiling turbo-tasks-testing v0.1.0 (/home/bgw/turbo/crates/turbo-tasks-testing) Finished `bench` profile [optimized] target(s) in 10.79s Running benches/mod.rs (target/release/deps/mod-8c0f970371f8713d) turbo_tasks_memory_stress/fibonacci/200 time: [64.420 ms 64.683 ms 64.941 ms] thrpt: [309.53 Kelem/s 310.76 Kelem/s 312.03 Kelem/s] change: time: [-2.2828% -1.7587% -1.2206%] (p = 0.00 < 0.05) thrpt: [+1.2357% +1.7902% +2.3361%] Performance has improved. Found 1 outliers among 20 measurements (5.00%) 1 (5.00%) low mild ``` ### "Realistic" Benchmark (`bench_startup/Turbopack CSR/1000 modules`) The difference is small. I patched the benchmark to increase the number of iterations so I could get something statistically significant. ``` diff --git a/crates/turbopack-bench/src/lib.rs b/crates/turbopack-bench/src/lib.rs index 4e3df12db0..d950d76071 100644 --- a/crates/turbopack-bench/src/lib.rs +++ b/crates/turbopack-bench/src/lib.rs @@ -35,8 +35,8 @@ pub mod util; pub fn bench_startup(c: &mut Criterion, bundlers: &[Box<dyn Bundler>]) { let mut g = c.benchmark_group("bench_startup"); - g.sample_size(10); - g.measurement_time(Duration::from_secs(60)); + g.sample_size(100); + g.measurement_time(Duration::from_secs(600)); bench_startup_internal(g, false, bundlers); } ``` ``` cargo bench -p turbopack-cli -- bench_startup ``` ``` Finished `bench` profile [optimized] target(s) in 1.30s Running benches/mod.rs (target/release/deps/mod-2681e324dfd90da1) bench_startup/Turbopack CSR/1000 modules time: [2.2684 s 2.2717 s 2.2750 s] change: [-1.9365% -1.7387% -1.5602%] (p = 0.00 < 0.05) Performance has improved. Found 3 outliers among 100 measurements (3.00%) 1 (1.00%) low mild 2 (2.00%) high mild ``` ## Build Speed? Not enough of a difference to measure. ``` rm -rf target/ && time cargo build -p turbopack-cli ``` Before: ``` real 10m42.174s ``` After: ``` real 10m40.735s ```

- Loading branch information