
Minor optimizations to the codegen of TaskFnInputFunction #8304

Merged


…ic helper function

…haring across monomorphized instances


🟢 Turbopack Benchmark CI successful 🟢

✅ This change can build

.context() calls in TaskFnInputFunction impls

kdy1 approved these changes on Jun 5, 2024

kdy1 added a commit to vercel/next.js that referenced this pull request on Jun 5, 2024

# Turbopack

* vercel/turborepo#8272 <!-- Donny/강동윤 - feat: Update `swc_core` to `v0.92.8` -->
* vercel/turborepo#8262 <!-- Alexander Lyon - add crate to calculate prehashes -->
* vercel/turborepo#8174 <!-- Tobias Koppers - use prehash to avoid rehashing the key in the task cache -->
* vercel/turborepo#7674 <!-- Alexander Lyon - [turbo trace] add ability to filter by value and occurences -->
* vercel/turborepo#8287 <!-- Donny/강동윤 - feat: Update `swc_core` to `v0.92.10` -->
* vercel/turborepo#8037 <!-- Alexander Lyon - create turbo-static for compile time graph analysis -->
* vercel/turborepo#8293 <!-- Will Binns-Smith - Sync Cargo.lock with Next.js -->
* vercel/turborepo#8239 <!-- Benjamin Woodruff - Reduce amount of code generated by ValueDebugFormat -->
* vercel/turborepo#8304 <!-- Benjamin Woodruff - Minor optimizations to the codegen of TaskFnInputFunction -->
* vercel/turborepo#8221 <!-- Donny/강동윤 - perf: Introduce `RcStr` -->

### What?

I tried using `Arc<String>` in vercel/turborepo#7772, but a team member suggested creating a new type so we can replace the underlying implementation easily in the future.

### Why?

To reduce memory usage.

### How?

Closes PACK-2776

ForsakenHarmony pushed a commit to vercel/next.js that referenced this pull request on Jul 25, 2024

…borepo#8304)

## What?

I noticed a number of small optimizations that could be applied to these hot and heavily-monomorphized functions:

- Using `with_context()` instead of `.context()`, to avoid evaluating the error message in the common case that it's unused. I also tried `concat!()` since this can be a static string, but the resulting binary is slightly larger, and we don't need to optimize for the unlikely error case.
- Extracted the parts of the monomorphized functions that didn't require the type parameters into separate non-generic functions.

While the goal here is mostly to reduce binary size and compilation time, this optimization on its own seems to help with the runtime benchmarks too (though I didn't test it rigorously in isolation).

Here's a section from [Rust for Rustaceans](https://rust-for-rustaceans.com/) explaining this "non-generic function" trick:

## Binary Size?

Slightly negative, at least for stripped debug builds:

```
time pnpm pack-next
```

```
-rw-r--r-- 1 bgw bgw 167895040 Jun 4 17:20 next-swc.after.tar
-rw-r--r-- 1 bgw bgw 168622080 Jun 4 15:37 next-swc.before.tar
```

## Runtime Performance?

Using https://github.com/bgw/benchmark-scripts/

### Microbenchmark (`turbo_tasks_memory_stress/fibonacci/200`)

```
$ TURBOPACK_BENCH_STRESS=yes cargo bench -p turbo-tasks-memory -- fibonacci/200
Compiling turbo-tasks v0.1.0 (/home/bgw/turbo/crates/turbo-tasks)
Compiling turbo-tasks-memory v0.1.0 (/home/bgw/turbo/crates/turbo-tasks-memory)
Compiling turbo-tasks-testing v0.1.0 (/home/bgw/turbo/crates/turbo-tasks-testing)
Finished `bench` profile [optimized] target(s) in 10.79s
Running benches/mod.rs (target/release/deps/mod-8c0f970371f8713d)
turbo_tasks_memory_stress/fibonacci/200
    time:   [64.420 ms 64.683 ms 64.941 ms]
    thrpt:  [309.53 Kelem/s 310.76 Kelem/s 312.03 Kelem/s]
    change:
    time:   [-2.2828% -1.7587% -1.2206%] (p = 0.00 < 0.05)
    thrpt:  [+1.2357% +1.7902% +2.3361%]
    Performance has improved.
Found 1 outliers among 20 measurements (5.00%)
  1 (5.00%) low mild
```

### "Realistic" Benchmark (`bench_startup/Turbopack CSR/1000 modules`)

The difference is small. I patched the benchmark to increase the number of iterations so I could get something statistically significant.

```
diff --git a/crates/turbopack-bench/src/lib.rs b/crates/turbopack-bench/src/lib.rs
index 4e3df12db0..d950d76071 100644
--- a/crates/turbopack-bench/src/lib.rs
+++ b/crates/turbopack-bench/src/lib.rs
@@ -35,8 +35,8 @@ pub mod util;
 pub fn bench_startup(c: &mut Criterion, bundlers: &[Box<dyn Bundler>]) {
     let mut g = c.benchmark_group("bench_startup");
-    g.sample_size(10);
-    g.measurement_time(Duration::from_secs(60));
+    g.sample_size(100);
+    g.measurement_time(Duration::from_secs(600));
     bench_startup_internal(g, false, bundlers);
 }
```

```
cargo bench -p turbopack-cli -- bench_startup
```

```
Finished `bench` profile [optimized] target(s) in 1.30s
Running benches/mod.rs (target/release/deps/mod-2681e324dfd90da1)
bench_startup/Turbopack CSR/1000 modules
    time:   [2.2684 s 2.2717 s 2.2750 s]
    change: [-1.9365% -1.7387% -1.5602%] (p = 0.00 < 0.05)
    Performance has improved.
Found 3 outliers among 100 measurements (3.00%)
  1 (1.00%) low mild
  2 (2.00%) high mild
```

## Build Speed?

Not enough of a difference to measure.

```
rm -rf target/ && time cargo build -p turbopack-cli
```

Before:

```
real 10m42.174s
```

After:

```
real 10m40.735s
```

ForsakenHarmony pushed a commit to vercel/next.js that referenced this pull request on Jul 29, 2024


ForsakenHarmony pushed a commit to vercel/next.js that referenced this pull request on Jul 29, 2024


ForsakenHarmony pushed a commit to vercel/next.js that referenced this pull request on Aug 1, 2024


ForsakenHarmony pushed a commit to vercel/next.js that referenced this pull request on Aug 14, 2024


ForsakenHarmony pushed a commit to vercel/next.js that referenced this pull request on Aug 15, 2024


ForsakenHarmony pushed a commit to vercel/next.js that referenced this pull request on Aug 16, 2024



What?

I noticed a number of small optimizations that could be applied to these hot and heavily-monomorphized functions:

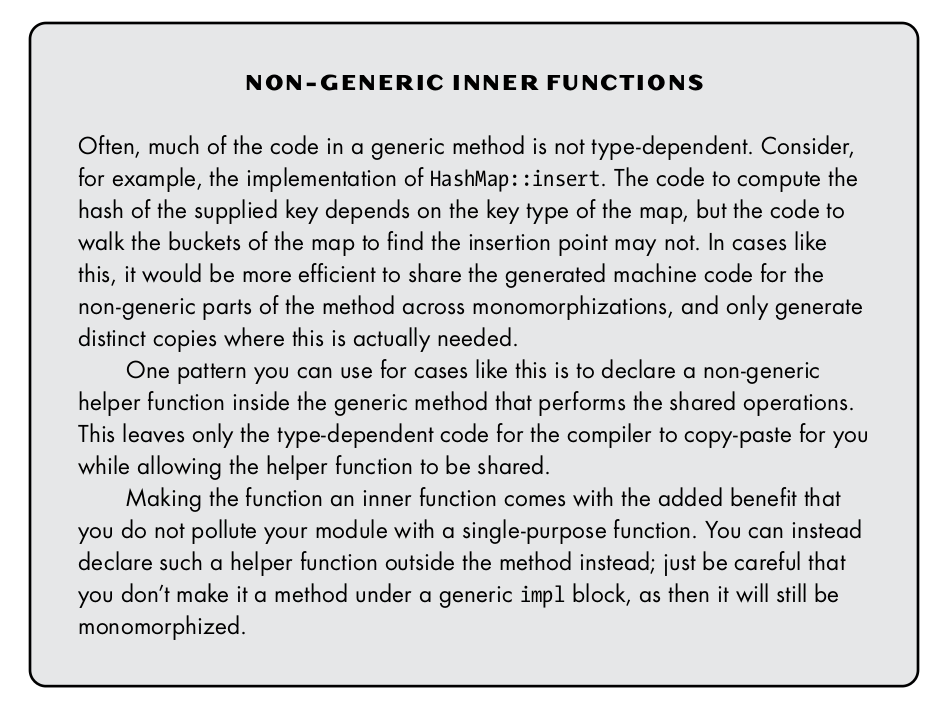

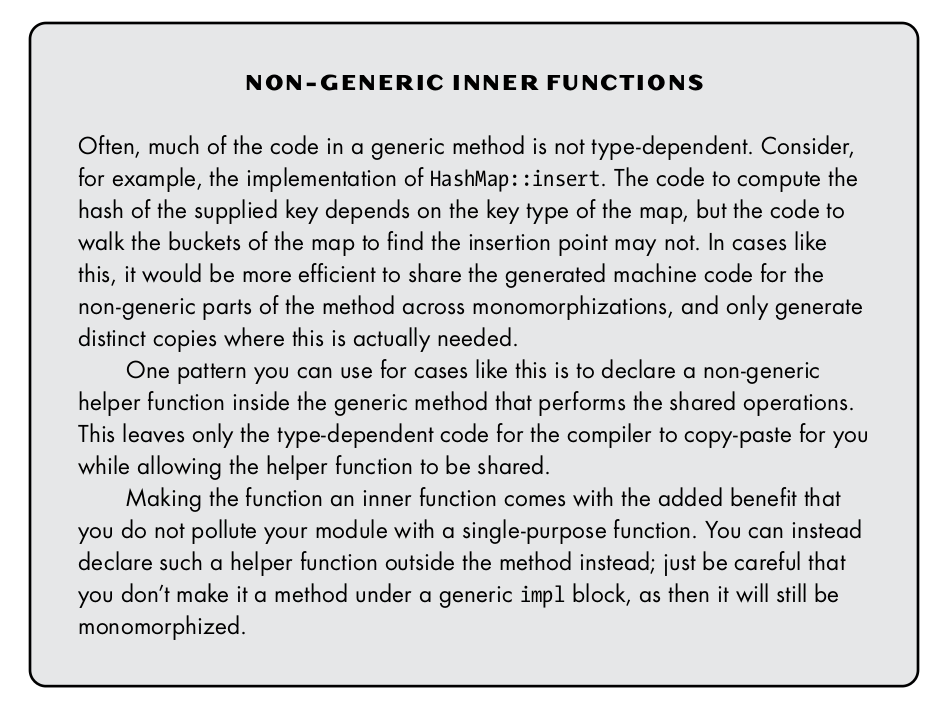

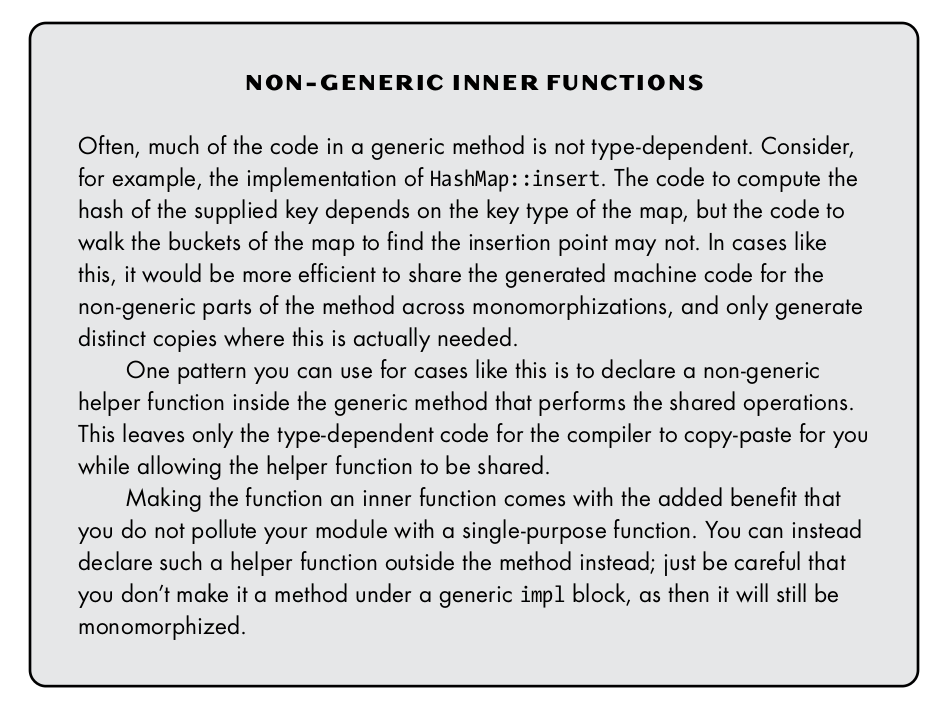

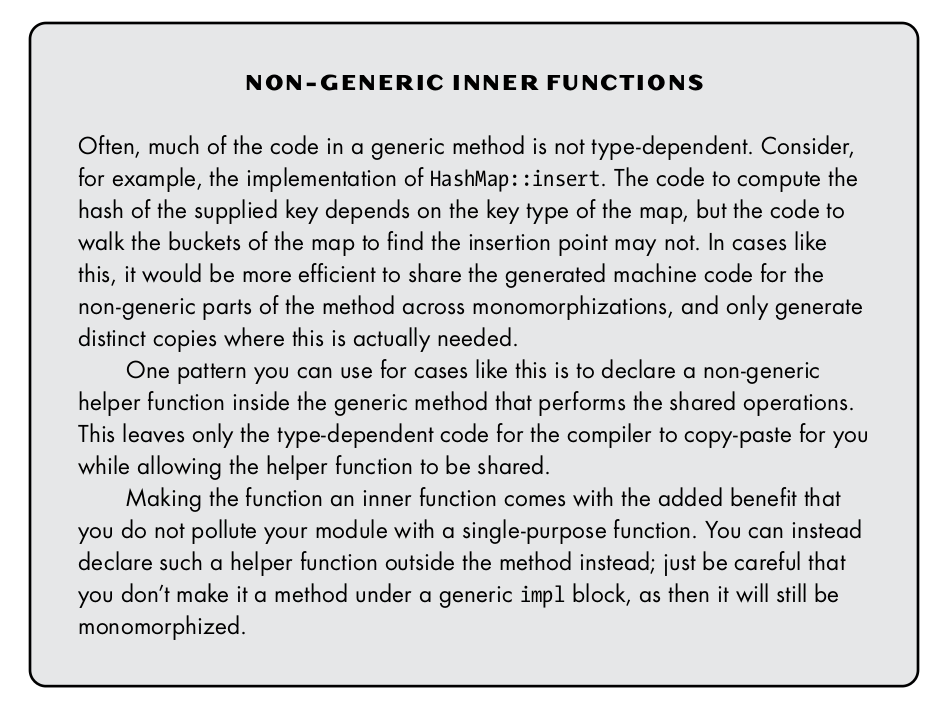

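- Using `with_context()` instead of `.context()`, to avoid evaluating the error message in the common case that it's unused. I also tried `concat!()` since this can be a static string, but the resulting binary is slightly larger, and we don't need to optimize for the unlikely error case.
- Extracted the parts of the monomorphized functions that didn't require the type parameters into separate non-generic functions.

While the goal here is mostly to reduce binary size and compilation time, this optimization on its own seems to help with the runtime benchmarks too (though I didn't test it rigorously in isolation). [Rust for Rustaceans](https://rust-for-rustaceans.com/) has a section explaining this "non-generic function" trick.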
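The first optimization relies on the difference between eager and lazy context. This is presumably anyhow's `Context` trait. The sketch below avoids external crates, so `ContextExt` is a hypothetical std-only stand-in with the same two method shapes. `describe`, `parse_eager` and `parse_lazy` are illustrative names, not turbo-tasks code. The counter records how often the error message is actually built.

```rust
use std::cell::Cell;
use std::fmt::Display;

/// Hypothetical std-only stand-in for anyhow's `Context` trait
/// (assumption: errors are flattened into `String`s for simplicity).
trait ContextExt<T> {
    /// Eager: `ctx` has already been built before this is called.
    fn context<C: Display>(self, ctx: C) -> Result<T, String>;
    /// Lazy: `f` runs only if `self` is an `Err`.
    fn with_context<C: Display, F: FnOnce() -> C>(self, f: F) -> Result<T, String>;
}

impl<T, E: Display> ContextExt<T> for Result<T, E> {
    fn context<C: Display>(self, ctx: C) -> Result<T, String> {
        self.map_err(|e| format!("{ctx}: {e}"))
    }

    fn with_context<C: Display, F: FnOnce() -> C>(self, f: F) -> Result<T, String> {
        self.map_err(|e| format!("{}: {e}", f()))
    }
}

/// Builds an error message and counts how many times it was built.
fn describe(built: &Cell<u32>, task: &str) -> String {
    built.set(built.get() + 1);
    format!("error converting argument for task {task}")
}

/// `.context(...)`: the message is formatted even when parsing succeeds.
fn parse_eager(raw: &str, built: &Cell<u32>) -> Result<u32, String> {
    raw.parse::<u32>().context(describe(built, "fib"))
}

/// `.with_context(|| ...)`: the message is formatted only on failure.
fn parse_lazy(raw: &str, built: &Cell<u32>) -> Result<u32, String> {
    raw.parse::<u32>().with_context(|| describe(built, "fib"))
}
```

On the success path, `parse_eager("42", ..)` formats the message once and then discards it. `parse_lazy("42", ..)` never formats it. The error text is identical in both cases when parsing fails.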
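The second optimization is the "non-generic function" trick. Keep the generic function as a thin shim and move the work that doesn't depend on its type parameters into ordinary functions. That work is then compiled once instead of once per instantiation. The sketch assumes a simplified, hypothetical task-call shape: `Box<dyn Any>` arguments and a single parameter. `check_arity`, `wrong_type` and `call_with_arg` are illustrative names, not the actual `TaskFnInputFunction` implementation. The standard library applies the same idea in functions such as `std::fs::read`, which forwards to a non-generic inner function.

```rust
use std::any::{type_name, Any};

/// Non-generic helper: compiled once and shared by every instantiation of
/// `call_with_arg`, because nothing here depends on the type parameters.
fn check_arity(task_name: &str, args: &[Box<dyn Any>], expected: usize) -> Result<(), String> {
    if args.len() != expected {
        return Err(format!(
            "task {task_name} expected {expected} argument(s), got {}",
            args.len()
        ));
    }
    Ok(())
}

/// Also non-generic: the caller passes the type name in as a plain `&str`.
#[cold]
fn wrong_type(task_name: &str, expected_type: &str) -> String {
    format!("task {task_name}: argument is not a {expected_type}")
}

/// Generic shim: one copy is generated per (A, R, closure type) combination,
/// so it only does the type-dependent parts (downcast, clone, call).
fn call_with_arg<A: Any + Clone, R>(
    task_name: &str,
    args: &[Box<dyn Any>],
    f: impl Fn(A) -> R,
) -> Result<R, String> {
    check_arity(task_name, args, 1)?;
    let arg = args[0]
        .downcast_ref::<A>()
        .ok_or_else(|| wrong_type(task_name, type_name::<A>()))?;
    Ok(f(arg.clone()))
}
```

`type_name::<A>()` has to stay in the generic shim because it needs `A`. It is only evaluated inside the `ok_or_else` closure, however, and the string formatting itself lives in the shared, non-generic `wrong_type`.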

Binary Size?

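Slightly negative, at least for stripped debug builds: the `next-swc` tarball produced by `pnpm pack-next` went from 168622080 bytes before the change to 167895040 bytes after it.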
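The sketch below puts a number on "slightly negative". It computes the change from the two tarball sizes quoted in the commit message: 168622080 bytes before and 167895040 bytes after. `size_change` is a hypothetical helper; the inputs are the quoted `ls -l` sizes and nothing is re-measured.

```rust
/// Hypothetical helper: change from `before` to `after`, returned as
/// (delta in bytes, delta as a percentage of `before`).
fn size_change(before: u64, after: u64) -> (i64, f64) {
    let delta = after as i64 - before as i64;
    let percent = delta as f64 / before as f64 * 100.0;
    (delta, percent)
}
```

`size_change(168_622_080, 167_895_040)` gives a delta of -727040 bytes, which is exactly 710 KiB. That is a reduction of roughly 0.43%.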

Runtime Performance?

Using https://github.com/bgw/benchmark-scripts/

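Microbenchmark (`turbo_tasks_memory_stress/fibonacci/200`)

Criterion reports a time of [64.420 ms 64.683 ms 64.941 ms] and a change of [-2.2828% -1.7587% -1.2206%] (p = 0.00 < 0.05): "Performance has improved."

"Realistic" Benchmark (`bench_startup/Turbopack CSR/1000 modules`)

The difference is small. I patched the benchmark to increase the number of iterations so I could get something statistically significant (`sample_size` 10 → 100, `measurement_time` 60 s → 600 s). Criterion reports a time of [2.2684 s 2.2717 s 2.2750 s] and a change of [-1.9365% -1.7387% -1.5602%] (p = 0.00 < 0.05).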

Build Speed?

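Not enough of a difference to measure (clean build timed with `rm -rf target/ && time cargo build -p turbopack-cli`).

Before: `real 10m42.174s`

After: `real 10m40.735s`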