
[Merged by Bors] - Fix color banding by dithering image before quantization #5264


|

Please note that this fixes #5262 while filling out the PR description :) |

|

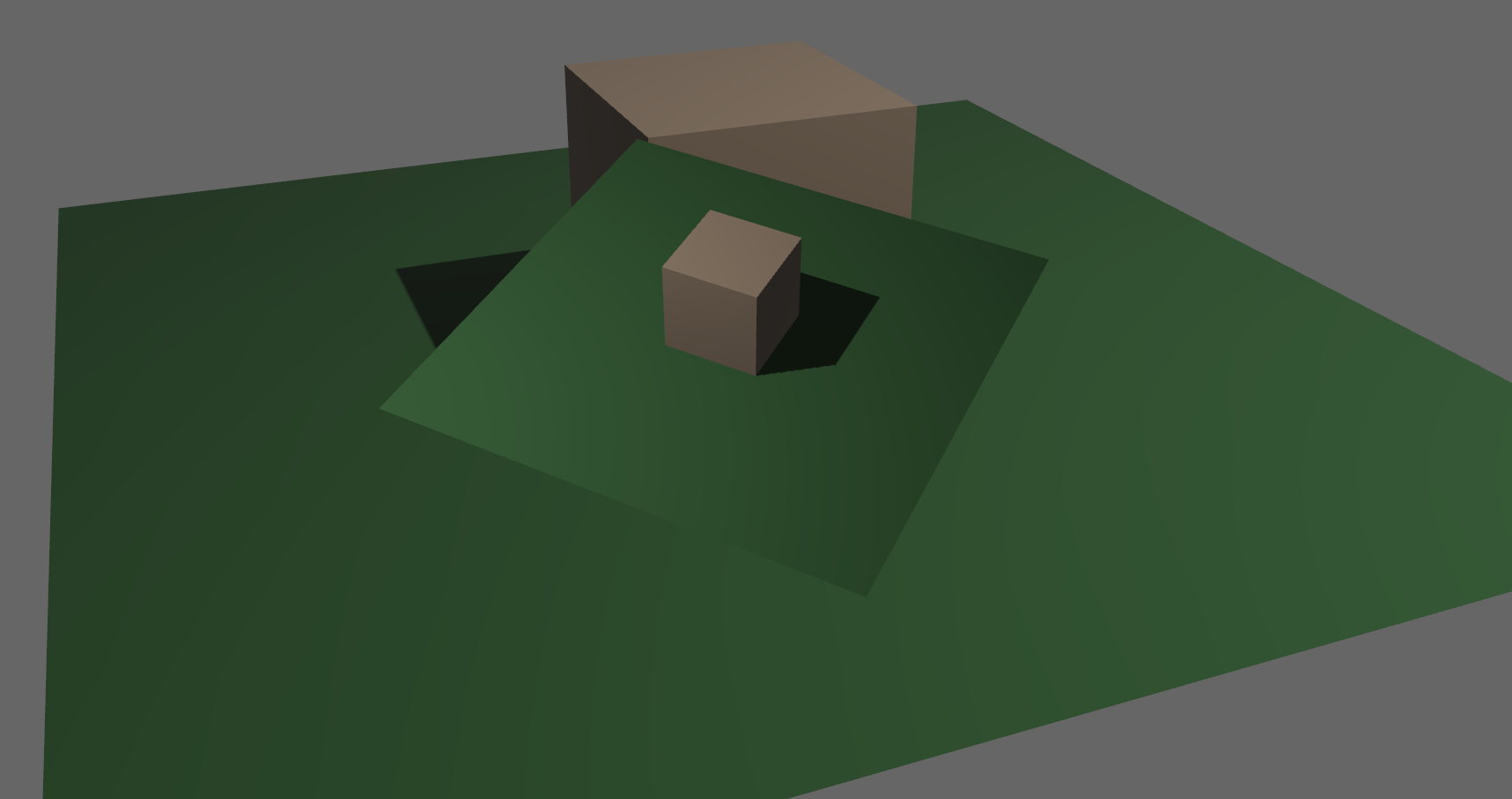

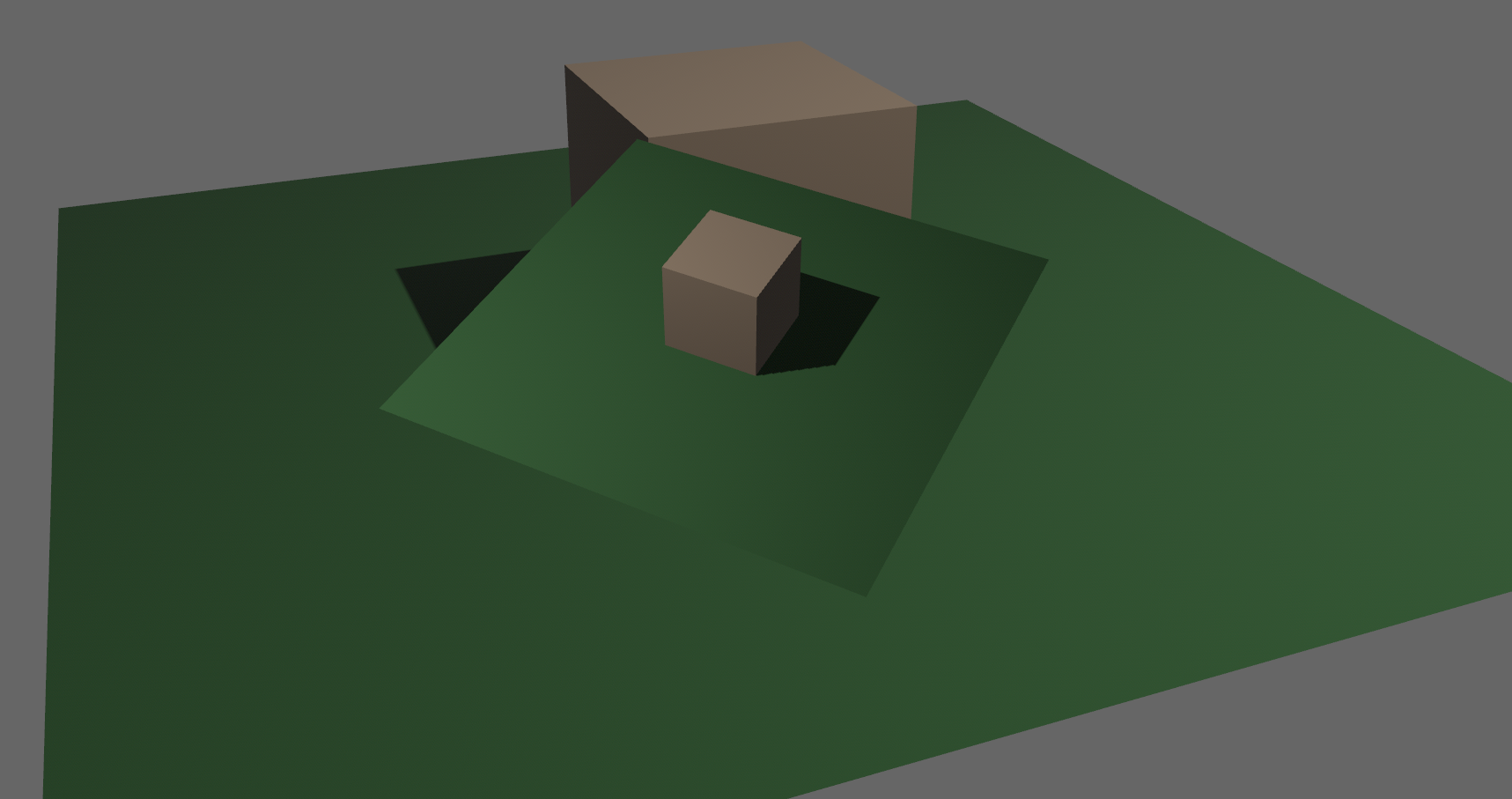

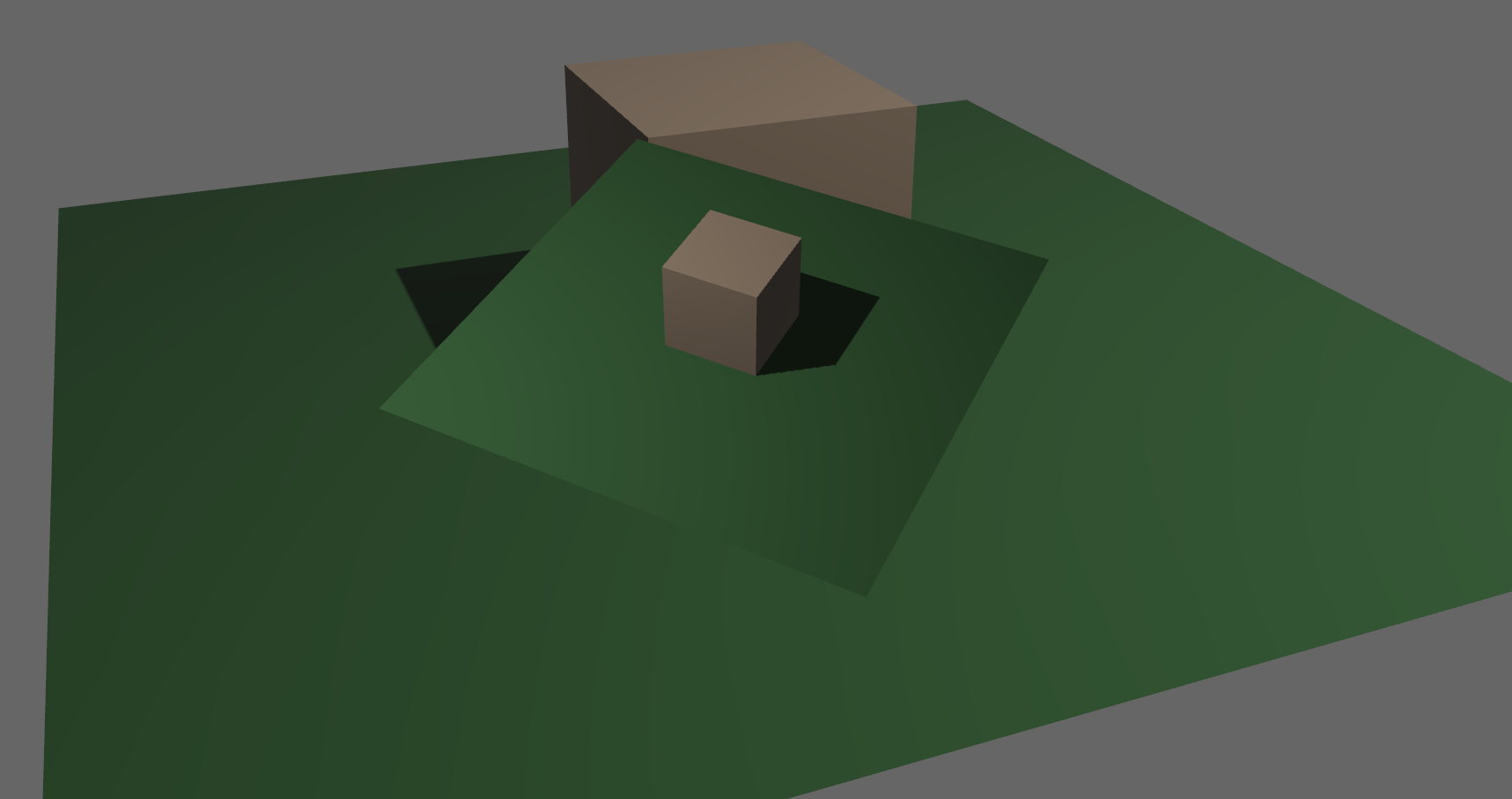

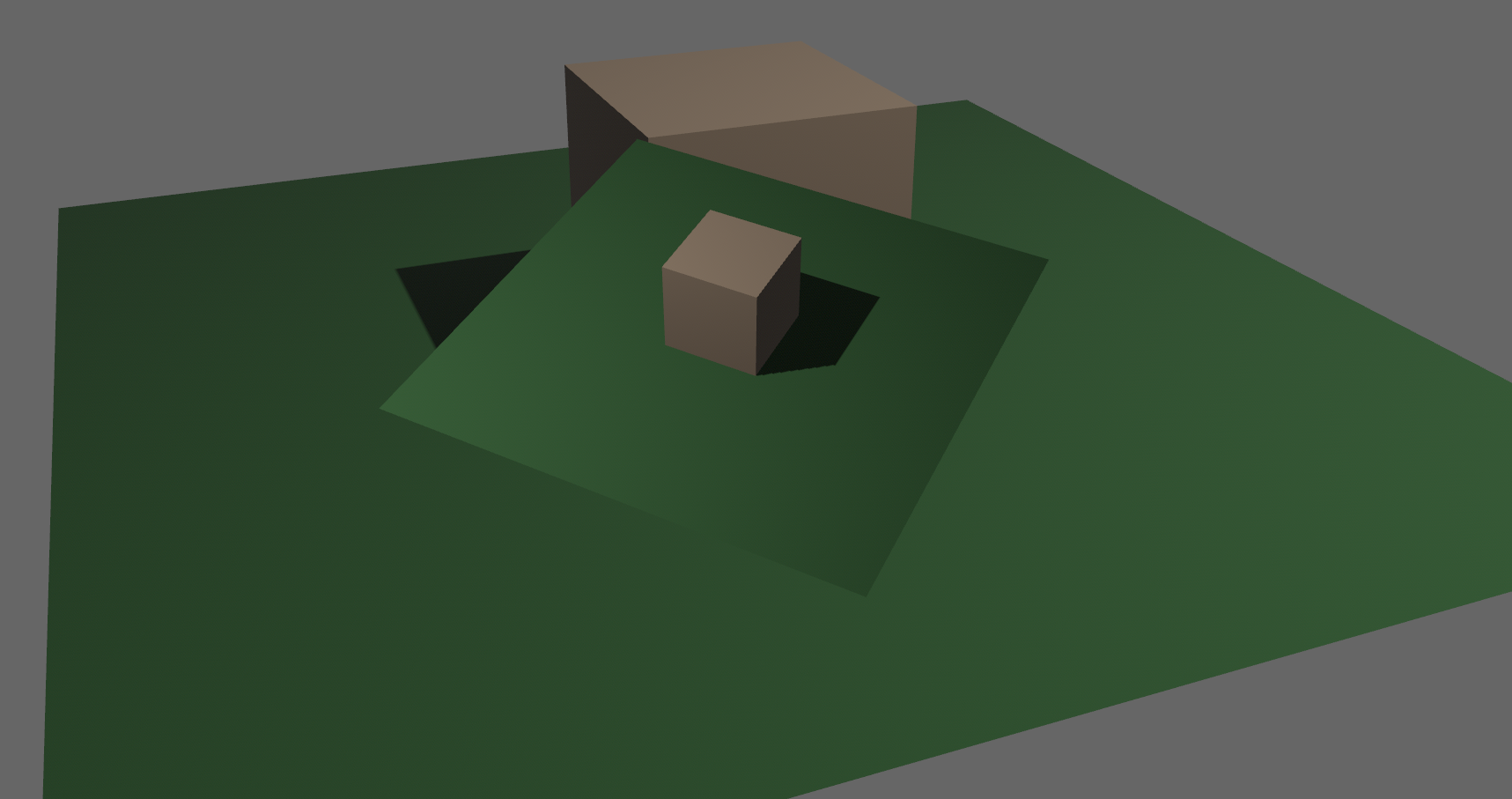

Taking a look at the before / after screen shots, I'm strongly in favor of this being enabled by default. The banding was very noticeable before, and the new dithering pattern is much cleaner. First impressions matter, and the behavior out-of-the-box should work well for most users, especially beginners. |

|

FYI I'm planning on making an example to better stress test noise and banding performance before removing draft status. |

Force-pushed from 8d3439c to daacd0c

|

@CptPotato I rebased on #3425, and added it to the tonemapping pass. This means dithering is now a fullscreen post-process and should work with images/ui/2d/etc that suffer from quantization banding. Could probably improve by moving to its own node that outputs to a @superdump could you provide some input on whether you see this as a feasible path forward? |

|

As a note to others viewing the images above. If you still see banding in the dithered image click on it to view it in another tab. |

The result looks good! Regarding dithering as a separate node: I think that might be a bit overkill for the actual "work" that would be done in the dither shader. As far as I can tell a separate node will require an additional intermediate render target

|

Yup, that's correct. Maybe that makes sense though? The goal of dithering is to remove artifacts from quantization, which seems to fit alongside tonemapping (mapping between color spaces).

The issue with this is device compatibility, from what @superdump mentioned on Discord.

Any resources on what that would be? |

|

Thanks for the clarification.

A more accurate sRGB to linear (and vice versa) transform is defined piecewise: it uses an exponent of

That being said, if you keep the conversion in both directions (linear -> sRGB -> linear) in the shader, the difference it makes to the dithering may be negligible. I can't say for sure, though. |
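
For reference, the piecewise transform mentioned in this comment can be sketched on the CPU as follows. The constants are the standard sRGB ones (a linear segment near black and an exponent of 2.4 elsewhere). The function names are hypothetical, and the gamma 2.2 power curve is only an assumed stand-in for whatever approximation the shader used at the time.

```rust
/// Exact piecewise sRGB encoding (linear -> sRGB), values in [0, 1].
fn linear_to_srgb(c: f32) -> f32 {
    if c <= 0.003_130_8 {
        // Linear segment near black.
        12.92 * c
    } else {
        1.055 * c.powf(1.0 / 2.4) - 0.055
    }
}

/// Exact piecewise sRGB decoding (sRGB -> linear), values in [0, 1].
fn srgb_to_linear(s: f32) -> f32 {
    if s <= 0.040_45 {
        s / 12.92
    } else {
        ((s + 0.055) / 1.055).powf(2.4)
    }
}

/// Assumed approximation for comparison: a plain power curve with gamma 2.2.
fn linear_to_srgb_approx(c: f32) -> f32 {
    c.powf(1.0 / 2.2)
}
```

The two curves differ most near black, where the exact transform is linear. A round trip through the exact pair returns the input up to floating point error, which is consistent with the point that converting in both directions inside the shader largely cancels out.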

|

Thanks for the links!

This is a good point. Dithering just relies on the rounding being correct, so that it never pushes a value more than one full step away from the true signal value during quantization. As long as the log approximation is "pretty close" (a rigorous technical term), it wouldn't cause quantization to round by another full step in the large majority of cases. |

This is only relevant if rendering directly into the swapchain texture. The formats supported by the swapchain are more limited, as they're specifically for outputting to a display. If rendering to an intermediate texture, format support is much broader. The tonemapping / upscaling PR does allow for using an independent intermediate texture rather than rendering directly to the swapchain texture, so in some cases at least we could do this. Basically, probably only the final swapchain texture needs to be an sRGB format, and we can use non-sRGB formats in between. We could even do gamma correction manually and not use an sRGB format at all, though I'm not sure of the exact swapchain texture format support across different backends / GPU vendors, so it may be that some or many only support sRGB formats. |

|

I asked Alex Vlachos about the license of the GDC 2015 code: https://twitter.com/AlexVlachos/status/1557662473775239175

I've clarified in follow-up tweets that 'no license' doesn't mean public domain and linked to the CC0 license pages for information about that. However, they state "Free to use any way you want" and the intent is clear from that at least. |

Force-pushed from daacd0c to b3d7830

|

I've reloaded this information into my mental cache and had a think through the current state of things. SDR:

'HDR' (it's still SDR at the end as we don't have HDR output to the window yet, but it allows us to do post-processing on linear HDR before tonemapping):

I think this should be correct... |

|

Nope, I forgot about upscaling in the SDR case:

|

|

This should be disable-able (probably on the existing Tonemapping settings, given that they are currently tied together). Disabling dither there should specialize the tonemapping pipeline to remove the dither code. |
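
A minimal sketch of what "specializing the pipeline to remove the dither code" could look like: a key derived from the settings decides which shader defines the pipeline is compiled with, and the shader would wrap its dither code in a matching conditional block. The type name, field name, and define string below are assumptions for illustration, not the PR's actual identifiers.

```rust
/// Hypothetical specialization key for the tonemapping pipeline.
#[derive(Clone, Copy, PartialEq, Eq, Hash, Debug)]
struct TonemappingPipelineKey {
    deband_dither: bool,
}

/// Shader defines the pipeline would be compiled with for a given key.
/// The define name is an assumption made for this sketch.
fn shader_defs(key: TonemappingPipelineKey) -> Vec<String> {
    let mut defs = Vec::new();
    if key.deband_dither {
        // With this define absent, the dither code is compiled out entirely.
        defs.push("DEBAND_DITHER".to_string());
    }
    defs
}
```

Because the key is hashable, each distinct combination of settings maps to one cached pipeline variant, so disabling dithering costs nothing at runtime in the shader itself.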

Force-pushed from de8f1ab to 6275ad3

|

I'm not super proficient with pipeline specialization, but I think I've completed this as requested. Dithering can be toggled, but requires tonemapping to be enabled. This is reflected in the new type signature of |

Force-pushed from 6275ad3 to 2ab94a2

        var dither = vec3<f32>(dot(vec2<f32>(171.0, 231.0), frag_coord)).xxx;
        dither = fract(dither.rgb / vec3<f32>(103.0, 71.0, 97.0));
        return (dither - 0.5) / 255.0;
    }
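
To make the range of this noise easier to reason about, here is a rough CPU port of the shader lines in the review snippet above. The function name and signature are hypothetical, since the snippet does not show them, and `frag_coord` is assumed to be the pixel position.

```rust
/// CPU port of the dither lines from the review snippet.
/// Name and signature are assumptions; only the arithmetic comes from the snippet.
fn screen_space_dither(frag_coord: [f32; 2]) -> [f32; 3] {
    // dot(vec2(171.0, 231.0), frag_coord), broadcast to all three channels.
    let d = 171.0 * frag_coord[0] + 231.0 * frag_coord[1];
    let divisors = [103.0_f32, 71.0, 97.0];
    let mut noise = [0.0_f32; 3];
    for i in 0..3 {
        let q = d / divisors[i];
        // Equivalent of the shader's `fract`: fractional part in [0, 1).
        let fract = q - q.floor();
        // Center around zero and scale to half of one 8-bit step.
        noise[i] = (fract - 0.5) / 255.0;
    }
    noise
}
```

Since `fract` returns a value in [0, 1), every channel of the result stays within half of one 8-bit step of zero, which is the property the PR description relies on. The different divisors give each channel its own pattern.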



|

just checking, as it's not what I understood from the discussion: should this dithering setting be ignored when HDR is enabled? |

Nope. Dithering should be coupled to tonemapping (at least for now, as they are logically coupled in the hdr tonemapping pass). If HDR is enabled, dithering should happen in the tonemapping pass. If HDR is disabled, it should happen in the PBR / sprite shader. This impl doesn't quite line up with that, as we aren't specializing the tonemapping pipeline when dither is enabled, which means it is skipped when hdr is enabled. |
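
The intended behavior described in this comment, combined with the earlier note that dithering requires tonemapping to be enabled, can be summarized as a small decision function. This is only a sketch of the rule as stated in the thread; the enum and function names are hypothetical.

```rust
/// Where deband dithering is applied for a given camera configuration.
#[derive(Debug, PartialEq, Eq, Clone, Copy)]
enum DitherStage {
    /// No dithering at all.
    Disabled,
    /// HDR enabled: dither in the fullscreen tonemapping pass.
    TonemappingPass,
    /// HDR disabled: dither in the PBR / sprite shader.
    MainPassShader,
}

/// Hypothetical helper encoding the rule from the discussion.
fn dither_stage(hdr: bool, tonemapping: bool, deband_dither: bool) -> DitherStage {
    if !tonemapping || !deband_dither {
        // Dithering is coupled to tonemapping and can be toggled off.
        DitherStage::Disabled
    } else if hdr {
        DitherStage::TonemappingPass
    } else {
        DitherStage::MainPassShader
    }
}
```

The bug discussed here corresponds to the `TonemappingPass` case: if the tonemapping pipeline is not specialized for dithering, enabling HDR silently skips it.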

|

Working on a fix now |

|

Fixed! I also made a personal style call by renaming |

Thanks. I agree with the rename, I had the same initially but chose to follow the preexisting |


I’m marking this as approved assuming it is all tested in its current state as I only had a couple of nit comments.


works as I expect it now 👍

|

bors r+ |

# Objective

- Closes #5262
- Fix color banding caused by quantization.

## Solution

- Adds dithering to the tonemapping node from #3425.
- This is inspired by Godot's default "debanding" shader: https://gist.github.com/belzecue/
- Unlike Godot:
  - Debanding happens after tonemapping. My understanding is that this is preferred, because we are running the debanding at the last moment before quantization (`[f32, f32, f32, f32]` -> `f32`). This ensures we aren't biasing the dithering strength by applying it in a different (linear) color space.
  - This code instead uses and references the original source, Valve at GDC 2015.

## Additional Notes

Real time rendering to standard dynamic range outputs is limited to 8 bits of depth per color channel. Internally we keep everything in full 32-bit precision (`vec4<f32>`) inside passes and 16-bit between passes until the image is ready to be displayed, at which point the GPU implicitly converts our `vec4<f32>` into a single 32-bit value per pixel, with each channel (rgba) getting 8 of those 32 bits.

### The Problem

8 bits of color depth is simply not enough precision to make each step invisible - we only have 256 values per channel! Human vision can perceive steps in luma to about 14 bits of precision. When drawing a very slight gradient, the transition between steps becomes visible because neighboring pixels all jump to the next "step" of precision at the same time.

### The Solution

One solution is to simply output in HDR - more bits of color data means the transition between bands will become smaller. However, not everyone has hardware that supports 10+ bit color depth. Additionally, 10-bit color doesn't even fully solve the issue: shallow gradients will still show coherent bands, but the steps will be harder to perceive.

The solution in this PR adds noise to the signal before it is "quantized" or resampled from 32 to 8 bits. Done naively, it's easy to add unneeded noise to the image. To ensure dithering is correct and absolutely minimal, noise is added *within* one step of the output color depth. When converting from the 32-bit to the 8-bit signal, the value is rounded to the nearest 8-bit value (0 - 255). Banding occurs around the transition from one value to the next, let's say from 50 to 51. Dithering will never add more than +/-0.5 of one 8-bit step of noise, so the pixels near this transition might round to 50 instead of 51 but will never round more than one step. This means that the output image won't have excess variance:

- In a gradient from 49 to 51, there will be a step between each band at 49, 50, and 51.
- Done correctly, the modified image of this gradient will never have adjacent pixels more than one step (0-255) from each other.
- I.e. when scanning across the gradient you should expect to see:

```
                  |-band-| |-band-| |-band-|
Baseline:         49 49 49 50 50 50 51 51 51
Dithered:         49 50 49 50 50 51 50 51 51
Dithered (wrong): 49 50 51 49 50 51 49 51 50
```

You can see from above how correct dithering "fuzzes" the transition between bands to reduce distinct steps in color, without adding excess noise.

### HDR

The previous section (and this PR) assumes the final output is to an 8-bit texture, however this is not always the case. When Bevy adds HDR support, the dithering code will need to take the per-channel depth into account instead of assuming it to be 0-255. Edit: I talked with Rob about this and it seems like the current solution is okay. We may need to revisit once we have actual HDR final image output.

---

## Changelog

### Added

- All pipelines now support deband dithering. This is enabled by default in 3D, and can be toggled in the `Tonemapping` component in camera bundles. Banding is a graphical artifact created when the rendered image is crunched from high precision (f32 per color channel) down to the final output (u8 per channel in SDR). This results in subtle gradients becoming blocky due to the reduced color precision. Deband dithering applies a small amount of noise to the signal before it is "crunched", which breaks up the hard edges of blocks (bands) of color. Note that this does not add excess noise to the image, as the amount of noise is less than a single step of a color channel - just enough to break up the transition between color blocks in a gradient.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>
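
The rounding argument in the description above can be checked on the CPU with a small sketch. It quantizes a shallow gradient from level 49 to level 51, with and without noise of at most half a step. The noise source here is a hypothetical per-pixel hash chosen for brevity, not the noise function from the PR, and all function names are assumptions.

```rust
/// Quantize a value in [0, 1] to an 8-bit level, adding `noise`
/// (in the same [0, 1] units) before rounding.
fn quantize_u8(value: f32, noise: f32) -> u8 {
    ((value + noise) * 255.0).round().clamp(0.0, 255.0) as u8
}

/// Hypothetical stand-in noise: a multiplicative hash of the pixel index,
/// mapped to [-0.5, 0.5) of one 8-bit step. Not the PR's noise function.
fn noise_for_pixel(x: u32) -> f32 {
    let hash = x.wrapping_mul(2_654_435_761) >> 8; // keep the top 24 bits
    let unit = hash as f32 / 16_777_216.0; // now in [0, 1)
    (unit - 0.5) / 255.0
}

/// Render a horizontal gradient between two 8-bit levels (`width` >= 2).
fn gradient(width: u32, from: f32, to: f32, dither: bool) -> Vec<u8> {
    (0..width)
        .map(|x| {
            let t = x as f32 / (width - 1) as f32;
            let value = (from + (to - from) * t) / 255.0;
            let noise = if dither { noise_for_pixel(x) } else { 0.0 };
            quantize_u8(value, noise)
        })
        .collect()
}

/// Count positions where neighboring pixels have different levels.
fn transitions(pixels: &[u8]) -> usize {
    pixels.windows(2).filter(|w| w[0] != w[1]).count()
}
```

Comparing `gradient(512, 49.0, 51.0, false)` with `gradient(512, 49.0, 51.0, true)` shows the two properties the description claims: the undithered gradient has only the two hard band edges, while the dithered one has many more transitions, yet no dithered pixel ends up more than one level away from its undithered value.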

|

Pull request successfully merged into main. Build succeeded:

|

